https://www.techpowerup.com/249557/battlefield-v-with-rtx-initial-tests-performance-halved

So it seems RTX is nowhere near ready for prime time (but hey, gotta start from the bottom). Atm AMD is better suited to making cards that focus on raster performance; they might even surpass Nvidia in that department since they will have more transistors to play with.

I think it's actually fairly decent, as it highlights really well that yes, RTX carries a heavy performance penalty. There are a few things to keep in mind though:

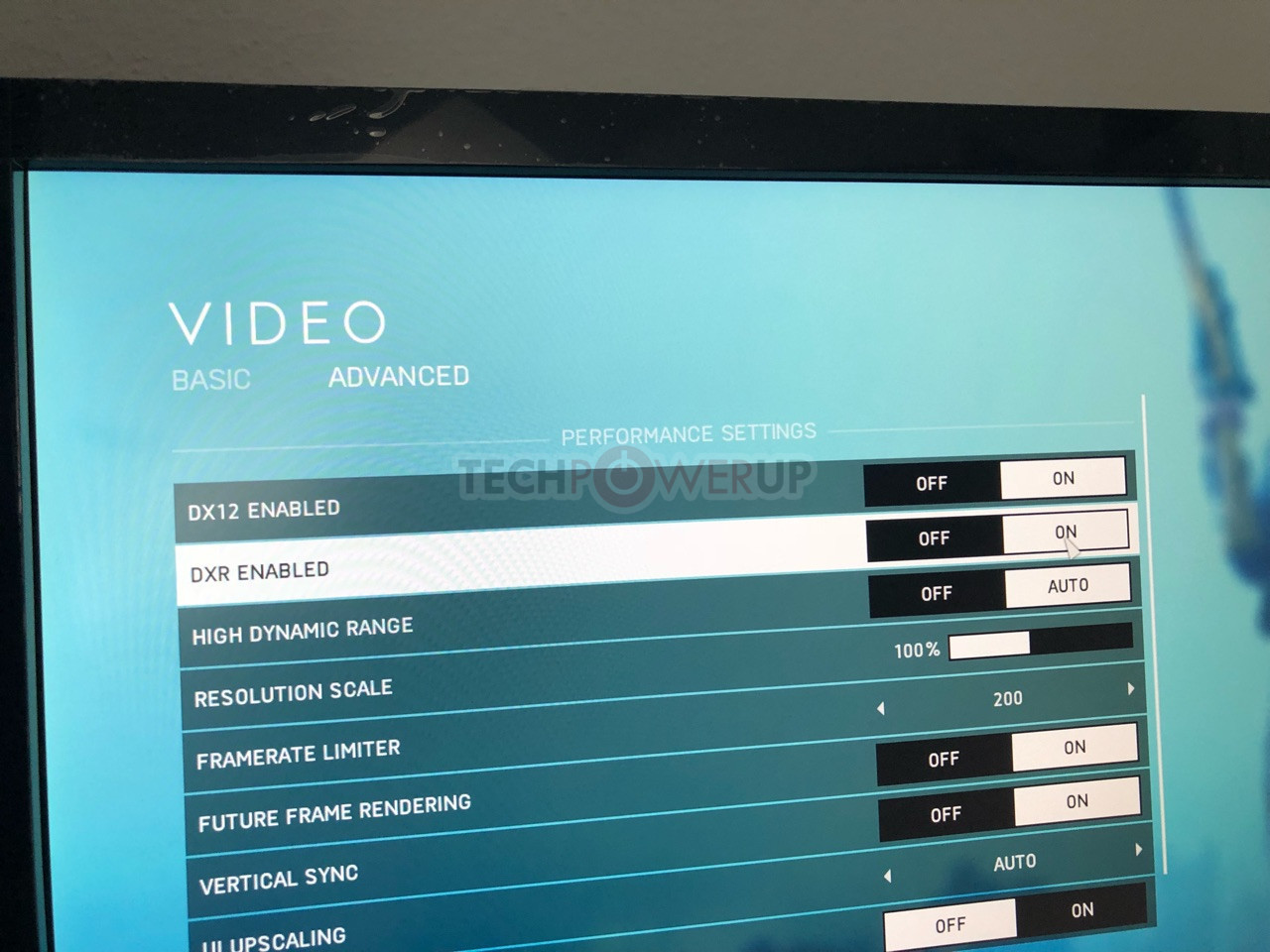

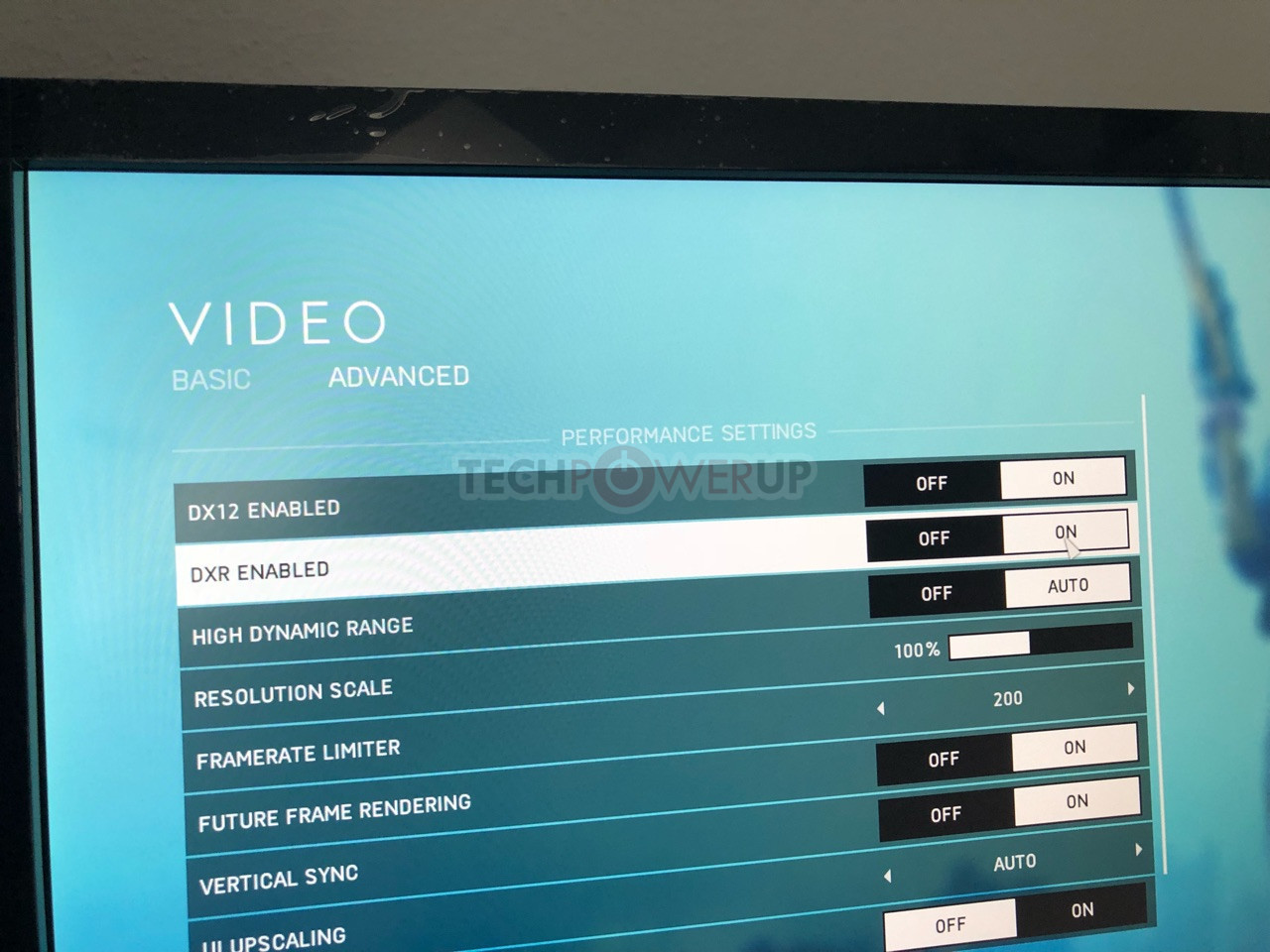

- This is early code.

- This is on Frostbite, so on other implementations, the penalty may vary.

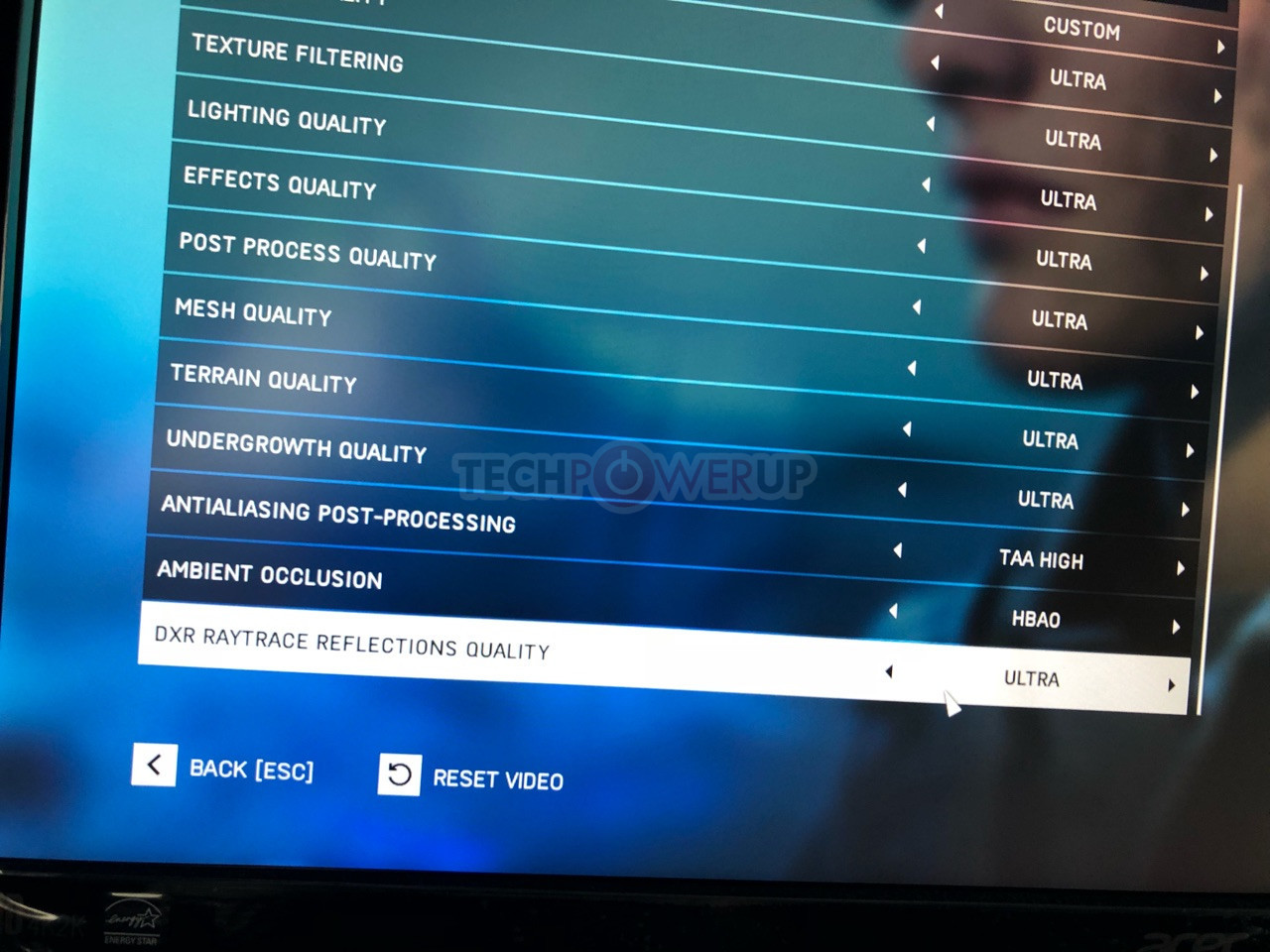

- Raytracing support may differ per engine. Atomic Heart has far more usage of raytracing implemented but is also a late 2019 game. I think that game is going to be the true test for these cards.

- DLSS was not employed, it seems. So hypothetically performance can rise on higher resolutions and higher RTX settings if DLSS is employed (and supported).

Even though it's early days, it's actually fairly decent, especially for a generation zero (no pun intended) product.

Remember that the only thing coming close to hybrid raytracing silicon prior to this was Imagination's PowerVR Wizard, and that was just a few years ago.

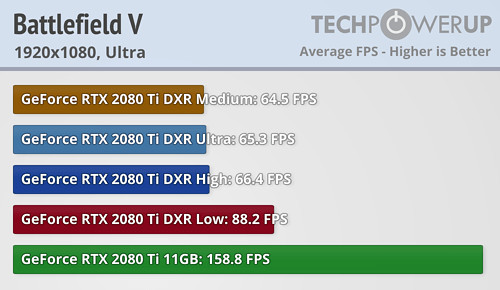

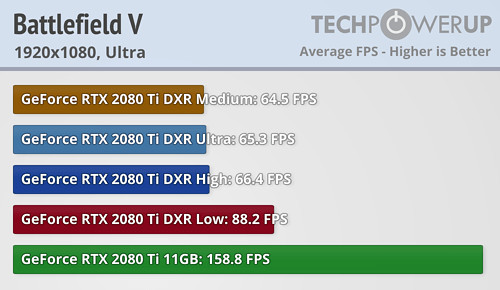

It can't be overstated how impressive this genuinely is, even when it's a 50-65% performance deficit (meaning multiple ms per frame are spent doing RT) and at 1080p resolution.
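To put the "multiple ms" remark in numbers, here is a minimal sketch that converts a frame-rate deficit into extra frame time. The 150 fps baseline is a hypothetical figure picked for illustration, not a number from the linked benchmark, and the function names are my own.

```python
def frame_time_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


def rt_cost_ms(baseline_fps: float, deficit: float) -> float:
    """Extra milliseconds per frame implied by a fractional fps deficit.

    deficit is the fraction of frame rate lost, e.g. 0.5 for a 50% drop.
    """
    rt_fps = baseline_fps * (1.0 - deficit)
    return frame_time_ms(rt_fps) - frame_time_ms(baseline_fps)


# Hypothetical baseline with RTX off (assumption, not a measured value).
BASELINE_FPS = 150.0

for deficit in (0.50, 0.65):
    extra = rt_cost_ms(BASELINE_FPS, deficit)
    print(f"{deficit:.0%} deficit: +{extra:.1f} ms per frame")
```

Note that the same percentage deficit costs more milliseconds the lower the baseline frame rate is, which is why the penalty hurts more at higher resolutions.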

I mean, it's over 60fps which is good... but that's at 1080p. They need to do this test with 1440p and 4K monitors.

Why should they? AFAIK BFV does not employ DLSS, so higher resolutions will (naturally) see it drop below 60 on anything above RTX Low. 1440p could be achievable with RTX Low and 60 fps, but 4K60 is an absolute pipe dream.

It's overkill to expect that out of gen 0 hardware imo.
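A rough way to see why 4K60 looks out of reach: compare pixel counts. The sketch below assumes frame time scales linearly with the number of pixels, which is a pessimistic simplification (real games usually scale somewhat better), and asks what 1080p frame rate a card would need in order to hold a target frame rate at a higher resolution. The names are illustrative only.

```python
# Common resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Total pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def required_1080p_fps(target_res: str, target_fps: float) -> float:
    """1080p frame rate needed to reach target_fps at target_res.

    Assumption: frame time grows linearly with pixel count.
    """
    ratio = pixel_count(target_res) / pixel_count("1080p")
    return target_fps * ratio


for res in ("1440p", "4K"):
    needed = required_1080p_fps(res, 60.0)
    print(f"{res} at 60 fps needs roughly {needed:.0f} fps at 1080p")
```

Under that assumption 4K has four times the pixels of 1080p, so the 1080p result would have to be about four times the target.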

The hw is newborn; I'd say we are 5 arch releases away from 4K 60fps. I don't think software optimization will get much more performance out of current hw.

Still, 1080p at 60fps for raytracing is mighty good.

See the quote to DeepEnigma below, but I think RTX Low and Medium are the first steps to tackle. RTX Low is a heavy penalty but does keep it over 80 fps, so there is potential for that setting on higher resolutions, and that is acceptable imo. Medium, High and Ultra all show similar performance in BFV, which seems to be an issue, but Medium is the first one to tackle.

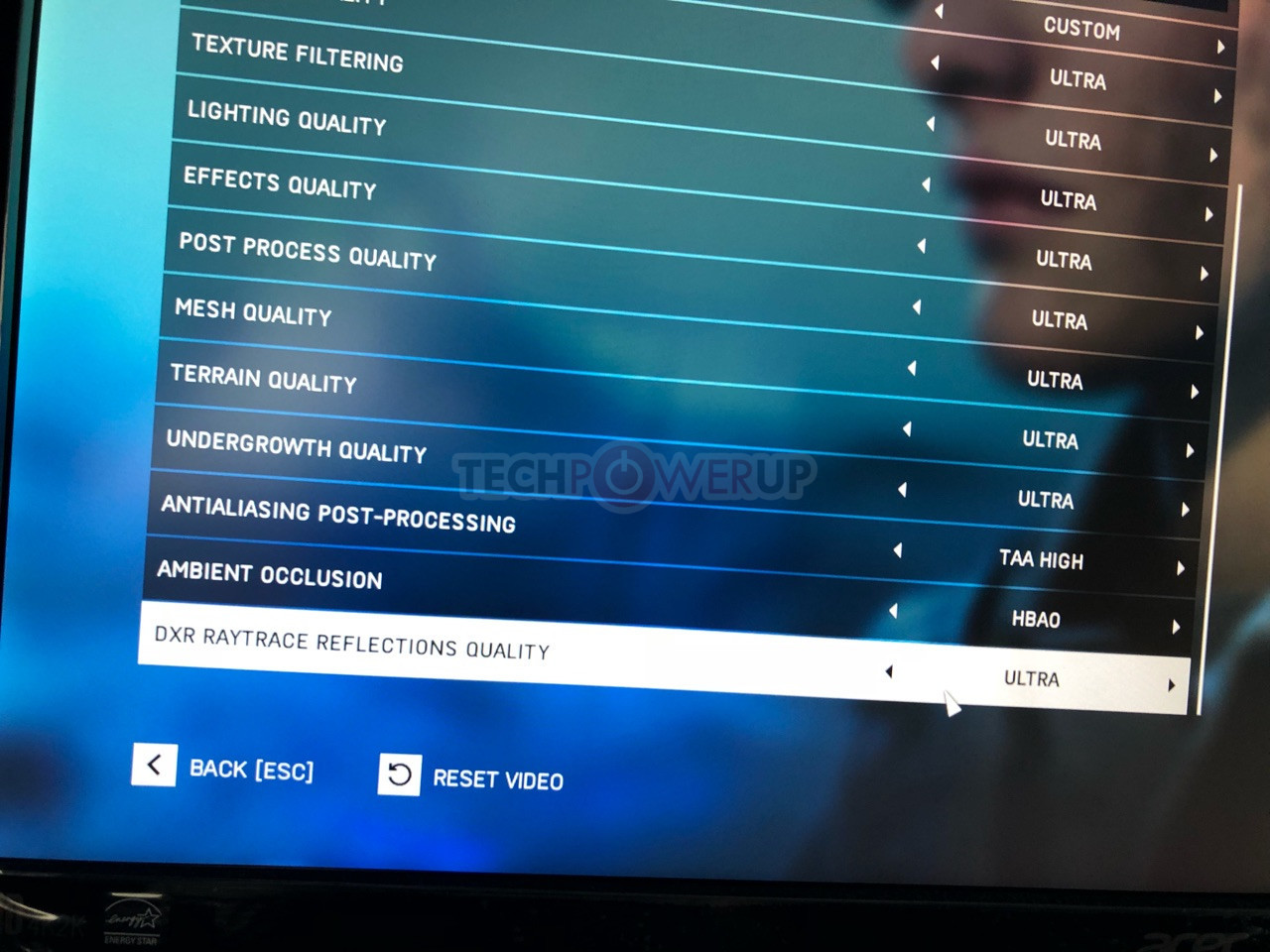

Honestly, DF should take a look at what the differences between the various settings are: What is the sample size? How many rays are traced? And what is performance with a regular GTX 1080 Ti on DXR Fallback (if this is supported by the game)?

All kinds of questions that we need answered to establish a metric of potential for RTX, imo.

Why does medium have a lower frame-rate than Ultra?

Early code. It's already strange that Medium, High and Ultra deliver similar framerates, which means either it's a bug or there is hardly any performance difference between the three (probably the former). Low gives you a 20-30 fps increase compared to Medium, so that one is actually interesting: still a big performance loss, but one that could do well on lower-spec RTX cards and might be interesting for higher resolutions as well. 1440p Medium RTX and 4K Low RTX are the first boundaries that we can overtake within a few gens of cards, so to speak. I'd say those are the most realistic RTX settings at those resolutions for now and the coming 1 to 2 years.

RTX seems cool, but these cards always seemed like early adopter cards. Barely any improvement over the previous series (I have a 1080 Ti and nothing I've seen in terms of performance makes me want to upgrade), plus a feature barely anything makes use of, tells me that I should wait for the next gen before jumping in.

It is silly to consider an upgrade over a GTX 1080 Ti; even DF says that for the moment, that's a useless upgrade. But when it comes to future-proofing on tech, the RTX cards are an interesting prospect.