Kumomeme


Also worth adding that the PS5 might have an advantage with its API. Sony's console-specific API can surely squeeze more out of the hardware than the XSX's GDK, since the GDK is designed to support more than one SKU (the XSS, plus the wide range of Windows 10 PC specs out there).

I should probably not even respond to this thread, but here we go. Some posts here will not age well.

Focusing on theoretical peak TFLOPs to measure how good the graphics on these two consoles will be is misguided at best.

The XSX and the PS5 are ridiculously close to each other in terms of hardware rendering specifications.

Having said that, the I/O of the PS5 has a significant advantage over the XSX, and in games that make use of that I/O this will result in more textures, and higher-resolution ones.
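To put the I/O gap in rough numbers, here is a back-of-envelope sketch using the announced raw (uncompressed) SSD read speeds: 5.5 GB/s for the PS5 and 2.4 GB/s for the XSX. The 4 GB texture budget is a made-up example, and hardware decompression (which both consoles have) is deliberately left out, so treat this as an illustration of the ratio rather than a benchmark.

```python
# Rough sketch: how long each console's SSD needs to stream a given amount
# of texture data, using the announced *raw* (uncompressed) read speeds.
# Compression, file layout and engine behaviour are not modelled here.

RAW_SSD_GB_PER_S = {
    "PS5": 5.5,  # announced raw read speed, GB/s
    "XSX": 2.4,  # announced raw read speed, GB/s
}


def stream_time_s(data_gb: float, console: str) -> float:
    """Seconds to read `data_gb` gigabytes at the console's raw SSD speed."""
    return data_gb / RAW_SSD_GB_PER_S[console]


if __name__ == "__main__":
    budget_gb = 4.0  # hypothetical amount of texture data to stream in
    for name in RAW_SSD_GB_PER_S:
        print(f"{name}: {stream_time_s(budget_gb, name):.2f} s for {budget_gb} GB")
    ratio = RAW_SSD_GB_PER_S["PS5"] / RAW_SSD_GB_PER_S["XSX"]
    print(f"PS5 raw I/O advantage: {ratio:.2f}x")
```

On raw numbers alone the PS5 reads roughly 2.3x as much data in the same time, which is the headroom that would go into more and sharper textures.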

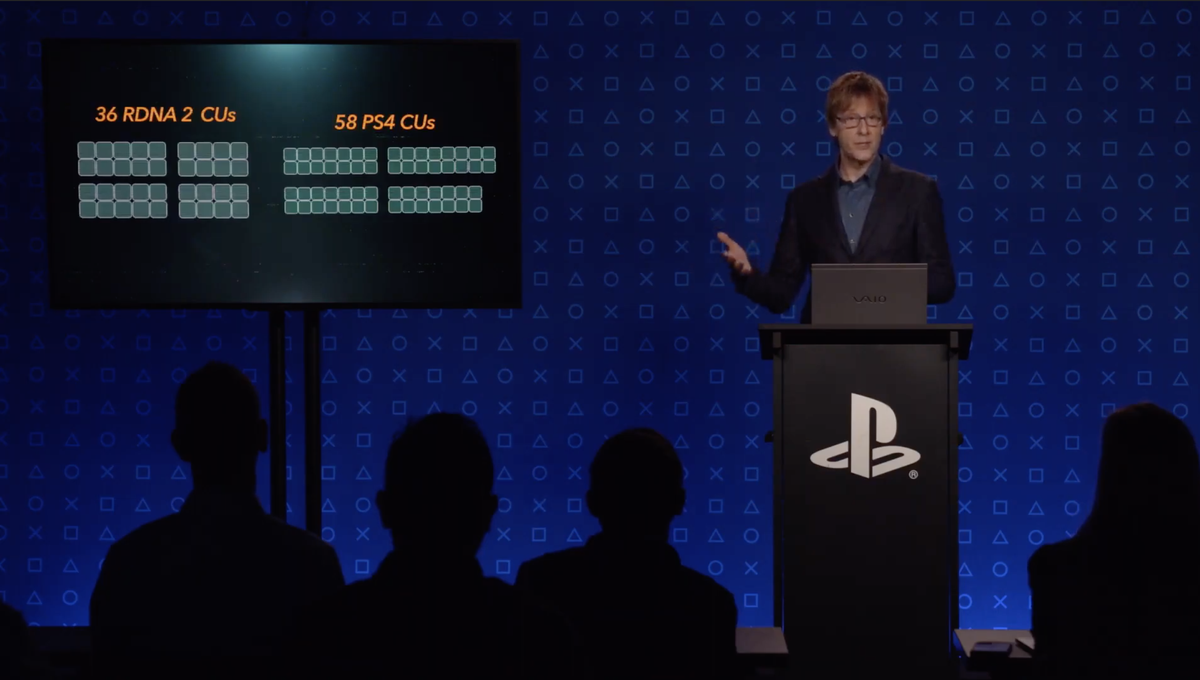

As for the GPU itself, the XSX has a theoretical peak TFLOP advantage over the PS5. However, the PS5 has a pixel fill rate advantage and a clock frequency advantage. If the PS5 also has a significant cache advantage (which I believe it does, and we should know within the next few weeks), I even think the PS5 has a real-world TFLOP advantage, thanks to higher CU utilisation and higher practical memory bandwidth (due to the size of the cache and its bandwidth to the CUs).
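The fill rate point follows from a simple formula: with the same number of ROPs, the higher-clocked GPU pushes more pixels per second. The sketch below assumes 64 ROPs on each GPU (as widely reported, not stated in this post) and uses the announced clocks: up to 2.23 GHz for the PS5 (a variable maximum) and a fixed 1.825 GHz for the XSX. It gives a theoretical peak, not a measured number.

```python
# Back-of-envelope peak pixel fill rate: ROPs x GPU clock.
# Assumption: both GPUs have 64 ROPs (widely reported). The PS5 clock is its
# *maximum* variable frequency, so its figure is a ceiling, not a sustained rate.

GPUS = {
    # name: (ROPs, clock in GHz)
    "PS5": (64, 2.23),
    "XSX": (64, 1.825),
}


def fill_rate_gpix(rops: int, clock_ghz: float) -> float:
    """Peak pixel fill rate in gigapixels/s, assuming 1 pixel per ROP per clock."""
    return rops * clock_ghz


if __name__ == "__main__":
    rates = {name: fill_rate_gpix(*spec) for name, spec in GPUS.items()}
    for name, rate in rates.items():
        print(f"{name}: {rate:.1f} Gpixel/s")
    print(f"PS5 / XSX: {rates['PS5'] / rates['XSX']:.2f}x")
```

With equal ROP counts the fill rate ratio is simply the clock ratio, about 1.22x in the PS5's favour.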

Net-net, the consoles are really close in power. And if there is a hardware advantage to be had, then even without current benchmarks I am willing to bet the PS5 will come out slightly ahead. That relative difference, though, is close to meaningless when it comes to rendering power. The real difference between the two is I/O, where the PS5 has the advantage, and that will show in some titles.

So don't expect big differences in performance between the two consoles. On paper the GPU TF difference is around 18%, and there are other differences in CPU clock speed, memory bandwidth, etc., but the real-world gap is probably smaller than we expected.
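The "around 18%" figure comes straight from the paper specs. The sketch below uses the announced CU counts and clocks (52 CUs at 1.825 GHz for the XSX, 36 CUs at up to 2.23 GHz for the PS5) with the usual peak-FLOPs formula, plus the announced memory buses (320-bit vs 256-bit GDDR6 at 14 Gbps). The 64-lanes-per-CU and 2-FLOPs-per-FMA figures are the standard assumptions for this kind of estimate; all results are theoretical peaks.

```python
# Paper-spec comparison behind the "around 18%" figure.
# Assumptions: each compute unit has 64 shader lanes, each doing one fused
# multiply-add (2 FLOPs) per clock; the PS5 clock is its maximum variable
# frequency. Memory bandwidth = bus width (bits) x data rate (Gbps) / 8.

def peak_tflops(cus: int, clock_ghz: float) -> float:
    """Theoretical FP32 TFLOPs: CUs x 64 lanes x 2 FLOPs x clock (GHz) / 1000."""
    return cus * 64 * 2 * clock_ghz / 1000


def bandwidth_gb_s(bus_bits: int, gbps: float) -> float:
    """Peak memory bandwidth in GB/s."""
    return bus_bits * gbps / 8


xsx_tf = peak_tflops(52, 1.825)   # ~12.15 TF
ps5_tf = peak_tflops(36, 2.23)    # ~10.28 TF
xsx_bw = bandwidth_gb_s(320, 14)  # 560 GB/s, applies to the XSX's 10 GB "GPU optimal" pool
ps5_bw = bandwidth_gb_s(256, 14)  # 448 GB/s

if __name__ == "__main__":
    print(f"TFLOPs: XSX {xsx_tf:.2f} vs PS5 {ps5_tf:.2f} "
          f"(+{(xsx_tf / ps5_tf - 1) * 100:.0f}% XSX)")
    print(f"Bandwidth: XSX {xsx_bw:.0f} vs PS5 {ps5_bw:.0f} GB/s "
          f"(+{(xsx_bw / ps5_bw - 1) * 100:.0f}% XSX)")
```

These peaks assume every CU is busy every cycle, which is exactly the utilisation question raised earlier in the thread.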
