PaintTinJr


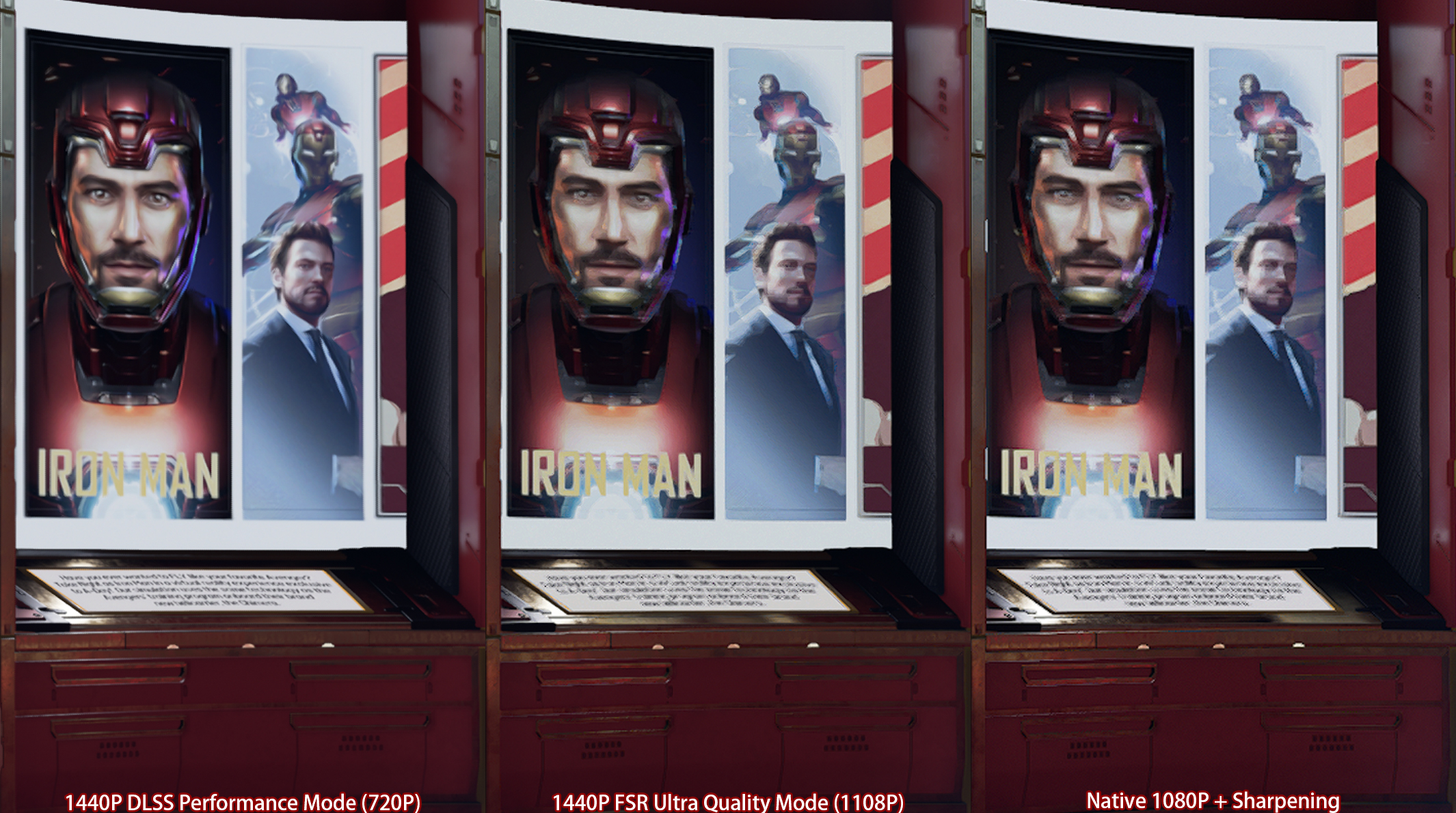

If they haven't bothered to ensure that both images come from identical runs and game states, then the comparison is worthless, and it shows they are more concerned with the perception of being better than with actually doing the legwork to level the playing field and show that the algorithm can handle low-frequency inference perfectly while also improving high-frequency detail.

It also requires a measurement metric. Compared against the native render as a point of reference, and using the things you listed (higher fidelity resembling supersampled rendering, and reduced noise in details) as the metric, the DLSS render is better.

You've chosen something else as the metric. I'd agree that in some cases it's important for the result to pixel-match the native render, but I struggle to imagine the specific cases where that would matter, considering the supposed deviation amounts to a few pixels on a 4K image.
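To make the "pixel-match" metric concrete, here is a minimal Python sketch. It assumes grayscale images stored as lists of rows with values from 0 to 1, and the function names `mean_absolute_error` and `psnr` are illustrative only, not taken from any particular benchmarking tool. It shows why a strict per-pixel comparison is so sensitive to tiny misalignments: offsetting a fine stripe pattern by a single pixel gives the worst possible score even though the content is the same.

```python
import math


def _check_same_shape(test, reference):
    """Raise if the two images (lists of rows) do not have identical dimensions."""
    if len(test) != len(reference) or any(
        len(row_t) != len(row_r) for row_t, row_r in zip(test, reference)
    ):
        raise ValueError("images must have identical dimensions")


def _pixel_pairs(test, reference):
    """Yield (test_pixel, reference_pixel) pairs in row-major order."""
    for row_t, row_r in zip(test, reference):
        yield from zip(row_t, row_r)


def mean_absolute_error(test, reference):
    """Average per-pixel absolute difference; 0.0 means a perfect pixel match."""
    _check_same_shape(test, reference)
    diffs = [abs(t - r) for t, r in _pixel_pairs(test, reference)]
    return sum(diffs) / len(diffs)


def psnr(test, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    _check_same_shape(test, reference)
    squared = [(t - r) ** 2 for t, r in _pixel_pairs(test, reference)]
    mse = sum(squared) / len(squared)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


# Hypothetical example: a fine stripe pattern vs. the same pattern offset by one pixel.
native = [[0.0, 1.0, 0.0, 1.0]]
shifted = [[1.0, 0.0, 1.0, 0.0]]
print(mean_absolute_error(native, shifted))  # 1.0
print(psnr(native, shifted))  # 0.0
```

A metric like this only means something when both frames come from the same moment and camera position; otherwise it mostly measures the misalignment rather than the upscaler.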

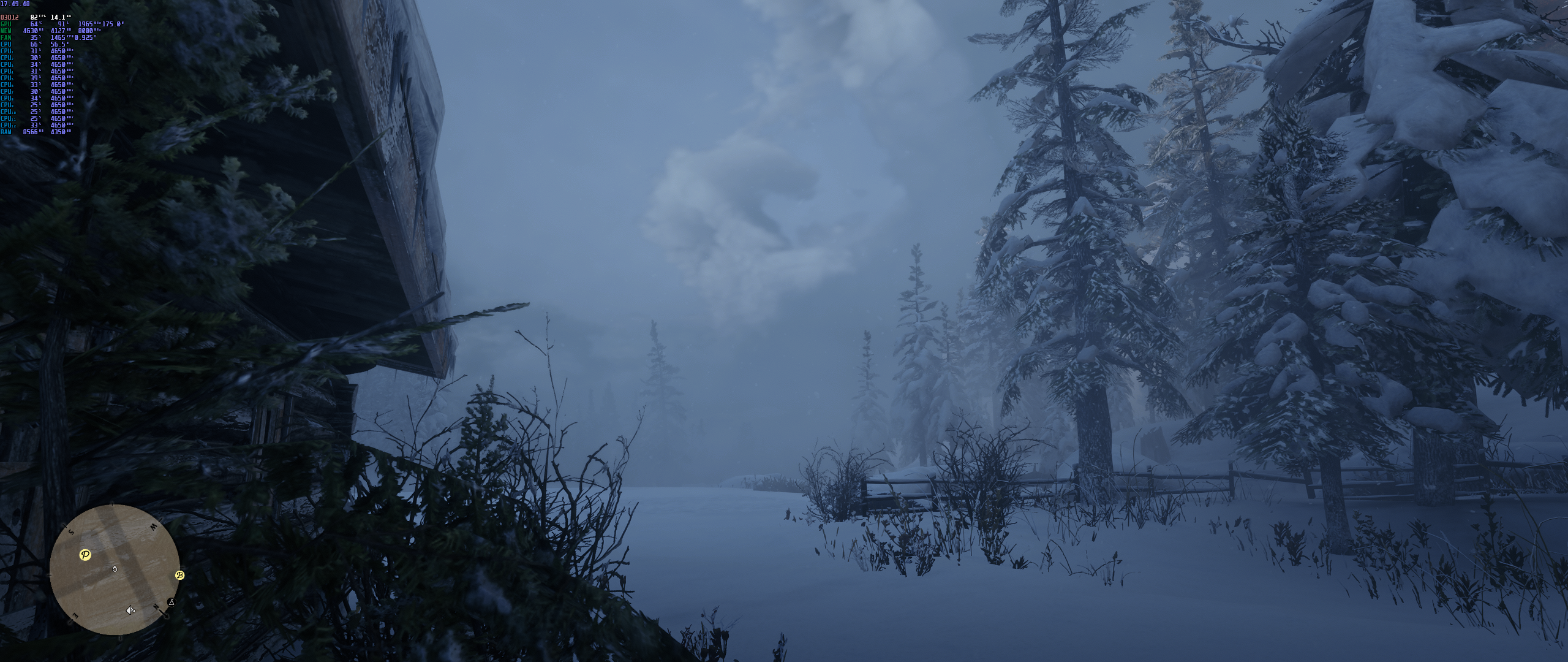

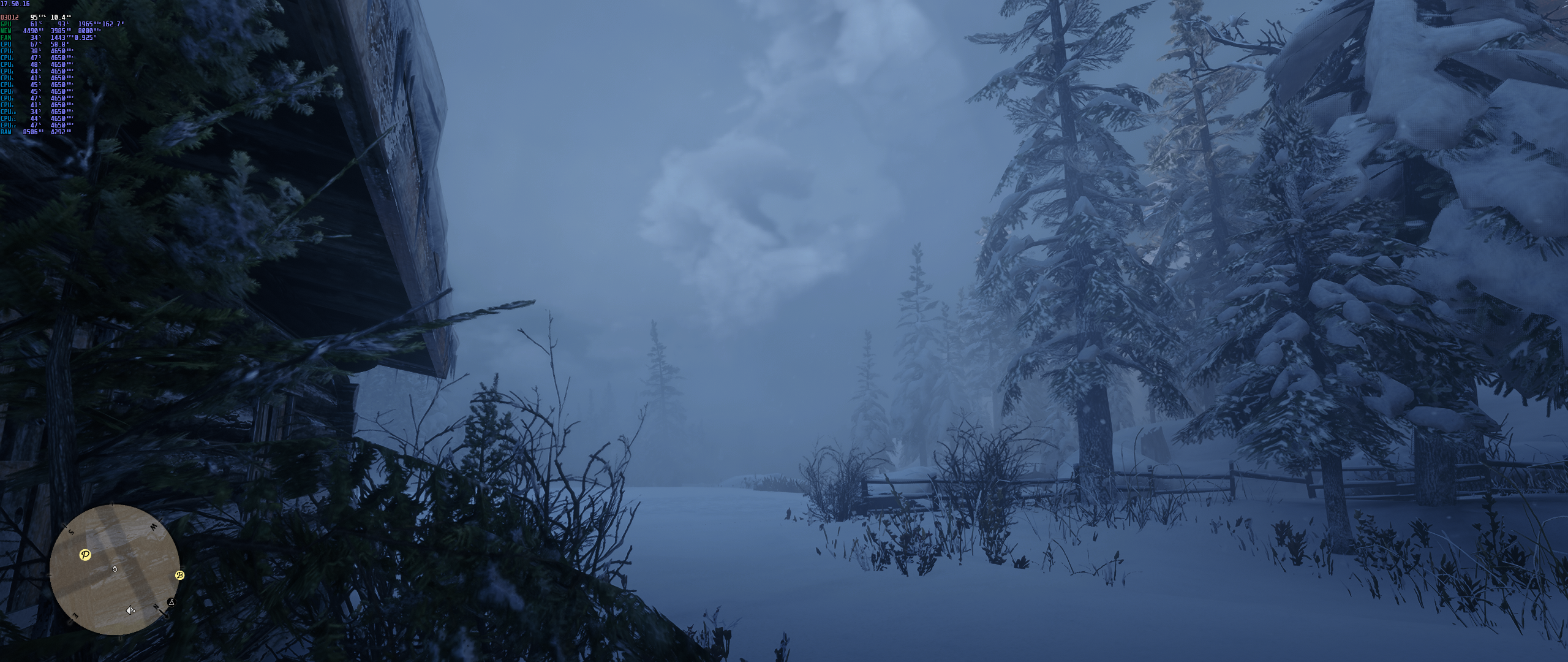

I also can't quite find the examples you're referring to; can you point me to the specific comparison? Since many comparisons come from normal gameplay, and recording the exact same moment with DLSS both on and off is functionally impossible, it's not out of the question for the comparison shots to differ slightly in timing and scene composition.

In signal-processing terms, low-frequency detail is the base signal (and the more important part), so IMO errors in it matter more. Images are sampled and built up from low to high frequencies in an additive way, with the highest details, the ones that can't be perceived, always left unsampled.
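The low-to-high additive picture can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: a single row of pixel intensities stands in for an image, a box blur (the hypothetical helper `box_blur`) stands in for a proper low-pass filter, and the high band is simply the residual, so low plus high reconstructs the original (up to floating-point rounding). Measuring the error separately in each band then shows which kind of error a reconstruction is making.

```python
def box_blur(signal, radius=1):
    """Low-pass filter: average each sample with its neighbours, clamping at the edges."""
    n = len(signal)
    out = []
    for i in range(n):
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - radius, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def split_bands(signal, radius=1):
    """Split a signal into a low band (blurred) and a high band (the residual)."""
    low = box_blur(signal, radius)
    high = [s - l for s, l in zip(signal, low)]
    return low, high


def band_errors(test, reference, radius=1):
    """Mean absolute error measured separately in the low and high bands."""
    t_low, t_high = split_bands(test, radius)
    r_low, r_high = split_bands(reference, radius)

    def mae(a, b):
        return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

    return mae(t_low, r_low), mae(t_high, r_high)


# A row of pixel intensities containing one bright edge.
reference = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0]
# The same row with a uniform brightness offset: a purely low-frequency error.
offset = [v + 0.2 for v in reference]
low_err, high_err = band_errors(offset, reference)
print(round(low_err, 6), round(high_err, 6))  # 0.2 0.0
```

A uniform brightness shift lands entirely in the low band. A Gaussian or Laplacian pyramid would separate the bands more cleanly than a box blur, but the additive structure (base signal plus progressively finer residuals) is the same.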

IMO the speaker shows that none of the results is perfect. DLSS is biased toward fixing the high-frequency detail at the expense of the low-frequency detail, which the mesh ends up occluding, while the other two give high- and low-frequency detail equal importance. But even at native, the resolution is too low to sample the speaker mesh correctly, which leaves both meshes looking like a rendering artefact.