Yep!

http://static.giantbomb.com/uploads/original/9/98500/2440294-0433203838-proxy.jpg[IMG]

And do you remember this ----> [URL="http://www.neogaf.com/forum/showthread.php?t=511170"]"Victory will go not to those who make the most noise, but those who make the most impact".[/URL]

I'm [U][B]still[/B][/U] laughing.....[/QUOTE]

Some golden posts in that thread:

[QUOTE]MS knows how to make a splash. They possess a marketing squad that actually knows what they're doing :P[/QUOTE]

-

EDGE: "Power struggle: the real differences between PS4 and Xbox One performance"

- Thread starter Musiol

btw i hope fyou arent fans of 1080p on xone, wehn hw is out in wild you wil se wat I meant about isues. puclocks too. Risk evwyerhwere. this palce is ntus. 2014 was beter pln.

Referencing previous upclocks, risks, and a development environment being nuts seems to suggest something other than just not running a game at native 1080p. Any chance of an addendum (or "naddemndum")?

Can someone explain who cboat is?

Insider with some connection to Microsoft. Been around forever, writes his info in a way that makes everything more cryptic to hopefully protect his identity. He's had some misses as of late, and he's accused of providing "leaks" that can't be positively or negatively confirmed after-the-fact, but he's been a useful source of info for years. Trusted and revered by most.

AstroNut325

Member

What I got from this thread is that Xbox One is much more powerful than PS4 and Sony should go cry in a corner somewhere.

Finally. The summary I was waiting for! Thank you!

Oh and we're just around 30 days from the first DF comparisons. What's the over/under on the ban count at GAF? 200/600?

Muppet of a Man

Member

Can we just imagine Xbox one has roughly the same effective bandwidth - if programmed carefully - as PS4? Then we can move the discussion on to the more concrete areas like 50% more CUs, 100% more ROPs etc. I'd be interested to know how (assuming similar effective bandwidth), each system would be able to utilise and keep fed those other elements

This argument is hard to frame because, even if in limited instances the effective bandwidth is similar, the ps4 effectively has a true hUMA setup while x1 does not. True hUMA will minimize copy overhead between the cpu and gpu, shrinking the need for additional bandwidth in the first place.

I know, but I just wanted to move on from the memory argument, to show that - even if that gap was reduced - there are still significant advantages in PS4's setup

I just wrote a post regarding why the Xbox One was sub-1080p due to the 32MB ESRAM in another thread and thought it would be useful in here, as there was some discussion regarding why XB1 would be stuck lower than 1080p and what CBOAT could have meant:

It's techy but I'm sure some will enjoy the read.

I saw similar maths over and over again about why the 360 eDRAM was too small and wouldn't allow 720p..

As we know, devs used various techniques (tiling, etc) to work with it rather than against it..

So, whilst I agree with your maths, I can't see what's different this gen. They keep saying they've designed the ESRAM to be more flexible, and to have added many features to allow more flexibility with the ESRAM.

It'd be great to get a real insider's viewpoint, someone who is actually working on XB1.. Is the issue that the MS tools are just immature and devs just don't have anything to help them leverage all the alleged improvements? Or is it that the sacrificing of CUs for ESRAM is way more of a drag than initially thought?

The last thing I would have plausibly gone for is the ESRAM is too small for 1080p..

Just IMO of course.. end of the day, it's not like any of this is going to bring the XB1 that much closer to PS4, but in the interests of getting to the bottom of things..

Septimus Prime

Member

What I got from this thread is that Xbox One is much more powerful than PS4 and Sony should go cry in a corner somewhere.

So, to use an analogy here, we have Shaq and Mugsy Bogues. On paper, Shaq is roughly 50% taller, but in reality, Bogues could probably be just as tall. He holds back some height to be released later.

Think about it.

Well, rendering techniques were different in 2005, so 720p would fit in eDRAM using those techniques but not using deferred rendering. Also, there are tons of sub-720p games on Xbox 360. And as you have pointed out yourself, there are more things you can do with ESRAM, which also require space; some of these need to be done in ESRAM due to the poor bandwidth from main memory (something the 360 didn't suffer from).

EDIT: to clarify, the eDRAM in the 360 had a connection to the GPU of similar bandwidth to the connection between main memory and the GPU; in the XB1 this is not the case.

So, to use an analogy here, we have Shaq and Mugsy Bogues. On paper, Shaq is roughly 50% taller, but in reality, Bogues could probably be just as tall. He holds back some height to be released later.

Think about it.

You must've missed the recent CBOAT thread...

Kazuma Kiryu

Member

Some golden posts in that thread:

This year has been awesome. Seriously, it's been crazy, from all 3 camps.

geordiemp

Member

Finally. The summary I was waiting for! Thank you!

Oh and we're just around 30 days from the first DF comparisons. What's the over/under on the ban count at GAF? 200/600?

I am 100 % sure it was humour - made me laugh anyway.

Good info, it could go very well in this thread - http://neogaf.com/forum/showthread.php?t=674333

I just wrote a post regarding why the Xbox One was sub-1080p due to the 32MB ESRAM in another thread and thought it would be useful in here, as there was some discussion regarding why XB1 would be stuck lower than 1080p and what CBOAT could have meant:

It's techy but I'm sure some will enjoy the read.

Well, rendering techniques were different in 2005, so 720p would fit in eDRAM using those techniques but not using deferred rendering. Also, there are tons of sub-720p games on Xbox 360. And as you have pointed out yourself, there are more things you can do with ESRAM, which also require space; some of these need to be done in ESRAM due to the poor bandwidth from main memory (something the 360 didn't suffer from).

?? A 720p framebuffer with 2xMSAA doesn't even fit in 10MB (just did some quick googling), and there are tons of sub-720p games on PS3 that had a massive framebuffer in comparison..

Not to mention there were 720p games using tiled deferred rendering on the 360 (Crysis 2/ Trials HD? etc). I presume that tiling was something foremost in MS' thinking when evolving the architecture..

I still don't see how not fitting the entire frame buffer is the main reason for sub-1080p, but I'm no expert. I would have thought the lack of CUs and poor tools were the primary candidates, unless MS fucked up so badly you can't do tiled rendering due to insufficient bandwidth despite it being a current 360 development technique..

[edit] I have found this, http://neogaf.com/forum/showpost.php?p=81325693&postcount=454

Not sure what he is implying: that crappy APIs are not utilising ESRAM very well (as in, they may in the future), or that the manual fill/flush is a hardware limitation the APIs will never be able to overcome.. in which case, I am happy to be wrong and it would seem MS have fucked up royally..

This is anecdotal from E3, but...

I've heard the architecture with the ESRAM is actually a major hurdle in development because you need to manually fill and flush it.

So unless MS's APIs have improved to the point that this is essentially automatic, the bandwidth and hardware speed are probably irrelevant.

For reference, the story going around E3 went something like this:

"ATVI was doing the CoD: Ghosts port to nextgen. It took three weeks for PS4 and came out at 90 FPS unoptimized, and four months on Xbone and came out at 15 FPS."

KoopaTheCasual

Junior Member

Favorite analogy of the thread.

So, to use an analogy here, we have Shaq and Mugsy Bogues. On paper, Shaq is roughly 50% taller, but in reality, Bogues could probably be just as tall. He holds back some height to be released later.

Think about it.

You must've missed the recent CBOAT thread...

You must not know who Mugsy Bogues is and why Septimus's comment is exceedingly sarcastic.

Takes a special kind of talent to summarise a 159 page thread so succinctly, thank you very much.

Finally. The summary I was waiting for! Thank you!

Oh and we're just around 30 days from the first DF comparisons. What's the over/under on the ban count at GAF? 200/600?

Sword Of Doom

Member

This argument is hard to frame because, even if in limited instances the effective bandwidth is similar, the ps4 effectively has a true hUMA setup while x1 does not. True hUMA will minimize copy overhead between the cpu and gpu, shrinking the need for additional bandwidth in the first place.

How much of an effect does hUMA have? How does the efficiency translate into frame rates?

You must not know who Mugsy Bogues is and why Septimus's comment is exceedingly sarcastic.

I do know who he is. I guess I just didn't detect the sarcasm.

SHADES

Member

And I remember Major Nelson passing off that Sony event as nothing, posting pictures of MS guys watching it and suggesting that there was popcorn (iirc). The messaging from MS on that event made it seem like it didn't affect them at all. It made me interested in seeing what they had up their sleeves because of that, then I see TV TV Sports TV.

I remember thinking at the time, when Larry said "well that's one way to announce a console, by not showing it!", props to Larry, and that behind his smugness MS had something just as excellent up their sleeves. Then they go and announce a console that came across more akin to a set top box, and that was game over for me (revealing a console that was actually not a console first and foremost). Yeah Larry, that's the way to do it :-s

I just wrote a post regarding why the Xbox One was sub-1080p due to the 32MB ESRAM in another thread and thought it would be useful in here, as there was some discussion regarding why XB1 would be stuck lower than 1080p and what CBOAT could have meant:

It's techy but I'm sure some will enjoy the read.

Great post, Skeff. Didn't realize that I never commented on it yesterday.

Quick question: There have been talks about the front buffer being further split into 3 independent display planes (2 for the game and one for the system UI) that are overlaid on top of each other. What I read is that each of these planes can have its own resolution and frame rate. Would your calculations of the memory requirements for the front buffer increase as a result of this? My guess is, they would. Thoughts?

Probably not the best reference to post here, but this site explained it best for me

http://www.vgleaks.com/durango-display-planes/

SHADES

Member

This just in. According to misterxmedia's insider, Xbox One has an additional 4 old CU's to reserve the 10% GPU for the Kinect so its TFLOPs is still unchanged....

LOLOLOLOLOLOLOL!

Oh gosh. I know it's bad to bring him up, but every time bad news about Xbox One gets reported he undoubtedly has an explanation for it. Now with Cboat's leaks, I don't know what to say anymore.

Would be nice if you could necro the thread to make those people saying the MS PR knows what they're doing eat crow. I'm a junior though, so it wouldn't be nice.

Lol, last week he was claiming a 3-4Tflop machine

lyrick

Member

How much of an effect does hUMA have? How does the efficiency translate into frame rates?

Instead of having to transfer entire data structures from one memory allocation to another, only a pointer is transferred, so the savings in terms of efficiency are huge. Realistically, though, it only applies to processes which share memory resources, which is a very small quantity of resources. The Guerrilla Games Killzone slides had less than 3% (only 128MB) of memory usage shared between the CPU & GPU.

It's not too unrealistic to expect very small performance increases due to HSA (hUMA) tech.
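To make the "only a pointer is transferred" point concrete, here is a loose analogy in plain Python. It is not console code and says nothing about how either console's APIs actually work; the function names `hand_off_by_copy` and `hand_off_by_reference` are made up for illustration. It only contrasts duplicating a buffer with handing over a reference to the same memory.

```python
def hand_off_by_copy(buf):
    """Simulates the non-shared case: the consumer gets its own duplicate."""
    return bytes(buf)  # allocates and copies every byte


def hand_off_by_reference(buf):
    """Simulates the shared case: the consumer just gets a view of the same memory."""
    return memoryview(buf)  # no bytes are copied


# A small stand-in for a data structure that both sides want to read.
data = bytearray(16)

copied = hand_off_by_copy(data)
shared = hand_off_by_reference(data)

# The producer updates the buffer after handing it off.
data[0] = 255

print("copy sees:", copied[0])    # the copy is stale and would need re-copying
print("shared sees:", shared[0])  # the shared view sees the update immediately
```

The cost of the copy grows with the size of the buffer, while the reference costs the same regardless, which is why the saving only matters for the portion of memory that is actually shared.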

qa_engineer

Member

The thing is that "looking amazing" is a moving target. Uncharted looked amazing...but not so much when compared to Uncharted 2. My old 42" 1024x768 TV looked amazing...but not so much when I upgraded to a 60" 1080p one.

Contrary to what many people say, wanting better hardware to enable better graphics isn't some tangential pursuit. It is inextricably part of the desire for better games. There will be good-looking games everywhere; there can be great games on weak hardware; fun isn't driven by pixel counts or AA levels; many will be satisfied with lesser graphics, and more still will tolerate them--to all of that, let's accede.

But settling for more constrictive parameters and a more limited goal from the beginning is defeatist. This is my entertainment, which (when good) I enjoy deeply. Story, gameplay, fun, graphics--I want it all! And since many others do too, you shouldn't be surprised or dismissive when they're passionately disappointed by underachievement.

This is the sentiment many are trying to argue, explain, whatever you want to call it. Well said.

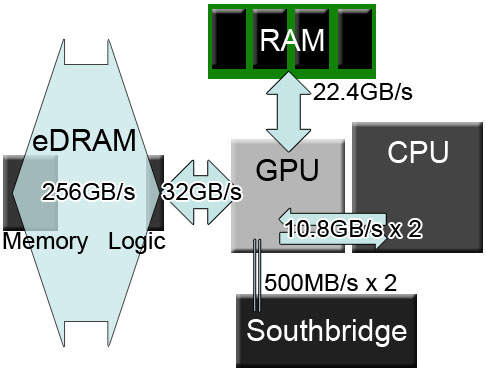

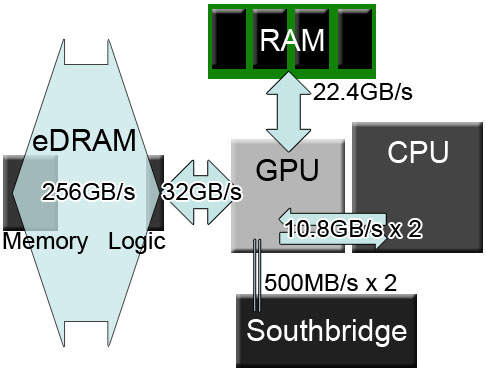

Well, no, it's not just ESRAM that means a lack of 1080p; it is also the power of the console's GPU. But this is a significant factor and would help determine where a developer would scale back. Regarding fitting it into 10MB: if we look back at my previous post and just throw 720p numbers into it, we get:

Back Buffer:

1280x720 [Resolution] * 32 [Bits Per Pixel] * 2[FSAA Depth]

= 58982400 bits = 7MB

Depth Buffer:

1280x720 [Resolution] * 32 [24Bit Z, 8Bit Stencil] * 2 [FSAA Depth]

= 58982400 bits = 7MB

Front Buffer:

1280x720 [Resolution] * 32 [Bits Per Pixel]

= 29491200 bits = 3.5MB
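The three products above can be checked with a few lines of Python. This is only a sketch of the same back-of-envelope sum: the helper names `buffer_mib` and `frame_total_mib` are made up for illustration, every buffer is assumed to be 32 bits per pixel as in the post, and 1MB is taken as 2^20 bytes.

```python
def buffer_mib(width, height, bits_per_pixel=32, samples=1):
    """Size of one render target in MiB (hypothetical helper, not a real API)."""
    bits = width * height * bits_per_pixel * samples
    return bits / 8 / (1024 * 1024)


def frame_total_mib(width, height, aa_samples=2):
    """Back buffer + depth buffer (both multisampled) + resolved front buffer."""
    back = buffer_mib(width, height, samples=aa_samples)
    depth = buffer_mib(width, height, samples=aa_samples)
    front = buffer_mib(width, height)  # front buffer holds one sample per pixel
    return back + depth + front


if __name__ == "__main__":
    print("720p back buffer :", buffer_mib(1280, 720, samples=2), "MiB")
    print("720p front buffer:", buffer_mib(1280, 720), "MiB")
    print("720p total       :", frame_total_mib(1280, 720), "MiB")
```

Calling `frame_total_mib(1920, 1080)` under the same assumptions gives the equivalent 1080p figure to hold up against a 32MB pool.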

For a total of 17.5MB with 2x AA. Of course this doesn't fit in the 10MB eDRAM; however, if we look at the memory setup of the Xbox 360 we get this:

That has a bandwidth of just 32GB/s for eDRAM compared to 22.4GB/s to main RAM. In comparison to the PS3, it has similar bandwidth between GPU and main memory. Transferring data from ESRAM to main memory and back on the XB1 is relatively a much bigger hit to performance than it was on the 360. All three of the buffers at 720p and 2x FSAA fit into eDRAM and can be moved between eDRAM and main memory with less of a performance hit than if the same thing was done on XB1. For instance, on XB1 it would drop the potential bandwidth from 140GB/s down to 68GB/s, a drop of over 50% (and around 60% slower than PS4), compared to the drop on the 360, which would only be from 32 to 22, around 30% (and around the same as PS3).
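The percentages in that comparison are simple ratios, and a rough sketch makes them easy to re-run with other figures. The 140, 68, 32 and 22.4 GB/s values are the ones quoted in the post; the 176 GB/s figure for PS4 is not in the post and is an assumption taken from the commonly quoted spec, and `relative_drop` is a made-up helper name.

```python
def relative_drop(before_gbps, after_gbps):
    """Fraction of bandwidth lost when falling back from the fast path to the slow one."""
    return (before_gbps - after_gbps) / before_gbps


# Figures quoted in the post (GB/s).
XB1_ESRAM_PEAK = 140.0
XB1_MAIN_RAM = 68.0
X360_EDRAM = 32.0
X360_MAIN_RAM = 22.4

# Assumption: not stated in the post, commonly quoted PS4 GDDR5 bandwidth (GB/s).
PS4_MAIN_RAM = 176.0

xb1_drop = relative_drop(XB1_ESRAM_PEAK, XB1_MAIN_RAM)
x360_drop = relative_drop(X360_EDRAM, X360_MAIN_RAM)
xb1_vs_ps4 = relative_drop(PS4_MAIN_RAM, XB1_MAIN_RAM)

print(f"XB1 ESRAM -> main RAM: {xb1_drop:.0%} drop")
print(f"360 eDRAM -> main RAM: {x360_drop:.0%} drop")
print(f"XB1 main RAM vs PS4  : {xb1_vs_ps4:.0%} slower")
```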

Also, further in that thread it is clarified by Kagari that a Japanese developer was having similar ESRAM issues.

We don't know for sure what is the main cause of sub-1080p, but I would assume the lower graphical power and the ESRAM both contribute.

We are in the same situation for tiled deferred rendering as well AFAIK.

I don't know. I heard that each plane can display four rectangles, meaning that three of the rectangles could be flagged as empty and only one portion of the screen displayed from the buffer. If that was the case they could display one quarter of the screen at 1080p (main character) and the other three quarters at 720p, which I think would save memory. However, it is far more likely the additional display plane would be used for a 1080p UI stuck on top of a 720p screen. But I really don't know.

Yeah, I think this is most likely, for exclusives at least. Senjutsu brought it up yesterday and that's what encouraged me to read more about it. Thanks Senjutsu!

Don't think 3D games would ever try to have different resolutions for different quarters of the screen.

Wonder how much ESRAM is required for the 3rd display plane (which is dedicated to the OS). Also, how many multiplats would use this option is anyone's guess. It sounds like a feature specific to the Xbox One. PS4 has 2 layers, 1 for the game and the other for the OS, and PC wouldn't need this type of stuff. In any case, this definitely adds an unknown variable to the ESRAM situation. We might have even less available for native 1080p capability.

I just learned something. Not sure if this has been posted here or not. If it's 2x MSAA it would explain why 900p is going to be common for Xbone multiplats.

Edit: this is a bogus article. No mandate exists.

I. GPU Tradeoffs, 720p vs. 1080p Resolution Gaming Explained

Comments Mr. Baker:

We've chosen to let title developers make the trade-off of resolution vs. per-pixel quality in whatever way is most appropriate to their game content. A lower resolution generally means that there can be more quality per pixel. With a high-quality scaler and antialiasing and render resolutions such as 720p or '900p', some games look better with more GPU processing going to each pixel than to the number of pixels; others look better at 1080p with less GPU processing per pixel.

Microsoft also revealed that it's mandating at least 2x anti-aliasing in all its titles, a guideline that had not yet received significant media attention. Additionally, Mr. Baker and Mr. Goosen detail in the interview how the Xbox One operating system, firmware, and hardware are designed to allow system apps (e.g. a messaging client) to run alongside games at minimum cost.

http://www.dailytech.com/Microsoft+...aster+GPU+Less+Compute+Units/article33506.htm

Edit: this is a bogus article. No mandate exists.

What AA method (if any) does Forza 5 use?

I seem to remember the early build having no AA, but haven't been keeping track of its progress.

Remember that MS set technical requirements for 360 games (720p, 2x AA), yet it had plenty of early games that were sub-HD and had no AA.

This does not look like 2x MSAA. Not for a second.

I just learned something. Not sure if this has been posted here or not. If it's 2x MSAA it would explain why 900p is going to be common for Xbone multiplat's.

They said this exact thing before the 360 launched too. It won't happen.

This does not look like 2x MSAA. Not for a second.

Maybe that's how they're the only game hitting 1080p. They're not following MS requirements for 2xMSAA.

SwiftDeath

Member

They said this exact thing before the 360 launched too. It won't happen.

http://www.joystiq.com/2009/09/02/microsoft-lifts-xbox-360-minimum-720p-anti-aliasing-mandate-for/

In a column published today on Develop, Black Rock Studio (Pure, Split/Second) technical director David Jeffries revealed that Microsoft has removed an item from its TCRs (Technical Certification Requirements) that stated all Xbox 360 games must run at a minimum of 1280x720 (720p) resolution if the system is in HD mode. According to Jeffries, this was done earlier this year so that developers could be "free to make the trade-off between resolution and image quality as we see fit."

Joystiq has confirmed with a trusted source familiar with Microsoft's TCRs that Jeffries' claim is legit. Not only that, but, as of March 2009, Xbox 360 developers are no longer required to utilize full-screen anti-aliasing in their games. The elimination of both requirements is especially noteworthy since the console maker had touted that all 360 games would run at a minimum of 720p with at least 2x FSAA since before the hardware launched.

This does not look like 2x MSAA. Not for a second.

This is a 360 game right?

Maybe that's how they're the only game hitting 1080p. They're not following MS requirements for 2xMSAA.

Putting 1 and 1 together - ya, that's probably what's going on here. I wonder if the devs will like that? Personally I'd rather have 900p with 2x MSAA than 1080p with no AA.

This is a 360 game right?

Unfortunately no that's a pic from the newest Forza 5 video running on Xbone.

Dailytech misread the Eurogamer article.

There's no mentioning of mandating anything for X1, just the 360.

Eurogamer said: This matter of choice was a lesson we learned from Xbox 360 where at launch we had a Technical Certification Requirement mandate that all titles had to be 720p or better with at least 2x anti-aliasing

Dailytech misread the Eurogamer article.

There's no mentioning of mandating anything for X1, just the 360.

Yup. Bad translation. It's probably for the best to leave it up to devs. I think a big motivator for MS to enforce 2x AA was to make it difficult for multiplats to run equally on Sony because there was no free AA hardware in the PS3 GPU. Next gen it would probably be in Sony's favour to do something similar.

Dr. Kitty Muffins

Member

When Microsoft first spoke of "balance" they were admitting to be slightly weaker but stated the difference wasn't that vast. Seriously, I'm getting tired of hearing real world fanboys state that the Xbox One is more powerful because it is more balanced. Lol It really is funny how people behave in regard to consoles.

No amount of "balance" allows a console to outperform its max theoretical performance.

SniperHunter

Banned

MSAA and new engines don't mix well. It would nuke performance. BS article.

This is a 360 game right?

Oh lawd. What have you done.

LiLSnorpSnorp

Member

As someone proudly purchasing the xbone at launch (I see no reason to get the PS4 right now with its launch lineup, friends going with xbone, have had a profile for a long time on XBL, etc.) I will not say the X1 is more powerful than the PS4 (I understand hardware), or that there is no difference between the two. The PS4 is clearly the stronger system, however I do not believe that sub 1080p will be a generation long issue for MS, nor do I believe multiplats will be an issue (not saying they won't look better on the PS4 than the xbone).

To me, the real power struggle happens when Sony's 1P studios take advantage of the hardware given to them; then what does MS do? Sure, T10 will make beautiful racing games, and 343 will make a beautiful Halo game, but then what, then who? It's then that MS will have to deal with the power difference between the two; it's at that point, in my opinion, that even your average Joe will notice the difference in power between the two consoles. MS will really need to offer some great software, including 1P games, or they will hit a wall in the first few years of the generation.

It's funny because it is the opposite of what happened with the X360: the easier system to develop for at the launch of the current generation had the better architecture and the great games, but then died off with exclusives and such in the last few years, while Sony has done the opposite. Now MS has the weaker console, and had better come up with a way to offer some great software during these first few years like Sony's 1P studios almost surely will.

And I know they have Ryse, which looks fantastic (even at 900p) but Crytek isn't going to keep shelling out exclusives to MS, and Ryse has a lot to prove gameplay wise still. Same with DR3, great game at launch for MS, and nice to have it as an exclusive (like Titanfall-even if it is most likely a timed exclusive) but it's 1P studios that shine through a generation.

Great post. Really put the 32MB number in perspective.

NoMoreTrolls

Banned

Dat CBoaT post breathing some new life into this thread. Thank you, buttocks, for making some of the liveliest threads on NeoGAF that I've ever seen.

This does not look like 2x MSAA. Not for a second.

No offense but that game doesn't even look next gen to me. I guess IQ is the thing I consider most next gen, as it's the hardest to complete, but those textures man. Some of that stuff looks positively PS3/360 to me. Is that actually Xbox One or PS4 there?

EDIT: NEVERMIND. Holy cows man! That doesn't even barely look like an upgrade to me. I honestly thought that was Forza Horizon. Tears.

No offense but that game doesn't even look next gen to me. I guess IQ is the thing I consider most next gen, as it's the hardest to complete, but those textures man. Some of that stuff looks positively PS3/360 to me. Is that actually Xbox One or PS4 there?

Actually the IQ is shit. Motion blur is helping but there's actually no or little AA.

DirgeExtinction

Member

The more I look at this image, the less next-gen it looks to me.

No offense but that game doesn't even look next gen to me. I guess IQ is the thing I consider most next gen, as it's the hardest to complete, but those textures man. Some of that stuff looks positively PS3/360 to me. Is that actually Xbox One or PS4 there?

I thought that Drive Club looked insane during the PS4 reveal, but all the in-game footage I've seen since just looks like PS3.1 stuff.

Same with Forza 5. I was suitably impressed when it was first revealed, but it doesn't look particularly great to me. Certainly not compared to other Xbone games, let alone the likes of Killzone or Resogun.

To be fair, I haven't paid a great deal of attention to either racer, just basing my comments on screenshots and movies that pop up on GAF like the above Forza 5 one.

Actually the IQ is shit. Motion blur is helping but there's actually no or little AA.

Yeah, I was just saying the IQ for that game is dogshit. I thought it was 360 but people are telling me it's not. IQ for me is next gen because we should not be seeing jaggies like that. It totally kills it for me. And no, I am not trolling; I don't keep up with this thread and am fairly new.

I'll chalk this up to a severely rushed game.

I hope Driveclub aims for 30fps to be totally honest. That IQ isn't acceptable to me and DC looked to have some mighty fine jaggies on cars as well.

This does not look like 2x MSAA. Not for a second.

This is not next gen. The only next gen looking game on the Xbox One is Ryse. Unfortunately, the gameplay is shallow. Everything else just looks like a 360 game running at a higher resolution. A prime example of this is Titanfall. The gameplay looks amazing but the graphics look no better than Call of Duty. I will only be buying next gen looking games for my next gen consoles. Devs can take their cross gen games elsewhere.