G-SYNC - New nVidia monitor tech (continuously variable refresh; no tearing/stutter)

- Thread starter artist

Will this work on Linux (more like SteamOS) or is it just a Windows platform technology for now? I see Kepler cards and Display-port cable is a requirement.

I think it will work on anything as long as you have the GPU and Screen talking.

Great, I'm in as soon as I have to change my monitor/GPU.

But I highly doubt most gamers even know there is an issue to begin with, and I wouldn't risk betting on a unilateral success.

If Gsync monitors are more than $75 more expensive than their normal counterparts, I'm pretty sure the whole thing will crash.

mdrejhon

Member

Andy, send this commentary along with some of the other light boost stuff over to the engineering team.

AndyBNV, I sent you a PM with a link to my adaptive variable-rate strobing algorithm that avoids the flicker problem at low refresh rates:

www.blurbusters.com/faq/creating-strobe-backlight/#variablerefresh

-- We know G-Sync will predictably be superior to LightBoost for variable framerate situations. LightBoost is often bad for variable framerate situations.

-- However, LightBoost motion is sharper than 144fps@144Hz during maxed-out framerate situations (e.g. perfectly locked 120fps@120Hz VSYNC ON).

-- Therefore, we'd love to have our cake and eat it too -- gain great-looking LightBoost clarity when G-Sync caps out at its maximum framerate. This would produce far clearer motion than 144fps@144Hz (especially in motion tests).

mdrejhon

Member

If Gsync monitors are more than $75 more expensive than their normal counterparts, I'm pretty sure the whole thing will crash.

Actually, based on what I know, I think this feature is worth at least $200 extra to me. In fact, I'd pay $500 extra for LightBoost+G-Sync.

I think $175 is still great, because G-Sync improves your graphics far more than adding an additional $400 graphics card in SLI. Cheaper than an SLI upgrade, with more benefits. That's how good G-Sync is. G-Sync'd 40-55fps looks better than stuttery 70-90fps@120Hz. It will even feel like it has less lag. As Chief Blur Buster, I'm quite impressed that dynamic variable refresh rate LCD monitors have arrived this decade -- it is a very impressive engineering achievement. The bonus is that I keep my existing framerates, but the stutters disappear more strongly than they would by adding a second card in SLI! That's how hugely the stutters disappear! And the G-Sync driver boards have an improved LightBoost strobing circuit, according to what John Carmack said.

But I'd love it to have LightBoost, too!

Great, I'm in as soon as I have to change my monitor/GPU.

But I highly doubt most gamers even know there is an issue to begin with, and I wouldn't risk betting on a unilateral success.

If Gsync monitors are more than $75 more expensive than their normal counterparts, I'm pretty sure the whole thing will crash.

Most gamers weren't gonna buy a Titan, yet those sold like hotcakes. This isn't for "most gamers" but for gamers who want the best gaming experience and who don't mind paying for it.

TouchMyBox

Member

Will this work on Linux (more like SteamOS) or is it just a Windows platform technology for now? I see Kepler cards and Display-port cable is a requirement.

The system requirements state Windows 7/8, but with nvidia being as big of a supporter of linux and SteamOS as they are, it's pretty hard to imagine that it won't ever come to linux.

I wonder if it's a DirectX feature. You can 'mess' with the DirectX layer externally from the game (e.g. the Dark Souls HD fix) but not OpenGL. I might be wrong.

Though all graphics libs ultimately send their buffer of data to the gpu driver so it's probably just a Nvidia hardware to Nvidia hardware endpoint implementation.

I just hate that SED/FED never got an actual chance and we ended up with this crappy LCD tech where all of the options have their portion of defects.

LCD tech, you'll never get rid of that.

CRT/Plasma/OLED, way to go.

SuperFast LCD TN 1-2ms grey to grey

Panasonic Plasma 0.1ms black to black

OLED something in between

CRT what's a ms?

http://www.youtube.com/watch?v=hxv7mmKHRhs

Don't watch if you use an LCD monitor (it won't make the SED example accurate). Sorry that you bought obsolete technology, even if it's "new".

Will check that out. I didn't even think they made Plasma PC monitors. Thought the technology was mostly for 40"+ TVs.

Prophet Steve

Member

You can feel the difference between 60 fps and 120fps. Your eyes may not discern it, but your brain can sense that something is different. 60fps+ at 60hz is smooth. 120fps on a 144hz monitor is just buttery smooth. It's the same sensation you get when you go from playing consoles all of your life to a PC gaming setup on a nice monitor. It's a revelation.

More frames will still always be better. That's just extra slack in the system that can be used to drive the display or drive visual goodies. But you no longer have the 60 or 120 fps goalposts to shoot for. If it works as advertised (which it does, according to everyone who has witnessed it) then all you really need is a system that never really dips below 30 or 40 fps.

This technology means the most to entry level cards. The 'no more tearing' thing is nice, but what it does under 60 is just as important.

No. You still get the motion blur problem.

Contrary to popular myth, there is no limit to the benefits of even higher refresh rates, because of motion blur. As you track moving objects on a screen, your eyes are in a different position at the beginning of a refresh than at the end of a refresh. That causes frames to be blurred across your vision. Mathematically, 1ms of static refresh time translates to 1 pixel of motion blur during 1000 pixels/second motion. This is already well documented as "sample-and-hold" in scientific references, and has been talked about by John Carmack (id Software) and Michael Abrash (Valve Software).
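To put numbers on the rule of thumb above, here is a minimal Python sketch. It assumes a non-strobed display where each frame stays lit for the whole refresh, so persistence is simply 1/Hz; the function name `sample_and_hold_blur_px` is made up for illustration and real panels will differ somewhat.

```python
def sample_and_hold_blur_px(refresh_hz, speed_px_per_s):
    """Approximate blur width in pixels for eye-tracked motion.

    Assumes the frame is visible for the entire refresh (no strobing),
    and applies the rule: 1 ms of persistence = 1 px of blur at 1000 px/s.
    """
    persistence_ms = 1000.0 / refresh_hz
    return persistence_ms * speed_px_per_s / 1000.0


if __name__ == "__main__":
    for hz in (60, 120, 144, 1000):
        blur = sample_and_hold_blur_px(hz, 1000)
        print(f"{hz:>4} Hz: about {blur:.1f} px of blur at 1000 px/s")
```

Blur keeps shrinking as the refresh rate climbs, which is the point of the paragraph: there is no refresh rate at which the benefit suddenly stops.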

You may have heard people raving about LightBoost motion clarity lately in some of the high-end PC gamer forums. Motion blur on strobe-free LCDs only gradually declines as you go higher in refresh rates. Fixing this motion blur limit is easier to do by strobing. LightBoost is already equivalent to 400fps@400Hz motion clarity via 1/400sec strobe flash lengths. If you use LightBoost=10%, you'd need at least 1000fps@1000Hz G-Sync to get less motion blur during non-strobed G-Sync than with strobed LightBoost. Yes, strobing is a bandaid until we have the magic 1000fps@1000Hz display. But Rube Goldberg vacuum-filled glass balloons (aka CRTs) are a bandaid too, in the scientific progress to Holodeck perfection.

Good animation of how motion blur works -- www.testufo.com/eyetracking

Good animation of how strobing fixes blur -- www.testufo.com/blackframes

The strobe effect is why CRT 60fps@60Hz still has less motion blur than non-strobed LCD 120fps@120Hz, as noticed by extreme gamers who are used to CRT motion clarity.

LightBoost 100% uses 2.4ms strobe flash lengths. (about 1/400sec)

LightBoost 10% uses 1.4ms strobe flash lengths. (about 1/700sec)

The motion clarity difference between the two (using ToastyX Strobelight) is actually noticeable in fast panning motion, such as TestUFO: Panning Map Test (a blurry mess on your monitor; perfectly sharp on CRT and LightBoost) when toggling between LightBoost Strobe Brightness 10% and 100%. It's like having an adjustable-phosphor-persistence CRT. Mathematically, based on science papers, 1ms of persistence (visible static frame duration time) translates to 1 pixel of motion blur for every 1000 pixels/second of eye-tracking motion.
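As a rough cross-check of the strobe lengths quoted above, this sketch converts a strobe flash length into the non-strobed refresh rate that would give the same persistence. It only assumes persistence equals the flash length; `equivalent_refresh_hz` is a hypothetical helper name, not anything from LightBoost or ToastyX Strobelight.

```python
def equivalent_refresh_hz(strobe_ms):
    """Non-strobed refresh rate with the same persistence as a strobe flash."""
    return 1000.0 / strobe_ms


if __name__ == "__main__":
    for label, strobe_ms in (("LightBoost 100%", 2.4), ("LightBoost 10%", 1.4)):
        hz = equivalent_refresh_hz(strobe_ms)
        print(f"{label}: {strobe_ms} ms flash is roughly {hz:.0f}fps@{hz:.0f}Hz non-strobed")
```

The results land close to the rounded 1/400sec and 1/700sec figures given in the post.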

(For those living under a rock, LightBoost (for 2D) is a strobe backlight that eliminates motion blur in a CRT-style fashion. It eliminates crosstalk for 3D, but also eliminates motion blur (For 2D too). LightBoost is now more popular for 2D. Just google "lightboost". See the "It's like a CRT" testimonials, the LightBoost media coverage (AnandTech, ArsTechnica, TFTCentral, etc), the improved Battlefield 3 scores from LightBoost, the photos of 60Hz vs 120Hz vs LightBoost, the science behind strobe backlights, and the LightBoost instructions for existing LightBoost-compatible 120Hz monitors. It is truly an amazing technology that allows LCD to have less motion blur than plasma/CRT. John Carmack uses a LightBoost monitor, and Valve Software talked about strobing solutions too. Now you're no longer living under a rock!)

Sorry for the late and boring response, but I was assuming it would be a 60Hz monitor since there are no 120Hz or 144Hz 4k monitors coming yet so I was wondering why you would want 120FPS on 60hz.

Will check that out. I didn't even think they made Plasma PC monitors. Thought the technology was mostly for 40"+ TVs.

They don't make Plasma monitors and it is for large tv's. Plasma tv's make shitty computer monitors so I don't even know why people bring them up in PC conversations.

mdrejhon

Member

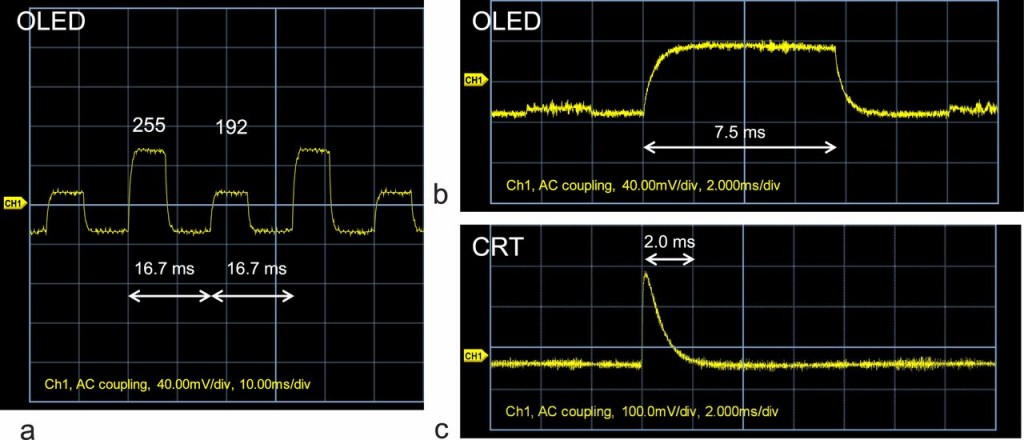

CRT/Plasma/OLED, way to go.

Sorry, OLEDs still have more motion blur than 144Hz LCDs.

Why Do Some OLED's Have Motion Blur?

SuperFast LCD TN 1-2ms grey to grey

GtG is not the main cause of motion blur on modern LCDs anymore. 1-2ms is only a tiny fraction of a refresh cycle. It's the persistence (static frame) -- see www.testufo.com/eyetracking for display motion blur that isn't caused by GtG transitions.

View this animation on a modern flickerfree display such as an LCD.

Panasonic Plasma 0.1ms black to black

Plasmas use phosphor with approximately 5ms of persistence, which can create ghosting effects that are noticeable on bright objects against dark backgrounds. Plasmas are great, though.

CRT what's a ms?

Depends on the phosphor: long-persistence versus short-persistence. A high-brightness Sony CRT monitor such as the GDM-FW900 uses a phosphor decay of 2ms. This creates more motion blur than a LightBoost monitor (configured to a 1.4ms strobe-backlight flash cycle). Take a look at Photos: 60Hz vs 120Hz vs LightBoost.

Don't watch if you use an LCD Monitor (it won't make the SED example accurate)

You'd be surprised that strobe-backlight LightBoost LCDs can have less motion blur than medium-persistence CRTs such as the Sony FW900. Have you seen the "It's like a CRT" testimonials?

High-speed video of a strobe-backlight LCD:

http://www.youtube.com/watch?v=hD5gjAs1A2s

The backlight is turned off while waiting for pixel transitions (unseen by human eyes), and the backlight is strobed only on fully-refreshed LCD frames (seen by human eyes). The strobes can be shorter than pixel transitions, breaking the pixel transition speed barrier.

Again, GtG is no longer the primary cause of motion blur, since GtG times (1-5ms) are now a tiny fraction of a refresh cycle (16.7ms at 60Hz). Science papers confirm a second cause of motion blur called "sample-and-hold". Most motion blur on modern displays is caused by persistence (how long a frame shines for). As you track moving objects on a screen, your eyes are in a different position at the beginning of a refresh than at the end of a refresh. That blurs the frame across your eyes, creating display motion blur that is not caused by GtG. See animation: www.testufo.com/eyetracking -- view this on a non-strobed LCD (you see motion blur not caused by GtG). Then view it again on a CRT/LightBoost (both look identical). This is what John Carmack and Valve Software have been talking about lately.

The LCD motion blur breakthroughs of the last several months must have missed your attention. Did you know I can read the map labels in this TestUFO Panning Map Test on my LightBoost LCD display at a whopping 1920 pixels per second? That's CRT-clarity motion. LightBoost has 12x less motion blur than a 60Hz LCD: where you used to get 10 pixels of motion blurring, you now have less than 1 pixel. That's how dramatic LightBoost blur reduction can be. The LCD motion blur problem has already been conquered with LightBoost, as well as with the G-Sync board's own optional low-persistence strobing feature, confirmed by AndyBNV and by John Carmack. This is exciting; a strobe mode that's better than LightBoost! Even though you can only choose G-Sync or strobing (not both simultaneously) at the moment.

Yes, LCD's have crappy blacks.

Yes, LCD's have crappy view angles.

But LCD motion blur is a scientifically solved problem since early 2013 because of ultra-short strobe backlights.

CRT-clarity motion on LCDs is here today already.

Mark Rejhon -- Chief Blur Buster

mdrejhon

Member

Sorry for the late and boring response, but I was assuming it would be a 60Hz monitor since there are no 120Hz or 144Hz 4k monitors coming yet so I was wondering why you would want 120FPS on 60hz.

Oops, my apologies. I thought I was replying to a person who didn't believe in going beyond 60fps.

So I now interpret you as: "Are there benefits of doing framerates higher than refresh rates?"

The answer is: YES, for input lag.

Ultra high framerates are beneficial during VSYNC OFF. Even 300fps can benefit. During 300fps@60Hz VSYNC OFF, every pixel on the screen was generated from the GPU less than 1/300sec ago. This reduces input lag. 300fps@60Hz will create about 5 tearlines per display refresh (300fps / 60Hz = 5), and each horizontal "slice" of frame (divided by tearlines) will be a different, consecutively more recent frame, guaranteeing that every pixel on that refresh is fresher than if you were doing only 60fps@60Hz, during the LCD's top-to-bottom refresh (as seen in high speed video for the non-strobed portion).
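The arithmetic in that paragraph can be sketched in a few lines of Python. This is a simplified model: it assumes perfectly even frame pacing and ignores render time and panel latency, and `vsync_off_slices` is just an illustrative name.

```python
def vsync_off_slices(fps, refresh_hz):
    """Return (frame slices per refresh, worst-case frame age in ms).

    With VSYNC OFF, a new frame is spliced in every 1/fps seconds while the
    panel scans top to bottom, so each refresh is built from fps/refresh_hz
    slices and no slice shows a frame older than 1/fps seconds.
    """
    slices_per_refresh = fps / refresh_hz
    worst_age_ms = 1000.0 / fps
    return slices_per_refresh, worst_age_ms


if __name__ == "__main__":
    for fps in (60, 120, 300):
        slices, age = vsync_off_slices(fps, 60)
        print(f"{fps}fps@60Hz: about {slices:.0f} slice(s) per refresh, "
              f"newest data at most {age:.1f} ms old")
```

At 300fps@60Hz this gives the 5 slices per refresh mentioned above, with every slice at most about 3.3 ms old instead of 16.7 ms.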

Understanding this better requires understanding that the LCD is being refreshed while the data is arriving on the display cable. This is how modern gaming LCDs work; they refresh the panel in real time directly from the signal cable with a subframe latency (mostly caused by the GtG pixel transition). If you change the frame while the display is being scanned, you're getting a newer frame spliced into the current refresh pass (creating the tearline). That slice is a fresher, newer frame, which reduces input lag.

It even benefits triple buffering, if you have a proper triple-buffering implementation (one-frame queue depth, with the freshest frame replacing a queued not-yet-displayed frame if a new frame gets rendered before the previous frame got a chance to be displayed). It will have your fire button input from only 1/120sec ago, rather than from 1/60sec ago. So you mathematically have an 8ms faster reaction time at 120fps@60Hz than at 60fps@60Hz, thanks to the input lag reduction. Regarding 120fps@60Hz triple buffered or G-Sync, it can still reduce input lag if you display only the freshest frames (guaranteeing every 60Hz refresh is a frame only 1/120sec old, rather than 1/60sec old -- a frame latency of 8.3ms versus 16.7ms).
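The "one-frame queue depth, freshest frame wins" idea can be sketched as a tiny simulation. This is only a toy model with made-up names (`MailboxQueue`, `worst_frame_age_ms`): it assumes frames finish at perfectly even intervals, that a frame must finish strictly before a refresh to be shown on it, and that fps is at least the refresh rate. It is not how any particular driver implements triple buffering.

```python
from fractions import Fraction


class MailboxQueue:
    """One-slot queue: a newly finished frame replaces any frame still waiting."""

    def __init__(self):
        self._pending = None

    def submit(self, finished_at):
        self._pending = finished_at  # overwrite the stale, never-shown frame

    def take(self):
        frame, self._pending = self._pending, None
        return frame


def worst_frame_age_ms(fps, refresh_hz, refreshes=60):
    """Largest gap between a frame finishing and the refresh that shows it."""
    queue = MailboxQueue()
    render_step = Fraction(1, fps)
    refresh_step = Fraction(1, refresh_hz)
    next_finish = Fraction(0)
    worst = Fraction(0)
    for n in range(1, refreshes + 1):
        refresh_time = n * refresh_step
        # Queue every frame that finished before this refresh; only the last survives.
        while next_finish < refresh_time:
            queue.submit(next_finish)
            next_finish += render_step
        shown = queue.take()
        if shown is not None:
            worst = max(worst, refresh_time - shown)
    return float(worst * 1000)


if __name__ == "__main__":
    for fps in (60, 120):
        print(f"{fps}fps@60Hz: displayed frame is at most "
              f"{worst_frame_age_ms(fps, 60):.1f} ms old")
```

Under those assumptions the model reproduces the 8.3ms versus 16.7ms figures from the post.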

So in short: You get input lag benefits during 120fps @ 60Hz.

(but not motion fluidity benefits, like going from 30fps->60fps on 60Hz)

However, stutters (and tearing) can occur when doing fluctuating framerates above your Hz, because of the framerate-vs-Hz mismatch. Stutters/judders can hurt your aim badly, so G-Sync is wonderful for that. Aiming for frags becomes easier without stutters. It's easier to frag enemies using nVidia G-Sync, even if G-Sync caps out at 144fps. Some elite pro gamers wanting to do CounterStrike at 300fps, may not always prefer G-Sync, but nVidia's G-Sync certainly appears preferable to VSYNC OFF for the vast majority of players.

grandwizard

Member

Just wanted to say thanks; your posts have been really informative.

The info table floating around confuses me. Are all the other ports on the monitor disabled when the module is installed? Or was it just trying to say that the G-Sync will only work over Display Port? I like my current monitor because of the multiple inputs it has (PS3 plugged into the HDMI port right now).

Other than that, tech seems really cool. Give it to me on a 1440p screen worth a damn and I'm in!

doesn't even matter how few people use it when the software doesn't need to go out of its way to support it

Exactly, people seem to forget this.

Skel1ingt0n

I can't *believe* these lazy developers keep making file sizes so damn large. Btw, how does technology work?

Yep, I'm 110% in, regardless of price. I'm expecting 120Hz + G-Sync monitors in the $400 range. Less is great, and I'll gladly pay $500 on Day One. This is HUGE!!!

I really dislike SLI - too many issues - and thus I splurged on the Titan. It's great 95% of the time - but when it dips below 60fps on my 60hz monitor, it all feels wasted. I want to get a 120hz monitor - but why, if I can't attain 120fps in many demanding titles?

This solves it all. Hopefully they're on shelves really early next year.

Yep, I'm 110% in, regardless of price. I'm expecting 120Hz + G-Sync monitors in the $400 range. Less is great, and I'll gladly pay $500 on Day One. This is HUGE!!!

I really dislike SLI - too many issues - and thus I splurged on the Titan. It's great 95% of the time - but when it dips below 60fps on my 60hz monitor, it all feels wasted. I want to get a 120hz monitor - but why, if I can't attain 120fps in many demanding titles?

This solves it all. Hopefully they're on shelves really early next year.

Just because you can't get 120hz in all the most demanding titles doesn't make 120hz not worth it.

For example, I never finished playing Half Life 2 so I've started it again.

I have a GTX 570 and I can run the game with 4xMSAA and 8xSGSSAA and still lock in 120hz and the game looks incredibly smooth and animates really buttery.

Having a Titan, even if you can't do 120hz in every single new game, there are probably enough games out there that you'll eventually play that being able to play at 120fps would be a really sweet benefit to going back.

Yeah, I was thinking about this with the Arkham City benchmarks: on a 560 Ti that game CAN exceed 120 and is regularly hovering around 80-90. That'd get a boost as is on the monitor being listed, and depending on how Arkham Origins goes, that'd be a brand new game that'd regularly be above 60 on cards that can use this. Then there's games like Falcom's that, if I leave V-sync off, run in the THOUSANDS, but at that point it's outputting so many frames that it looks as if it were v-sync'd anyway.

Yep, I'm 110% in, regardless of price. I'm expecting 120Hz + G-Sync monitors in the $400 range. Less is great, and I'll gladly pay $500 on Day One. This is HUGE!!!

I really dislike SLI - too many issues - and thus I splurged on the Titan. It's great 95% of the time - but when it dips below 60fps on my 60hz monitor, it all feels wasted. I want to get a 120hz monitor - but why, if I can't attain 120fps in many demanding titles?

This solves it all. Hopefully they're on shelves really early next year.

FYI SLI is like using a single card now... 99% of the time it works just fine. It's just way, way faster. Love it.

Unknown One

Member

I wonder whether this would help alleviate microstutter from SLI. Probably not, is my guess.

Deleted member 125677

Unconfirmed Member

I thought monitor was the ONE thing I wouldn't have to upgrade ever

Fuck you, NVIDiA!

I planned on going with the R9 series next upgrade but I guess that wasn't meant to be

Fuck you, NVIDiA!

thank you NVIDIA

I planned on going with the R9 series next upgrade but I guess that wasn't meant to be

So how are they going to avoid strobing when the framerate drops?

minimum cap on refresh rate.

Just because you can't get 120hz in all the most demanding titles doesn't make 120hz not worth it.

For example, I never finished playing Half Life 2 so I've started it again.

I have a GTX 570 and I can run the game with 4xMSAA and 8xSGSSAA and still lock in 120hz and the game looks incredibly smooth and animates really buttery.

Err, Half-Life 2 is nearly 10 years old at this point. Playing it now kind of misses out on the wow factor the game had going for it in 2004.

I was going to ask what this would mean for game recording software, as vsynced visuals would be much more easily translated into video. Then I realized most video formats already allow timecodes for individual frames, so each frame can be displayed with its own timing. If anyone asked, that'd be the answer.
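A minimal sketch of that idea, not tied to any real container format or recording tool: if the recorder knows how long each frame stayed on screen, per-frame timestamps are just a running sum. The function name `presentation_timestamps_ms` and the sample durations are made up for illustration.

```python
from itertools import accumulate


def presentation_timestamps_ms(frame_durations_ms):
    """Start time of each frame, given how long each one was displayed."""
    if not frame_durations_ms:
        return []
    # The first frame starts at 0; each later frame starts when the previous ends.
    return [0] + list(accumulate(frame_durations_ms))[:-1]


if __name__ == "__main__":
    durations = [16, 22, 19, 25]  # hypothetical uneven frame times in ms
    print(presentation_timestamps_ms(durations))
```

A player that honours these timestamps would show each recorded frame for the same uneven duration it had on the variable-refresh display.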

I still wish this would be implemented in a display standard (an extended HDMI 1.4, HDMI 2.0, etc.)

michaelius

Banned

Question to anyone who knows

Will all monitors with G-sync be limited to display port only ?

Or is it possible to create one with DP and some other inputs like HDMI/DVI (those don't have to have g-sync) ?

I'm connecting my ps3 (and future ps4) to pc display so it's kinda important

The PS3 may have an nVidia GPU, but it's effectively several generations too far out of date to take advantage of this!

Seriously though, you need a PC, and you need a GPU on this list to use it. So the Display port thing is likely a literal non-issue: you'd be using a video card and monitor that has one anyway if you want to use this, assuming it can't just do it over DVI.

EDIT: Actually I may've misinterpreted your post. Still, there's one monitor out now that can be modded, and it has both DVI and HDMI, so there's no need for them to make the displays with ONLY Displayport.

Question to anyone who knows

Will all monitors with G-sync be limited to display port only ?

Or is it possible to create one with DP and some other inputs like HDMI/DVI (those don't have to have g-sync) ?

I'm connecting my ps3 (and future ps4) to pc display so it's kinda important

There's no reason for the monitors to be DisplayPort only. If you want the benefits of G-Sync, then yes, you'll be forced to use DisplayPort. If you want to use HDMI, there'll be a port, but your monitor will function as it does now.

michaelius

Banned

The PS3 may have an nVidia GPU but it's effectively several generations too far out of date to take advantage of this!

Seriously though, you need a PC, and you need a GPU on this list to use it. So the Display port thing is likely a literal non-issue: you'd be using a video card and monitor that has one anyway if you want to use this, assuming it can't just do it over DVI.

EDIT: Actually I may've misinterpreted your post. Still, there's one monitor out now that can be modded, and it has both DVI and HDMI, so there's no need for them to make the displays with ONLY Displayport.

Yes i know.

I want to connect my PC with gtx 770 to g-sync display through DP and then have other inputs to connect ps3/ps4 to this display too.

And if you modded VG248QE you lose other inputs

... Hmm. I didn't pay close enough attention; they could be speaking of just the G-Sync, or they really do shut off the other ports. That can be really problematic if true and not just a quirk from modifying that display.

michaelius

Banned

... Hmm. I didn't pay close enough attention, they could be speaking of just the G-Sync, or they really do shut off the other ports. That can be really problematic if true and not just a quirk from modifying that display.

Well, as far as I know, the main source of input lag in displays is signal processing - scalers and electronics for overdrive and handling of multiple inputs.

That can be observed on Korean 27" displays - get a Qnix/Crossover with a single input and no scalers and you have around 6 ms lag - get one with additional ports and a scaler for them and you have 20 ms input lag.

So with G-Sync reducing input lag among other things, it probably bypasses this whole section in the display.

Which makes me wonder if factory-made G-Sync displays will have more than 1 display port.

I guess we will see in the next few months when factory-made models are announced.

Prophet Steve

Member

Thank you, that is informative and interesting. Although I do doubt a bit that this is what he meant by maxing out a 4k display.

Wonder if it's possible to put in a switch to flip, if it must be done by hardware, so that you either shut off the other inputs in favor of just the DisplayPort, or enable them but have G-Sync disabled. Would be kinda disappointing if the concession is only being able to use your computer with a display.

Well, as far as I know, the main source of input lag in displays is signal processing: scalers and electronics for overdrive and handling of multiple inputs.

That can be observed on Korean 27" displays: get a Qnix/Crossover with a single input and no scaler and you have around 6 ms lag; get one with additional ports and a scaler for them and you have 20 ms input lag.

So with G-Sync reducing input lag among other things, it probably bypasses this whole section in the display.

Which makes me wonder if factory-made G-Sync displays will have more than 1 DisplayPort.

I guess we will see in the next few months when factory-made models are announced.

A quality design would bypass converters, scalers and other processing when they aren't being used. I don't think there is a technical reason why this can't be done; of course it might add some cost. My guess is the designers of quality displays just haven't been considering gamers that much, and only hardcore enthusiasts will look up tested input latencies.

G-Sync is the most exciting PC tech I've seen in years. No wasting graphics quality in 90% of the game in order to keep game locked at 60FPS in the most demanding 10%. No need to fine-tune the settings. You just see all the power of your GPU.

The value proposition of a GPU becomes much simpler: pay more and you see more FPS at the same settings. No more "can maybe enable this extra effect if the additional performance goes over a certain threshold that allows still hitting 60FPS", etc. For me, G-Sync actually gives motivation to invest more in a GPU when I do upgrade.

The first 1440p, non-TN G-Sync display that comes out has a very good chance of getting my money.

(Please, pretty please, put G-Sync in displays with pro panels, styling and ergonomics in addition to the blinged out shiny plastic gamer fare!)

100% agreed. Given that 1440p and 4k G-Sync displays are coming, I have high hopes for that. I can't imagine anyone building a TN panel at those resolutions.

I don't know if it's my age or what. But I almost throw up at the way some keyboards, monitors, and mice are styled. In my late teens and early 20s I would have been all over that crap.

Horse Armour

Member

Why is this needed on PC and not on consoles? Every PS4 video I've seen doesn't have any tearing, is it because PC graphics cards don't have enough GDDR5?

I almost fell for this.

Lack of avatar completes it.

I have to say that I considered that LED and window stuff in PCs silly even when I was in my teens (at which point it was just starting).

But hey, if people like it then more power to them.

I fell for it until I read the user name.

Dictator93

Member

Horse Armour is always there to remind us.

One of my favorite posters.

I'm guessing there won't be a way to upgrade my BenQ XL2411T due to lack of DisplayPort?

For the next year, yes.

Nvidia is negotiating with the HDMI consortium to adopt this.

Even if they are successful, don't expect the add-in board to be made for every display out there.

Monitor manufacturers are in the business to sell displays and not add-in boards.

This situation with the most popular Asus gaming monitor is probably a compromise made to ensure Nvidia's tech gets more word of mouth advertising.