
Is the AMD Jaguar CPU in consoles weaker than the Cell Broadband Engine Architecture?

- Thread starter QuickSilverD


The Cell advantage over Xenon isn't too surprising IMHO. When you program SPEs well, and with a workload suited to them, you can get really great efficiency. More so than on the PPE, and more so than on Xenon cores (both of which were really rather sucky "general purpose" cores).

That... is confusing. Is the XBO processor really that much faster than the PS4's at this? Even assuming the PS4 is clocked at 1.6 GHz (some benchmarks imply it's faster) and the XBO at 1.75 GHz, that's only 9% faster, which would put it at about 106 FPS in theory. So where is the extra coming from?

And Cell at 3x faster than Xenon? It's faster in some regards in theory, but even then 3x is at the upper bound; close to double is what you would usually expect, with absolutely perfect scaling across the SPUs.

I don't agree with this one.

A programmer's analogy:

Cell is like thirty or so drunk teenagers and two average teachers trying to herd them. Jaguar is like eight German Army generals.

The teenagers are so drunk that they have to cluster together to walk, but when it comes to breaking ice with bare fists, they will probably be a better fit. The thing is, the GPU is like a swarm of intoxicated crows that's even better at it (except they use beaks, but that's just a little implementation detail).

SPEs are highly polished and perfected instruments, but also hard to use and extremely specialized. It's more like comparing a shuriken to a huge stone. Most people will do a lot more damage lobbing the latter, and perhaps even hurt themselves with the former, but in the hands of a master the situation is reversed. Conversely, you can also use the stone to hammer in a nail, while you'd be hard-pressed to do that with the shuriken.

lightchris

Member


If you're purely compute bound, that might be true. I can imagine, though, that the SPUs can be faster if the problem fits nicely into the 256 KB local store. Since it is basically a manually managed cache, it's potentially faster than a transparent cache.
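To make the "manually managed cache" idea a bit more concrete, here is a minimal Python sketch, assuming a 256 KB local store, a hypothetical 64 KB reserved for code and stack, and two data buffers sized as a double-buffered DMA scheme would size them. The slicing and `extend` calls only stand in for DMA transfers; everything runs sequentially, and this is not real SPU code.

```python
LOCAL_STORE_BYTES = 256 * 1024  # SPE local store size mentioned above

def chunk_elems(elem_bytes, reserved_bytes, buffers=2):
    """Elements per buffer after reserving space for code/stack.

    buffers=2 sizes chunks the way a double-buffered scheme would
    (one buffer in flight while the other is processed)."""
    usable = LOCAL_STORE_BYTES - reserved_bytes
    return usable // (buffers * elem_bytes)

def scale_in_chunks(main_memory, factor, elem_bytes=4, reserved_bytes=64 * 1024):
    """Scale every element, touching main memory only in local-store-sized chunks."""
    n = chunk_elems(elem_bytes, reserved_bytes)
    result = []
    for start in range(0, len(main_memory), n):
        local = main_memory[start:start + n]  # stands in for a DMA "get" into local store
        local = [x * factor for x in local]   # compute only on data already in local store
        result.extend(local)                  # stands in for a DMA "put" back to main memory
    return result

print(chunk_elems(elem_bytes=4, reserved_bytes=64 * 1024))  # 24576 floats per buffer
```

The point is that the programmer, not the hardware, decides what sits in fast memory and when it moves; if the working set is chosen well, there are no surprise cache misses.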

prag16

Banned

How in the world did you arrive at such a number?

Can we get a tales from my ass for this post?

Like a large butt spewing shit?

Agreed. This was an absurd post that almost made me spray water all over my keyboard.

I think it is clear that the PS4 and the Xbox One well outpower the Cell chip. That is not to say the Cell could not have been beefed up with an update in tech, but Sony decided against that course of action. A wise choice, since the PS4's x86 architecture makes it a pleasure to program for!

Hmmkay, let's see if I can do a comparison based on gflops.

XBOX360 CPU

3.2 GHz PPC tri-core Xenon

77 GFLOPS (theoretical)

XBOX ONE CPU

AMD Jaguar x86, 8 x 1.75 GHz

112 GFLOPS (theoretical)

----------------------------------------------

PS3 CPU

Cell Broadband Engine CPU, 6 x 3.2 GHz

ca. 100 GFLOPS real / 230 theoretical (ca. 200 GFLOPS on matrix multiplication)

PS4 CPU

AMD Jaguar x86, 8 x 1.6 GHz

102 GFLOPS (theoretical)

Well, I think game devs need to use the GPU more this generation, because GPU performance went from about 200-300 GFLOPS (PS3/360) to 1.85 and 1.3 TFLOPS.


Stats are from wiki and vgleaks.
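The "theoretical" CPU figures above are just cores x clock x floating-point operations per core per cycle. A minimal sketch, assuming 8 single-precision FLOPs per core per cycle for each chip (for Jaguar, a 4-wide add plus a 4-wide multiply each cycle; for Xenon, a 4-wide fused multiply-add). Those per-cycle figures are my assumption, not something stated in the thread, and a peak number says nothing about how much of it real code reaches.

```python
def peak_gflops(cores, ghz, flops_per_cycle):
    """Theoretical peak = cores x clock (GHz) x FLOPs issued per core per cycle."""
    return cores * ghz * flops_per_cycle

# Assumed: 8 single-precision FLOPs per core per cycle for all three chips.
specs = {
    "Xbox 360 Xenon (3 x 3.2 GHz)": (3, 3.2, 8),
    "Xbox One Jaguar (8 x 1.75 GHz)": (8, 1.75, 8),
    "PS4 Jaguar (8 x 1.6 GHz)": (8, 1.6, 8),
}

for name, (cores, ghz, fpc) in specs.items():
    print(f"{name}: {peak_gflops(cores, ghz, fpc):.1f} GFLOPS")
```

This reproduces the 77, 112 and 102 GFLOPS figures quoted above. Under the same assumption, each 3.2 GHz SPE peaks at 25.6 GFLOPS, so the 230 figure corresponds to nine such units (all eight SPEs plus the PPE of a full Cell chip), not the six SPEs available to PS3 games.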

QuickSilverD

Member

They're probably not directly comparable, but either way, one would expect a bigger jump in performance after 8 years :/

TaintedHotSauce

Member


Stop with this nonsense GFLOPS comparison. Xenon's instructions per cycle are terrible: it takes many clock cycles to complete a single instruction, and the CPU cores are crappy in-order designs. Also, the Cell SPEs are only suited to certain types of workloads, whereas the Jaguar cores are extremely good at general-purpose integer workloads. The Cell was also relied upon heavily to compensate for the anemic performance of the RSX GPU.

The Jaguar CPUs in the Xbox One and PC do far more work per clock cycle, are out-of-order designs and have more cache.

Clock speed doesn't mean a whole lot unless you're comparing two processors of an identical architecture.

It's not just the clock rates, either. The Jaguar IPC is much better for branchy general-purpose workloads than that of the last-gen CPUs, but still pretty distant from an Intel Core [whatever]. So you really have a factor of 3 or so difference in single-threaded CPU performance between the PS4 and a decent (not even going into enthusiast territory) gaming PC.

That 1.6 GHz really handicaps the CPUs. You can't multithread everything in a game to make up for it. It's better to have fewer threads running at much higher clock rates.
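The "you can't multithread everything" point is usually formalised as Amdahl's law (a standard result, not something cited in this thread): if a fraction p of a frame's work parallelises perfectly over n cores and the rest stays serial, the speedup is 1 / ((1 - p) + p / n). A minimal sketch; the values of p are purely illustrative, not measurements of any game.

```python
def amdahl_speedup(p, n):
    """Ideal speedup on n cores when a fraction p of the work is perfectly parallel."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("need 0 <= p <= 1 and n >= 1")
    return 1.0 / ((1.0 - p) + p / n)

# Illustrative parallel fractions (assumed, not measured) on 8 cores.
for p in (0.5, 0.8, 0.95):
    print(f"p = {p:.2f}: {amdahl_speedup(p, 8):.2f}x on 8 cores")
```

Even with 95% of the work parallel, eight cores give under 6x, and the serial part has to run at the slow per-core speed. That is why a few fast cores can beat many slow ones for game code.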

QuickSilverD

Member

Mind if I ask a probably not-so-bright question: what makes multithreading so difficult? Aren't games running many dozens of processes anyway? Can't they just hand these out evenly to the different cores?

Why would you put a dog in the trunk?


There was a really good Valve presentation from a while ago explaining why just putting different processes on different cores is bad. It's probably the easiest thing to do but it's also horribly inefficient; they said that in TF2 this kind of splitting of tasks only led to 1.2x performance over a single core.

JonathanPower

Member

As far as I can see, even if the Cell is marginally better than the Jaguar CPU at some tasks, it's marginally better at tasks it was specifically designed to excel at.

What we're seeing is that the 'tablet' CPUs in the PS4 and Xbone are very nearly as good as the Cell at the tasks the Cell was specifically designed for, despite the fact that they are low-end, general-purpose CPUs.

It's a bit like finding out that your average family car from 2014 has almost the same 0-60 time as a supercar from 2008. Yes, the supercar is still marginally faster, but you still can't fit the family dog in the trunk.

Exactly. And unlike Cell, all the power of Jaguar is easily accessible to programmers.

Synchronization, and read-modify-write access to shared resources and data.

Not all parallelization is difficult -- there are problems, such as shading pixels, which are what we call "embarrassingly parallel" -- and that's why it's even possible to use exceedingly parallel computing platforms such as GPUs efficiently. The difficulty comes in when trying to effectively parallelize algorithms which are not embarrassingly parallel. It's not unusual for an efficient parallel version to be far more complicated to design, write, maintain and debug than semantically equivalent serial code.

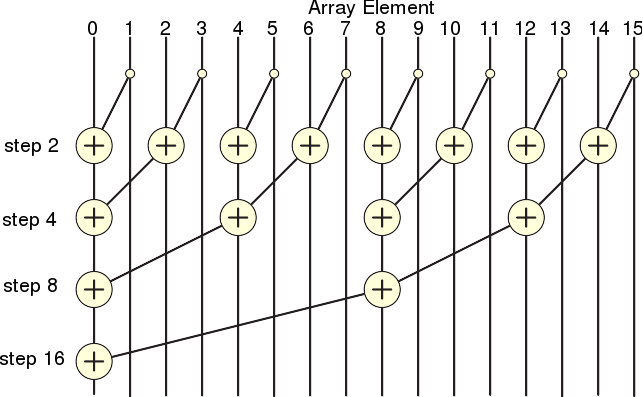

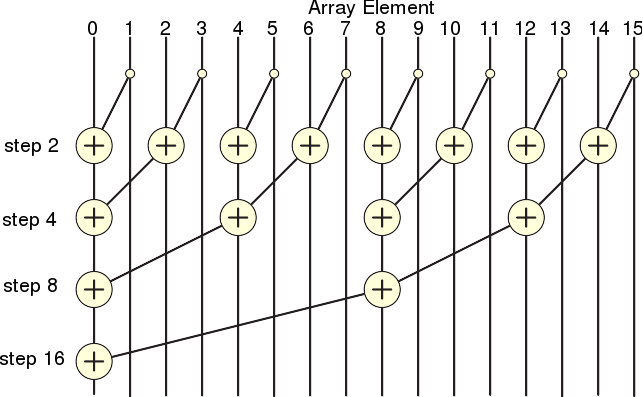

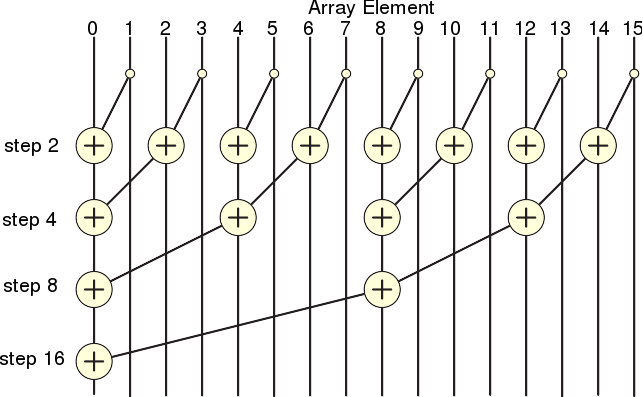

Consider calculating the sum of all elements in an array (a list) -- a reduction operation. Serially, you just iterate over the list and add each element to the running total until you are done. Probably 3 lines of code.

To maximize parallelism, you need to do something akin to this:

Suddenly you have log(N) time steps, each with synchronization between them, and need to take care of where you are addressing your array in each step and element. And that's just reduction, which is pretty damn simple to parallelize in the grand scheme of things.

I could go on and on about this (I teach a lecture about it, after all), but that's the central idea.
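For readers who want the two versions of the array sum side by side, here is a minimal Python sketch: a serial loop, and a pairwise tree reduction with one thread per pair and a barrier separating the ceil(log2 N) steps. It only shows the structure (stride-based indexing, idle workers, synchronisation between steps). CPython's GIL means these threads won't actually speed anything up, and a real implementation would give each worker a block of elements rather than a single pair.

```python
import threading

def serial_sum(xs):
    # The "3 lines" version: walk the list and accumulate.
    total = 0
    for x in xs:
        total += x
    return total

def tree_sum(xs):
    """Pairwise tree reduction with a barrier between the ceil(log2 N) steps."""
    data = list(xs)
    n = len(data)
    if n < 2:
        return data[0] if data else 0
    steps = (n - 1).bit_length()          # equals ceil(log2(n)) for n >= 2
    workers = (n + 1) // 2                # one worker per pair at the first step
    barrier = threading.Barrier(workers)

    def worker(i):
        stride = 1
        for _ in range(steps):
            j = i * 2 * stride            # left element this worker owns at this step
            if j + stride < n:            # otherwise this worker idles for the step
                data[j] += data[j + stride]
            barrier.wait()                # nobody starts step k+1 until step k is done
            stride *= 2

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return data[0]

print(serial_sum(range(1, 101)), tree_sum(range(1, 101)))  # 5050 5050
```

Most of the second function is bookkeeping: which index each worker owns at each step, which workers sit idle, and a full barrier every step.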

lightchris

Member


First of all, we're talking about threads here, not processes (a single application usually launches a single process; try Wikipedia for the differences between a process and a thread).

Traditionally games ran on a single thread, because there was no need to launch more threads on a single core CPU. That means that all the game logic processing is done sequentially: For example, for each frame you need to fetch the player's input, do the AI and physics calculations, update the positions of your objects and camera accordingly and finally send those positions to the GPU to render. In order to run this stuff on multiple cores you need to segregate the work; this may or may not be trivial (see Durante's post).

Consider a simplified example where you decided to run the AI on core A and the rest on core B. Each frame the two cores need to synchronize when they're done with their calculations, meaning that if core A is done faster than core B, it has to wait until core B is finished. You can probably imagine that this may vary wildly depending on your current situation in game. Let's say core A takes 10 ms while core B only takes 5 ms and has to wait another 5 ms: The whole computation is done after 10 ms. Sequentially the same work would've taken 15 ms, so our speedup is only 50% even though we wrote code that would've potentially doubled the performance.
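The same arithmetic in one line: with a sync point every frame, the parallel frame time is set by the slower core, so with $T_A = 10$ ms and $T_B = 5$ ms the speedup over running both sequentially is

$$
S = \frac{T_A + T_B}{\max(T_A, T_B)} = \frac{10\,\mathrm{ms} + 5\,\mathrm{ms}}{10\,\mathrm{ms}} = 1.5,
$$

and the ideal $S = 2$ is only reached when $T_A = T_B$.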

One difficulty in multithreading is to even out this stuff, so that each core never runs out of work and doesn't spend time being idle. The more sophisticated it gets, the more time you'll need to spend on writing and debugging the code.
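One common way to even this out (a general technique, not something the posters specifically describe) is to cut the frame's work into many small jobs and let whichever core is free grab the next one. A minimal simulation, assuming independent jobs with known durations; it only computes when the last core finishes (the makespan) and does not run real threads.

```python
import heapq

def makespan(job_ms, cores):
    """Frame time when each job goes to whichever core becomes free first.

    Assumes independent jobs with known durations, handed out in list order."""
    finish = [0.0] * cores                  # time at which each core becomes free
    heapq.heapify(finish)
    for d in job_ms:
        earliest = heapq.heappop(finish)    # the first core to run out of work
        heapq.heappush(finish, earliest + d)
    return max(finish)

# The split from the example above: one 10 ms AI job, one 5 ms job for the rest.
print(makespan([10, 5], cores=2))      # 10.0
# The same 15 ms of work cut into 1 ms jobs.
print(makespan([1] * 15, cores=2))     # 8.0
```

Smaller jobs bring the frame from 10 ms to 8 ms, close to the 7.5 ms ideal, at the cost of a scheduler and of making sure those jobs really don't depend on each other.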

wonderdung

Member

CBE has the highest peak performance among all of them. The Xbox 360's CPU runs at a higher clock speed, so it will outperform Jaguar in the rare cases where code is highly optimized to avoid stalls.

Jaguar will run most code faster, but the CPUs from last gen will run highly optimized code faster. Which is better? For the vast majority of tasks the Jaguar cores are better.

It is hard to reason about without a lot of experience, and even harder to test all possibilities.


Excuse my ignorance, as I hardly know anything about the subject or this kind of tech talk in general, but what would you say about the PS4/XONE's capabilities overall? Are they really as weak as some people make them out to be, or do they have some kind of "secret sauce", or is there anything else people might be missing about the architecture/specs?

There are a lot of conflicting stories about it, actually. Some people say this gen of consoles is so "weak" it won't even hold out for 5 years, while others say these are perfectly fine consoles that will bring us great games and graphics in due time. It's kind of confusing. :\ (I like to believe they are powerhouses, though.)

They are weak compared to their PC counterparts, where CPUs are far more advanced. The only 'new' thing is the high number of ACEs in the PS4, but that surely can't beat the overall performance of the more advanced GPUs in the PC market. It will be interesting to see how much PS4 graphics improve in the future.

QuickSilverD

Member

Thanks godelsmetric, Durante, lightchris and wonderdung for your replies regarding parallelism.

Weak relative to PC, but overall the PS4 and XBONE should be much more capable than the PS3 and X360, so at the very least we will get the same gameplay with better graphics than before. I wouldn't be too worried about it.

Dictator93

Member


Yeah, this sounds like the situation.

That strongly depends on what sort of task you're trying to run. If it's a computational task with excellent opportunities for patterned instruction-level parallelism, then yeah, a well-made Cell program has a good shot at winning. But in a lot of cases it probably won't be possible to optimize code for Xenon or Cell to compete with Jaguar; Jaguar simply has a much snappier pipeline for highly sequential code with interesting behaviors, thanks to things like lower execution latency and much less bullshit.

If you build a rocket sled, you can go really fast on a smooth straightaway, but "good driving" won't necessarily be enough to keep you from constantly crashing on a windy trail.


Anybody interested in this further would do well to understand the difference between parallelism and concurrency, which is what Durante is talking about here. Efficient parallelism à la concurrency depends on how you hash.

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

sidenote/ When speaking about multithreading in this thread, it bears noting that we're discussing the subject in the context of multiprocessing. Multithreading per se is not limited to multiprocessing; e.g. you can have a bunch of I/O threads running efficiently on a single processor. /sidenote

Actually, evening out the work across cores is among the most fundamental problems of multiprocessing. GPUs pose only part of the solution. They are extremely good at 'threaded parallelism', where each thread in the block* has to do the exact same work as its siblings. The moment workloads in the block start to diverge, all siblings have to accommodate the slowest individual, and the GPU's efficiency drops like a stone**. The thing is, even some embarrassingly parallel problems can show such divergent behavior, which drags GPUs orders of magnitude below their theoretical performance levels.

So what do we do? Shall we somehow make GPUs divergence-friendly (i.e. more CPU-like), or shall we use large arrays of CPUs? With large arrays of CPUs you surely get the divergence covered, but you get to a point where the fully automated memory view provided by good ol' SMP is not an option anymore. Actually, that happens rather quickly. 64-core coherent chips? Sure, as long as you don't exercise coherency wildly across the ring bus. Frankly, kid, you'd do best to limit your talk to your neighbours.

So, unsurprisingly, the status quo in the land of CPU parallelism is NUMAs (coherence with a remark) and fully non-coherent, cluster-like compute meshes where each node is responsible for its own memory view. CELL was a step in the latter direction, and multi-socket servers (Xeons, POWER, etc.) are a step in the former direction (and desktops and current-gen consoles are following shortly in NUMA steps). Neither direction has proven an overall better choice than the other in the grand scheme of things. The fundamental difference is that NUMAs still try to exercise coherency, though devs have to be fully mindful of their cross-socket traffic if they're to get any meaningful performance***. Cluster-like meshes, on the other hand, are an honest-to-goodness 'you're a grown-up, you provide your own memory views. In return, I won't clog your buses with coherency chatter.'

Somewhere in between sit exotic designs like Parallella (a mesh-on-a-chip with manually controlled DMA, both on- and off-mesh, and a unified address space across the mesh nodes that behaves similarly to a large NUMA grid).

So why this wall of text? Because I wanted to emphasize that even when we have embarrassingly parallel algorithms, getting them to fully utilize the iron is a fundamental task in itself, one that is continuously being tackled at both the hw and sw levels.

* A fundamental grouping of threads, named differently by the different IHVs: warps, wavefronts, etc.

** Though recent nv GPUs tend to have multiple schedulers per warp.

*** Actually, they'd do best to watch even the intra-socket traffic, but let's leave that alone for now.
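A toy model of the divergence point, assuming a 32-wide warp/wavefront in which every lane runs a loop whose trip count depends on its data: the group keeps issuing until its slowest lane is done, so useful work divided by issued work falls fast. The numbers are made up for illustration, and the model ignores how real hardware schedules and masks lanes.

```python
def simd_efficiency(lane_iterations):
    """Fraction of issued lane-slots doing useful work when a lockstep group
    of lanes must keep running until its slowest lane finishes."""
    issued = len(lane_iterations) * max(lane_iterations, default=0)
    if issued == 0:
        return 1.0                        # nothing issued, nothing wasted
    useful = sum(lane_iterations)
    return useful / issued

uniform = [8] * 32                 # every lane does the same work
one_straggler = [1] * 31 + [32]    # one lane takes a much longer path
print(f"{simd_efficiency(uniform):.2f}")        # 1.00
print(f"{simd_efficiency(one_straggler):.2f}")  # 0.06
```

A single slow lane out of 32 is enough to leave roughly 94% of the issued slots idle in this model.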


Thanks.

Secretly hoping for Durante to chime in as well.

MrCunningham

Member

Xbox One best dance simulator confirmed!

That's why they bundled it with Kinect initially.

CELL was a very different beast to pretty much every other CPU you'll find in home electronics. The SPEs were insanely fast at certain tasks but not so good at the majority of things. There were also the well-publicised issues with getting everything running in parallel on the PS3.

The thread title is too general to get a yes or no answer.
