
My friend just told me the eye can only see 30FPS

- Thread starter Fudgepuppy

Sanctuary

Member

Your opinion is bad.

Many more people (or at least, of those who bothered to comment or take polls, etc.) disliked the way the two HFR Hobbit movies looked in motion than liked it. It had a very obnoxious soap opera effect going on that made it look awful compared to the 24fps version.

Saying that someone's opinion is bad because they don't like the way video looks at higher framerates, as opposed to video games, is itself a bad opinion. The two aren't even similar, because one is completely passive while the other is interactive.

Unless playing an Uncharted game of course.

Sparkedglory

Member

Your friend is dumb

Clockwork5

Member

Yeah, input lag sucks in real life too, huh?

Our brain interprets the constant stream of data transmitted from the eye much faster than 30fps.

Diminishing returns on what we can perceive don't really begin to kick in until closer to 60, but we still "see" everything at a much higher "framerate" than that.
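The "diminishing returns" point is easiest to see in frame times: each equal step up in frame rate shortens the gap between frames by less than the step before it. A minimal Python sketch of that arithmetic; it only describes display intervals and is not a model of where perception tops out:

```python
def frame_time_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def savings_per_step(rates: list[float]) -> list[tuple[float, float, float]]:
    """For each consecutive pair of frame rates, how many ms per frame the step saves."""
    return [(lo, hi, frame_time_ms(lo) - frame_time_ms(hi)) for lo, hi in zip(rates, rates[1:])]


if __name__ == "__main__":
    # Equal +30 fps steps buy less and less frame time.
    for lo, hi, saved in savings_per_step([30, 60, 90, 120]):
        print(f"{lo:>3} -> {hi:>3} fps: frames arrive {saved:.2f} ms sooner")
```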

Many more people (or at least, of those who bothered to comment or take polls, etc.) disliked the way the two HFR Hobbit movies looked in motion than liked it. It had a very obnoxious soap opera effect going on that made it look awful compared to the 24fps version.

Saying that someone's opinion is bad because they don't like the way video looks at higher framerates, as opposed to video games, is itself a bad opinion. The two aren't even similar, because one is completely passive while the other is interactive.

Unless playing an Uncharted game of course.

It's impossible for moving images to not look worse at lower framerates. Higher framerates make video more lifelike, more realistic. Low framerates turn the image into a juddery, blurry mess when the camera pans; higher framerates help reduce or eliminate the disconnect from reality that occurs when this happens and allow you to remain immersed in the video. Preferring lower framerates for anything is a bad, incorrect opinion.

Sentenza

Member

Their opinion is bad, too.

Many more people (or at least, of those who bothered to comment or take polls, etc.) disliked the way the two HFR Hobbit movies looked in motion.

It's impossible for moving images to not look worse at lower framerates. Higher framerates make video more lifelike, more realistic. Low framerates turn the image into a juddery, blurry mess when the camera pans; higher framerates help reduce or eliminate the disconnect from reality that occurs when this happens and allow you to remain immersed in the video. Preferring lower framerates for anything is a bad, incorrect opinion.

I recorded a little video showing pretty much what you just explained. This is what it looks like in slow motion; I think it is 120FPS played back at 30FPS. Recorded with my phone, so the quality is a bit meh. http://a.pomf.se/qgxxzl.mp4
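Filming at a high rate and playing back at a lower one stretches time by the ratio of the two rates, which is what makes the per-frame stutter visible in a clip like this. A minimal Python sketch, assuming the 120 FPS capture and 30 FPS playback the post guesses at:

```python
def slowdown_factor(capture_fps: float, playback_fps: float) -> float:
    """How many times slower than real time the footage plays back."""
    return capture_fps / playback_fps


def playback_seconds(real_seconds: float, capture_fps: float, playback_fps: float) -> float:
    """On-screen duration of something that took real_seconds when it was filmed."""
    return real_seconds * slowdown_factor(capture_fps, playback_fps)


if __name__ == "__main__":
    print(slowdown_factor(120, 30))      # 4.0
    print(playback_seconds(1, 120, 30))  # 4.0
```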

I don't agree with you on preferring lower framerates, though. For movies I can see why people don't want more than 24FPS: we are so used to how it looks, and for the most part it is just fine. It's just those occasional panorama shots that make the stuttering obvious. Personally I wouldn't mind if everyone started making them at 48FPS at least, but I have no problems with movies staying at 24 either.

Games on the other hand I want 120FPS whenever possible and 60 on console. A stable 30FPS with a controller is fine, but I would never take that over 60 if I had the option.

staticneuron

Member

I know right?! But then, he kind of made me uncertain. He explained it as this: The human eye has a response-time of 30hz, where everything completely in sync with this would look completely smooth (if it has motion blur and so forth) but that we perceive higher framerates better because it means there's more information leading to a smaller chance of your eye getting out of sync with the video.

Your eyes are analog. They are constantly receiving information and processing it.

I think your friend is confusing perception of motion with the persistence of vision. Vision is continuous but the perception is something done by your mind and that is where it can be fooled.

The reason why anything above 30hz or fps might be hard to perceive on tv or film is simply because of perception. For video games it is a bit different since it is interactive and input lag combined with obvious judder or drops in framerate breaks the perception/illusion of smooth continuous movement.

Well, he's full of shit. I can barely tell the difference between 30 and 60 unless I'm looking for it, but I still notice significant frame drops immediately because it feels like I'm dragging the control stick through molasses.

He dismissed this too.

rokkerkory

Member

Each eye can see 30fps and thus 60 fps for most of us

This isn't something where we need to give out participation trophies to everyone, or be accepting of subjective opinions, accepting people's differences.

Conversely, fuck you. I usually can't tell the difference. It has to be something with intense movement like F Zero for me to tell the difference. I've never denied that other people can tell the difference, but you don't have to be a dick about other people.

Even in your own post you concede that you can see a difference. This is a binary thing with a correct, objective assessment.

There is a difference between, "I can't tell a difference", and " It doesn't matter to me and I don't really care." The first is objectively wrong and is a lame cover up for some perceived slight. Given the "Fuck you", in your post, that does seem to be the case. The second is an understandable subjective opinion that people should agree to disagree on.

Each eye can see 30fps and thus 60 fps for most of us

Well, that does not include you then.

Sanctuary

Member

It's impossible for moving images to not look worse at lower framerates. Higher framerates make video more lifelike, more realistic. Low framerates turn the image into a juddery, blurry mess when the camera pans; higher framerates help reduce or eliminate the disconnect from reality that occurs when this happens and allow you to remain immersed in the video. Preferring lower framerates for anything is a bad, incorrect opinion.

No one was talking about watching movies at 10fps. And while filming at higher framerates might create a more "lifelike" reproduction, that doesn't actually make it a better experience. Video games on the other hand have many other considerations when factoring in higher framerates.

http://www.tested.com/art/movies/452387-48-fps-and-beyond-how-high-frame-rates-affect-perception/

Since silent films gave way to talkies in the 1920s, the frame rate of 24 frames per second has become standard in the film industry. 24 fps is not the minimum required for persistence of vision--our brains can spin 16 still images into a continuous motion picture with ease--but the speed struck an easy balance between affordability and quality. For the past century, cinema has trained us to recognize 24 frames per second as a reflection of reality. Or, at least, a readily acceptable unreality.

But to the average viewer, 48 fps looks like an exaggerated version of a television program shot at the common video tape speed of 30 fps.

That's exactly what it looked like to me.

How do I hate thee? Let me count the ways

Smith's attentional theory makes me wonder if the way we watch movies is something we learn subconsciously from the first moments we sit in front of a television. "Certainly there's a familiarity effect," he says, after thinking about it for a moment. "We're aware of what a cinematic image looks like. Now we're aware of what a TV image, even a high def TV image, looks like. So when we see the film image at 48 frames per second, it's cueing all of our memories of seeing something similar in TV. That's why people are calling it the soap opera effect or bad TV movie effect. Because that's what it looks like, what it reminds us of.

"Whether we learned to expect it to be a certain frame rate...I don't think it operates on that level. These low level sensory behaviors are something we don't really have conscious access to, we can't really control it. Our eyes know how to make sense of the real world, and they know how to make sense of a still painting or a movie. We will change what we interpret in the image based on the way it's presented to us. But we can't really see the frame rate directly. We can only see the consequence of it in what we perceive."

This is perhaps the simplest and clearest explanation of why High Frame Rate projection looks so unpleasant: It contradicts our memories and expectations. Even if we haven't "learned" to expect 24 fps playback, by this explanation kids could grow up watching 48 fps video and find it perfectly palatable.

On a surface level, 48 fps motion simply looks too fast, like a movie playing on fast-forward. But there's something specific about HFR that makes it especially unsettling, which may explain why we find it horrifying in film but don't mind it in video games: the uncanny valley.

Doctoglethorpe

Member

I've always wondered whether, if we used more than 10% of our brain, we could see more than 30fps with our eyes.

Cave Johnson

Member

Play the TLOU remastered edition and ask him to switch back from 60fps after playing for a good 10 min.

dude's in denial

I tried showing him the comparisons, but he refused.

NobleGundam

Banned

Welp.. Time to upgrade to cybernetic eyeballs, gaf.

I've always wondered whether, if we used more than 10% of our brain, we could see more than 30fps with our eyes.

Wow man, maybe we could experience sour tastes on every section of our tongue too.

Seriously though, why do you even care, OP? This kid sounds like he has an IQ of 80; I'd just drop it.

ScepticMatt

Member

That's a bullshit article.

Regardless, we aren't even seeing "frames" anyway, we are perceiving the fluidity of motion. According to this, we perceive reality at a rate somewhere between 24 fps and 48 fps.

http://movieline.com/2012/12/14/hobbit-high-frame-rate-science-48-frames-per-second/

I like the science articles posted by another poster here, or the papers collected here:

http://forums.blurbusters.com/viewtopic.php?f=7&t=333&sid=e13a26473e3f48c3befdf10ad7456c85

That's a different question from "what frame rate do I need for smooth motion".

"Tests with Air force pilots have shown, that they could identify the plane on a flashed picture that was flashed only for 1/220th of a second."

http://www.100fps.com/how_many_frames_can_humans_see.htm
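The flash figure can be put next to ordinary frame times: a single 1/220 s flash is shorter than one frame even at 144 fps, which is consistent with the point that detecting one brief flash is a different measurement from judging how smooth continuous motion looks. A small Python sketch of the arithmetic only:

```python
def period_ms(rate_hz: float) -> float:
    """Length of one cycle (or one frame) at the given rate, in milliseconds."""
    return 1000.0 / rate_hz


FLASH_MS = period_ms(220)  # a flash lasting 1/220 s, as in the quoted pilot test

if __name__ == "__main__":
    print(f"1/220 s = {FLASH_MS:.2f} ms")
    for fps in (30, 60, 144):
        share = FLASH_MS / period_ms(fps)
        print(f"  = {share:.2f} of one frame at {fps} fps")
```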

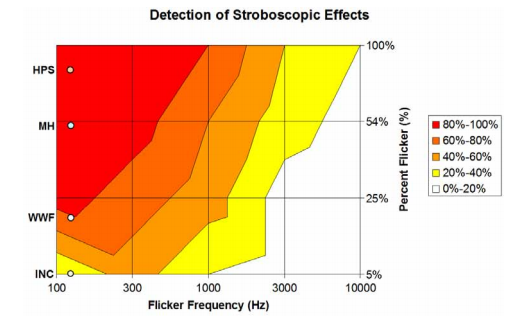

If you account for temporal aliasing, you may see changes above 1000 Hz; if you don't, 300 Hz is way overkill.

There is still a perception issue, there'll be a point where you just can't tell the difference any more, but we can still perceive changes in framerate up to 300fps.

No one was talking about watching movies at 10fps. And while filming at higher framerates might create a more "lifelike" reproduction, that doesn't actually make it a better experience. Video games on the other hand have many other considerations when factoring in higher framerates.

http://www.tested.com/art/movies/452387-48-fps-and-beyond-how-high-frame-rates-affect-perception/

That's exactly what it looked like to me.

Higher framerate is objectively better if the question is "Which provides a more authentic experience?" in terms of relation to real life, or at the very least the reality of what it looked like on set.

I don't think that's the question you're asking though. I think the question you're asking is "Which do I like more?" which is of course open to interpretation by every individual.

I think you two are arguing different things.

Also worth noting is that higher framerate isn't inherently better, as plenty of people don't like motion-interpolated movies and TV. Motion interpolation doesn't remove any of the original frames and only adds estimated data, so if the argument is "higher frame rate is always better", whoever is arguing that has to sort of work around that issue.
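The interpolation point can be illustrated with a toy frame doubler: every original frame survives and only estimated in-between frames are added. A Python sketch; real TVs estimate motion vectors rather than simply cross-fading pixel values as done here, so treat the linear blend as a deliberate simplification and the function names as hypothetical:

```python
Frame = list[float]  # toy frame: a flat list of pixel intensities


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linear mix of two frames: t=0 returns a, t=1 returns b."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def double_frame_rate(frames: list[Frame]) -> list[Frame]:
    """Insert one estimated frame between each original pair.

    Originals are passed through untouched; only the in-between frames are new.
    """
    if not frames:
        return []
    out: list[Frame] = []
    for a, b in zip(frames, frames[1:]):
        out.append(a)
        out.append(blend(a, b, 0.5))
    out.append(frames[-1])
    return out
```

With n input frames this returns 2n - 1 frames, and every even-indexed output is an original.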

AbandonedTrolley

Member

Everyone knows that it's per eye so we can see 60FPS!

SkeptiMism

Member

This really shouldn't be said often, but it's appropriate here I'd say. He's full of shit. Either he's in complete denial for some reason, or he's just bullshitting you.

It's simple maths: the higher the frame rate, the lower the response time. If you go from 30 frames to 60 frames, you have double the frames. If you have double the frames, your input response is also twice as fast.
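The doubling argument holds for the frame interval itself (33.3 ms at 30 fps versus 16.7 ms at 60 fps), but it only halves end-to-end response if every part of the delay scales with frame rate. A Python sketch with a hypothetical two-frame pipeline delay and an assumed fixed delay (for example, display processing) shows the ratio dropping below 2 once a fixed part exists:

```python
def frame_interval_ms(fps: float) -> float:
    return 1000.0 / fps


def toy_latency_ms(fps: float, frames_of_delay: float, fixed_ms: float) -> float:
    """Hypothetical latency model: some whole frames of delay plus a part that
    does not depend on frame rate (e.g. display processing). Not measured data."""
    return frames_of_delay * frame_interval_ms(fps) + fixed_ms


if __name__ == "__main__":
    for fixed in (0.0, 30.0):  # 30 ms is an illustrative assumption
        slow = toy_latency_ms(30, 2, fixed)
        fast = toy_latency_ms(60, 2, fixed)
        print(f"fixed part {fixed:>4} ms: {slow:.1f} ms -> {fast:.1f} ms ({slow / fast:.2f}x faster)")
```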

Also, I really wonder: what does he think the motion blur is there for in the first place? Motion blur is there to smooth out low frame rates, so with a high frame rate you don't need it. Someone correct me if I'm wrong here, though.
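One way to size motion blur borrows the film "shutter angle" convention, where a 180° shutter exposes each frame for half the frame interval. Under that assumption the blur streak shrinks in proportion to frame rate, which fits the intuition that higher frame rates need less of it. A Python sketch; the speed value and the 180° default are illustrative assumptions, and games often implement blur differently (for example with per-pixel velocity buffers):

```python
def exposure_s(fps: float, shutter_angle_deg: float = 180.0) -> float:
    """Exposure per frame under the film shutter-angle convention
    (180 degrees = open for half of each frame interval)."""
    return (shutter_angle_deg / 360.0) / fps


def blur_streak_px(speed_px_per_s: float, fps: float, shutter_angle_deg: float = 180.0) -> float:
    """How far an object moves while the virtual shutter is open."""
    return speed_px_per_s * exposure_s(fps, shutter_angle_deg)


if __name__ == "__main__":
    speed = 1200.0  # hypothetical on-screen speed in pixels per second
    for fps in (30, 60, 120):
        print(f"{fps:>3} fps: {blur_streak_px(speed, fps):.1f} px streak")
```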

I have a theory, though. He knows you visit NeoGAF often, right? Maybe he wanted to troll you so much that you'd make a thread about it, if he knows your account name (same as on Steam or somewhere else, for example). His explanation and complete denial kind of make me wonder if there's some truth to this.

Sanctuary

Member

It's simple maths: the higher the frame rate, the lower the response time. If you go from 30 frames to 60 frames, you have double the frames. If you have double the frames, your input response is also twice as fast.

Response time isn't even what's being discussed. You can have a 120hz screen that looks better in motion, but has an equivalent response time of a 60hz screen. Just like you can have one 60hz screen with a 12ms response time and another that's 40ms. A slow response time is what would cause ghosting in the older LCD TVs and monitors. Motion blur is used to make 30fps games look smoother, but that's not quite the same thing as ghosting and it's often simply used for effect.
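The distinction can be sketched by comparing a panel's pixel response time with its refresh interval: when a transition takes longer than one refresh, the old image is still fading when the next one arrives, which is one simple way to picture ghosting. The 12 ms and 40 ms figures come from the post; treating response time as a single number is a simplification, since real panels differ by transition. A Python sketch:

```python
def refreshes_spanned(response_ms: float, refresh_hz: float) -> float:
    """How many refresh intervals a pixel transition of response_ms lasts."""
    return response_ms / (1000.0 / refresh_hz)


if __name__ == "__main__":
    for response_ms in (12.0, 40.0):
        for hz in (60, 120):
            n = refreshes_spanned(response_ms, hz)
            print(f"{response_ms:>4} ms panel at {hz:>3} Hz: transition spans {n:.2f} refreshes")
```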

NemesisPrime

Banned

250 fps actually.

DeadlyVirus

Member

I remember when I was in college doing some research for a Video & Graphics presentation, I came across some biological studies saying that the human eye can't see any difference in any motion above 100 fps

Atraveller

Banned

What you are seeing here is the most advanced built-in 30/60 frame switch.

Well, that does not include you then.

ScepticMatt

Member

That's only true if you have perfect temporal anti-aliasing (which necessitates eye-tracking).

I remember when I was in college doing some research for a Video & Graphics presentation, I came across some biological studies saying that the human eye can't see any difference in any motion above 100 fps

Otherwise you may see differences even at 3000 Hz.

BBC thinks you need 700 Hz to avoid strobing/aliasing for UHDTV viewing distance/resolution:

http://www.bbc.co.uk/rd/blog/2013/12/high-frame-rate-at-the-ebu-uhdtv-voices-and-choices-workshop
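A back-of-the-envelope way to see why such high figures come up at UHD resolutions: strobing tends to become more visible the further a moving object jumps between frames, and that jump only shrinks in proportion to frame rate. The pan speed below is an assumption for illustration, and this is not the model the BBC used. A Python sketch:

```python
def step_px(speed_px_per_s: float, fps: float) -> float:
    """Distance a moving object jumps between consecutive frames, in pixels."""
    return speed_px_per_s / fps


if __name__ == "__main__":
    # Hypothetical pan: an object crosses a 3840-px-wide UHD frame in 2 seconds.
    speed = 3840 / 2
    for fps in (24, 60, 120, 700):
        print(f"{fps:>3} fps: jumps {step_px(speed, fps):.1f} px per frame")
```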

BlackRainbowFT

Member

Being elitist doesn't change your vision.

There IS a difference between 30 and 60 FPS. It's a fact. Don't see how it's even debatable to be honest.

Whether or not you mind is another thing. Just don't deny the difference.

And this is a very simple topic! People with no knowledge still feel the need to think they're right...

It's no wonder why more serious topics also get deniers who spread lies...

Climate change, autism, vaccines, etc.

I have a G-Sync 144hz display and have been playing around with CSGO's fps_max setting (which can be applied in real-time in the game). As g-sync syncs the refresh rate to the frame rate, it might be a decent test.

I saw a noticeable improvement up until about 132hz. When flicking it to 144hz I could see the difference in certain scenarios, but it wasn't immediately obvious to me (the difference between 132 and 144). But how high that bar is for me does seem highly game-dependent, and I suspect it'd also change significantly at different resolutions.

In games that didn't allow me to set the framerate cap arbitrarily and in real-time, I instead flicked the max refresh rate and I could see bigger differences in games like The Witcher 2 between 120hz and 144hz.
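Since G-Sync matches the refresh rate to the frame rate here, the steps described can be converted into refresh intervals. The arithmetic shows why 132 to 144 Hz is a much subtler change than 120 to 144 Hz, let alone 30 to 60. A Python sketch; it says nothing about any individual's perception threshold:

```python
def interval_ms(hz: float) -> float:
    """Refresh interval in milliseconds."""
    return 1000.0 / hz


def interval_gap_ms(low_hz: float, high_hz: float) -> float:
    """How much shorter each refresh gets when going from low_hz to high_hz."""
    return interval_ms(low_hz) - interval_ms(high_hz)


if __name__ == "__main__":
    for low, high in ((132, 144), (120, 144), (30, 60)):
        print(f"{low:>3} -> {high} Hz: {interval_gap_ms(low, high):.2f} ms shorter per refresh")
```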

I have glasses and I've started to notice some lag because of them. I see everything about 0.5 sec later ;( which really sucks in traffic.

I need thinner glasses; maybe I can get the lag down a bit.

Wait what? Is this a joke?

No, the resistance of my thick glasses slows down incoming pictures, so I see them later.

( ͡° ͜ʖ ͡°)

Headhunter

Neo Member

Each eye can see 30fps and thus 60 fps for most of us

Best argument EVAR! Case closed!

SkeptiMism

Member

The response time comment was in response to OP's comments in the thread where he said the guy completely denies that too. But yes, increasing frame rate doesn't always decrease the response time.

Response time isn't even what's being discussed. You can have a 120hz screen that looks better in motion, but has an equivalent response time of a 60hz screen. Just like you can have one 60hz screen with a 12ms response time and another that's 40ms. A slow response time is what would cause ghosting in the older LCD TVs and monitors. Motion blur is used to make 30fps games look smoother, but that's not quite the same thing as ghosting and it's often simply used for effect.

CosmicGroinPull

Member

Ask your friend if you can cum in his eyes and then tell you whether it's 30 or 60fps.

Higher framerate is objectively better if the question is "Which provides a more authentic experience?" in terms of relation to real life, or at the very least the reality of what it looked like on set.

I don't think that's the question you're asking though. I think the question you're asking is "Which do I like more?" which is of course open to interpretation by every individual.

I think you two are arguing different things.

Also worth noting is that higher framerate isn't inherently better, as plenty of people don't like motion-interpolated movies and TV. Motion interpolation doesn't remove any of the original frames and only adds estimated data, so if the argument is "higher frame rate is always better", whoever is arguing that has to sort of work around that issue.

You bring up a good point, and it's something a lot of people don't consider.

The average movie set feels fake once you're on it; the lighting, the props, the makeup... none of it stands up to the kind of scrutiny 60fps provides. Yes, it's a more accurate representation of what was filmed, but when what was filmed wasn't convincing in the first place, what are you really getting closer to? That's not to say there isn't a place for higher frame rates, just that the standards will have to be raised considerably for it to work.

I've always wondered whether, if we used more than 10% of our brain, we could see more than 30fps with our eyes.

The 10% idea is also a myth.

Canis lupus

Member

That's a different question from "what frame rate do I need for smooth motion".

So? If you can identify an object that was flashed for 1/220th of a second, does that not mean you can see 220fps?

NemesisPrime

Banned

The 10% idea is also a myth.

Indeed... I hate that they always mention that.

That 10% of our brain is there for a reason... because the brain can't regenerate, so it needs some spare "parts" to move stuff to when old stuff fails. Anyway... a bit OT

NemesisPrime

Banned

I remember when I was in college doing some research for a Video & Graphics presentation, I came across some biological studies saying that the human eye can't see any difference in any motion above 100 fps

It depends on where in your FOV you are looking. The human eye is a very sophisticated piece of equipment that has some smart tricks to minimize the information sent to the brain.

In the center of your FOV it can be up to 250 fps, depending on what is actually happening.

Anyway... apples and oranges.

NemesisPrime

Banned

Regardless, we aren't even seeing "frames" anyway, we are perceiving the fluidity of motion. According to this, we perceive reality at a rate somewhere between 24 fps and 48 fps.

http://movieline.com/2012/12/14/hobbit-high-frame-rate-science-48-frames-per-second/

What FPS does reality run at? (And yes, it does run in frames... there is a smallest unit of time called the Planck time, on the order of 10^-43 seconds.) An interesting question, TBH. All a bit OT but rather interesting (I am not going to explain all this... I am sure those of you who are interested can Google it). Let's just say that reality runs at a VERY high framerate.

Then again time is relative... that means that that framerate of reality is slightly different for each of us (if we are moving ofc).

Reality Lag!
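Taking the post's premise at face value, the numbers work out as below. Note that mainstream physics treats the Planck time (about 5.39 × 10^-44 s) as a natural unit, not an established smallest tick of time, so the "frame rate of reality" here is only a playful conversion. The second part uses the standard special-relativity Lorentz factor to show how small the "per-person" difference is at everyday speeds; the 30 m/s speed is an arbitrary example. A Python sketch:

```python
import math

PLANCK_TIME_S = 5.391247e-44  # CODATA 2018 value, rounded
C_M_PER_S = 299_792_458.0     # speed of light in m/s (exact by definition)


def ticks_per_second(tick_s: float) -> float:
    """How many ticks of length tick_s fit into one second."""
    return 1.0 / tick_s


def lorentz_gamma(v_m_per_s: float) -> float:
    """Time-dilation factor for a clock moving at v relative to the observer."""
    beta = v_m_per_s / C_M_PER_S
    return 1.0 / math.sqrt(1.0 - beta * beta)


if __name__ == "__main__":
    print(f"one Planck time per tick: {ticks_per_second(PLANCK_TIME_S):.3e} ticks per second")
    # A clock moving at 30 m/s (roughly highway speed), seen from the roadside:
    print(f"gamma - 1 at 30 m/s: {lorentz_gamma(30.0) - 1.0:.1e}")
```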