Evilms

Banned

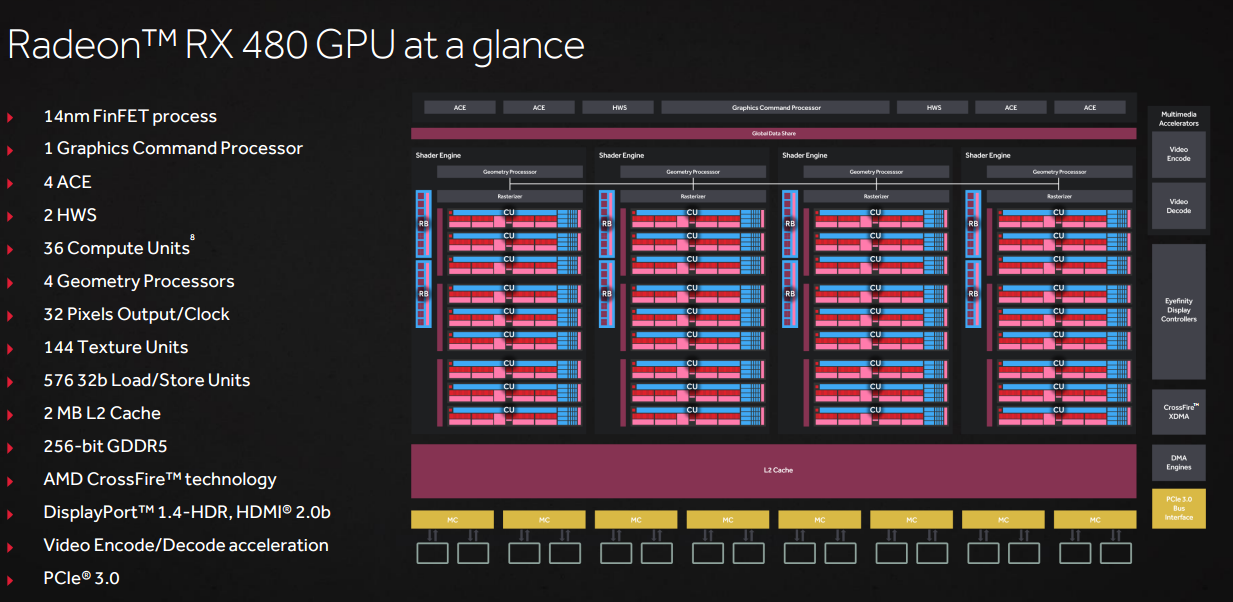

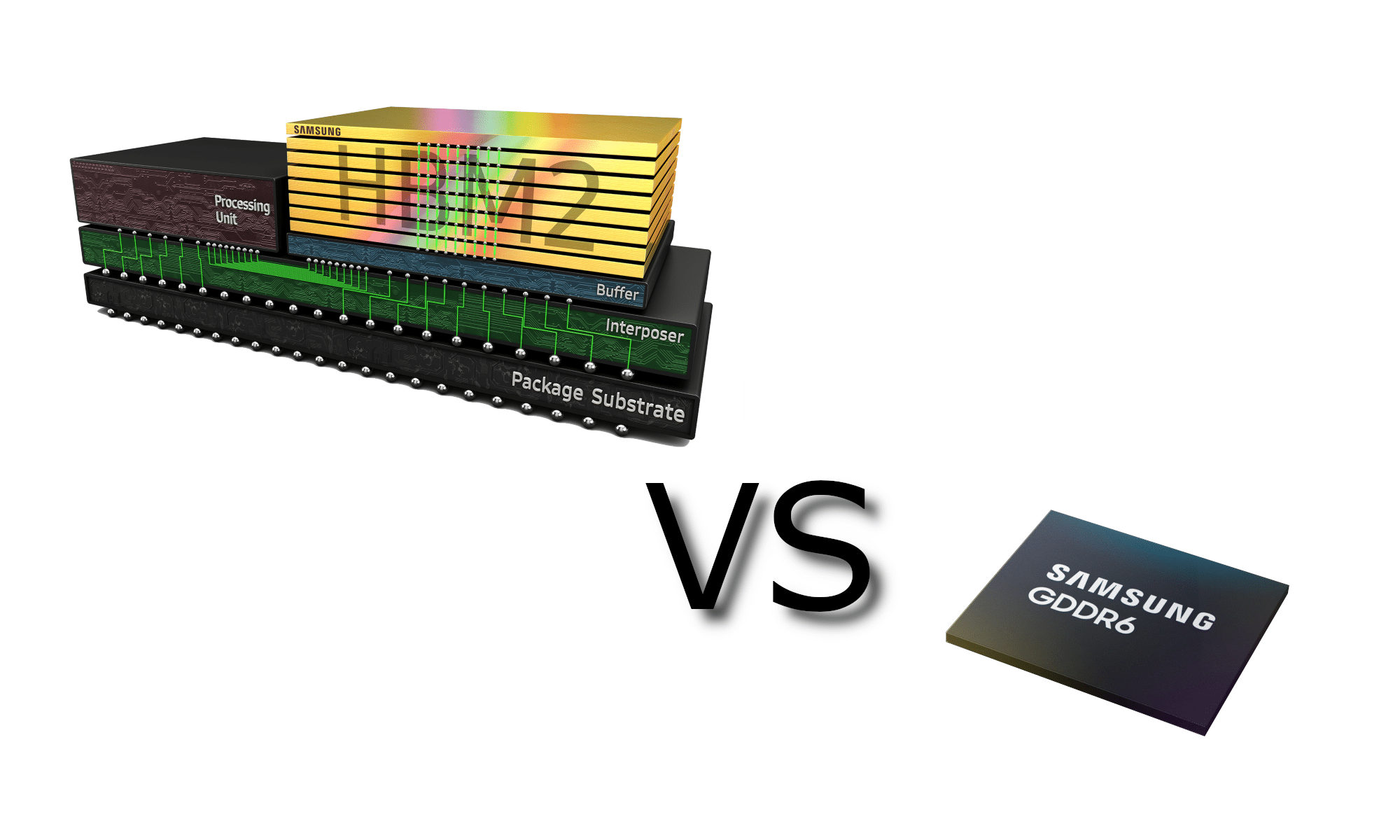

Does using HBM2 reduce the size of the SoC compared to GDDR6?

A PS5 with a 320mm² SoC vs. Anaconda's 380mm² would lend some credibility to the HBM rumor for PS5.

HBM is more expensive than GDDR and does not perform better than GDDR in gaming. I strongly doubt Sony will go this route, since it would only drive up the cost unnecessarily.

GDDR6 VS HBM2 Memory - TechSiting

Both GDDR6 and HBM2 are types of memory that enable processors to perform better in a wide variety of applications due to their high memory bandwidth. In this
