IntentionalPun


Possible, but not confirmed. You're just supposing from your "random thoughts".

You gotta be fucking kidding me here. I'm posting a slide from a Microsoft presentation, surely vetted by AMD... and you're posting tweets from some random dude on Twitter with ~1,300 followers.

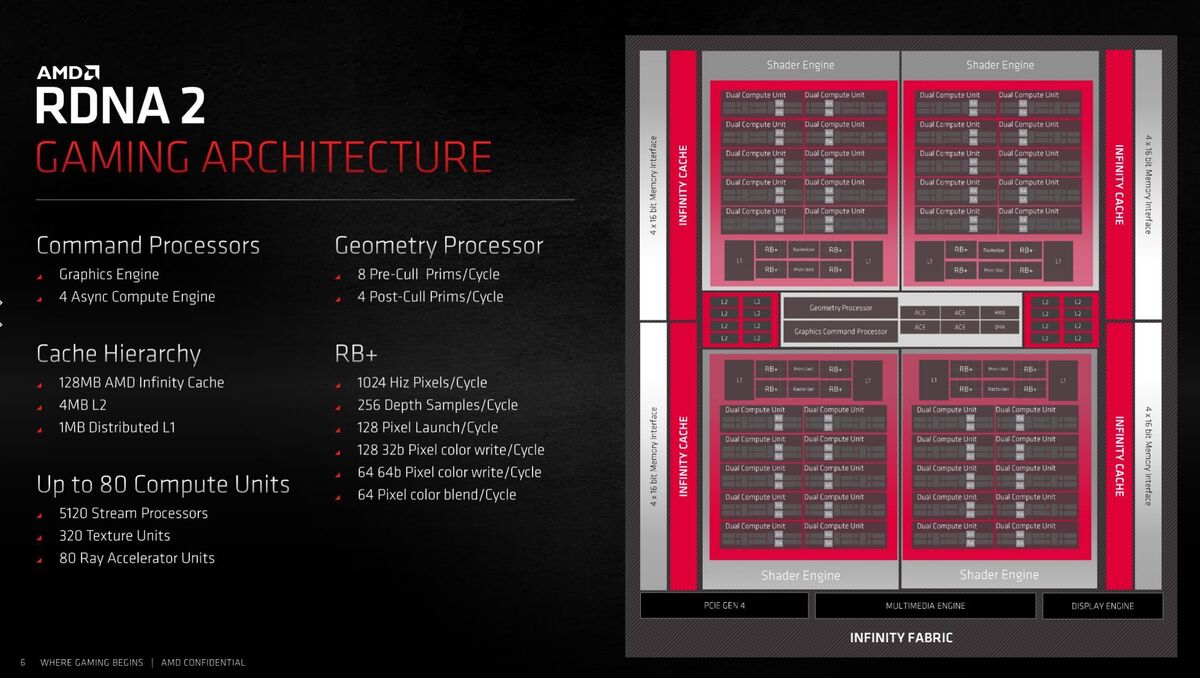

AMD is developing their super resolution for RDNA 2. Where are the Tensor Cores? There's no accelerated hardware, only shaders. Why do you assume they've made hardware modifications for Mesh Shaders? More random thoughts coming from you.

How is this an answer to my question?

We have no idea whether FidelityFX Super Resolution will be locked to RDNA 2 cards or not, as we don't know whether it requires Infinity Cache or some other RDNA 2 feature.

If it doesn't, you can bet AMD will enable it on older cards, as they have a long history of not arbitrarily locking features to newer generations when older ones could support them.

And that's why it'd be really shitty of them to lock it down just to compete with an nVidia feature, unlike something like Mesh Shaders, which we know resides on specific hardware units.

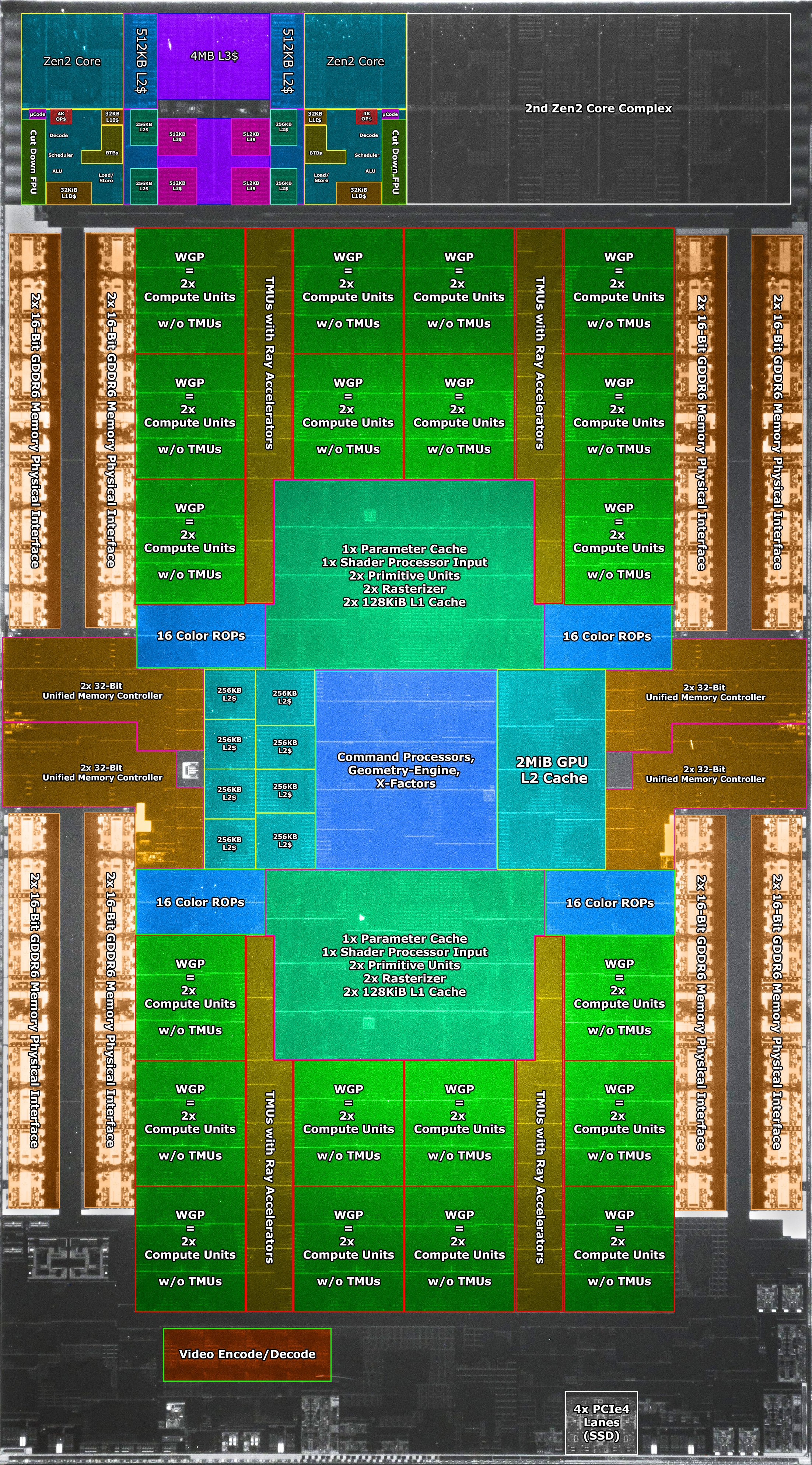

Not even sure why I need to explain this logic, considering MS listed them as a separate item on a GPU hardware block diagram.

But just... you know... using logic here... to support an argument... backed by official sources.
