This is definitely the most energy-efficient processor I have ever seen, if this is all accurate. I kind of wish Nintendo had added another core to the CPU just to give it that extra kick.

Well, in a sense.

IBM is bad at power gating. The tighter the CPU (and this one is tight), the less of an issue that is, but if you look at an Intel Atom part idling at 0.004 Watts, the PPC750 starts showing its age.

Actual performance per Watt, though, remains impressive, yes. And I suppose the improved cache should bring it up to 2.71 DMIPS/MHz, which is what the large 1 MB cache did for the PPC476FP, so there's your performance gain over Gekko/Broadway.

I remember seeing documentation that showed the PowerPC processor of that time returning 3 times the performance of the full-scale Pentium 3. I can't find the page, but it was a 200 MHz G4 vs. a 300 MHz Pentium 3.

EDIT: Found it.

http://macspeedzone.com/archive/4.0/g4vspent3signal.html

That's a little bit unfair.

They're pitting MMX against AltiVec. AltiVec is the IBM/Freescale answer to MMX, sure... but the Pentium 3 had SSE, which was Intel's answer to stuff like AMD's 3DNow! Still, that's a comparison the G4 would be sure to win, because it had four-way single-precision floating point, something the PPC750/G3 (and the Pentium 3) didn't.

Paired singles on Gekko/Broadway make it 2-way.
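To put those SIMD widths in numbers, here is a rough sketch of how a peak single-precision GFLOPS figure is usually derived: clock × SIMD lanes × FLOPs per lane per cycle. The factor of 2 assumes one fused multiply-add per lane every cycle, and the 500 MHz G4 is a hypothetical example; neither comes from the linked pages. It is only a theoretical floating-point ceiling, not a measure of overall performance.

```python
def peak_sp_gflops(clock_mhz: float, simd_lanes: int, flops_per_lane_per_cycle: int = 2) -> float:
    """Theoretical peak single-precision GFLOPS.

    Assumption: flops_per_lane_per_cycle=2 models one fused multiply-add
    (a*b + c) per SIMD lane per cycle; real code rarely sustains this.
    """
    return clock_mhz * simd_lanes * flops_per_lane_per_cycle / 1000.0


if __name__ == "__main__":
    # Gekko @ 486 MHz with 2-way paired singles
    print(f"Gekko: {peak_sp_gflops(486, 2):.3f} GFLOPS")
    # Hypothetical G4 @ 500 MHz with 4-way AltiVec
    print(f"G4:    {peak_sp_gflops(500, 4):.3f} GFLOPS")
```

At the same clock, doubling the lanes doubles the ceiling, which is why the G4 wins a vector-heavy benchmark; it says nothing about integer or branchy code.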

That is why I was curious. Is GFLOPS an absolute measure of overall performance capability? By my estimate, going by the old data, Gekko should have been a little over twice as strong as the Xbox 1 CPU. How do the Nintendo-based PPC750s stand up to the G4, performance- and efficiency-wise?

http://www.oocities.org/imac_driver/cpu.html

Not so.

Gekko had 1125 DMIPS @ 486 MHz

XCPU had 951.64 DMIPS @ 733 MHz

An 866 MHz Pentium III would make it even, at 1124.31 DMIPS, and a Broadway @ 729 MHz should be 1687.5 DMIPS: still not close to double, despite being clocked at almost 733 MHz.
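The arithmetic above is just per-clock normalization followed by linear clock scaling. A minimal sketch, assuming DMIPS scales linearly with clock at a fixed DMIPS/MHz (no memory or cache bottleneck), and treating the XCPU's per-clock figure as representative of a Pentium III as the post does:

```python
def dmips_per_mhz(dmips: float, mhz: float) -> float:
    """Per-clock efficiency: DMIPS divided by clock speed in MHz."""
    return dmips / mhz


def scale_to_clock(dmips: float, from_mhz: float, to_mhz: float) -> float:
    """Assumption: DMIPS scales linearly with clock at a fixed DMIPS/MHz."""
    return dmips * to_mhz / from_mhz


GEKKO_DMIPS, GEKKO_MHZ = 1125.0, 486.0
XCPU_DMIPS, XCPU_MHZ = 951.64, 733.0

if __name__ == "__main__":
    print(f"Gekko: {dmips_per_mhz(GEKKO_DMIPS, GEKKO_MHZ):.4f} DMIPS/MHz")
    print(f"XCPU:  {dmips_per_mhz(XCPU_DMIPS, XCPU_MHZ):.4f} DMIPS/MHz")
    p3_866 = scale_to_clock(XCPU_DMIPS, XCPU_MHZ, 866)
    broadway = scale_to_clock(GEKKO_DMIPS, GEKKO_MHZ, 729)
    print(f"Pentium III @ 866 MHz: {p3_866:.2f} DMIPS")
    print(f"Broadway @ 729 MHz:    {broadway:.1f} DMIPS")
    print(f"Broadway / XCPU:       {broadway / XCPU_DMIPS:.2f}x")
```

This reproduces the 1124.31 and 1687.5 DMIPS figures and puts Broadway at roughly 1.77× the XCPU, short of the "twice as strong" estimate.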

It's still better, and it supported compression, which was a plus (more here).

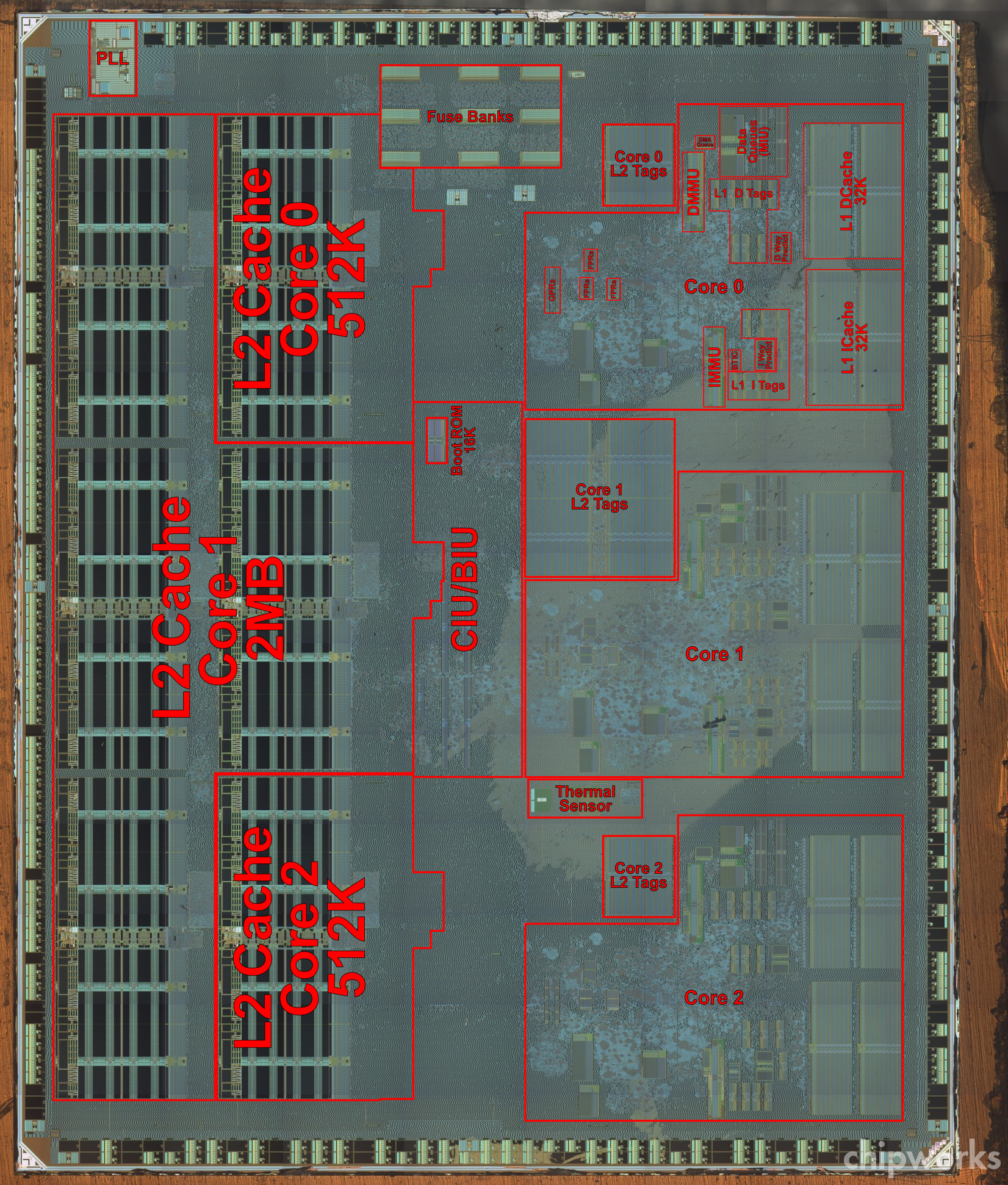

The PPC750 sure has a lot of derivatives now that I look at it. Do we know for certain which one Espresso uses?

https://www-01.ibm.com/chips/techlib/techlib.nsf/techdocs/B22567271129DE7F87256ADA007645A6/$file/PowerPC_750L_vs_750CXe_Comparison.pdf

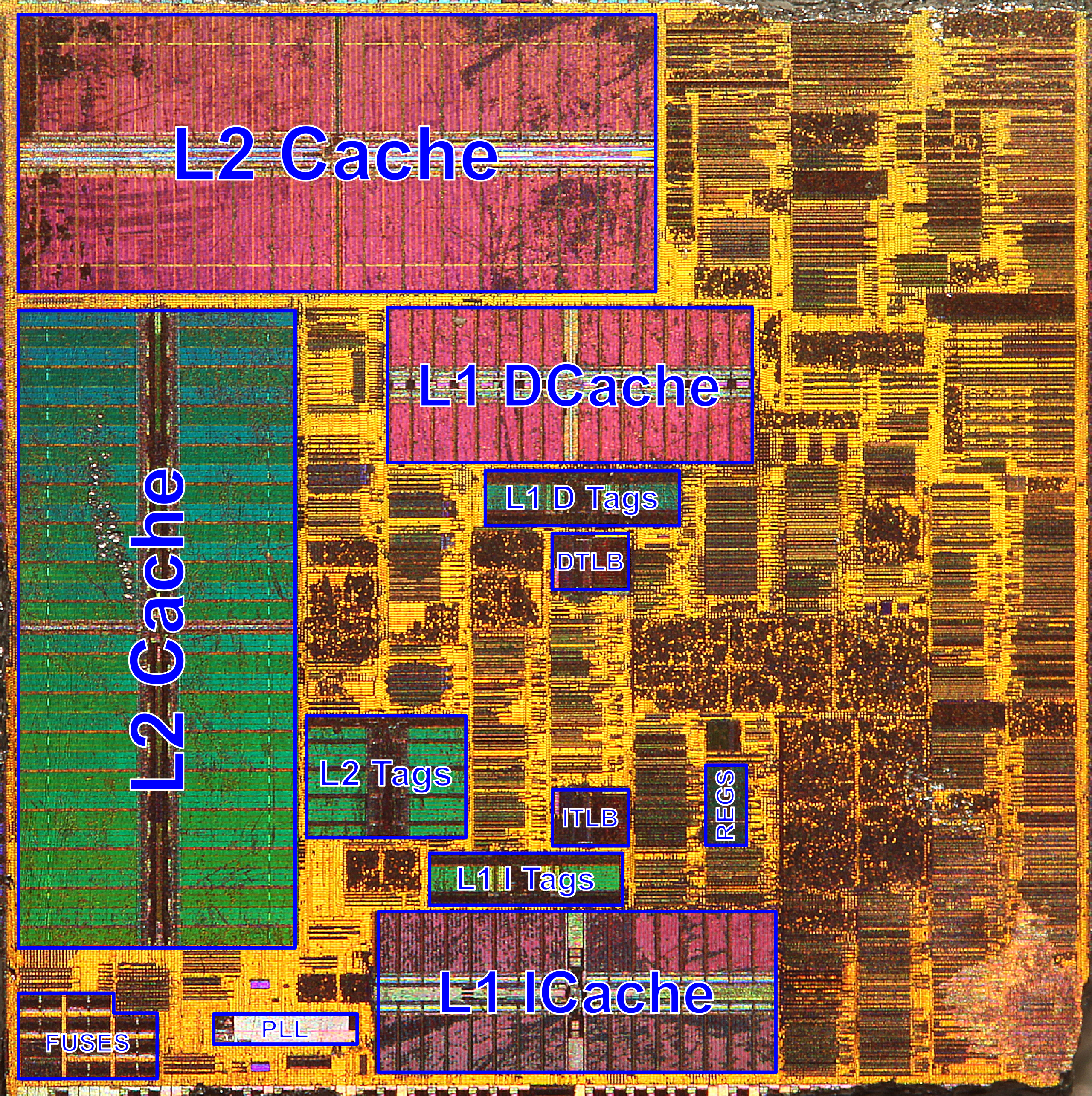

An evolved PPC750CL for sure.

That's because only Gekko and the 750CL/Broadway had paired singles (perhaps the CXe had them too, just disabled in order to increase Gekko yields; I dunno).

The only alternative IBM had to those would have been the canceled PPC750 VX, which would have had AltiVec (read: four-way single-precision floating point), and which I suspect was never even close to production.

That leaves them with core shrinking and supercharging the cache of a PPC750CL.

Like I said, the worst-case scenario is keeping in line with the 256 KB L2 performance and delivering 2877.32 DMIPS; the best-case scenario is 3368.53 DMIPS (2.71 DMIPS/MHz) per core.
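Both per-core figures are a clock speed multiplied by a DMIPS/MHz rate. The post doesn't state the clock, but its numbers imply about 1243 MHz (3368.53 / 2.71), which matches the ~1.24 GHz commonly reported for Espresso; that clock is an assumption here. A minimal sketch, assuming linear scaling with clock:

```python
# Hypothetical: clock implied by the post's figures (3368.53 / 2.71 = 1243).
ESPRESSO_MHZ = 1243.0

GEKKO_DMIPS_PER_MHZ = 1125.0 / 486.0  # worst case: same per-clock rate as Gekko/Broadway
BEST_CASE_DMIPS_PER_MHZ = 2.71        # best case: PPC476FP-like gain from a larger cache


def per_core_dmips(clock_mhz: float, dmips_per_mhz: float) -> float:
    """Per-core DMIPS estimate, assuming linear scaling with clock."""
    return clock_mhz * dmips_per_mhz


if __name__ == "__main__":
    worst = per_core_dmips(ESPRESSO_MHZ, GEKKO_DMIPS_PER_MHZ)
    best = per_core_dmips(ESPRESSO_MHZ, BEST_CASE_DMIPS_PER_MHZ)
    print(f"Worst case: {worst:.2f} DMIPS per core")
    print(f"Best case:  {best:.2f} DMIPS per core")
    print(f"Cache uplift: {best / worst - 1:.1%}")
```

The worst case comes out at 2877.31 here; the post's 2877.32 presumably reflects rounding of the DMIPS/MHz rate. The gap between the two scenarios is about 17% per core.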

If a single-core PPC750 takes in three instructions and returns two each cycle, how would that compare to a hyper-threaded processor core, or to a dual-core processor where each core returned a single instruction per cycle? I always thought it would play out pretty much the same, but I remember from a long time ago, during the Core 2 Duo days, people saying that being dual-core gave an extreme performance boost over hyper-threading, even though both process two instructions at once. I never quite understood that.

That's kinda hard to explain without getting too technical and risking overstepping my boundaries.

But it's basically because hyper-threading uses headroom that would otherwise go unused (I usually use the example of a house with a high ceiling, where not much goes above your head, leaving unused space). That's why it's a 30% performance boost at most, usually more like 10-15%. That second thread is not meant to run code with the same priority and density as the main thread; it's for secondary stuff, to cram more work into each cycle. It would also be useless on a short-pipeline design like the Espresso/PPC750 (a short pipeline eliminates the "high ceiling" I was talking about, and it also makes the CPU more efficient).
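A toy model can make the "high ceiling" argument concrete. It assumes a core that can issue up to `width` instructions per cycle, and two threads that each stall on some fraction of cycles (offering nothing) and otherwise offer between 1 and `width` independent instructions. With SMT both threads share one core's issue slots; with two cores each thread gets its own. The width and stall rates are made-up parameters, with a lower stall rate standing in loosely for a short pipeline; the printed percentages are illustrative only and are not meant to reproduce the 10-30% figures above.

```python
import random


def simulate(cycles: int, width: int, stall_prob: float, seed: int = 0) -> tuple[int, int, int]:
    """Toy issue-slot model (hypothetical parameters, not measured data).

    Returns total instructions issued for: one thread on one core,
    two threads sharing one core (SMT), and two threads on two cores.
    """
    rng = random.Random(seed)
    single = smt = dual = 0
    for _ in range(cycles):
        offers = []
        for _thread in range(2):
            if rng.random() < stall_prob:
                offers.append(0)  # thread stalled this cycle (e.g. cache miss)
            else:
                offers.append(rng.randint(1, width))  # independent instructions ready
        a, b = offers
        single += min(a, width)                 # one thread, one core
        smt += min(a + b, width)                # two threads share one core's slots
        dual += min(a, width) + min(b, width)   # each thread has its own core
    return single, smt, dual


if __name__ == "__main__":
    for stall in (0.5, 0.1):
        s, h, d = simulate(100_000, width=3, stall_prob=stall)
        print(f"stall={stall}: SMT gain {h / s - 1:.0%}, dual-core gain {d / s - 1:.0%}")
```

Because a shared core can never issue more than `width` instructions per cycle, SMT can't beat two separate cores in this model, and the fewer idle slots the main thread leaves (lower stall rate), the less room there is for the second thread.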

A second core though... is a second core, certainly preferable to HT.