10:05-11:00: Practical DirectX 12 - Programming Model and Hardware Capabilities

This session will do a deep dive into all the latest performance advice on how to best drive DirectX 12, including work submission, render state management, resource bindings, memory management, synchronization, multi-GPU, swap chains and the new hardware capabilities.

Speakers: Gareth Thomas - AMD & Alex Dunn - NVIDIA

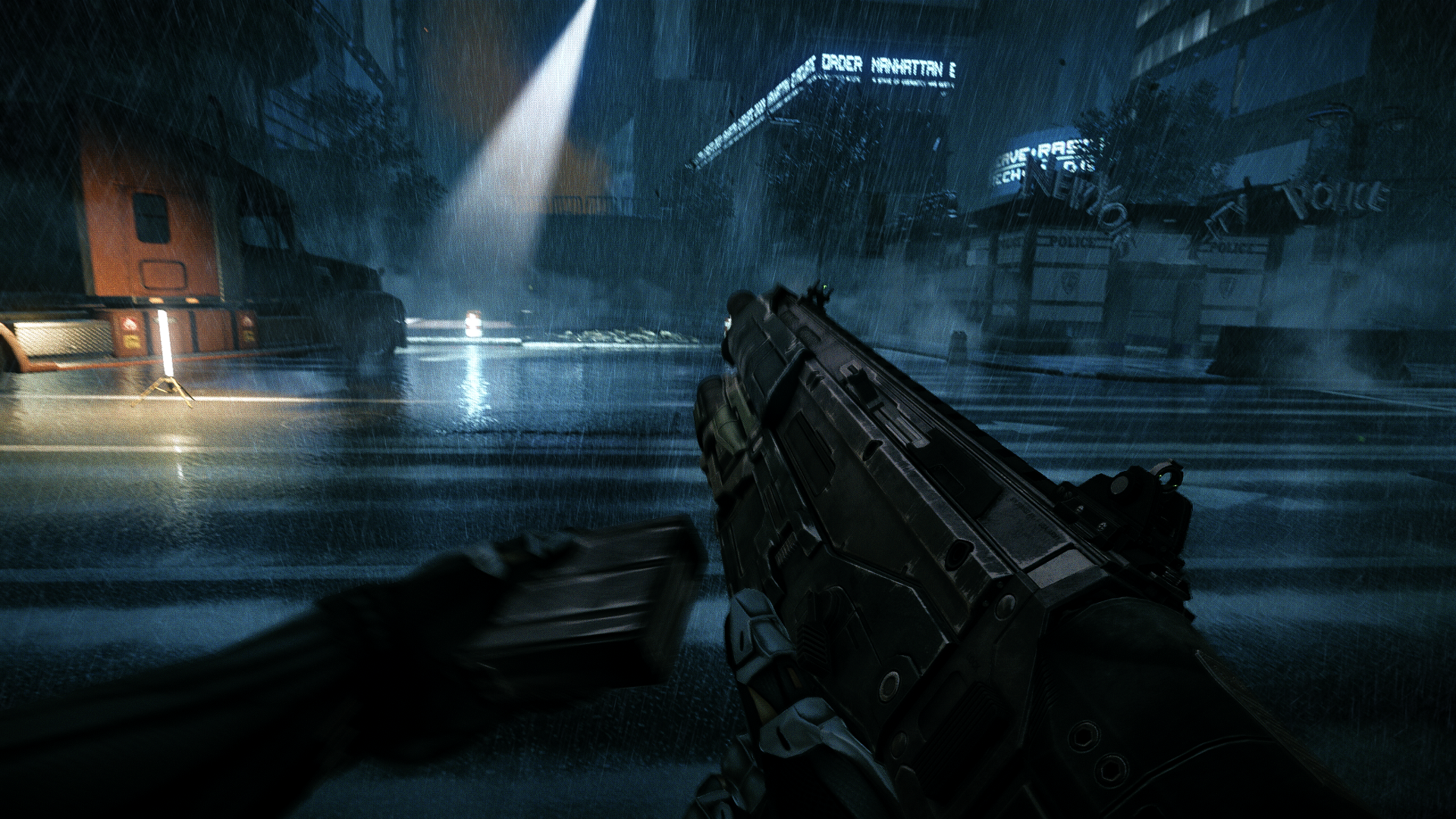

11:20-11:50: Rendering Hitman with DirectX 12

This talk will give a brief overview of how the Hitman Renderer works, followed by a deep dive into how we manage everything with DirectX 12, including Pipeline State Objects, Root Signatures, Resources, Command Queues and Multithreading.

Speaker: Jonas Meyer - IO Interactive

12:00-12:30: Developing The Northlight Engine: Lessons Learned

Northlight is Remedy Entertainment's in-house game engine which powers Quantum Break. In this presentation we discuss how various rendering performance and efficiency issues were solved with DirectX, and suggest design guidelines for modern graphics API usage.

Speaker: Ville Timonen - Remedy Entertainment

12:30-13:20: Lunch

13:20-14:20: Culling at the Speed of Light in Tiled Shading and How to Manage Explicit Multi-GPU

This session will cover a new technique for binning and culling tiled lights that uses the rasterizer for free coarse culling and early depth testing for fast work rejection. In addition, we'll cover techniques that leverage the power of explicit multi-GPU programming.

Speakers: Dmitry Zhdan - NVIDIA & Juha Sjoholm - NVIDIA

14:40-15:40: Object Space Rendering in DirectX 12

While forward and deferred rendering have made huge advancements over the last decade, some key rendering issues remain difficult to address. Among them are arbitrary material layering, decoupling shading rate from rasterization, and shader anti-aliasing. Object space lighting is a technique inspired by film rendering approaches such as REYES. By reversing the process, shading as early as possible rather than in rasterization space, we can achieve arbitrary material layering, shader anti-aliasing, decoupled shading rates, and many more effects, all in real time.

Speaker: Dan Baker - Oxide Games

16:00-17:00: Advanced Techniques and Optimization of HDR Color Pipelines

The session explores advanced techniques for HDR color pipelines, both in the context of the new age of wide-gamut HDR displays and as applied to existing display technology. It presents a detailed look at options for optimization and quality at various stages of the pipeline, from eye adaptation, color grading, and tone mapping through film grain and final quantization.

Speaker: Timothy Lottes - AMD