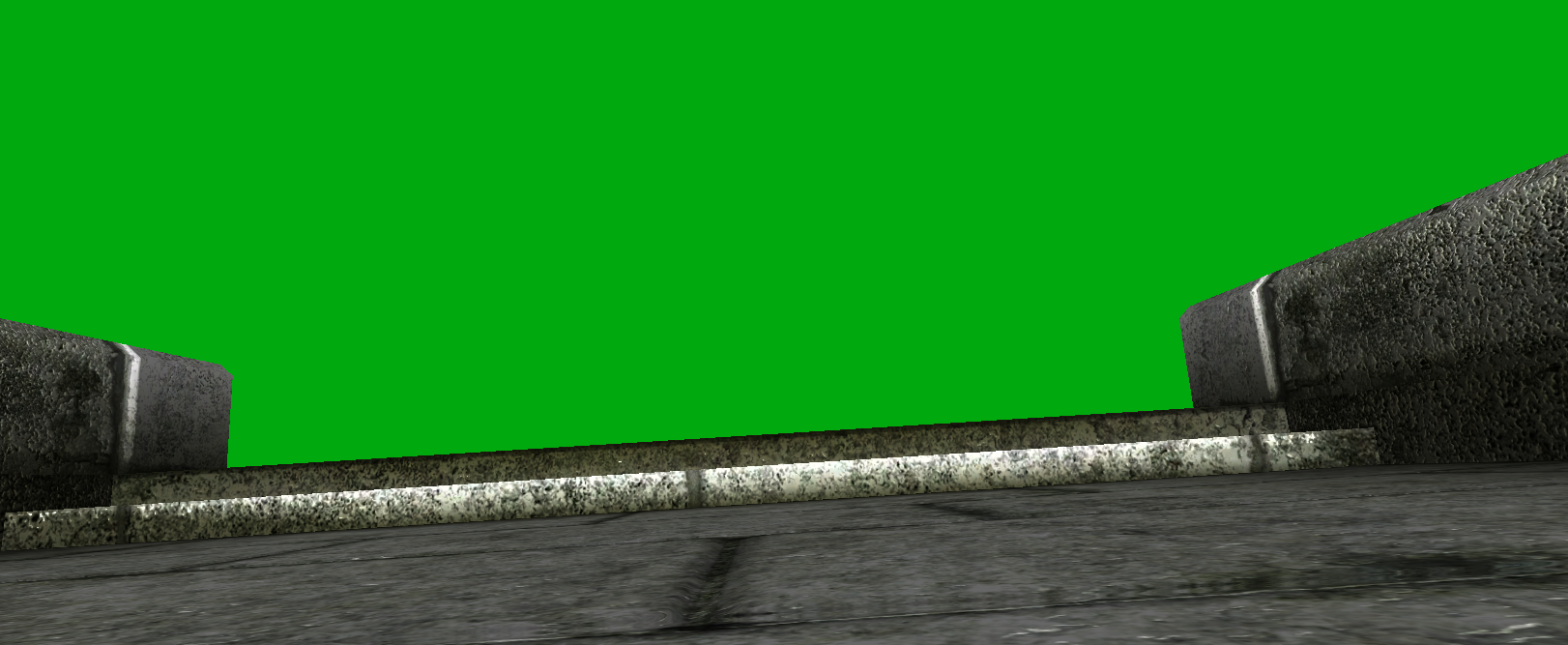

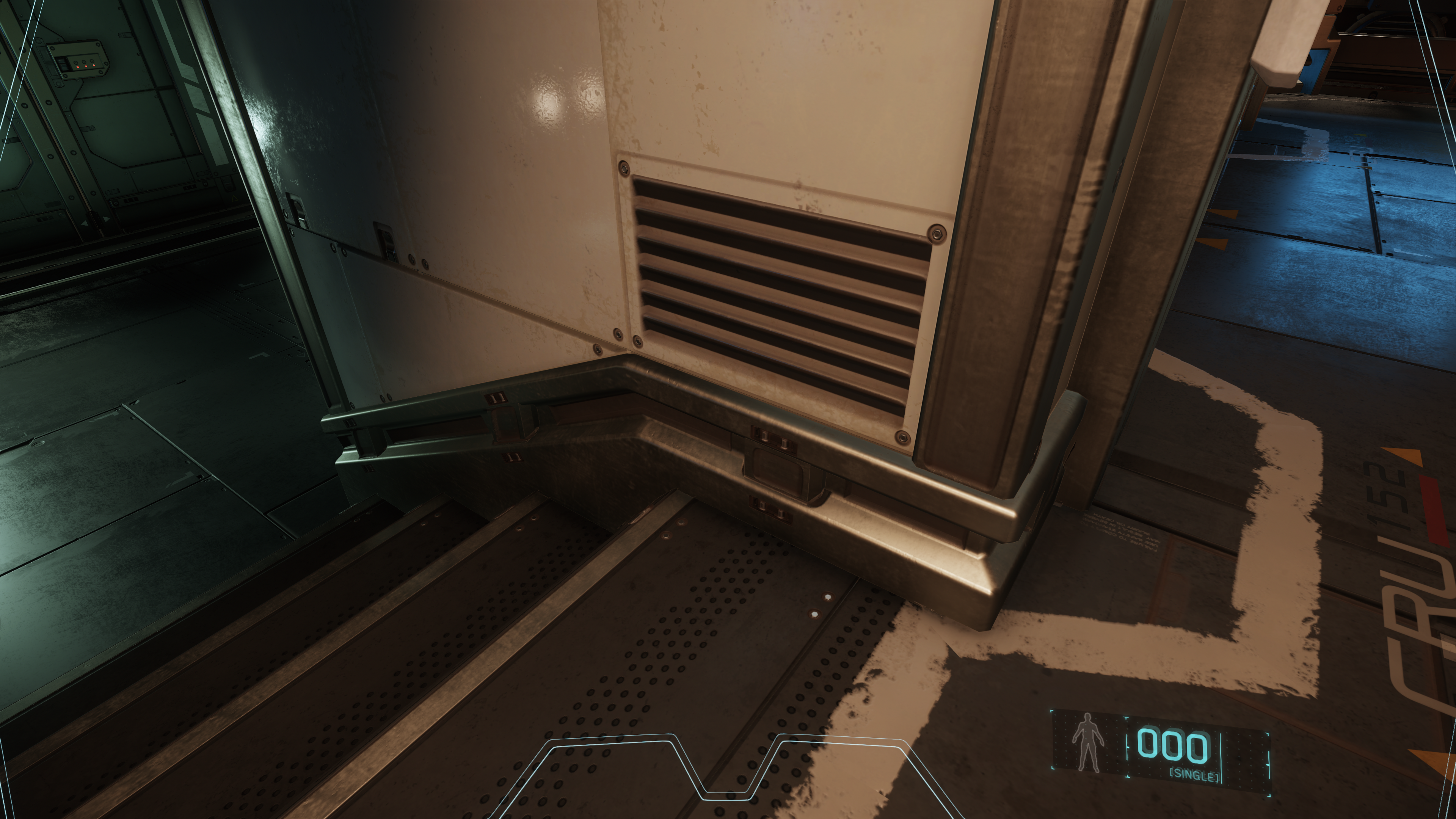

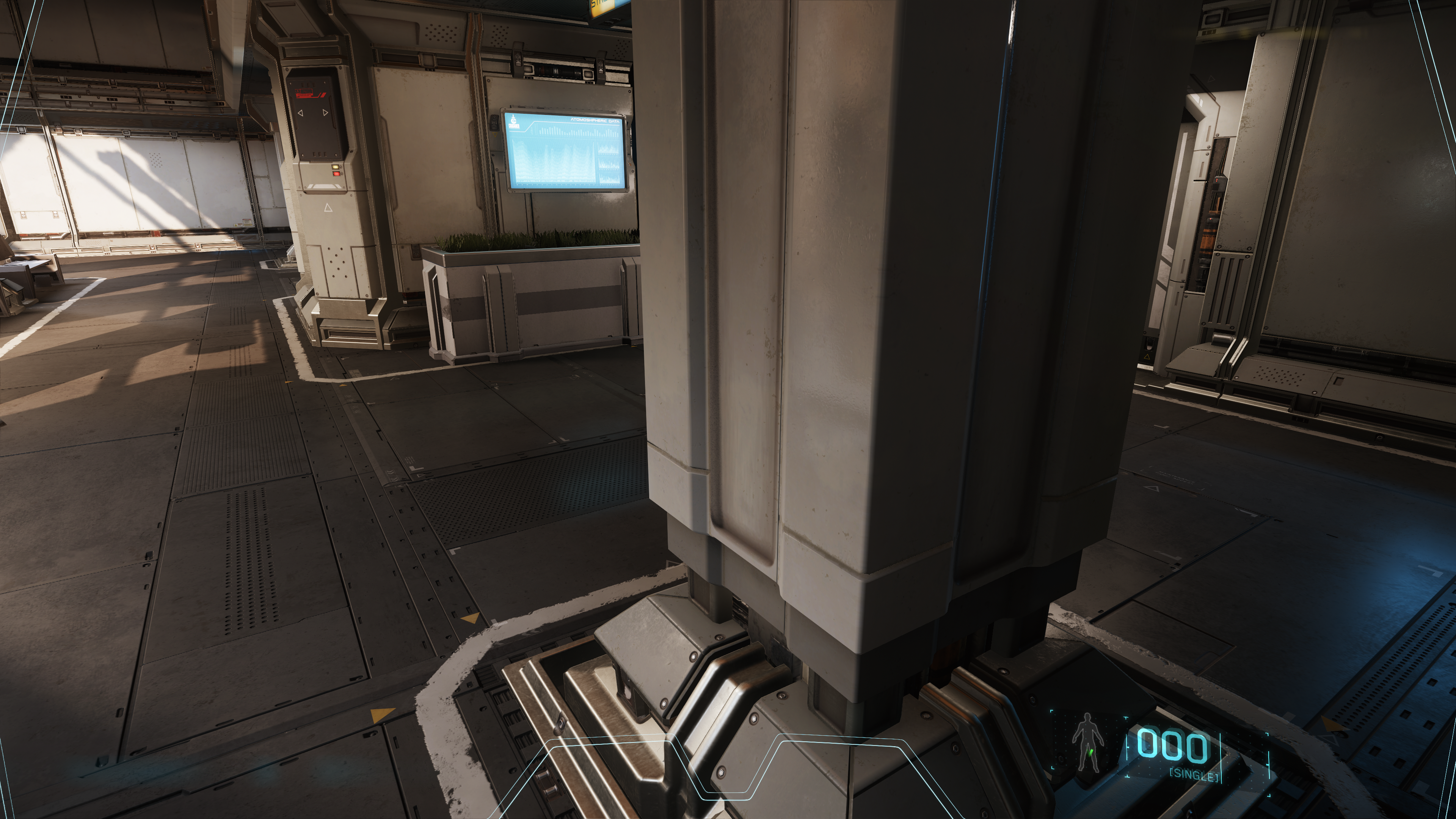

I find the prominence of the dithered fade-in quite weird. I first saw it in Just Cause 2 in 2010, and it seems to have only gotten more popular since then. I thought dithering was something we left behind in the '90s.

Fading between LODs is sort of a tricky issue.

Doing a simple cross fade between the two LODs with alpha transparency wouldn't be perfect, because the combination of the two would be transparent during the transition (two transparent objects blended together is still a transparent object). What you want is for the combination of the two to be opaque at pixels touched by both LODs and use the cross fade transparency values at pixels touched by only one of the LODs; this would technically be doable, but would require some setup prior to the blend into the main framebuffer, and you might still wind up with some depth oddities regarding how your cross-fading LOD objects interact with other transparent stuff and post-processing.
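To put a number on the "still transparent" problem, here is a minimal sketch, assuming standard "over" alpha compositing and a linear cross fade where the old LOD has alpha `1 - t` and the new LOD has alpha `t`. The function name `combined_opacity` is made up for illustration; it isn't from any engine.

```python
def combined_opacity(t: float) -> float:
    """Opacity of two overlapping cross-faded LODs at fade position t in [0, 1].

    Assumes the old LOD is drawn with alpha (1 - t) and the new LOD with
    alpha t, both composited with the usual "over" operator.
    """
    old_alpha = 1.0 - t
    new_alpha = t
    # The background leaks through whatever fraction both layers let pass.
    background_leak = (1.0 - old_alpha) * (1.0 - new_alpha)
    return 1.0 - background_leak


if __name__ == "__main__":
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"t={t:.2f}  combined opacity={combined_opacity(t):.4f}")
```

The combination is only fully opaque at the endpoints. Halfway through the fade, a quarter of whatever is behind the object shows through, even at pixels covered by both LODs.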

Fading one in and then removing the other once the new one is fully opaque could be done, but you'd still get a pop unless the new LOD was strictly larger than the old one. You could make this work seamlessly-ish by requiring that the low LOD meshes always fully enclose the high LOD meshes or vice versa, but that's sort of a bizarre requirement that would have significant implications for how things are authored.

With a dithered fade-in, the old LOD fades out with one dither mask while the new LOD fades in with the inverted dither mask, essentially switching the objects out pixel by pixel. This avoids the oddities of transparency blending approaches while also being cheaper to render. Not having to worry about what happens when an object switches between transparent and opaque also means that it plays nicely with more lighting/rendering/whatever approaches.
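A rough sketch of the mask/inverted-mask idea, assuming a 4x4 ordered (Bayer) threshold matrix tiled over screen space. The pattern choice and the names `BAYER_4X4`, `dither_threshold` and `visible_lod` are assumptions for illustration; real games may well use a different pattern or noise source, and would do this in a pixel shader with a discard rather than on the CPU.

```python
# Standard 4x4 Bayer ordered-dither matrix (values 0..15).
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def dither_threshold(x: int, y: int) -> float:
    """Per-pixel threshold in (0, 1), tiled every 4 pixels."""
    return (BAYER_4X4[y % 4][x % 4] + 0.5) / 16.0


def visible_lod(x: int, y: int, t: float) -> str:
    """Which LOD gets drawn at pixel (x, y) for fade position t in [0, 1].

    The new LOD keeps the pixel where t exceeds the threshold; the old LOD
    uses the inverted test, so every pixel belongs to exactly one of them.
    """
    return "new" if t > dither_threshold(x, y) else "old"


if __name__ == "__main__":
    t = 0.5
    for y in range(4):
        print(" ".join("N" if visible_lod(x, y, t) == "new" else "o"
                       for x in range(8)))
```

Because each pixel is written fully opaque by exactly one of the two LODs, there is no blending step at all and both objects can go through the normal opaque path, depth writes included.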

Obviously the penalty is that the dither looks like dither.