Zombie James

http://www.anandtech.com/show/8962/the-directx-12-performance-preview-amd-nvidia-star-swarm

CPU utilization:

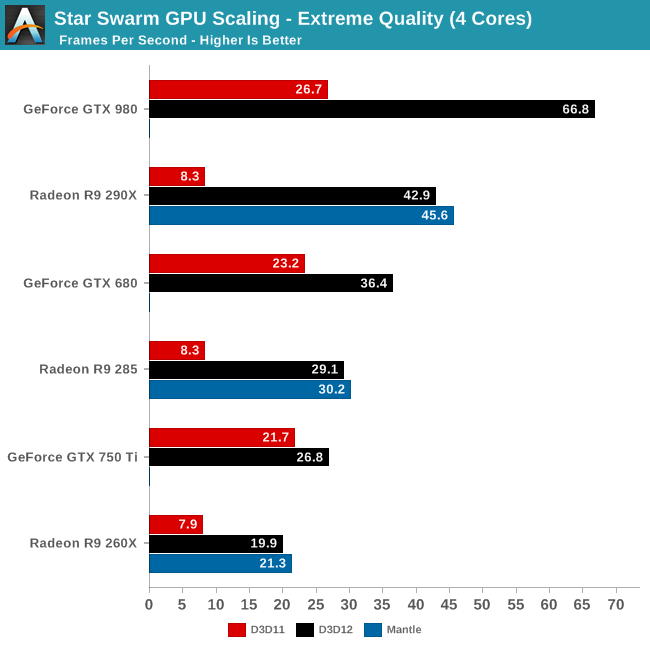

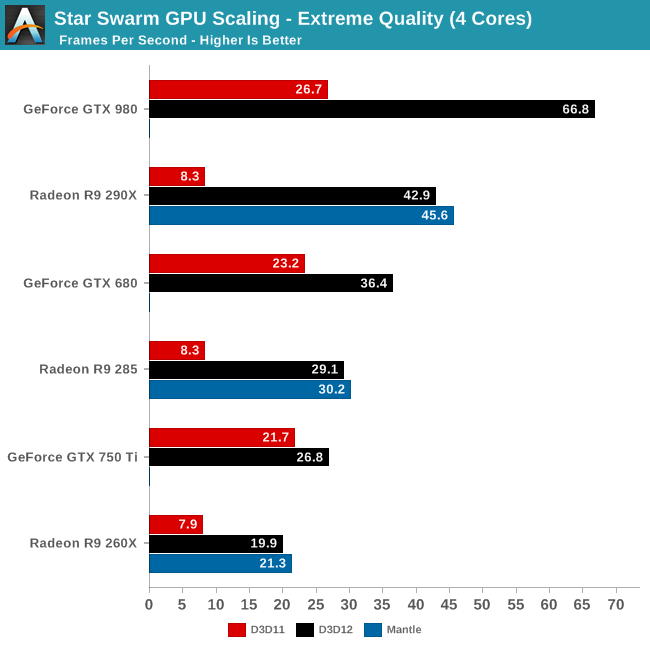

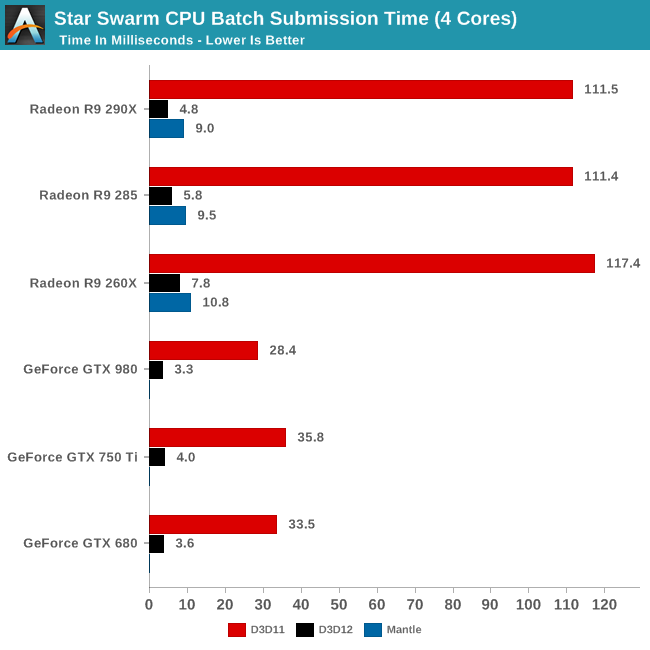

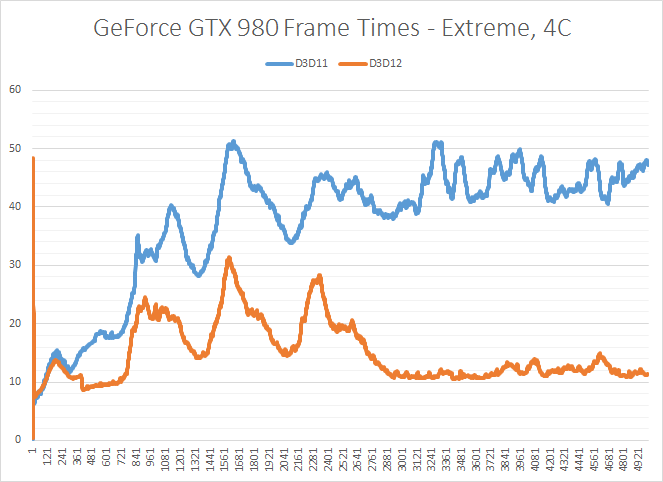

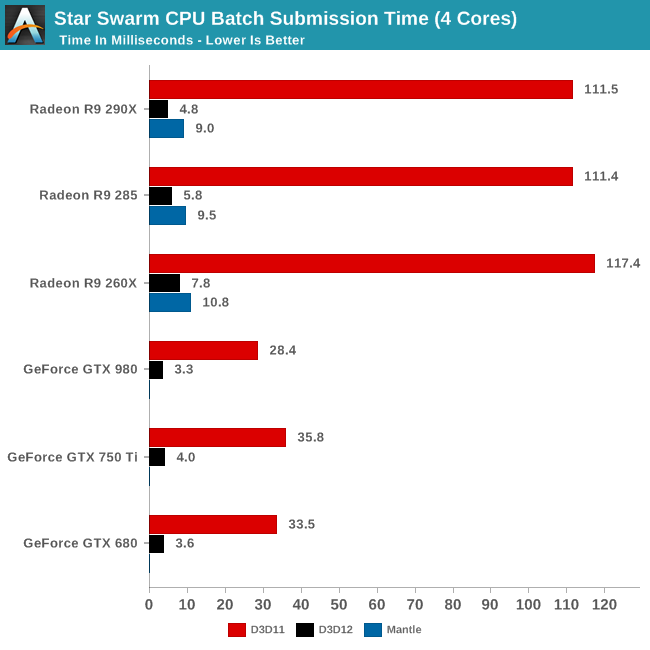

Speaking of batch submission, if we look at Star Swarm’s statistics we can find out just what’s going on with batch submission. The results are nothing short of incredible, particularly in the case of AMD. Batch submission time is down from dozens of milliseconds or more to just 3-5ms for our fastest cards, an improvement just short of a whole order of magnitude. For all practical purposes the need to spend CPU time to submit batches has been eliminated entirely, with upwards of 120K draw calls being submitted in a handful of milliseconds. It is this optimization that is at the core of Star Swarm’s DirectX 12 performance improvements, and going forward it could potentially benefit many other games as well.

Another metric we can look at is actual CPU usage as reported by the OS, as shown above. In this case CPU usage more or less perfectly matches our earlier expectations: with DirectX 11 both the GTX 980 and R9 290X show very uneven usage with 1-2 cores doing the bulk of the work, whereas with DirectX 12 CPU usage is spread out evenly over all 4 CPU cores.
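To put the quoted batch submission numbers in perspective, here is a rough back-of-the-envelope sketch. It only uses the figures from the excerpt (3-5ms, roughly 120K draw calls); the 60 fps target and the helper names `per_call_ns` and `frame_share` are my own assumptions for illustration, not anything from the article.

```python
# Back-of-the-envelope arithmetic on the quoted figures.
# DRAW_CALLS and the 3-5 ms range come from the excerpt above;
# TARGET_FPS is an assumption chosen only to give a frame budget.

DRAW_CALLS = 120_000   # "upwards of 120K draw calls"
TARGET_FPS = 60        # assumed target, not from the article


def per_call_ns(batch_ms: float, draw_calls: int) -> float:
    """Average CPU submission time per draw call, in nanoseconds."""
    return batch_ms * 1e6 / draw_calls


def frame_share(batch_ms: float, fps: int) -> float:
    """Fraction of one frame's time budget spent on batch submission."""
    frame_ms = 1000.0 / fps
    return batch_ms / frame_ms


for ms in (3.0, 5.0):
    ns = per_call_ns(ms, DRAW_CALLS)
    share = frame_share(ms, TARGET_FPS)
    print(f"{ms} ms -> {ns:.1f} ns per draw call, "
          f"{share:.0%} of a {TARGET_FPS} fps frame")
```

So at 3-5ms for 120K draw calls, each call costs somewhere around 25 to 42 nanoseconds of CPU time on average, and the whole submission would fit in well under a third of a 16.7ms frame if you were targeting 60 fps.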

Plenty more in the article. Looking good so far. RIP Mantle?