I'm surprised no one is picking apart the way the article is written.

I mean, sure, Durango's alpha dev kit is a regular PC:

That means the only piece in there that could hint at a (conservative) performance target is the GPU, just like with the Power Mac G5s used as X360 dev kits a few years back:

It was a stock Power Mac dual 2.0 GHz with 512 MB of system memory and an ATi X800 (R420) graphics card. The 512 MB was there simply because that stock model shipped with that amount. In short, that's a big bunch of nothing: that thing is just a PC.

But the article goes further into what-the-fuck-are-you-speculating land, here:

> DaE also says that Microsoft is targeting an eight-core CPU for the final retail hardware - if true, this must surely be based around Atom architecture to fit inside the thermal envelope. The hardware configuration seems difficult to believe as it is so divorced from the technological make-up of the current Xbox 360, and we could find no corroborative sources to establish the Intel/NVIDIA hook-up, let alone the eight-core CPU.

Atom!? What are these guys smoking? It makes no sense. It seems like the guy was throwing mud at the wall without really understanding what he was saying. First off, there's no off-the-shelf octo-core Atom configuration, and above all it wouldn't make any sense to build one, so chances are this ain't it. If the octo-core part is true, it's a damn Xeon workstation and the dude just went out into left field with it. That's probably not indicative in any way of what the final hardware will be; it's more likely a stock, off-the-shelf workstation.

Atom makes absolutely no freaking sense for a console. We're talking about an architecture based on the Pentium 1, cut down and limited to in-order execution; it's roughly half as efficient per clock as an ancient Pentium M, and it takes a beating from everything else on the market too.

The top-range Atom, Cedarview, puts out 5333 DMIPS @ 2.13 GHz. That's a little over double the performance of the Celeron 733 in the original Xbox, and in fact less integer performance than the X360 CPU on a per-core basis: 5333 DMIPS versus 6400 DMIPS per core. (And the X360 CPU itself couldn't manage to triple the Xbox 1's per-core integer performance despite having more than 4 times the clock rate. That's not because the Pentium III was mighty good at that point, and it really isn't now, which means this CPU is as subpar as it gets; adding more of them doesn't make them a good choice.)
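
A quick sanity check of the per-core numbers above, as a small Python sketch. The DMIPS figures and the 733 MHz Celeron clock are the ones quoted in this post; the 3.2 GHz clock is the X360 CPU's known frequency. Dhrystone is only a rough integer yardstick, so treat the ratios as ballpark figures.

```python
# Per-core integer figures as quoted in the post (Dhrystone MIPS).
ATOM_CEDARVIEW_DMIPS = 5333   # top-range Atom Cedarview @ 2.13 GHz
XENON_DMIPS_PER_CORE = 6400   # one X360 CPU core @ 3.2 GHz

# Clock speeds in MHz.
XENON_MHZ = 3200
XBOX1_CELERON_MHZ = 733


def ratio(a: float, b: float) -> float:
    """Return how many times larger a is than b."""
    return a / b


atom_vs_xenon_core = ratio(ATOM_CEDARVIEW_DMIPS, XENON_DMIPS_PER_CORE)
xenon_vs_xbox1_clock = ratio(XENON_MHZ, XBOX1_CELERON_MHZ)

print(f"Atom core vs X360 core: {atom_vs_xenon_core:.2f}x")            # 0.83x
print(f"X360 clock vs Xbox 1 Celeron clock: {xenon_vs_xbox1_clock:.2f}x")  # 4.37x
```

So a single Atom core lands at roughly 83% of one X360 core, while the X360 CPU runs at more than 4x the original Xbox's clock.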

Multiplying 5333 by 8 cores means almost three times the complexity (only one core short of tripling the X360's three-core configuration) while only managing to just barely surpass double the X360's offering. This means even current-gen ports could be a pain in the behind if they go with Atom, since it can't even match the per-core performance the X360/PS3 had; and making up for that with more complexity (needing to use 8 cores) reminds me of the Saturn or the PS3. It's just dumb.
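
The aggregate comparison can be sketched the same way. This is a best-case sketch that assumes every core's DMIPS simply adds up (perfect scaling), which real games never achieve; the per-core figures are the ones quoted in this post.

```python
ATOM_DMIPS_PER_CORE = 5333
XENON_DMIPS_PER_CORE = 6400
ATOM_CORES = 8
XENON_CORES = 3


def aggregate_dmips(per_core: int, cores: int) -> int:
    """Best-case total: assumes perfect scaling across every core."""
    return per_core * cores


atom_total = aggregate_dmips(ATOM_DMIPS_PER_CORE, ATOM_CORES)     # 42664
xenon_total = aggregate_dmips(XENON_DMIPS_PER_CORE, XENON_CORES)  # 19200

print(f"Cores: {ATOM_CORES / XENON_CORES:.2f}x the X360")  # 2.67x
print(f"DMIPS: {atom_total / xenon_total:.2f}x the X360")  # 2.22x
```

Roughly 2.67x the cores for roughly 2.22x the integer throughput, and that's before any real-world multithreading losses.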

And we're talking about 2.5 DMIPS/MHz for Atom Cedarview/Valleyview (the latest models); current ARM Cortex-A9s match that very same 2.5 DMIPS/MHz while wasting less energy per clock. So if they were moving from PPC to something else, they'd be stupid to go with x86 if that meant using Atoms. Also, since ARM designs are licensed to various manufacturers and produced in higher quantities, chances are they'd be cheaper too. I'm not saying ARM is likely, though; I'm saying that whoever suggests Atom is out of his mind and just threw a speculation article out into nowhere.
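
DMIPS/MHz is just per-core DMIPS divided by clock, so the per-clock comparison is easy to check against the figures above. In this sketch the 2.5 DMIPS/MHz Cortex-A9 rate is the one quoted in this post, and running an A9-class core at 2.13 GHz is a purely hypothetical scenario used to line it up against Cedarview.

```python
def dmips_per_mhz(dmips: float, mhz: float) -> float:
    """Per-core Dhrystone MIPS delivered per MHz of clock."""
    return dmips / mhz


def dmips_at(rate: float, mhz: float) -> float:
    """Per-core DMIPS for a core with the given DMIPS/MHz rate at a given clock."""
    return rate * mhz


atom_rate = dmips_per_mhz(5333, 2130)    # Cedarview @ 2.13 GHz
xenon_rate = dmips_per_mhz(6400, 3200)   # X360 core @ 3.2 GHz
CORTEX_A9_RATE = 2.5                     # rate quoted in the post

print(f"Atom:  {atom_rate:.2f} DMIPS/MHz")   # 2.50
print(f"X360:  {xenon_rate:.2f} DMIPS/MHz")  # 2.00
# Hypothetical: an A9-class core clocked at the same 2.13 GHz.
print(f"A9 @ 2130 MHz: {dmips_at(CORTEX_A9_RATE, 2130):.0f} DMIPS")  # 5325
```

Same rate per clock means the same integer ballpark at the same clock; the post's point is that the ARM part gets there while wasting less energy.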

And I'm not even touching the floating-point performance of that turd, the one thing current-gen CPUs had that could be considered good; the Atom doesn't have it.

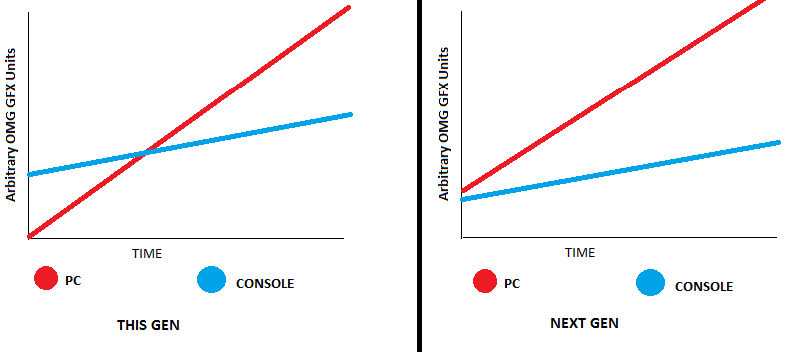

The rest... The GPU doesn't really matter much, but being the odd hardware out this gen can't help them. If the other two are using ATi, then the know-how about what ATi GPUs usually do right, their lesser-known features, and their performance optimizations/penalties will be somewhat shared between them (and thus games will be optimized for ATi from the ground up), making the one with Nvidia the most radically different. I can't see it being too much of a factor, but it does matter somewhat.

As for the memory configuration, sure, it's a devkit (actually, it's a stock PC workstation used as a preliminary development kit, but I'll go along with the crappy article), but going over 4 GB on the real thing would be irresponsible and would put them in a bad place once price drops became a reality among competitors; even 4 GB kind of does.

GDDR3/DDR3 chips exist in 512 MB configurations, so 8 GB would require 16 chips and 4 GB would require 8 chips. GDDR5 only exists in 256 MB configurations, so we're talking 32 chips for 8 GB and 16 for 4 GB. Even hybrid configurations would still mean a lot of chips, considering the X360 only had 4 memory chips.
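
The chip counts are plain division, sketched below in Python. The per-chip capacities and the X360's 4-chip count are the figures given in this post, not a survey of what memory vendors ship.

```python
import math

# Per-chip capacities as stated in the post, in MB.
CHIP_MB = {"GDDR3/DDR3": 512, "GDDR5": 256}
X360_CHIPS = 4  # X360 memory chip count, as given in the post


def chips_needed(total_gb: int, chip_mb: int) -> int:
    """Number of chips needed to reach total_gb using chip_mb-sized parts."""
    return math.ceil(total_gb * 1024 / chip_mb)


for kind, size in CHIP_MB.items():
    for total_gb in (4, 8):
        n = chips_needed(total_gb, size)
        print(f"{kind:10} {total_gb} GB -> {n:2} chips ({n // X360_CHIPS}x the X360)")
```

That's 8 or 16 chips with 512 MB parts and 16 or 32 with 256 MB parts, i.e. 2x to 8x the X360's board count.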

4 GB of memory this gen would be a home run; putting 8-12 GB in the title of a spec leak for a future console is kind of a tabloid thing to do.

Very bad article, expected more from Digital Foundry.