brain_stew


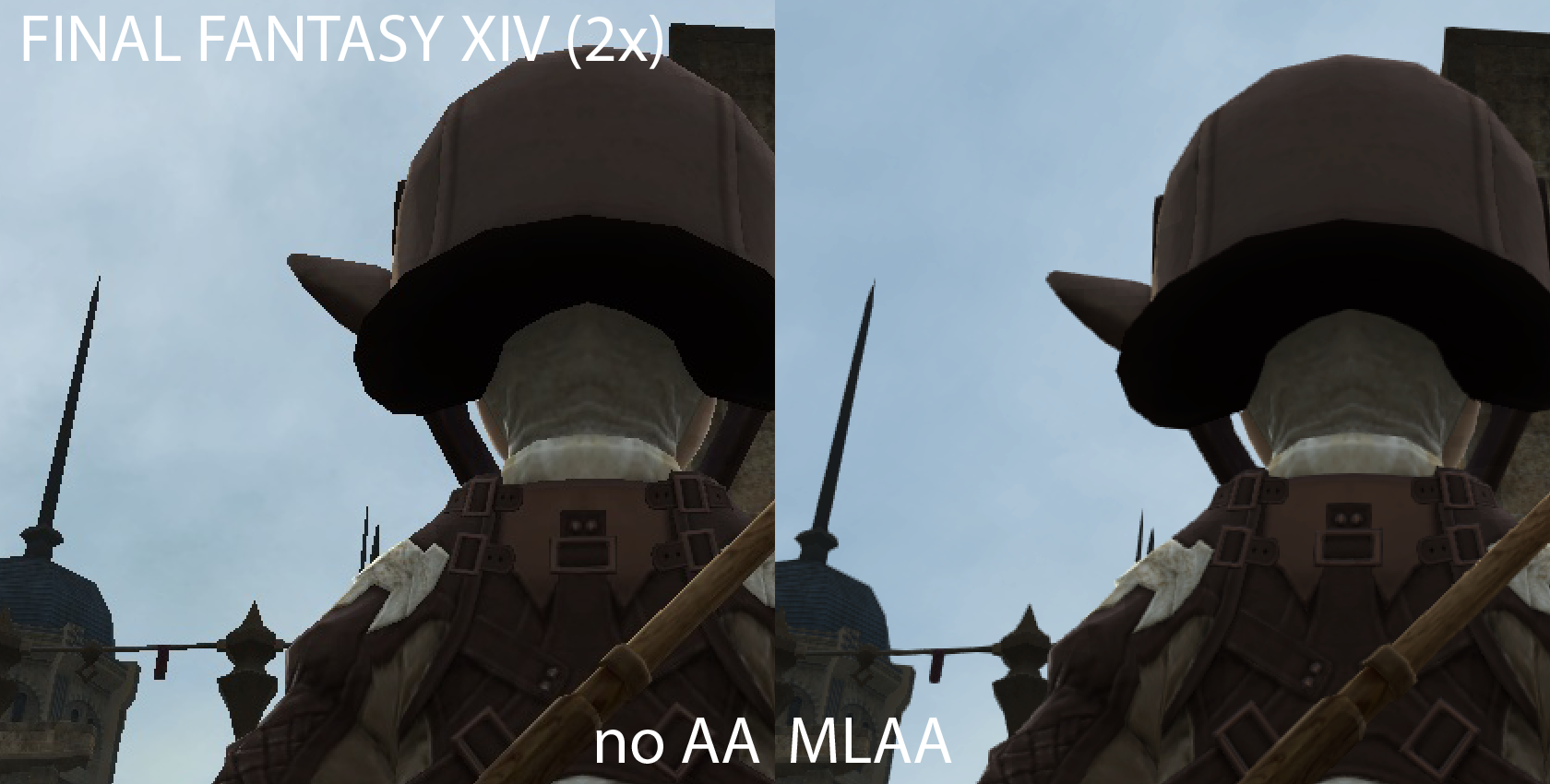

UPDATE 3: Comparison pics time!

If you want to take your own screenshots, simply run your games in windowed mode and use the Windows 7 Snipping Tool. FRAPS will not work.

UPDATE 2: Now working on all 5xxx series cards!

Simply install the driver from the link below (the 10.10a hotfix with the MLAA edit slipstreamed in, provided by mr_nothin), then restart, and you should have the option to enable MLAA in CCC:

http://www.mediafire.com/?5h6cpooivo4sbox

If this doesn't work, try adding the MLAA option manually through the registry, as described further down in this post.

RadeonPro is currently being updated, so soon you'll be able to apply MLAA per application without any driver or registry fiddling.

----------------------------------------------------------------------------------------

As many know, MSAA is incompatible with multiple render targets (MRTs) under DX9, and as MRTs have grown in popularity, a growing number of PC games ship without any AA solution at all; notable examples include Dead Space and GTA 4. It's sometimes possible to force brute-force supersampling, but even when this does work (and it's often a crapshoot), the insane performance penalty makes it rather infeasible in modern games.
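To put the supersampling penalty in numbers: SSAA shades every pixel several times, so the shading work grows roughly in step with the sample count. The sketch below assumes cost is simply proportional to the number of shaded samples, which ignores memory bandwidth and the final downsample, so treat it as a rough illustration only.

```python
# Rough shading-work comparison at 1080p.
# Assumption: cost scales with the number of shaded samples (bandwidth and
# the resolve/downsample pass are ignored).
WIDTH, HEIGHT = 1920, 1080


def shaded_samples(width: int, height: int, ssaa_factor: int) -> int:
    """Samples shaded per frame when every pixel is shaded ssaa_factor times."""
    return width * height * ssaa_factor


native = shaded_samples(WIDTH, HEIGHT, 1)
for factor in (1, 4, 8):
    samples = shaded_samples(WIDTH, HEIGHT, factor)
    print(f"{factor}x SSAA: {samples:,} samples ({samples // native}x native)")
```

A post-process filter like MLAA, by contrast, reads the finished 1080p frame once, so its cost is tied to the resolution rather than multiplied by a sample count.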

However, fear not! Since MLAA (as popularised by GOW3 on the PS3) is a simple post-process filter and nothing more, it's long been argued that it should be quite simple to force it on top of any DX9/10/11 application without interrupting the rendering process. In fact, some work has already been done in this area, and the early performance numbers are hugely encouraging: a humble 9800GTX can deliver AA of similar quality to 4xMSAA in just 0.5ms! Now AMD have stepped up to the plate and are going to include an MLAA setting in their drivers for their new 6xxx series cards. Coupled with RadeonPro, you'll be able to force MLAA per application with ease in any DX9/10/11 game! Fantastic!
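Why can a driver bolt MLAA onto any game? Because it only needs the finished image: it looks for colour discontinuities, classifies the shape of each edge (the L, Z and U patterns from Intel's original MLAA work) and blends pixels along it. The sketch below covers only the first stage, edge detection, in plain Python. The luma weights and the threshold are illustrative assumptions, not AMD's implementation.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # linear RGB, each channel in [0, 1]
Image = List[List[Pixel]]           # rows of pixels, img[y][x]

# Hypothetical tuning value, not taken from AMD's driver.
EDGE_THRESHOLD = 0.1


def luma(p: Pixel) -> float:
    """Rec. 709 luma weights, a common choice for edge detection."""
    r, g, b = p
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def detect_edges(img: Image, threshold: float = EDGE_THRESHOLD):
    """Mark discontinuities between each pixel and its right/bottom neighbour.

    Returns two boolean grids: right_edge[y][x] is True when pixel (x, y)
    differs strongly in luma from (x + 1, y); bottom_edge[y][x] likewise for
    (x, y + 1). Only the final colour buffer is read, no depth or scene data.
    """
    h, w = len(img), len(img[0])
    lum = [[luma(p) for p in row] for row in img]
    right_edge = [[x + 1 < w and abs(lum[y][x] - lum[y][x + 1]) > threshold
                   for x in range(w)] for y in range(h)]
    bottom_edge = [[y + 1 < h and abs(lum[y][x] - lum[y + 1][x]) > threshold
                    for x in range(w)] for y in range(h)]
    return right_edge, bottom_edge


# A 3x3 frame: a black column next to white pixels gives a vertical edge.
BLACK, WHITE = (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)
img = [[BLACK, WHITE, WHITE] for _ in range(3)]
right, bottom = detect_edges(img)
print(right)
print(bottom)
```

The later stages (edge-shape classification and blending) are where most of the real filter's work happens; they are left out here to keep the sketch short.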

Here's the leaked slide with details of their implementation:

There's not a single technical reason why this can't work on 5xxx series cards, since the 6xxx series brings no new functionality. And since it's just a simple post-process that has been proven to work under DX9, there's no reason it couldn't be brought to even older cards either. The restriction is more about marketing than technology, though it could take extra work to adapt their implementation for non-DX11-class hardware. We'll see.

Hopefully this lights a fire under Nvidia's arse, as this is a great USP to have imo. As demonstrated previously, even at 1080p MLAA should be practically free on a modern PC GPU, and it's roughly 1000% faster than 8xMSAA in most cases (at least it was on a 9800GTX).
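The "practically free" claim is easy to sanity-check with frame-time arithmetic. The sketch below takes the 0.5ms 9800GTX figure at face value; the resolution of that test isn't stated here, and the 30/60fps targets are just example values.

```python
# Fixed cost per frame for the filter: the 9800GTX figure quoted above.
MLAA_COST_MS = 0.5


def budget_share(cost_ms: float, target_fps: float) -> float:
    """Fraction of one frame's time budget consumed by a fixed-cost pass."""
    frame_budget_ms = 1000.0 / target_fps
    return cost_ms / frame_budget_ms


for fps in (30, 60):
    print(f"{fps} fps: {budget_share(MLAA_COST_MS, fps):.1%} of the frame")
```

At 60fps a frame has about 16.7ms to work with, so a 0.5ms pass takes roughly 3% of it.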

I'm eager to see how well AMD's implementation works.

UPDATE:

It's the real deal!

It has practically no noticeable impact on performance at all and the results, while slightly destructive, are still very good indeed.

The one real "gotcha" is that it affects the HUD as well. That's not surprising, since it's a post-process applied after the fact rather than integrated into the game's rendering pipeline, but it's not ideal either. Here's a gif to demonstrate the effect.
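The HUD problem comes down to when the filter runs. A driver only ever sees the final frame, after the game has drawn its HUD, whereas a game that integrated the filter itself could run it on the 3D scene and draw the HUD afterwards. The toy sketch below shows the difference using a deliberately crude edge blend as a stand-in for MLAA (it is not AMD's filter); images are single rows of grayscale values to keep it short.

```python
from typing import List, Optional

Gray = List[List[float]]                # grayscale image, values in [0, 1]
Overlay = List[List[Optional[float]]]   # None marks a transparent HUD pixel


def crude_edge_blend(img: Gray, threshold: float = 0.1) -> Gray:
    """Stand-in post-process (NOT real MLAA): where a pixel differs strongly
    from its right-hand neighbour, replace it with the average of the two."""
    out = [row[:] for row in img]
    for y, row in enumerate(img):
        for x in range(len(row) - 1):
            if abs(row[x] - row[x + 1]) > threshold:
                out[y][x] = (row[x] + row[x + 1]) / 2
    return out


def composite(scene: Gray, hud: Overlay) -> Gray:
    """Draw opaque HUD pixels over the scene."""
    return [[h if h is not None else s for s, h in zip(srow, hrow)]
            for srow, hrow in zip(scene, hud)]


scene = [[0.25, 0.25, 0.25, 0.25]]
hud = [[None, 1.0, 0.0, None]]  # a sharp white/black HUD glyph edge

# Driver-level: the filter sees the finished frame, HUD included.
driver_level = crude_edge_blend(composite(scene, hud))
# In-engine: filter the 3D scene first, then draw the HUD on top.
in_engine = composite(crude_edge_blend(scene), hud)

print(driver_level)  # [[0.625, 0.5, 0.125, 0.25]]: HUD pixels softened
print(in_engine)     # [[0.25, 1.0, 0.0, 0.25]]: HUD left crisp
```

That ordering is only available to the game itself, which is why a driver-forced filter can't avoid touching the HUD.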

Valru said:
You can try adding MLAA manually:

Start Regedit, navigate to HKEY_LOCAL_MACHINE\SYSTEM\ControlSet001\Control\Class\{4D36E968-E325-11CE-BFC1-08002BE10318}\0000 and look for MLF_NA. Change its value from 1 to 0, then restart CCC.

If MLF_NA is not there, add it with a value of 0.

Restart your computer.
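If you'd rather not click through Regedit, the same change can be imported from a .reg file. The sketch below generates one. Important assumption: the steps above don't say what type MLF_NA is, so the script makes you choose between a DWORD and a string; check an existing MLF_NA entry (or neighbouring entries) in Regedit first. It also assumes the card sits under the 0000 subkey, as in the original steps.

```python
# Generates .reg text that sets MLF_NA to 0 (a sketch, not an official tool).
# ASSUMPTION: value type unknown; pick "dword" or "string" to match your system.
KEY = (r"HKEY_LOCAL_MACHINE\SYSTEM\ControlSet001\Control\Class"
       r"\{4D36E968-E325-11CE-BFC1-08002BE10318}\0000")


def mlf_na_reg(value_type: str) -> str:
    """Return .reg file text that sets MLF_NA to 0 as a 'dword' or 'string'."""
    if value_type == "dword":
        entry = '"MLF_NA"=dword:00000000'
    elif value_type == "string":
        entry = '"MLF_NA"="0"'
    else:
        raise ValueError("value_type must be 'dword' or 'string'")
    lines = ["Windows Registry Editor Version 5.00", "", f"[{KEY}]", entry, ""]
    return "\n".join(lines)


if __name__ == "__main__":
    print(mlf_na_reg("dword"))
```

Redirecting the output (e.g. `python make_mlf_na.py > mlf_na.reg`, where the script name is hypothetical) and double-clicking the resulting file imports it; then restart as above.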

RadeonPro is currently being updated so soon you'll have the ability to apply MLAA per application without any driver or registry fiddling

----------------------------------------------------------------------------------------

As many know, MSAA is incompatible with multiple render targets under DX9 and as the popularity of MRTs has grown, there's been an increasing amount of PC games without any AA solution at all, notable examples include Dead Space and GTA 4. Its sometimes possible to enable brute force super sampling but even when this does work (and its often a crapshoot), the insane performance penalty makes it rather infeasible in modern games.

However, fear not! Since MLAA (as popularised by GOW3 on the PS3) is a simple post process filter and nothing more, its long been debated that it should be quite simple to force it on top of any DX9/10/11 application without interupting the rendering process. Infact, some work has already been done in this area and the early and the early performance numbers are hugely encouraging. A simple 9800GTX can manage to deliver AA of similar quality to 4xmsaa in just 0.5ms! Now AMD have stepped uptp the plate and are going to include an MLAA setting in their drivers for their new 6xxx series cards! Coupled with Radeon Pro, you'll be able to force MLAA per application with ease in any DX9/10/11 game!

Here's the leaked slide with details of their implementation:

There's not a single technical reason why this can't work on 5xxx series cards since 6xxx series cards bring no new functionality. Since its just a simple post process that has been proven to work under DX9, there's no reason it can't be brought to even older cards either, its more of a marketing rather than a technical reason but it could prove more work to rework their implementation for none DX11 class hardware, we'll see.

Hopefully this lights a fire under Nvidia's arse as this is a great USP to have imo. As demonstrated previously, even at 1080p, MLAA should be practically free on a modern PC GPU and its roughly 1000% faster than 8xmsaa in most cases (at least it was on a 9800GTX).

I'm eager to see how well AMD's implementation works.

UPDATE:

Its the real deal!

It has practically no noticeable impact on performance at all and the results, while slightly destructive, are still very good indeed.

The one real "gotcha" is that it affects the HUD as wel, not surprising since its a post-process after the fact and not integrated into the game's rendering pipeline but not ideal either. Here's a gif to demonstrate the effect.