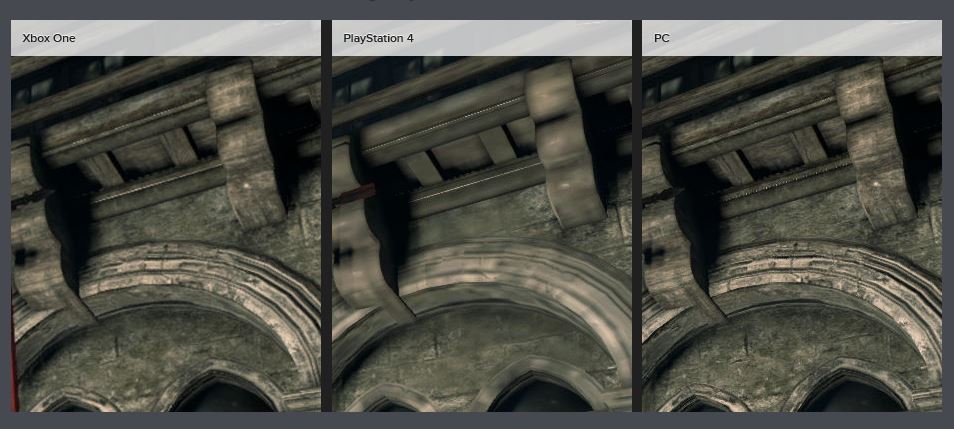

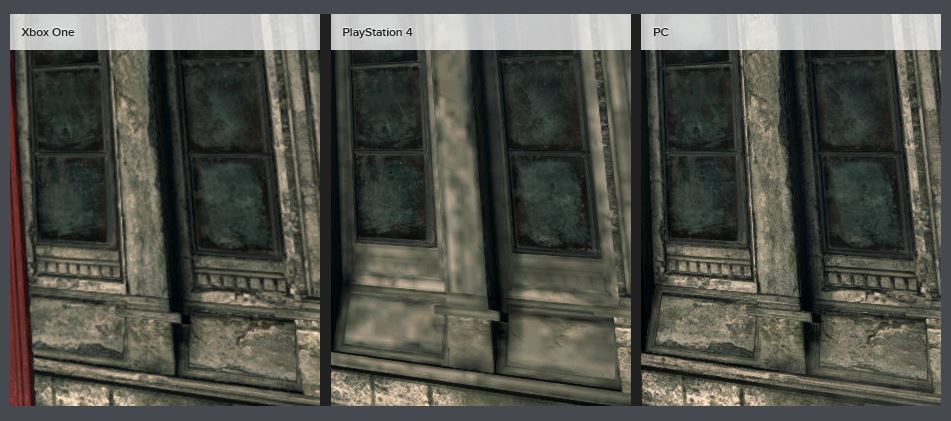

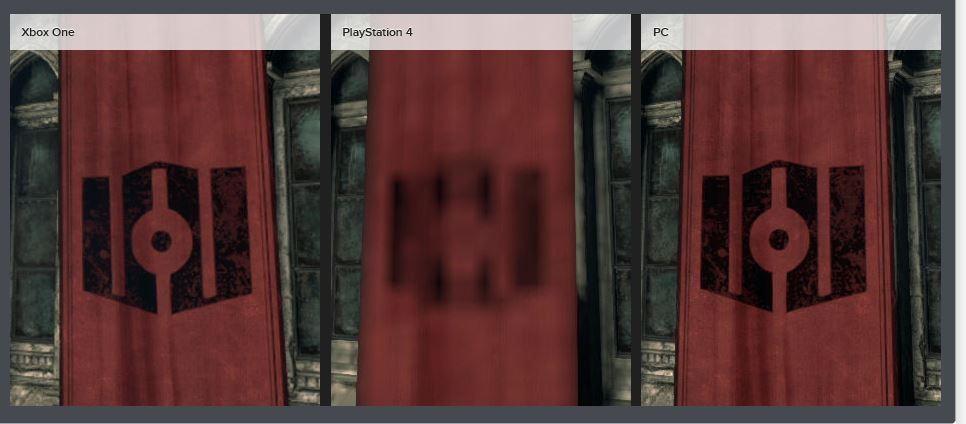

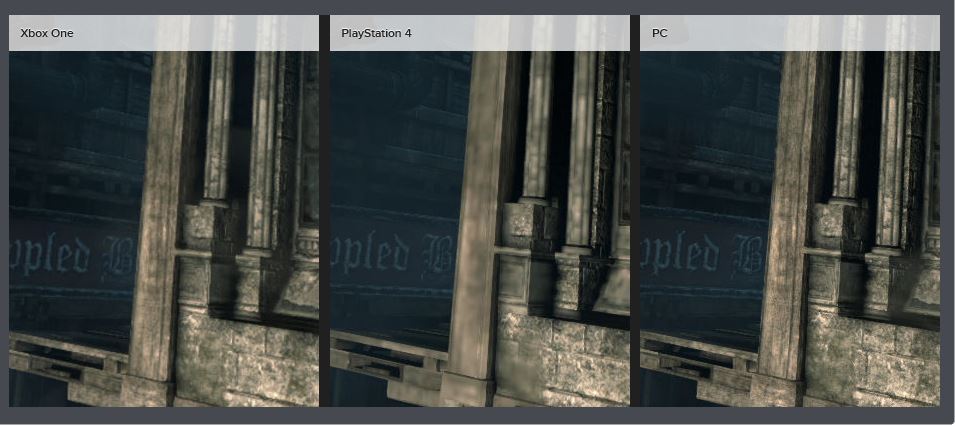

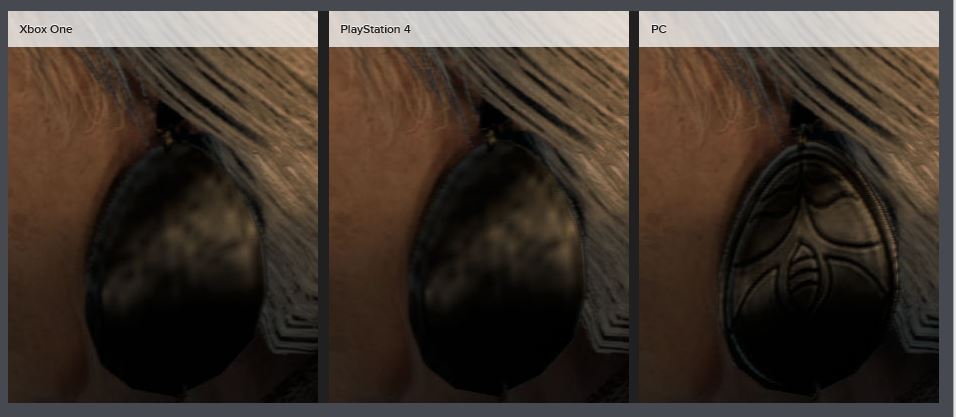

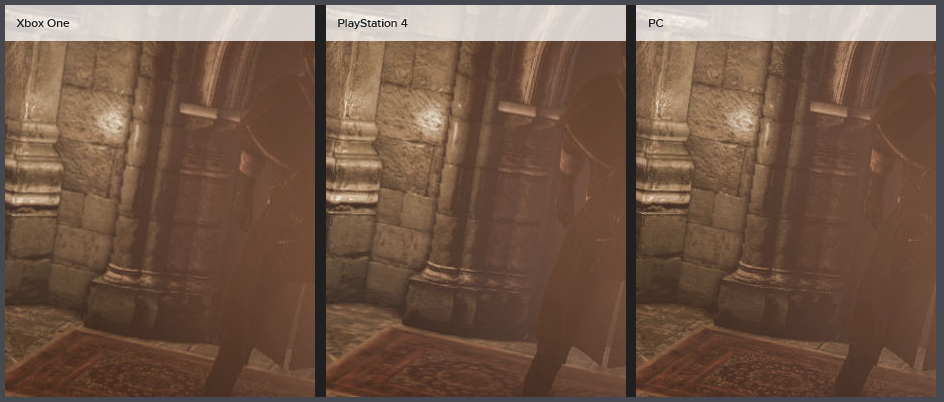

There's a lot of misinformation going on in here. Thinking that the 1080p resolution resulted in lower filtering...no. That's not at all how that works. Clearly performance wasn't at the forefront of the devs' concerns, given the sub-30fps frame rate on both versions.

In fact I'm seeing a lot of "they should just lower the resolution to 900p/720p and get extra effects on PS4" and that's just a load of malarkey. What we're seeing with XB1, with the resolution issues and such, is not necessarily a result of raw performance, but of bandwidth concerns regarding ESRAM. To work within the 32MB, you have to pick and choose what you want to output. Right now devs actually have to choose between resolution and post-processing/effects on XB1, and the easy route at the moment is lower resolution. In time, better tools and SDKs will let devs fit more into the ESRAM using smarter coding techniques. But that will always be a factor for XB1.
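To put rough numbers on the 32MB point, here's a back-of-the-envelope sketch. The assumptions are mine, not from any actual game: every render target is 4 bytes per pixel, and the renderer wants 5 full-resolution targets resident in ESRAM at once (say, a handful of G-buffer targets plus depth). Real engines mix formats and can keep some targets in main memory, so treat this as an illustration of the squeeze, not a measurement. `target_bytes` and `budget` are made-up helper names.

```python
# Rough ESRAM budget check. The 32 MB figure is from the post above;
# the target count and 4 bytes/pixel format are illustrative assumptions.
ESRAM_BYTES = 32 * 1024 * 1024

RESOLUTIONS = {
    "720p": (1280, 720),
    "900p": (1600, 900),
    "1080p": (1920, 1080),
}


def target_bytes(width, height, bytes_per_pixel=4):
    """Size of one full-resolution render target in bytes."""
    return width * height * bytes_per_pixel


def budget(num_targets, bytes_per_pixel=4):
    """Map each resolution to (total bytes, whether it fits in ESRAM)."""
    rows = {}
    for name, (w, h) in RESOLUTIONS.items():
        total = num_targets * target_bytes(w, h, bytes_per_pixel)
        rows[name] = (total, total <= ESRAM_BYTES)
    return rows


if __name__ == "__main__":
    # 5 targets is a hypothetical count, chosen only for illustration.
    for name, (total, fits) in budget(num_targets=5).items():
        print(f"{name}: {total / 2**20:.1f} MiB, fits in 32 MB: {fits}")
```

Under those assumptions, five targets fit at 720p and 900p but overflow at 1080p, which is the kind of trade-off I'm talking about: drop the resolution or drop what you keep in ESRAM.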

The reason PS4 is almost always 1080p is that there are no major bandwidth hurdles like on XB1. You output at 1080p because it's low cost and has little effect on your memory/graphics budget on PS4. Literally the only exception to this is BF4, which was using early SDKs that, like those for all launch games, didn't fully harness the hardware the PS4 had to offer. Consider that PS4 launch games got resolution bumps to 1080p once they had better dev tools/SDKs (Assassin's Creed, Call of Duty) with no trade-off in performance.

At this point it is straight up FUD to believe PS4 titles are suffering graphically due to 1080p, or that the filtering effect from Thief would be due to the resolution bump.

edit: to be clear, I'm not saying running at 1080p isn't going to have a performance hit, because it will. But the cost of running at 1080p is significantly different on XB1 and PS4.
