Actually, no, I think you're wrong there. The game itself, as in real gameplay, was running at 4K, and at settings beyond PC Ultra.

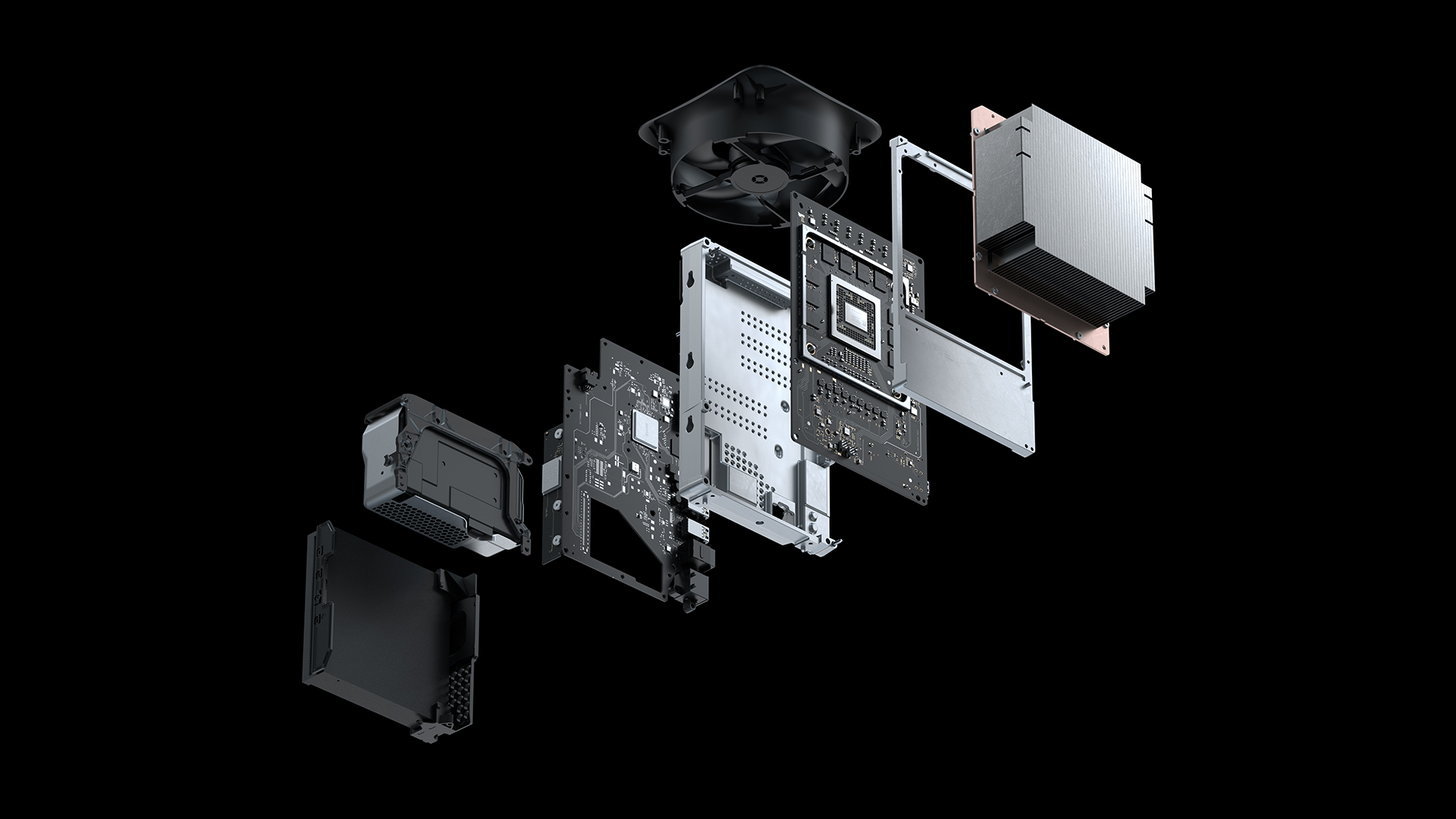

A few months ago, we revealed Xbox Series X, our fastest, most powerful console ever, designed for a console generation that has you, the player, at its center. When it is released this holiday season, Xbox Series X will set a new bar for performance, speed and compatibility, all while allowing...

news.xbox.com

The obvious implication here is that if the cutscene is running at a rock-solid 4K 60fps, then surely the gameplay is running at 4K too. Come on now. Can you actually disprove a single thing I just said? This isn't from Digital Foundry; this one is straight from Microsoft themselves. So did Microsoft also misinterpret their own console? It was running Ultra settings, with elements beyond what the PC Ultra setting has, and it was killing it performance-wise.

The actual benchmark, the one that came in just under RTX 2080 performance, was running without the beyond-PC-Ultra settings, at the equivalent of PC Ultra. The benchmark is designed to be a little more punishing than the actual game, and that's what ran slightly below the RTX 2080. And the reason it was behind on some elements is that the PC running the RTX 2080 had a beast of a CPU that further skewed results toward the PC side, which DF confirmed.