Soulblighter31

Banned

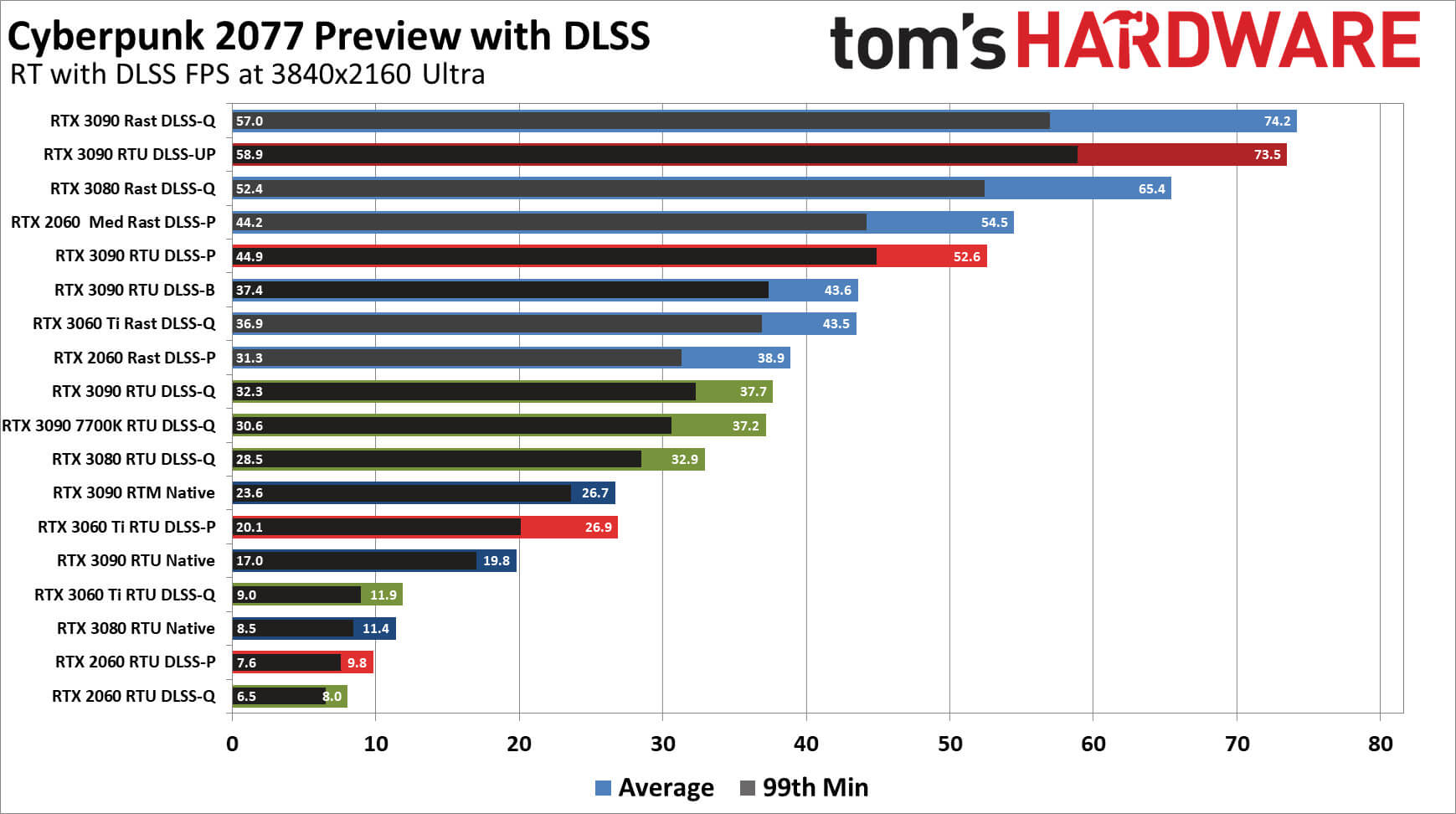

Define "handling" RT. I would argue that the NVIDIA cards don't handle RT today either. Let's take the biggest, most important game that has been used to push RT: none of the RTX cards can handle Cyberpunk at max settings with RT.

In order to get playable framerates at 4K, you have to run DLSS in Ultra Performance mode, which is literally running at 720p and upscaling it to 4K (which inevitably doesn't look that great), to get the game running higher than 60fps average, and even then the minimums are below 60. And nobody can dispute that fact.

Cyberpunk 2077 cannot run with 60fps in 4K/Ultra on the NVIDIA GeForce RTX3090

NVIDIA's latest high-end GPU, the NVIDIA GeForce RTX3090, cannot run the game in 4K/Ultra with 60fps, even without its real-time Ray Tracing effects.

www.dsogaming.com

"In 4K/Ultra/RT with DLSS Quality, the RTX3090 was able to only offer a 30fps experience."

If you think this performance is somehow acceptable, especially for a $1500 graphics card, or you classify it as "handling RT" or being "top of the line", then the performance in the majority of other games with RT is perfectly acceptable for the 6800 cards as well. The same applies to lowering RT settings to achieve playable framerates.

The best use of DLSS is still without RT, because it enables something like an RTX 2060 to give playable framerates at 4K. RT is currently a liability, not an asset.

That's why NVIDIA released RT together with DLSS. You can run RT at Ultra in 1440p with DLSS Balanced and get 60 frames, though you will dip into the 50s on occasion. And this is with the most extensive RT implementation in existence to date. You can tailor the experience by being selective about which RT features you keep enabled. Or you can use DLSS Auto, which will keep it in Quality or Balanced most of the time. Benchmarking the game without DLSS is of academic interest at most, because no user of RT cards will play the game without it. The technicalities of what DLSS does, that it's not "true" 4K or 1440p, don't matter. The end result goes head to head with native and enables 60-frame RT gameplay.
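For anyone curious about those technicalities, here is a rough Python sketch of the internal resolution each DLSS mode renders at before upscaling. The per-axis scale factors are the commonly cited DLSS 2.x values and are an assumption here, not something taken from the game or from the posts above; `DLSS_SCALE` and `internal_resolution` are made-up names for illustration only.

```python
from fractions import Fraction

# Per-axis render scale for each DLSS mode.
# ASSUMPTION: commonly cited DLSS 2.x values, not read from the game or driver.
DLSS_SCALE = {
    "quality": Fraction(2, 3),
    "balanced": Fraction(58, 100),
    "performance": Fraction(1, 2),
    "ultra_performance": Fraction(1, 3),
}


def internal_resolution(width, height, mode):
    """Return the (width, height) rendered internally before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    for name, (w, h) in {"4K": (3840, 2160), "1440p": (2560, 1440)}.items():
        for mode in DLSS_SCALE:
            rw, rh = internal_resolution(w, h, mode)
            print(f"{name} {mode}: {rw}x{rh}")
```

Under those assumed factors, 4K Ultra Performance works out to 1280x720, which matches the 720p figure quoted above, and 1440p Balanced renders at roughly 1485x835.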

![Cyberpunk 2077 street, Ultra](https://i.ibb.co/5rDfn11/Cyberpunk-2077-street-Ultra.jpg)

![Cyberpunk 2077 street, RT Ultra](https://i.ibb.co/mJh7JV4/Cyberpunk-2077-street-RT-Ultra.jpg)

![Cyberpunk 2077 street puddle, Ultra](https://i.ibb.co/FWLrZPp/Cyberpunk-2077-street-puddle-Ultra.jpg)

![Cyberpunk 2077 street puddle, RT Ultra](https://i.ibb.co/vLhTZkr/Cyberpunk-2077-street-puddle-RT-Ultra.jpg)

![Cyberpunk 2077 shadows, Ultra](https://i.ibb.co/QdyLZ2K/Cyberpunk-2077-shadows-Ultra.jpg)

![Cyberpunk 2077 shadows, RT Ultra](https://i.ibb.co/86JgXGf/Cyberpunk-2077-shadows-RT-Ultra.jpg)

![Cyberpunk 2077 alley, Ultra](https://i.ibb.co/vsSpJ00/Cyberpunk-2077-alley-Ultra.jpg)

![Cyberpunk 2077 alley, RT Ultra](https://i.ibb.co/pzhKqhV/Cyberpunk-2077-alley-RT-Ultra.jpg)

![Cyberpunk 2077 building, Ultra](https://i.ibb.co/Vx0D8Nf/Cyberpunk-2077-building-Ultra.jpg)

![Cyberpunk 2077 building, RT Ultra](https://i.ibb.co/zZdKZNN/Cyberpunk-2077-building-RT-Ultra.jpg)