https://wccftech.com/4a-games-tech-...ue-for-rt-but-amds-approach-is-more-flexible/

Great interview about ray tracing. The whole interview is interesting, but people on this forum should take this part to heart.

With regards to ray tracing specifically, how would you characterize the different capabilities of PlayStation 5 from Xbox Series X and both consoles from the newly released RTX 3000 Series PC graphics cards? Overall, should we expect a lot of next-gen games using ray tracing in your opinion?

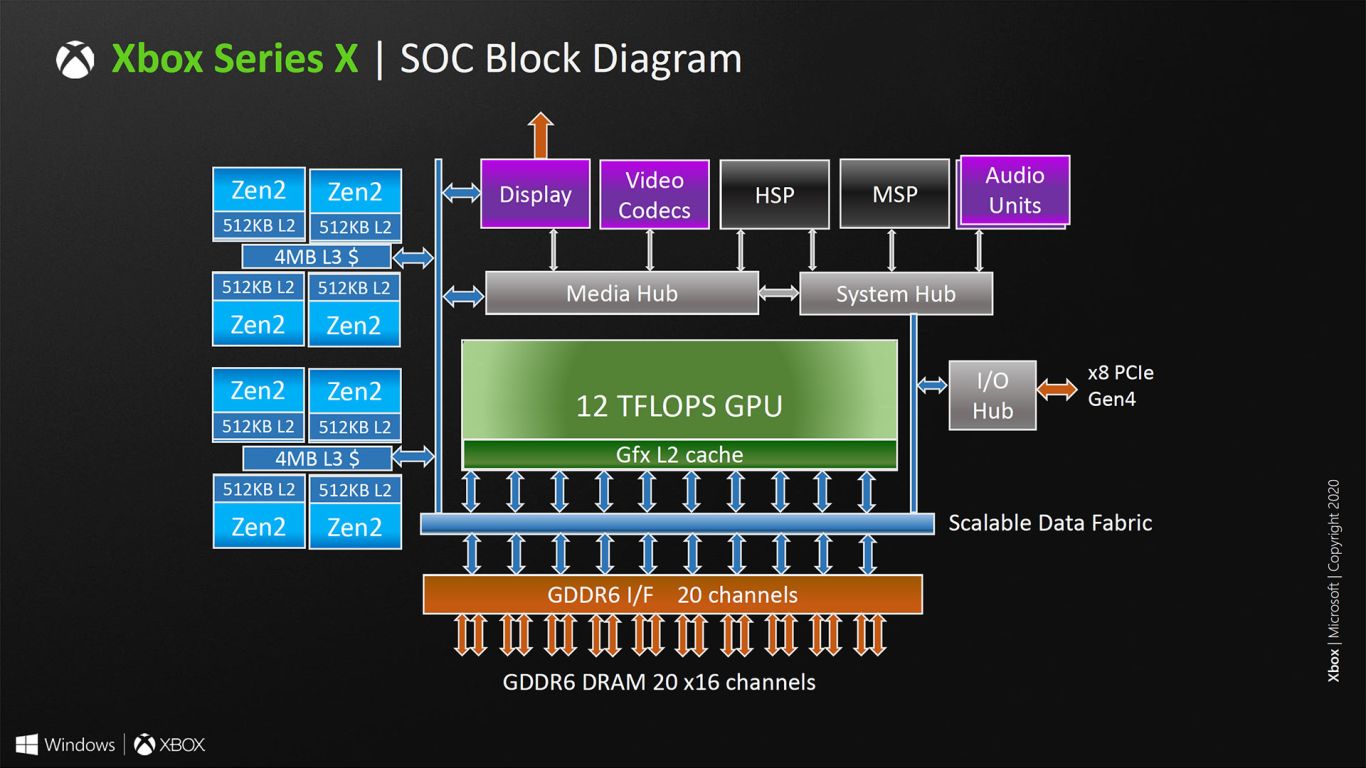

What I can say for sure now is PlayStation 5 and Xbox Series X currently run our code at about the same performance and resolution.

As for the NV 3000-series, they are not comparable, they are in different leagues in regards to RT performance. AMD’s hybrid raytracing approach is inherently different in capability, particularly for divergent rays. On the plus side, it is more flexible, and there are myriad (probably not discovered yet) approaches to tailor it to specific needs, which is always a good thing for consoles and ultimately console gamers. At 4A Games, we already do custom traversal, ray-caching, and use direct access to BLAS leaf triangles which would not be possible on PC.

As for future games: the short answer would be yes. And not only for graphics, by the way. Why not path-trace sound, for example? Or AI vision? Or some explosion propagation? We are already working on some of that.