Black_Stride

do not tempt fate do not contrain Wonder Woman's thighs do not do not

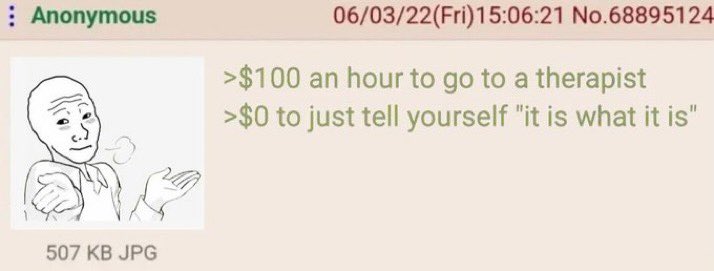

How is it DOA when they are targeting the most popular segment of the market? Is this Intel's top end card?

Very disappointing to match a mid-range card which was released more than a year ago.

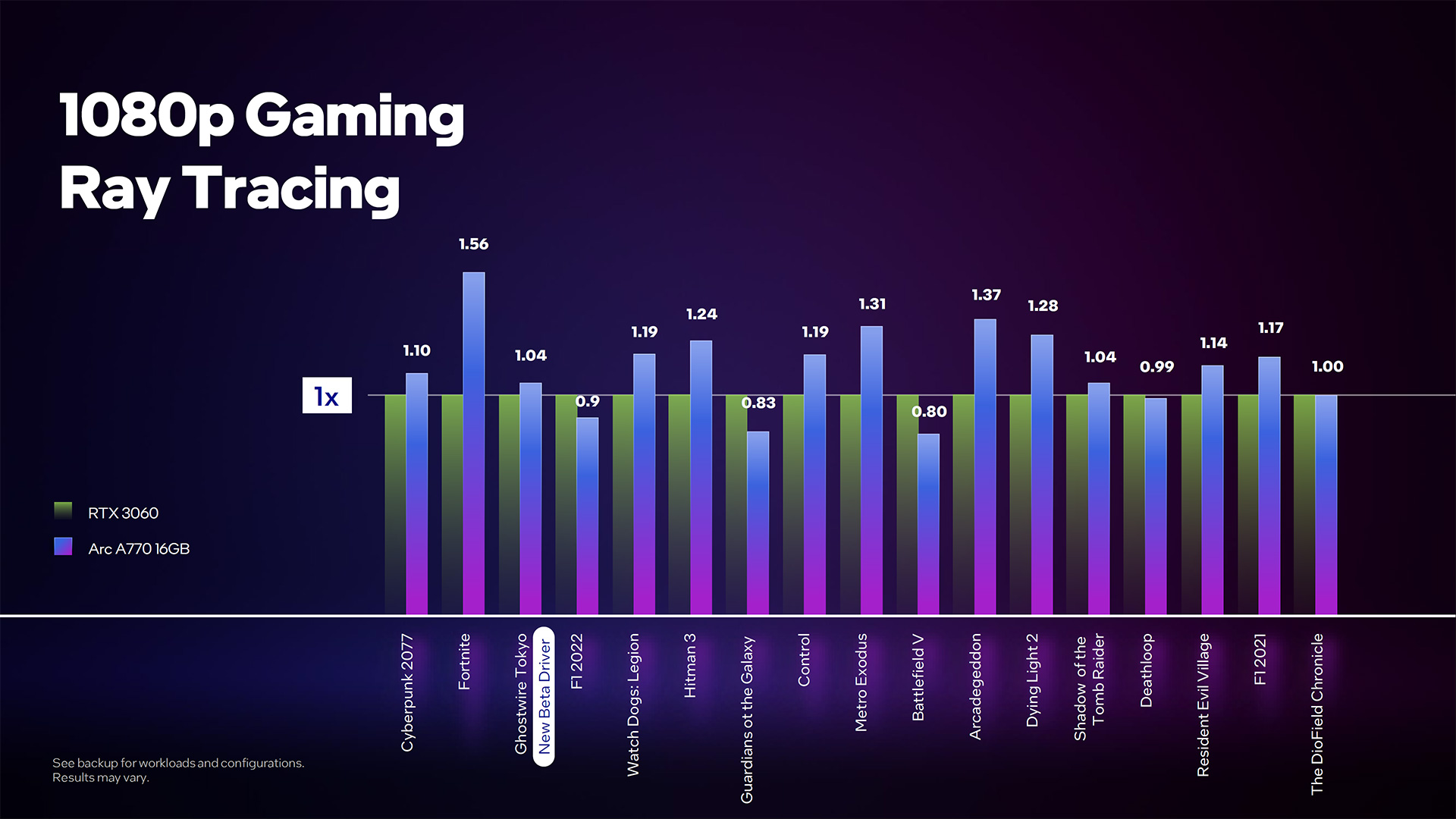

This thing is DOA. Although I must say XeSS looks promising. Impressive uplift in Tomb Raider.

That RTX 3060 - RTX 3070 tier of card is what sells the most.

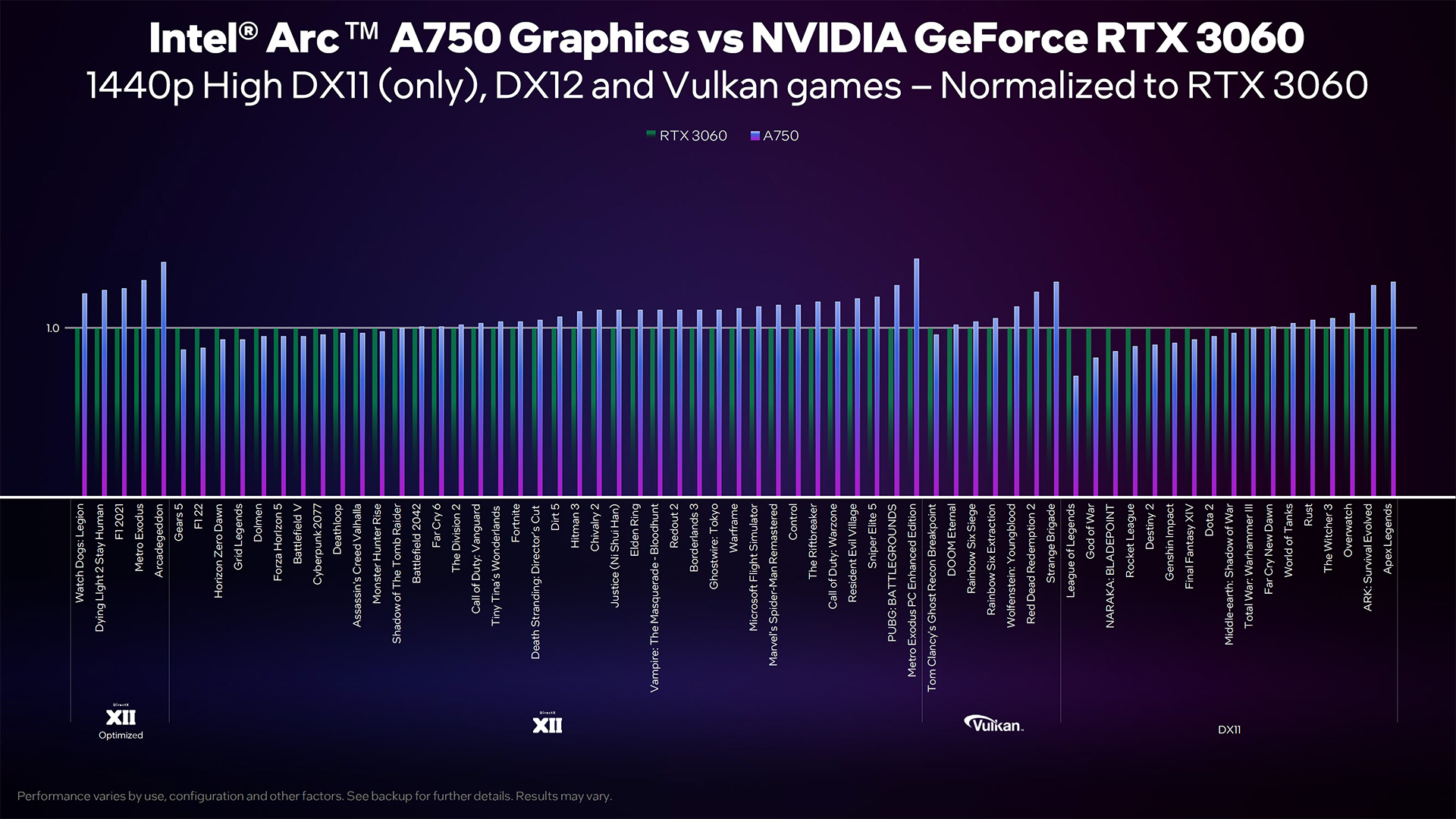

They are selling it for 329 dollars, which is actually affordable for people, especially those who play at 1080p - 1440p.

The RTX 3060 is the most popular Ampere card, followed by the 3070, followed by the 3060Ti.

AMD's most popular card is the 6600XT... again in that xx60 - xx70 tier.

xx60s and xx70s dominate the market. If you are trying to sell numbers and get your tech (XeSS) used, you need to go where the market is.

Making a 3080 or 4070 class card would be pointless cuz you'd only sell 12 of them.

Cuz who you gonna convince off the jump to give you ~800 dollars for a GPU when they've never seen your product do work?

Devs have no reason to support XeSS when no one is ever gonna actually use it.

With affordable cards in the segment people actually buy in, you at least have a chance.

You ain't gonna find a brand new 3060Ti for 329 dollars.

finally, they're coming out

too bad it costs as much for the A770 as it does for a 3060Ti, I figured they'd undercut Nvidia on that one

Hell, the card's MSRP is 400 dollars and most of the ones available are closer to 500 than to 329 dollars... To make matters worse (or better for Intel), with Nvidia saying fuck y'all broke cats, don't expect the 3060Ti replacement anytime soon.

Their lower tier AD chips are going into laptops right now.