While it was not indicative of general performance differences between the two console GPUs - and was bad to use it to try and indicate overall performance differences - the whole point of the benchmarks was to compare what difference greater ALU could bring, which is perfect for our purposes here in asking whether 'a typical game' scales indefinitely with increased ALU. If the ROPs and other elements were as unequal in this bench as they are in the consoles it would make it impossible to determine the impact of ALU alone.

You do realize that the GPU used for PS4 - 7870XT is a salvaged Tahiti. It is what I call the absolute worst candidate if you are trying to analyze ALU utilization out of it.

EuroGamer: More details on the BALANCE of XB1

- Thread starter artist

phosphor112

Banned

While it was not indicative of general performance differences between the two console GPUs - and was bad to use it to try and indicate overall performance differences - the whole point of the benchmarks was to compare what difference greater ALU could bring, which is perfect for our purposes here in asking whether 'a typical game' scales indefinitely with increased ALU. If the ROPs and other elements were as unequal in this bench as they are in the consoles it would make it impossible to determine the impact of ALU alone.

What's the point of the comparison if the differences aren't just in the ALU's? At that point you're just measuring length of the dick, not the width, not the speed, not the longevity.

So is it safe to assume that the XBOX ONE games that were shown before were using 14 CU's clocked at 800MHz, but final retail games will use 12 CU's clocked at 853MHz?

I'm sure the 2 CUs weren't activated/usable on the devkits. I read it somewhere today/yesterday. Maybe from Albert himself? Not sure.

So is it safe to assume that the XBOX ONE games that were shown before were using 14 CU's clocked at 800MHz, but final retail games will use 12 CU's clocked at 853MHz?

Why would this be safe to assume? It sounds like they've always been targeting 12 CUs, but toyed with the idea of using all 14 and eating the cost difference. They probably found that 14 CUs was far too expensive.

I imagine the overclocking was actually a separate experiment from enabling the other CUs. They tried both, but overclocking seemed safe and low cost. It would probably have been done even if they had enabled the other two CUs.

Just to clarify, nobody is suggesting that there's a 14 CU GPU, and then some 4CU GPU off by itself somewhere.

The PS4 has one GPU with 18 full Compute Units. The 14 and 4 thing is simply a recommendation by Sony to developers on what they see as an optimal balance between graphics and compute. It was never suggested, at least not by me, that devs are forced to use it this way. They're free to use all 18 on graphics, 16 on graphics with 2 on compute, 5 on graphics with 13 on compute. It's all up to devs. Sony just gave a recommendation based on what they observed taking place after 14 CUs for graphics specific tasks. The extra resources are there, there's a point of diminishing returns for graphics specific operations, so they suggest using all the extra ALU resources for compute, rather than using them for graphics and possibly not getting the full bang for your buck. That's how I see it, and probably the last I'll say on the subject.

Ahh, that clears things up. Didn't realise it had come "straight from the horse's mouth" so to speak.

And as I'm here, I don't suppose you have a link on the bolded? I hadn't seen it before and, tbh, having had a quick search just now couldn't find anything.

Cheers.

GribbleGrunger

Dreams in Digital

Exactly what I am trying to find out as well

and this: Is that 25% per CU or 25% for the remaining 4 CUs?

I'm sure the 2 CUs weren't activated/usable on the devkits. I read it somewhere today/yesterday. Maybe from Albert himself? Not sure.

Why would this be safe to assume? It sounds like they've always been targeting 12 CUs, but toyed with the idea of using all 14 and eating the cost difference. They probably found that 14 CUs was far too expensive.

I imagine the overclocking was actually a separate experiment from enabling the other CUs. They tried both, but overclocking seemed safe and low cost. It would probably have been done even if they had enabled the other two CUs.

I was guessing that for them to toy with it they would actually have to release a devkit FW that let the devs try this setup on their games to actually see which was better for them.

I was guessing that for them to toy with it they would actually have to release a devkit FW that let the devs try this setup on their games to actually see which was better for them.

Oh ok. This probably happened - at least it would make the most sense to test such a setting/change.

GribbleGrunger

Dreams in Digital

So is it safe to assume that the XBOX ONE games that were shown before were using 14 CU's clocked at 800MHz, but final retail games will use 12 CU's clocked at 853MHz?

I'm not technically minded but I was wondering whether that might be the reason we've seen a few games downgraded in resolution.

You do realize that the GPU used for PS4 - 7870XT is a salvaged Tahiti. It is what I call the absolute worst candidate if you are trying to analyze ALU utilization out of it.

I'm not trying to analyse ALU utilisation of PS4. The point is that between any two chips you may not get a linear performance delta from an increased amount of ALU alone. People were asking 'how could more CUs not = linear increase in framerate?!?' I'm simply pointing out it doesn't work like that, depending on the game, and that's all Sony's little suggestion about CU utilisation is echoing.

If someone has better benchmarks that isolate ALU differences it'd be great to see them.

What's the point of the comparison if the differences aren't just in the ALU's? At that point you're just measuring length of the dick, not the width, not the speed, not the longevity.

In debate we were having here, 'dick length' - scaling with ALU increases - was the only relevant bit. Of course it's not the case for a general comparison of GPUs.

GribbleGrunger

Dreams in Digital

Ok, now we're talking about dick length ... I'm DEFINITELY out of my depth.

Gaf is an unstoppable train filled with cuddly toys and razor blades.

phosphor112

Banned

In the debate we were having here, 'dick length' - scaling with ALU increases - was the only relevant bit. Of course it's not the case for a general comparison of GPUs.

It's already established that it's not linear, but the magnitude of the drop off is being overstated, by far.

SenjutsuSage

Banned

My problem isn't with you "claiming" 14+4 (which you didn't). My problem is with this.

There is physically no reason to have "significant" drop. A drop? Sure, yes, I can see it, but where is this "significant" coming from?

I would have assumed the same, but that's not how it was presented by Sony apparently. I don't know why it was described as significant, but it was. I guess it's not really that important in the end, though, because, well, look at the games for the system. Killzone and Infamous look incredible, and that's just the first wave.

It's already established that it's not linear, but the magnitude of the drop off is being overstated, by far.

And that's cool. I didn't make an argument either way on that, just that it could be considered significant by some. I'd personally take any gains more ALU is giving even if it wasn't linear with their number

This thread has gotten really hard to follow. Let me get this straight. We apparently agree that:

1. CUs don't scale linearly, which I can't reconcile with this:

Penello: "18 CUs [compute units] vs. 12 CUs =/= 50% more performance. Multi-core processors have inherent inefficiency with more CUs, so it's simply incorrect to say 50% more GPU."

Ars: "The entire point of GPU workloads is that they scale basically perfectly, so 50% more cores is in fact 50% faster."

2. 14+4 isn't a requirement from Sony but is an example of what a developer could do with the 18 available.

3. PS4 uses some of its 18 CUs for other functions, but Xbone doesn't use any of its 12 for similar functions because "balance"?

At least two of those have to be wrong. Right???
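For reference, the paper numbers behind the "50% more" argument can be worked out in a few lines. This is only a sketch of theoretical peak throughput: the 64 ALUs per CU matches the 768/896 ALU figures quoted in this thread, the 2 FLOPs per ALU per clock is the usual fused multiply-add convention, and the 800MHz PS4 clock is an assumption brought in from outside the thread. It says nothing about how a real game scales.

```python
# Theoretical peak single-precision throughput for a GCN-style GPU.
# Paper numbers only: this does not model ROPs, bandwidth, or CPU limits.

ALUS_PER_CU = 64         # consistent with the thread: 12 CUs = 768 ALUs, 14 CUs = 896 ALUs
FLOPS_PER_ALU_CLOCK = 2  # one fused multiply-add per clock counted as 2 FLOPs


def peak_gflops(cus: int, clock_mhz: float) -> float:
    """Return theoretical peak GFLOPS for a given CU count and clock."""
    return cus * ALUS_PER_CU * FLOPS_PER_ALU_CLOCK * clock_mhz / 1000.0


configs = {
    "XB1 retail, 12 CUs @ 853 MHz": (12, 853),
    "XB1 with 14 CUs @ 800 MHz": (14, 800),
    "PS4, 18 CUs @ 800 MHz (clock is an assumption)": (18, 800),
}

for name, (cus, mhz) in configs.items():
    print(f"{name}: {peak_gflops(cus, mhz):.1f} GFLOPS")

# Ratio of PS4 to XB1 retail on paper
ratio = peak_gflops(18, 800) / peak_gflops(12, 853)
print(f"PS4 / XB1 retail: {ratio:.2f}x")
```

Whether a game actually sees that ratio depends on what else it is bound by, which is the part the thread is arguing about.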

Can Crusher

Banned

Just to clarify, nobody is suggesting that there's a 14 CU GPU, and then some 4CU GPU off by itself somewhere.

The PS4 has one GPU with 18 full Compute Units. The 14 and 4 thing is simply a recommendation by Sony to developers on what they see as an optimal balance between graphics and compute. It was never suggested, at least not by me, that devs are forced to use it this way. They're free to use all 18 on graphics, 16 on graphics with 2 on compute, 5 on graphics with 13 on compute. It's all up to devs. Sony just gave a recommendation based on what they observed taking place after 14 CUs for graphics specific tasks. The extra resources are there, there's a point of diminishing returns for graphics specific operations, so they suggest using all the extra ALU resources for compute, rather than using them for graphics and possibly not getting the full bang for your buck. That's how I see it, and probably the last I'll say on the subject.

I call bullshit. You speak as if compute was an afterthought, when looks like it was part of their vision all along.

Seems to fly in the face of me always saying the PS4 is easily the stronger console, doesn't it? I've just had disagreements on how noticeable that difference will appear to be in real games, since both systems will crank out incredible looking games.

Actually if the differences are felt both in graphics and in gameplay, then the differences will be larger than expected. I only expected better visuals, but Sony seems to be pushing for better visuals and for things that affect the gameplay and possibly can't be duplicated on competing consoles.

I was guessing that for them to toy with it they would actually have to release a devkit FW that let the devs try this setup on their games to actually see which was better for them.

I sincerely doubt that this was done outside of XB R&D. Someone probably built a version of the firmware with the CUs enabled, then built and ran whatever benchmarking/game code they had access to.

Giving developers experimental firmware just seems like asking for trouble.

Gemüsepizza

Member

And I really, really doubt that this was the real reason why they went with a clock rate increase "instead" of 14 CUs - because why not have both? Having 14 CUs just wasn't viable from a business point of view, because they would have needed perfect chips. I think they are lying and it was also a nice opportunity to spread FUD about Sony's 18 CUs.

I call bullshit. You speak as if compute was an afterthought, when looks like it was part of their vision all along.

Actually if the differences are felt both in graphics and in gameplay, then the differences will be larger than expected. I only expected better visuals, but Sony seems to be pushing for better visuals and for things that affect the gameplay and possibly can't be duplicated on competing consoles.

But those things will most likely only be seen on exclusives, unfortunately..

SenjutsuSage

Banned

I call bullshit. You speak as if compute was an afterthought, when looks like it was part of their vision all along.

Actually if the differences are felt both in graphics and in gameplay, then the differences will be larger than expected. I only expected better visuals, but Sony seems to be pushing for better visuals and for things that affect the gameplay and possibly can't be duplicated on competing consoles.

Assumptions, assumptions. Afterthought? You ever consider that Sony knew this would happen, planned for it, and already had the perfect way to take advantage of those resources anyway, which is compute? Put another way, maybe Sony's GPU wouldn't be an 18 CU GPU in the first place if not for their big plans regarding GPGPU, maybe it would just be a 14 CU GPU period. There's nothing in what I'm saying that implies Sony was in any way caught off guard one day and said "Oh fuck, how about that compute thing, we can still use that though, right?"

I'm not saying that.

This thread has gotten really hard to follow. Let me get this straight. We apparently agree that:

1. CUs don't scale linearly, which I can't reconcile with this:

If your software demands that ALU, in an accommodating ratio vs other resources, then of course it can scale up with a greater number of CUs. There isn't some hard universal bottleneck standing in the way of that. The point that's being made is that it isn't always necessarily needed at a given resolution, or that between the shape of the software's resource demands and other idle time or stalls you may have a certain amount of CU time 'free' that you could fill in with other things. That's all.

A big problem in this whole debate is people generalising too much. Too much is software dependent.

As for number 3, Xbox One can use GPGPU if it wants. Obviously there's less resources to go around though and perhaps a lower degree of thread readiness for switching between compute and graphics tasks.

SenjutsuSage

Banned

If your software demands that ALU, in an accommodating ratio vs other resources, then of course it can scale up with a greater number of CUs. There isn't some hard universal bottleneck standing in the way of that. The point that's being made is that it isn't always necessarily needed at a given resolution, or that between the shape of the software's resource demands and other idle time or stalls you may have a certain amount of CU time 'free' that you could fill in with other things. That's all.

A big problem in this whole debate is people generalising too much. Too much is software dependent.

As for number 3, Xbox One can use GPGPU if it wants. Obviously there's less resources to go around though and perhaps a lower degree of thread readiness for switching between compute and graphics tasks.

Yea, there's probably different observed circumstances for different kinds of games. Take for example, Resogun or that Poncho game. Surely those games aren't doing the same thing that Killzone or even Infamous might be doing with the hardware.

cyberheater

PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 Xbone PS4 PS4

I'm sure the 2 CUs weren't activated/usable on the devkits. I read it somewhere today/yesterday. Maybe from Albert himself? Not sure.

Yeah. He said that. He said that MS did turn them on to see if the performance would be much better and decided that the upclock was the way to go so that they could improve their yields.

If your software demands that ALU, in an accommodating ratio vs other resources, then of course it can scale up with a greater number of CUs. There isn't some hard universal bottleneck standing in the way of that. The point that's being made is that it isn't always necessarily needed at a given resolution, or that between the shape of the software's resource demands and other idle time or stalls you may have a certain amount of CU time 'free' that you could fill in with other things. That's all.

A big problem in this whole debate is people generalising too much. Too much is software dependent.

As for number 3, Xbox One can use GPGPU if it wants. Obviously there's less resources to go around though and perhaps a lower degree of thread readiness for switching between compute and graphics tasks.

Thanks, and I suspected as much. Am I to understand that it could scale linearly in some kind of raw demo scenario?

I guess the part I'm really not understanding is how any of this puts PS4 out of balance...and if not, what the hell are we talking about?

If anything it seems more flexible with how you can use its resources...and its memory as well, but that's another dead horse. Does flexibility somehow translate to "imbalance"?

Thanks, and I suspected as much.

I guess the part I'm really not understanding is how any of this puts PS4 out of balance...and if not, what the hell are we talking about?

If anything it seems more flexible with how you can use its resources...and its memory as well, but that's another dead horse. Does flexibility somehow translate to "imbalance"?

Again, the whole 'balance' thing is meaningless without some specific software to relate to. It's the relationship between software demand and hardware resources that present balance or imbalance.

Maybe indeed some games don't need so much ALU, in which case x amount of ALU vs y amount of other resources might be 'imbalanced'. In other cases, some games may not need so much bandwidth but do need a lot of ALU, in which case that ratio of ALU may be balanced, and the ratio of bandwidth imbalanced.

In short though, more of anything is never bad. Who cares if some resource is over supplied for your game's needs? It's 'imbalances' in the other direction you have to worry about.
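The "balance only exists relative to the software" point can be sketched with a toy bottleneck model. Everything here is hypothetical: the workload numbers are made up for illustration, and a real GPU overlaps and stalls in far messier ways. The only idea it shows is that frame time is set by whichever resource finishes last, so extra CUs help only while ALU is the limiting side.

```python
# Toy bottleneck model: frame time is the slower of the ALU side and
# "everything else" (ROPs, bandwidth, ...). All numbers are hypothetical.


def frame_time_ms(alu_work: float, other_work: float, cus: int) -> float:
    """ALU time shrinks with more CUs; the other resources do not."""
    alu_time = alu_work / cus   # ALU work spread evenly across the CUs
    other_time = other_work     # fixed cost from non-ALU resources
    return max(alu_time, other_time)


# (alu_work, other_work) pairs, invented purely for illustration
workloads = {
    "ALU-heavy game": (360.0, 10.0),
    "mixed game": (210.0, 16.0),
}

for name, (alu_work, other_work) in workloads.items():
    times = {cus: frame_time_ms(alu_work, other_work, cus) for cus in (12, 14, 18)}
    print(name, times)
```

In this sketch the ALU-heavy workload keeps getting faster all the way to 18 CUs, while the mixed one stops improving once the other resources become the limit. In that second case the idle CU time is what could be filled with compute work.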

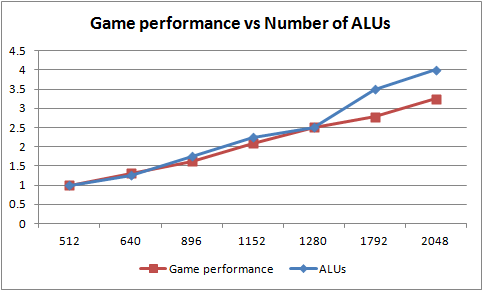

Here you go;I'm not trying to analyse ALU utilisation of PS4. The point is that between any two chips you may not get a linear performance delta from an increased amount of ALU alone. People were asking 'how could more CUs not = linear increase in framerate?!?' I'm simply pointing out it doesn't work like that, depending on the game, and that's all Sony's little suggestion about CU utilisation is echoing.

If someone has better benchmarks that isolate ALU differences it'd be great to see them.

Seems like a good linear increase.

edit: Fixed typo in title. Source is 15 game suite across 45 benchmarks from Techpowerup.

Can Crusher

Banned

Assumptions, assumptions. Afterthought? You ever consider that Sony knew this would happen, planned for it, and already had the perfect way to take advantage of those resources anyway, which is compute? Put another way, maybe Sony's GPU wouldn't be an 18 CU GPU in the first place if not for their big plans regarding GPGPU, maybe it would just be a 14 CU GPU period. There's nothing in what I'm saying that implies Sony was in any way caught off guard one day and said "Oh fuck, how about that compute thing, we can still use that though, right?"

I'm not saying that.

...

Sony just gave a recommendation based on what they observed taking place after 14 CUs for graphics specific tasks.

So either you're saying Sony tested the PS4 and came to this conclusion, or you're selling me a bridge and saying that Sony came to the conclusion that 14 CUs is some sort of magical sweet spot... which you know is just utter bullshit, because a 32 CU gpu would be able to do things that the hardware in these consoles can't. There's no "sweet spot" for GPU processing power right now, more does more.

But those things will most likely only be seen on exclusives, unfortunately..

Maybe. I could see a dev simply using 8+4 for the Xbox One, or whatever who knows. Either way those extra CUs will be put to use.

You know, if MS had any integrity, they would do what Nintendo did: state that they don't think all that power is necessary to make great video games in 2013. Or just don't talk about specs at all.

Given the parts involved here, trying to claim there is even a comparison between the two comes off as incredibly shady.

SwiftDeath

Member

Here you go;

Seems like a good linear increase.

Where is that from and what graphics card(s) are used?

Where is that from and what graphics card(s) are used?

Added the source, you can use the ALU count to see which cards I referenced.

Here you go;

Seems like a good linear increase.

edit: Fixed typo in title. Source is 15 game suite across 45 benchmarks from Techpowerup.

It's not linear, you've got a 4x increase in ALUs on one axis with a less than 3.5x increase in framerate on the other...

(But I would out of curiosity be interested in the link and methodology anyway, specifically if they are actually isolated ALUs or are comparing cards that may also have different configs of rops/bandwidth etc)
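The "4x ALUs for less than 3.5x framerate" objection is easy to put a number on. A minimal sketch, using only the two ratios quoted in the post above (the 3.5 is an upper bound, since the post says "less than 3.5x"); the function name is just for illustration:

```python
# Scaling efficiency: how much of the added ALU shows up as framerate.
# 1.0 would be perfectly linear scaling.


def scaling_efficiency(alu_ratio: float, fps_ratio: float) -> float:
    return fps_ratio / alu_ratio


# Ratios taken from the post: 4x the ALUs, at most 3.5x the framerate
eff = scaling_efficiency(alu_ratio=4.0, fps_ratio=3.5)
print(f"efficiency is at most {eff:.1%}")  # prints "efficiency is at most 87.5%"
```

That figure covers the whole range of cards in the chart, and it does not separate ALU count from clock, ROP, or bandwidth differences between those cards.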

ethomaz

Banned

Maybe. I could see a dev simply using 8+4 for the Xbox One, or whatever who knows. Either way those extra CUs will be put to use.

I can see that too, but I don't know how hard it is to make this happen on Xbone... PS4 has 8 ACEs and 64 queues that developers can use to put other stream processors to work on compute tasks... Xbone has 2 ACEs and 8 queues? I don't know the number, but if they try to run more compute tasks than the hardware can manage, those tasks will sit in a queue even if there are stream processors free to use.

Here you go;

Seems like a good linear increase.

edit: Fixed typo in title. Source is 15 game suite across 45 benchmarks from Techpowerup.

Re-do your graph. The data points are not spaced according to their distance from each other on the x-axis.

McHuj

Member

Here you go;

Seems like a good linear increase.

edit: Fixed typo in title. Source is 15 game suite across 45 benchmarks from Techpowerup.

Why ALU's instead of flops? clock rate matters too.

It's not linear, you've got a 4x increase in ALUs on one axis with a less than 3.5x increase in framerate on the other...

It is linear, at least where it's relevant.

(But I would out of curiosity be interested in the link and methodology anyway, specifically if they are actually isolated ALUs or are comparing cards that may also have different configs of rops/bandwidth etc)

This scaling beyond 14CU nonsense is getting laughable.

It is linear, at least where it's relevant.

It's not quite

I think we're both on the same side of the fence here, the idea of some universal, software independent limit at any one 'x' number of CUs isn't correct. But if we want to talk about whether there is alu time being left on the table 'typically' after render tasks, it's another question.

I sincerely doubt that this was done outside of XB R&D. Someone probably built a version of the firmware with the CUs enabled, then built and ran whatever benchmarking/game code they had access to.

Giving developers experimental firmware just seems like asking for trouble.

Well there is the rumor that the devkits took about a 15% hit with one of the updates.

so do the math 14 CU's (896 ALU's) to 12 CU's (768 ALU's) = close to a 15% drop.
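The arithmetic in that post checks out as a rough figure. A quick sketch, assuming 64 ALUs per CU as implied by the 896 and 768 totals above; the helper name is hypothetical:

```python
# Relative ALU drop when going from 14 CUs to 12 CUs.

ALUS_PER_CU = 64  # implied by the post: 14 CUs = 896 ALUs, 12 CUs = 768 ALUs


def alu_drop_fraction(from_cus: int, to_cus: int) -> float:
    """Fraction of ALUs lost when moving from from_cus to to_cus."""
    before = from_cus * ALUS_PER_CU
    after = to_cus * ALUS_PER_CU
    return (before - after) / before


print(f"{alu_drop_fraction(14, 12):.1%}")  # prints "14.3%"
```

This only shows that the ALU count drops by about 14%. It does not confirm the rumor itself, and it ignores the clock change from 800MHz to 853MHz.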

It's not quite

Do you mean linear scaling beyond 1280? Because that's not relevant here.

And if you're just comparing cards with different numbers of alus - but that also have different levels of other resources, then we're not isolating the relationship between alu count and performance.

Of course, the amount of other resources such as texel and pixel fill is also vastly different between the Xbone and PS4.

I think we're both on the same side of the fence here, the idea of some universal, software independent limit at any one 'x' number of CUs isn't correct. But if we want to talk about whether there is alu time being left on the table 'typically' after render tasks, it's another question.

Pretty clear where this is heading and why it was posted.

MS says we targeted 12 CU plus overclock because it outperforms 14 CU. They spin the old story about the 14cu+4cu for PS4 to show the xbone is more powerful since it's more balanced. Since we have new "info" that came straight from "sony" that after 14CU there are diminishing returns, it's a "waste" to use this for gfx. Of course the source is unknown and most likely 6 months old at least.

Now we have the xbone can outperform the ps4 gpu because they have the perfect number of CU and it's overclocked. What about the 4CU for compute? Well, the xbone has 15 special processors that offload cpu tasks, plus the cpu is now overclocked!

LOL

So easy to see right through this stuff. Complete PR bullshit. Back in real life the ps4 has a massive advantage with ALU[50%+], ROP[100%+], TMU[50%+], usable ram per frame[100%+] and more advanced gpu compute support.

I would be shocked if a single game ran better on xbone than PS4.

Well there is the rumor that the devkits took about a 15% hit with one of the updates.

so do the math 14 CU's (896 ALU's) to 12 CU's (768 ALU's) = close to a 15% drop.

I'm very sure that's not the case according to all leaks and information I got. Why should they use 14 CUs when they know the system will ship with 12 CUs?

Tripolygon

Banned

You are saying a whole lotta nothing.

Just to clarify, nobody is suggesting that there's a 14 CU GPU, and then some 4CU GPU off by itself somewhere.

We know that for a fact but you are suggesting that not all 18CUs are the same.

The PS4 has one GPU with 18 unified Compute Units. The 14 and 4 thing is simply a recommendation by Sony to developers on what they see as an optimal balance between graphics and compute.

No, it's just a suggestion as to how those 18CUs can be applied, it could also be 15/3 or 10/8. It has nothing to do with what is optimal. There is no such optimal number of CUs, it all comes down to bandwidth, ROP, ALU etc. and developer vision.

It was never suggested, at least not by me, that devs are forced to use it this way. They're free to use all 18 on graphics, 16 on graphics with 2 on compute, 5 on graphics with 13 on compute. It's all up to devs.

TRUTH

Sony just gave a recommendation based on what they observed taking place after 14 CUs for graphics specific tasks.

False, it's just a suggestion, has nothing to do with scaling or performance falling off at a certain point.

The extra resources are there, there's a point of diminishing returns for graphics specific operations, so they suggest using all the extra ALU resources for compute, rather than using them for graphics and possibly not getting the full bang for your buck. That's how I see it, and probably the last I'll say on the subject.

Yes, not all operations on the GPU utilize the CUs 100%. In fact, Sony added 8 ACEs, which allow developers to schedule compute tasks efficiently, and even went further to allow unused wavefronts to be utilized asynchronously during graphics rendering on all 18CUs.

Seems to fly in the face of me always saying the PS4 is easily the stronger console, doesn't it? I've just had disagreements on how noticeable that difference will appear to be in real games, since both systems will crank out incredible looking games.

Both consoles will crank out good games but on paper PS4 is more powerful than Xbox One.

There is no sinister motivation behind me saying it. It was in direct response to a post that seemed to be suggesting the claim of some kind of 14 CU balance of sorts on the PS4 was completely unfounded, and I pointed out that this wasn't exactly the case, and that there is something more to that remark than just Jedi PR mind tricks.

Microsoft employee quoting a vgleaks error to support his claim of 12CU being the perfect number, nooo not PR jedi mind trick. So which is it, 12 or 14 or just confirmation bias

I thought in light of what MS said recently about the CPU limiting games, and how their clock speed increase gave them more than the extra 2 CUs, that this information I've known for awhile, might now make more sense in retrospect, as a possible explanation as to how or why that performance curve I heard about exists.

Confirmation bias

It wasn't an attempt to say the gap has closed, or anything silly like that. I've said on here time and time again, that the compute advantage of the PS4 would likely end up leading to things that simply couldn't be recreated on the Xbox One, and it may be in that area where the gap in power is showcased most between the two systems, rather than in raw graphics quality.

Actually, in compute PS4 would beat Xbox One and in graphics it will beat Xbox One. By how much is yet to be seen but on Paper it does.

Sounds reasonable, I thought, but people seem to just treat most things I say as an attempt to snipe at the PS4. Always gloss over me complimenting and praising the system for its power.

Doesn't sound reasonable. You are downplaying the PS4's performance advantage by citing some Microsoft-concocted BS about 12CU being the optimal amount, while also offering the 14+4 bullshit as evidence that Sony supports that theory. As if we didn't have GPUs like the 7990, which are "unbalanced" with more than 12CUs and can obliterate PS4 and Xbox One in the graphics department. AMD must be doing something wrong by adding all those CUs.

I can see that too, but I don't know how hard it is to make this happen on Xbone... PS4 has 8 ACEs and 64 queues that developers can use to put other stream processors to work on compute tasks... Xbone has 2 ACEs and 8 queues? I don't know the number, but if they try to run more compute tasks than the hardware can manage, those tasks will sit in a queue even if there are stream processors free to use.

I think the Xbox One has 2 ACEs with 4 queues.

panda-zebra

Member

Ok, now we're talking about dick length ... I'm DEFINITELY out of my depth.

thread twist recap: ps4's dick is bigger, but, according to ss who knows someone who knows sony, it'll not increase linearly beyond a certain length, unfortunately taking a hard knee-bend to the right and resulting in difficulties with balance.

cerny points out possibilities for avoiding such woes with a suggestion to maybe consider a little creative ballsack-action, but that's not for everyone and it might take a few years for people to come around to the idea.

I think the Xbox One has 2 ACEs with 4 queues.

2 ACEs with 8 queues each iirc. Can check back tomorrow.

No, it's not. It's down to how games 'typically' run on any GPU and how much ALU a 'typical' game uses at a given res vs other resources. A PC GPU with the same ratio of ALU:Rops:bandwidth would see the same less than linear return on more ALU past a certain point with the same games. It is not some soldered-into-the-hardware switch, it's a matter of 'typical' software balance and how it relates to hardware.

Your root information is correct, but that interpretation is not.

Do AMD and nvidia do this kind of profiling of current game engines? If so, I wonder why they continue to make GPUs with these kinds of ratios - potent, with even more CUs than PS4 but similar ROPs and bandwidth. Shouldn't they be seeing similar drop offs in performance and consider adjusting their cards? Or does the varying nature of PC screen resolutions bring that equation back into balance?

Chobel

Member

I think the Xbox One has 2 ACEs with 4 queues.

I think it's 2 ACEs with 8 queues each, I remember Kidbeta saying that.

Finally caught up on this thread. It's kind of crazy how it seems like SenjutsuSage and I have actually been saying very similar things, just with slight variations in phrasing that cause a huge change in interpretation. This info doesn't really change what we know about the PS4 power or architecture but does make it clear that Sony is very much trying to push GPU compute with devs. Thanks for the info, SS. Sorry for kind of jumping on you earlier. Also thanks to Gofreak, he explained some stuff that I never knew before. Been a while since I've learned something new in one of these threads.

thread twist recap: ps4's dick is bigger, but, according to ss who knows someone who knows sony, it'll not increase linearly beyond a certain length, unfortunately taking a hard knee-bend to the right and resulting in difficulties with balance.

cerny points out possibilities for avoiding such woes with a suggestion to maybe consider a little creative ballsack-action, but that's not for everyone and it might take a few years for people to come around to the idea.

This post is beautiful.

LiquidMetal14

hide your water-based mammals

You are saying a whole lotta nothing.

Fair points even though it's not the whole story. It just isn't worth going back and forth with this guy.

JonathanPower

Member

Do AMD and nvidia do this kind of profiling of current game engines? If so, I wonder why they continue to make GPUs with these kinds of ratios - potent, with even more CUs than PS4 but similar ROPs and bandwidth. Shouldn't they be seeing similar drop offs in performance and consider adjusting their cards? Or does the varying nature of PC screen resolutions bring that equation back into balance?

I think you answered your own question. If the bandwidth is similar, then you can only take advantage of a similar number of ROP units.

This stuff is hilarious.

5 billion transistors!

Power of the cloud, your console is 4x more powerful!

Wait no, specs don't matter!

Wait no, they matter, we're doing all these last minute tweaks for 6% increase here and there. That's gonna make a huge difference! Forget the two previous PR lines!

Diminishing returns! Objectively better HW is not really better. Let me show you this by randomly adding BW numbers!

We got balance, baby!

What a trainwreck.
