There are a lot of benefits, and most of them follow from each other...

+ More performance at the same power target

+ Cheaper PSU

+ Less power consumption (duh)

+ Less heat

+ Cheaper cooling system for the GPU and the build

+ Cheaper GPU parts

+ Fewer issues with clock throttling.

-The first one is definitely important on the engineering side of things, but the consumer doesn't need to worry about that.

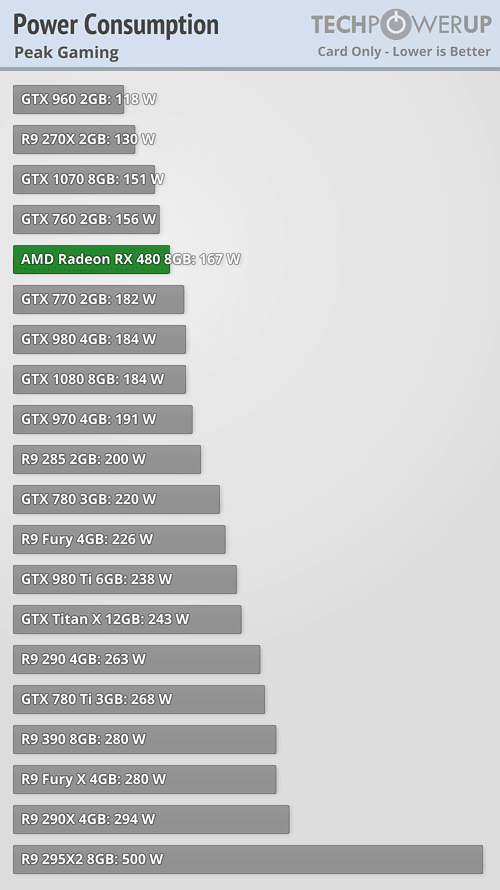

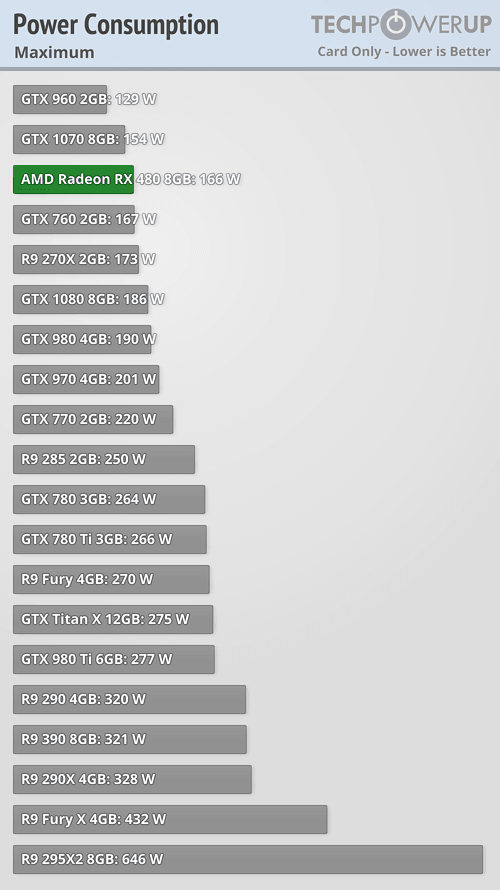

-You can get PSUs (550W) that can run practically any setup for about $35. You could go a bit cheaper if you get a 1060 over a 480, but only by about $5, and you'd get fewer watts per dollar. I have an OC'ed 4790K/Titan XP system and power draw doesn't exceed 600W at the wall. My PSU is 80+ Bronze, so after efficiency losses the actual load on it is more in line with 500W. I picked up that particular 750W PSU for $50 too.
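For anyone who wants to check the wall-draw math, here's a rough sketch. The 600W figure is from my system above; the 82-85% efficiency range is my assumption for a typical 80+ Bronze unit, and the function name is just made up for illustration.

```python
def dc_load_watts(wall_watts: float, efficiency: float) -> float:
    """Estimate what the PSU actually delivers to the components,
    given the AC draw measured at the wall and the PSU efficiency."""
    return wall_watts * efficiency


wall_draw = 600.0  # peak draw at the wall, from the post

# Assumed efficiency range for an 80+ Bronze unit (not a measured value)
for eff in (0.82, 0.85):
    load = dc_load_watts(wall_draw, eff)
    print(f"{eff:.0%} efficient: ~{load:.0f} W delivered to the components")
```

Either way it lands right around 500W, which is comfortably inside what a 550W unit is rated to deliver, never mind a 750W one.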

-Less power consumption, yes, but the difference is insignificant next to everything else a normal house has running, and it matters even less once you look at the power consumption of the entire system.

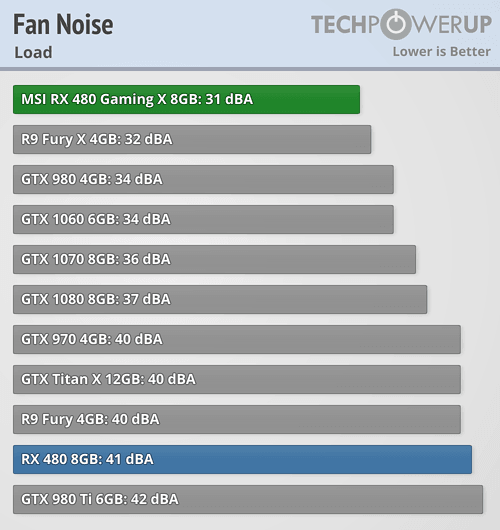

-It's true that there is less heat, but not by a substantial amount. I can't say I can tell the difference between a Titan XP, an R9 290, a GTX 980 and 2x670s as far as heat goes. Noise is more important IMO, and that depends on the cooling. There are plenty of quiet designs on both sides.

-You won't need extra cooling capacity for an AMD card over an NV one.

-Important for the manufacturer, but the end user doesn't have to worry about anything except what it's costing them, and there are lots of great deals on AMD cards.

-Depends entirely on the cooling solution. Rarely a problem with any aftermarket card.