lightchris

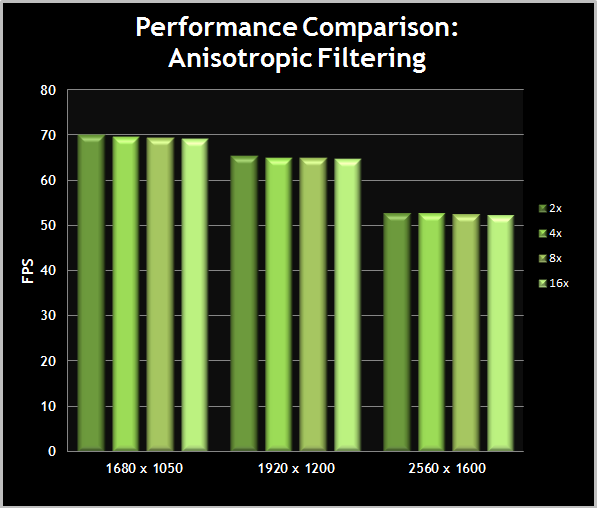

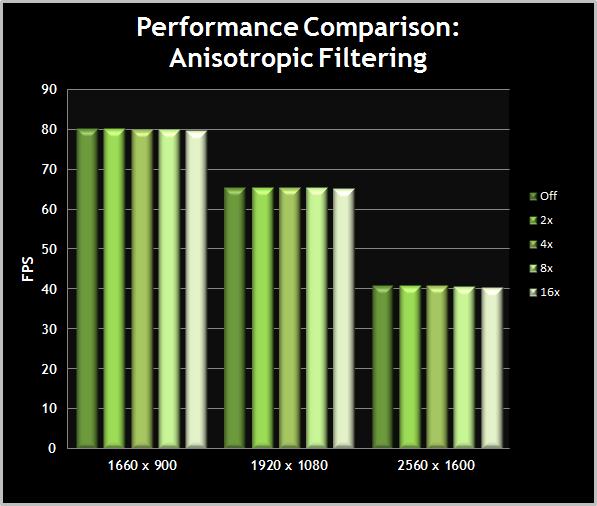

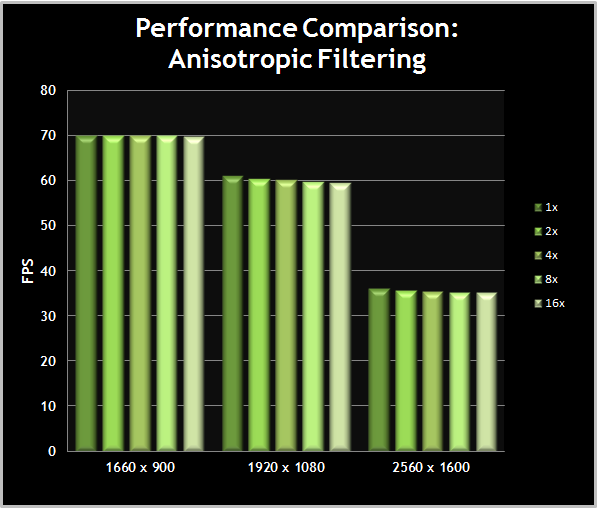

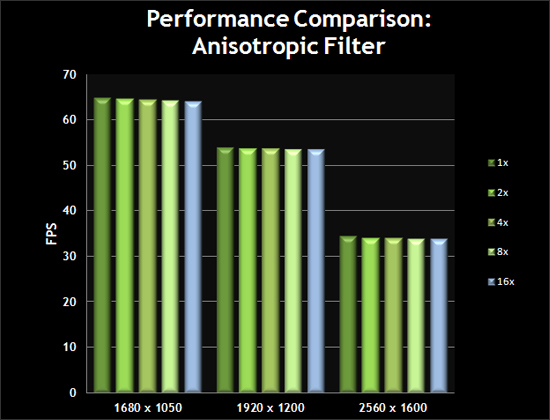

In practice this is the performance hit we are talking about:

Max Payne 3:

Borderlands 2:

Deus Ex: Human Revolution:

Even Crysis 3:

And Battlefield 3:

It is absolutely negligible.

On which graphics card though?

It really depends on the circumstances. I just did a quick check in the WRC 3 demo on my GTX 460. Results:

No AF:

16xAF:

That's 13% faster without AF. That doesn't mean it isn't worth it, but as I said, depending on the situation it can be a noticeable performance hit.

Doesn't mean it isn't worth it, of course.

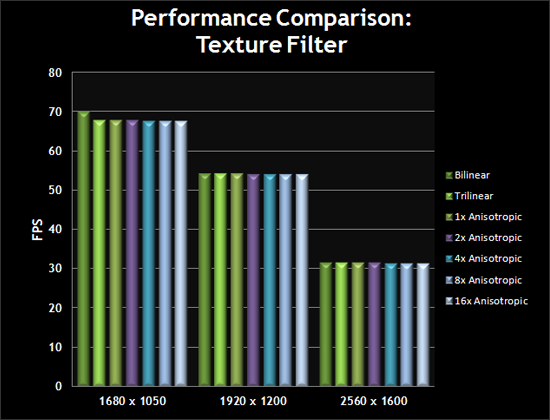

Isn't the trilinear one more realistic?

Ehh... no. Take a look out of the window.