MightySquirrel

Banned

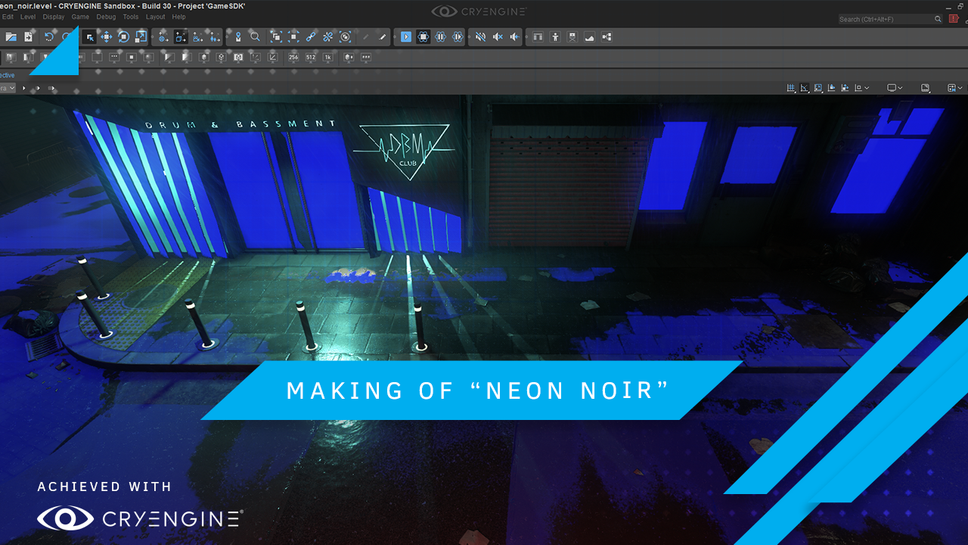

Impressive considering it's running on V56. Crytek still got it.

Edit: Seems like this post came out wrong. This is not meant as a negative post, I'm just trying to add some context and explain why Crytek is able to achieve this on normal hardware.

It's a tech demo, just like the RTX Star Wars demo. Neither is really possible in-game though, even less so without raytracing hardware.

From my understanding, dedicated hardware was never 'necessary.' It just makes it more efficient.

> I could've sworn I heard Alex from Digital Foundry say Metro on ultra DXR is 1 ray per pixel.

Very unlikely, even with fancy reconstruction algos. In order for a pixel to get (1) a shadow from a single light source, and (2) reflections, you need at the very least one shadow ray and one reflective ray.

Metro doesn't use it for reflections though just global illumination - so shadows and light bounce.

I’m waiting for the lighting in games to look like this. With Vega56 used here, hopefully it’s a hint that this will be what we can expect from next gen.

> We are looking at the thing that will stop the push for 60fps on next gen consoles.
>
> Raytracing can stay as a demo, 60fps is more important.

Don't be so cut and dry. There will certainly be developers that want to push visual fidelity, with higher poly models, high res textures, more realistic shaders, more detailed animations, and realtime raytracing, with an aim for 30fps/unlocked fps at 4K.

> Metro doesn't use it for reflections though just global illumination - so shadows and light bounce.

If it's just shadows from a single source then 1 ray/pixel could do. Add GI bounces and you'd be waiting for a while until that image converged with 1 ray/pixel/frame. So again, chances for 1 ray/pixel/frame are very slim.
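To put rough numbers on the rays-per-pixel argument, here is a minimal Python sketch of the ray budget arithmetic. The resolution and frame rate are hypothetical targets picked for illustration, not figures from Metro or the Crytek demo, and `rays_per_second` is just a helper name made up for this example.

```python
def rays_per_second(width, height, rays_per_pixel, fps):
    """Ray budget: pixels x rays per pixel x frames per second."""
    return width * height * rays_per_pixel * fps

# Hypothetical target: 4K at 30 fps (an assumption, not a quoted spec)
shadow_only = rays_per_second(3840, 2160, 1, 30)            # one shadow ray per pixel
shadow_and_reflection = rays_per_second(3840, 2160, 2, 30)  # plus one reflection ray

print(f"{shadow_only / 1e6:.1f} M rays/s")            # 248.8 M rays/s
print(f"{shadow_and_reflection / 1e6:.1f} M rays/s")  # 497.7 M rays/s
```

Every extra effect that needs its own ray per pixel scales the total linearly, which is why the count per pixel matters so much in this discussion.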

The programmer on Metro said it's "up to one ray per pixel on ultra", and half the rays on high settings.

https://www.eurogamer.net/articles/digitalfoundry-2019-metro-exodus-tech-interview

> The problem is that when you try to bring your number of samples right down, sometimes to one or less per pixel, you can really see the noise. So that is why we have a denoising TAA. Any individual frame will look very noisy, but when you accumulate information over a few frames and denoise as you go then you can build up the coverage you require.

Ah, thanks for the link.

So basically they rely on a very aggressive TAA to handle extreme under-sampling. Even though in such situations the AA would make sure no noise is visible, sample starvation at sharp camera movements/turn-arounds should manifest as spots and areas changing luma abruptly over a few frames.

That said, we don't have such a rigid rpp limitation with our in-house RTX tech, but we still rely on TAA, as we don't do hybrid.

ed: BTW, in the video to the article they mention they do 3 rpp, and the undersampling effects I'm referring to are still visible.

Well that's confusing, is it 1 rpp or 3?

There's a chance they do 1/sub-1 rpp at 4K (checkered) and 3 rpp at HD. Also, as I mentioned, RTX stack support evolved with time (along with devs' understanding of the tech), and many devs managed to squeeze more rpp with time.

I noticed the noise in Alex's video too btw even at ultra
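For anyone wondering what "accumulate information over a few frames" looks like in practice, here is a minimal Python sketch for a single pixel. It assumes a plain exponential moving average as the blend, which is a common way to describe temporal accumulation but is not confirmed to be what Metro does. The noise level, blend factor `alpha` and the function names are all made up for illustration.

```python
import random
import statistics

def accumulate(history, sample, alpha=0.1):
    """Blend the new noisy sample into the running history (exponential moving average)."""
    return (1.0 - alpha) * history + alpha * sample

def simulate(frames=200, true_value=0.5, noise=0.3, alpha=0.1, seed=1):
    """Feed one noisy sample per frame into the accumulator for a single pixel."""
    rng = random.Random(seed)
    history = rng.gauss(true_value, noise)  # first frame: nothing to blend with yet
    raw, filtered = [], []
    for _ in range(frames):
        sample = rng.gauss(true_value, noise)  # this frame's single noisy sample
        history = accumulate(history, sample, alpha)
        raw.append(sample)
        filtered.append(history)
    return raw, filtered

raw, filtered = simulate()
# Compare the spread of raw samples against the accumulated result
print(statistics.pstdev(raw[50:]), statistics.pstdev(filtered[50:]))
```

The accumulated value is much steadier than any single frame, but only while the history stays valid. When the camera turns sharply the history has to be thrown away, so the pixel is back to a few raw samples for a while, which would fit the luma flicker described above.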

One thing to keep in mind is that Crytek's implementation is supposedly SVOGI (Sparse Voxel Octree Global Illumination) based, which is a lot cheaper than the ray based raytracing that we see with NVIDIA's RTX. That would explain how they got this running on a Vega 56 without dedicated hardware.

> Interesting article about this, I didn't read it all but some of the pictures lead to questions.
>
> https://www.techquila.co.in/cryteks-ray-tracing-demo-fake/

Engine providers have no use for misrepresenting their engines' tech -- their engines' customers will discover any shenanigans sooner rather than later.

RTX doesn't do full ray tracing either. So... What's the big deal? Seems to be either nVidia propaganda, or someone not knowing what they're talking about.

> 60fps as standard

lol give it up already, every gen is the same.

> One thing to keep in mind is that Crytek's implementation is supposedly SVOGI (Sparse Voxel Octree Global Illumination) based, which is a lot cheaper than the ray based raytracing that we see with NVIDIA's RTX.

Hey! That's what I thought too! I expect more of these types of GI techniques to take off next gen, not raytracing.

> lol give it up already, every gen is the same.
>
> There's no such thing as a 60 fps standard!

If you want some reading material...

> Hey! That's what I thought too! I expect more of these types of GI techniques to take off next gen, not raytracing.

So this is a rasterization technique? Can somebody who knows explain?

> So more of a raster technique?

I'm not sure what exactly they did in the recent demo. But basically, whether it's cone tracing or voxel tracing, it is a form of ray tracing, but you can see it as the equivalent of lowering the resolution, because native ray tracing uses a ray (or multiple rays) for every single pixel. By using voxels, you're basically using ray tracing on 9 pixels at the same time, for example, which really lowers the load. This is a viable solution until hardware catches up to full ray tracing.
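The "9 pixels at the same time" example is easy to check with a few lines of Python. This is only the counting argument from the post, assuming one trace shared by each 3x3 group of pixels. It is not a model of how SVOGI or voxel cone tracing actually work internally, and `traces_per_frame` is a hypothetical helper written for this example.

```python
def traces_per_frame(width, height, block=1):
    """Number of traces if each block x block group of pixels shares a single trace."""
    blocks_x = -(-width // block)   # ceiling division, so partial blocks still count
    blocks_y = -(-height // block)
    return blocks_x * blocks_y

per_pixel = traces_per_frame(1920, 1080)        # one trace for every pixel
shared = traces_per_frame(1920, 1080, block=3)  # one trace per 3x3 group

print(per_pixel, shared, per_pixel / shared)    # 2073600 230400 9.0
```

So the saving in this toy case is a flat 9x, at the cost of lighting detail that is coarser than the pixel grid.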