Seriously? I'm not going to rehash it all in detail, but if you've been paying attention (it may not have affected you personally, which is great):

Spider-Man: Poor CPU utilization and heavily CPU-bound (especially with RT on), VRAM limitations/perf degradation

Atomic Heart: Stutter, crashing, FPS drops (at launch)

Returnal: Poor CPU utilization and heavily CPU-bound (especially with RT on), streaming stutters when loading (again, at launch)

I'm so confused. So PC can offer a "far superior" experience compared to consoles, but having to wait anywhere from a few minutes to an hour to precompile shaders before startup is somehow a superior experience to just loading the game and playing? (And having to redo that every time you make a GPU driver or HW change, BTW.) Then you say APIs and drivers have never been better...but does that mean they are easier to develop for than console, even in their current state? Going from "pain in the ass" to less of a "pain in the ass" is still a..."Pain in the Ass". Also, you do realize that much of the pain with shader compilation stutter is due to how DX12 works and what the developers now have to do at the SW level, right? No, it's not just a UE4 issue.
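To make the driver/HW point concrete, here's a rough sketch of why a precompiled shader cache gets thrown away after an update. It assumes the cache is keyed on the shader source plus the GPU model and driver version, which is roughly how compiled shader caches behave, but the names here (`Environment`, `ShaderCache`, `cache_key`) and the version strings are made up for illustration and aren't any real engine's or driver's API.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    """Hypothetical description of the machine the shaders were compiled for."""
    gpu_model: str
    driver_version: str


def cache_key(shader_source: str, env: Environment) -> str:
    """Hash the shader together with the GPU and driver it was compiled against."""
    h = hashlib.sha256()
    for part in (shader_source, env.gpu_model, env.driver_version):
        h.update(part.encode("utf-8"))
        h.update(b"\0")  # separator so adjacent fields cannot run together
    return h.hexdigest()


class ShaderCache:
    """Toy in-memory cache that counts how often a (simulated) compile happens."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.compiles = 0

    def get(self, shader_source: str, env: Environment) -> str:
        key = cache_key(shader_source, env)
        if key not in self._store:
            # Cache miss: this is where the expensive compile would run.
            self.compiles += 1
            self._store[key] = f"binary-for-{key[:8]}"
        return self._store[key]
```

Same shader, same GPU, new driver version means a different key, so every entry misses and the whole precompile step runs again. On a console there's one fixed target, so that key effectively never changes for the player.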

I never said console games don't have their issues. S**t, making video games is difficult...PERIOD. But you're missing my point. Using The Last of Us Part I as a recent example:

To this point, the legacy of The Last of Us was simply that it was an exceptional game that many put up as one of the best gaming experiences ever! The legacy of the game was as a shining example of what the video game medium is capable of, and its quality helped define the reputations of both PlayStation Studios and Naughty Dog as a whole. It even resulted in one of the best shows in HBO history. Keep in mind that this game has literally released on 3 console generations to this point, with various amounts of remastering and remaking of the original game...and while there of course were patches to improve things, the discussion and legacy of the game never wavered from it just being an outstanding game. Now with the PC port, what is the discussion and legacy? It's about how it doesn't run on this and that SKU. It's about how poor of a port it is, how badly it performs, how it's crashing for many users, how it can't hit this resolution and FPS on this system, etc.

No matter what they do from this point on to fix it, these impressions will forever stick when thinking back to the PC version of this game. The legacy is now a bunch of silly memes with super wet characters and black artifacts all over them. I haven't seen a single forum thread or media article discussing just how great a game (at its core) The Last of Us really is with this PC release. Why? (Here it is...) Because oftentimes, the nature of the PC and the recent issues that come with it have made it increasingly difficult for gamers to just enjoy the games they are playing. Admittedly, it's hard to just enjoy the game when you have glitching, stuttering, inconsistent performance, crashing, and other issues you have to debug just to get it to work properly. Sure, not EVERYONE has these issues, but that's the whole point: there isn't just a single PC to consider. Hell, there isn't even a single series or tier of PCs to consider, with HW spanning a decade or more at times.

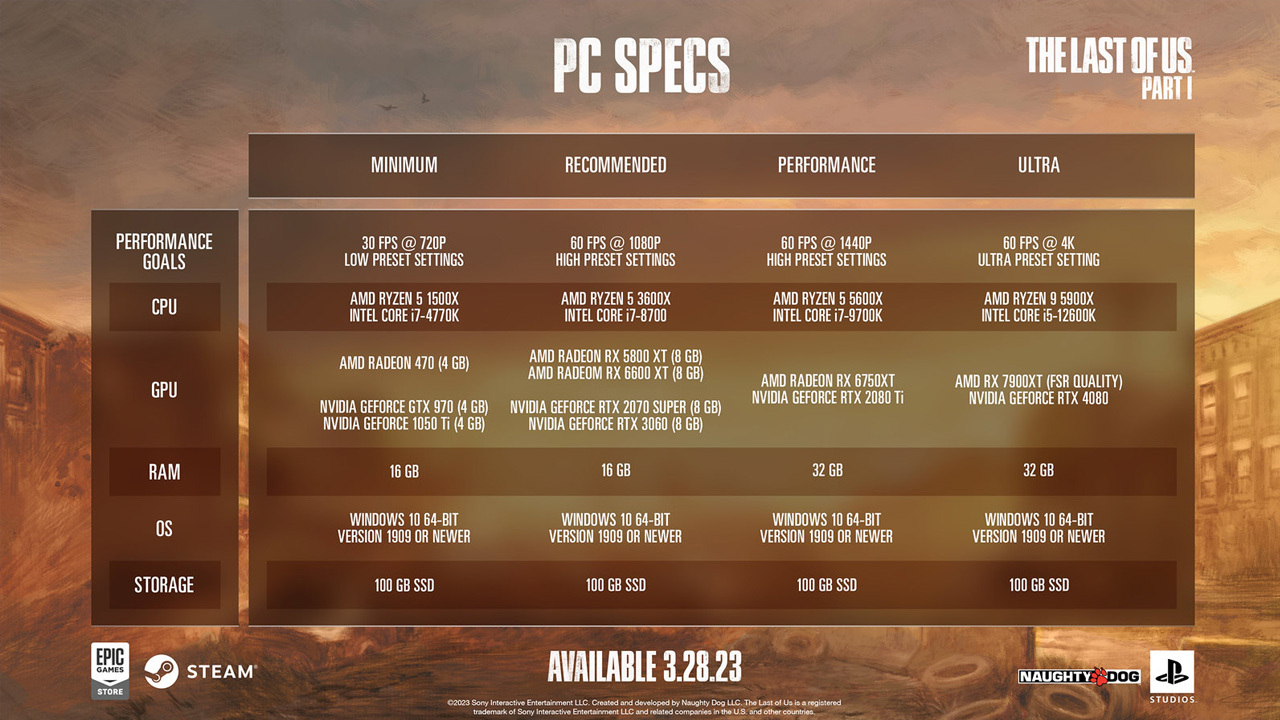

Everyone loves to act like "The PC" is just this single monolithic beast of a computer...how many of you realize that most dev studios barely have RTX 40-series cards to test their games on (never mind the RX 7000 series)? There may be 1-3 Ada cards at a studio, typically reserved for some key devs on the team and not the QA lab (for example). At the same time, there may not be any non-RTX cards or DX9 cards or Windows 10 systems available to test on either. There's so much trust and reliance on so many external entities doing their job properly and addressing any new bugs just to ensure a game will work correctly. You hope Microsoft does its job with the Windows OS and the commands your game relies on. You hope that Nvidia's and AMD's drivers function as expected for the full range of SKUs out in the market (beyond what you can test). You hope that Steam, Epic, Xbox, Origin, Battle.net, Uplay, etc. work properly for every user despite what they may be running on their machine. You hope that OBS, GeForce Experience, Logitech, Razer, Norton, and whatever other SW is running on the user's machine doesn't mess with the game's functionality and performance. Most importantly, you HOPE that the user will follow instructions, heed warnings, and use "common sense" to not try to do things that may break the game (e.g. attempt to run ultra settings and RT on a 5-year-old non-RT GPU with only 8 GB, or pair their old Intel 6000-series Skylake quad-core CPU with an RTX 4090 and wonder why the perf is so bad).

Yes, game development in general is a b*t**, but PC development has its own challenges, making it even worse in many ways. When you really think about it, it's literally a miracle that something as complex as a modern video game could ever ship without any egregious issues that would prevent the player from simply enjoying the game.