But the TF isn't the important thing at a dev summit. The number of CUs is, because that gives developers an indication of what resources they'll have to churn through their tasks.

It seems unlikely (if the dev leak is true) that the number of CUs would be reduced for this reason just to hit the same TF.

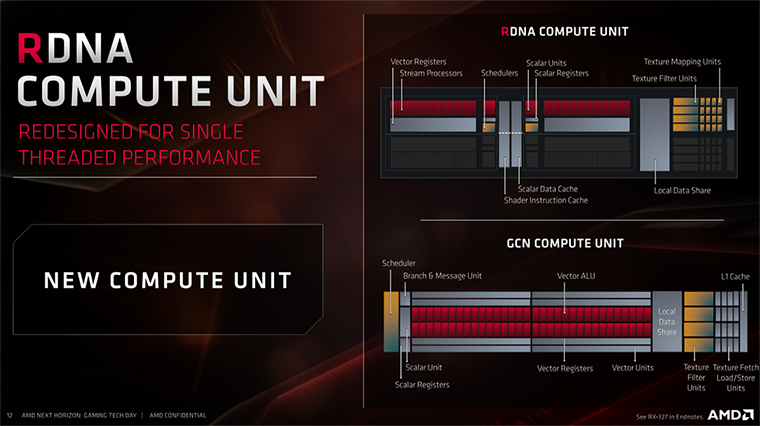

The GCN vs Navi perf difference (25%) also lines up with the dev rumours of PS5 dev kits outperforming Anaconda dev kits by 20-30%.

For the perf gap to be noticeable, we're not talking about single-digit percentages. A 25% perf gap fits the bill.

1. We have to establish whether the guy leaking this is actually a dev.

2. After that we have to see if the 56 CUs @ 1.8GHz - 13TF chip refers to the final spec or to the actual dev kits that went out with PC hardware (this would be analogous to the PS4 dev kit roadmap, where the first PC-based dev kits went out in June 2012 - see here: http://vgleaks.com/orbis-devkits-roadmaptypes/)

3. Sony and MS gave target specs to devs back in 2012, but not official documentation. With Pro and X, this happened much later. For X, dev kits were sent out less than a year before the console went out.

4. You have to question the validity of a leak that says a 13TF Navi @ 1.8GHz can be expected in a console, because EVERYTHING we know about Navi tells us we shouldn't expect anything close.

5. Why 1.8GHz? In April, the 1.8GHz Gonzalo was leaked. Its decoded ID tells us it's a console SoC, connected to the codename Ariel found among Sony's chips in the PCI ID database.

It is QS sample (from ES1 in Jan) and has base Zen2 CPU clocked at 1.6GHz

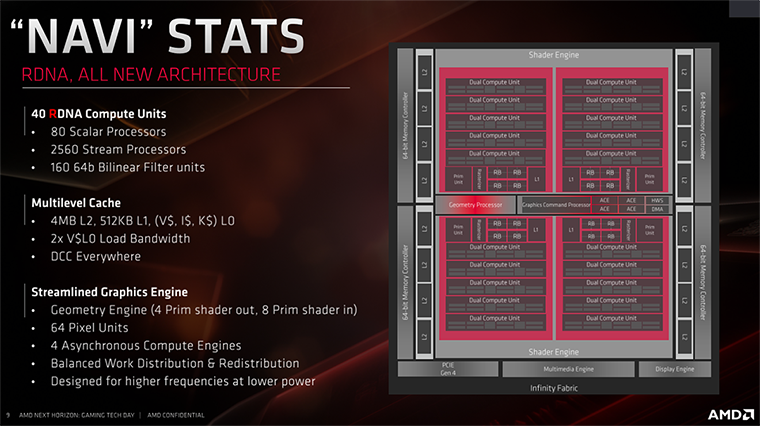

base (hmhm why 1.6GHz?) and 3.2GHz boost. Additionaly, it contsins Navi LITE GPU clocked at 1.8TF. Navi Lite? 36CUs @ 1.8GHz - 8.3TF, even that would barely make it into console IMO.
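For anyone who wants to check the TF numbers thrown around here, this is a quick sketch of the usual back-of-envelope formula. It assumes the standard 64 shaders per CU and 2 FP32 ops per shader per clock (one FMA), which holds for GCN and Navi; the function name and the labels are just made up for illustration.

```python
def fp32_tflops(cus, clock_ghz, shaders_per_cu=64, flops_per_shader_per_clock=2):
    """Peak FP32 throughput in TFLOPS.

    Assumes 64 shaders per CU and 2 FLOPs per shader per clock (one FMA).
    """
    gflops = cus * shaders_per_cu * flops_per_shader_per_clock * clock_ghz
    return gflops / 1000.0


# The two configurations discussed in this thread (labels are illustrative).
configs = [
    ("Gonzalo / Navi Lite", 36, 1.8),
    ("rumoured 56 CU chip", 56, 1.8),
]

for label, cus, clock_ghz in configs:
    print(f"{label}: {cus} CUs @ {clock_ghz}GHz = {fp32_tflops(cus, clock_ghz):.1f} TF")
```

So 36 CUs at 1.8GHz lands at about 8.3TF, and 56 CUs at the same clock at about 12.9TF, which is where the "13TF" figure comes from.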

So a 36CU Navi and Zen2 would not make for a terribly big chip on 7nm, but together with the PCB leak from Reddit, which mentions a 256-bit bus and 316mm² size, it would make complete sense for this to be Sony's SoC.

Sony has, btw, been on a "roll" of sizing down their chips since PS2, which had a 510mm² chip. Then came PS3 with 470mm², PS4 with 348mm² and finally Pro with 320mm².

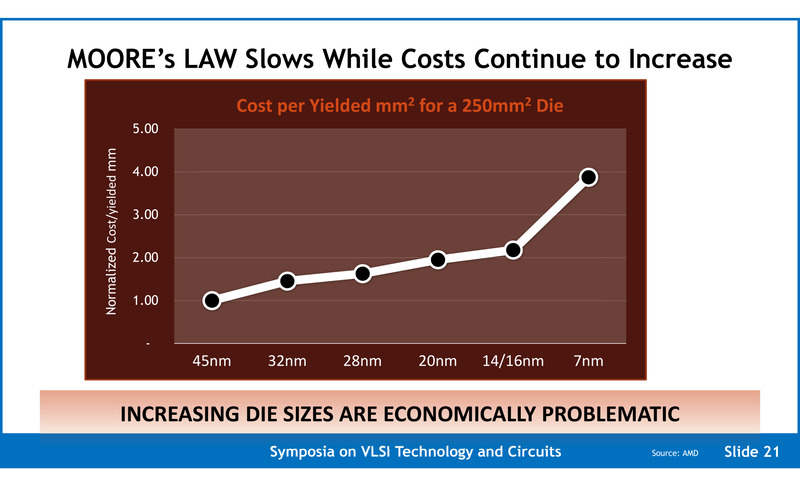

So as nodes get smaller and smaller and the price per mm² gets higher, Sony puts smaller dies in their consoles, likely due to increased costs.
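To put the shrink trend in numbers, here is a small sketch that works out the generation-to-generation change. The die sizes are the ones quoted in this post, and the last entry is the rumoured 316mm² from the Reddit PCB leak, not a confirmed figure; the names in the code are only for illustration.

```python
# Die sizes in mm² as quoted in this post; the final entry is the rumoured
# Reddit PCB leak figure, not a confirmed spec.
DIE_SIZES_MM2 = [
    ("PS2", 510),
    ("PS3", 470),
    ("PS4", 348),
    ("PS4 Pro", 320),
    ("rumoured PS5 SoC", 316),
]


def generation_changes(sizes):
    """Return (previous, current, percent change) for each consecutive pair."""
    changes = []
    for (prev_name, prev_mm2), (name, mm2) in zip(sizes, sizes[1:]):
        pct = (mm2 - prev_mm2) / prev_mm2 * 100.0
        changes.append((prev_name, name, pct))
    return changes


for prev_name, name, pct in generation_changes(DIE_SIZES_MM2):
    print(f"{prev_name} -> {name}: {pct:+.1f}%")
```

Every step comes out negative, so a 316mm² SoC would simply continue the pattern rather than break it.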

So, for me, nothing bar Gonzalo and the Reddit PCB makes any sense, technically speaking. If Sony had a 13TF GPU in PS5, you would have been hearing about it the minute they went to Wired and revealed PS5. Instead you have Cerny talking about immersion, 3D sound, SSD, CPU and RT. Nothing about a mythical GPU (and rest assured, a 13TF Navi would be biblical, probably matching a 2080 Ti).