Bankai

Member

That's the engine. It has nothing to do with the SSD.

question answered

That's the engine. It has nothing to do with the SSD.

So it could not be done on PS4 as it is, glad we agree.

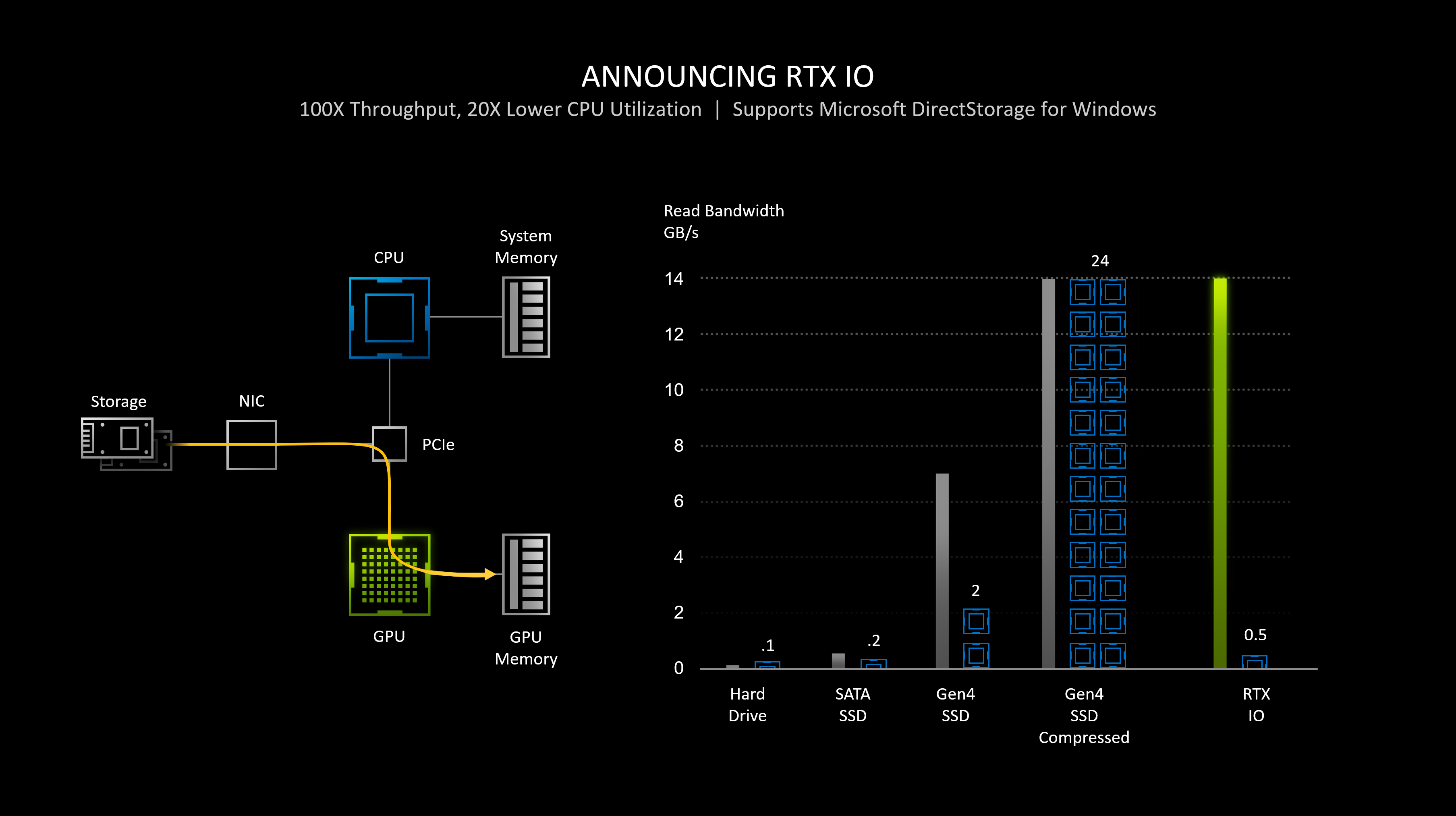

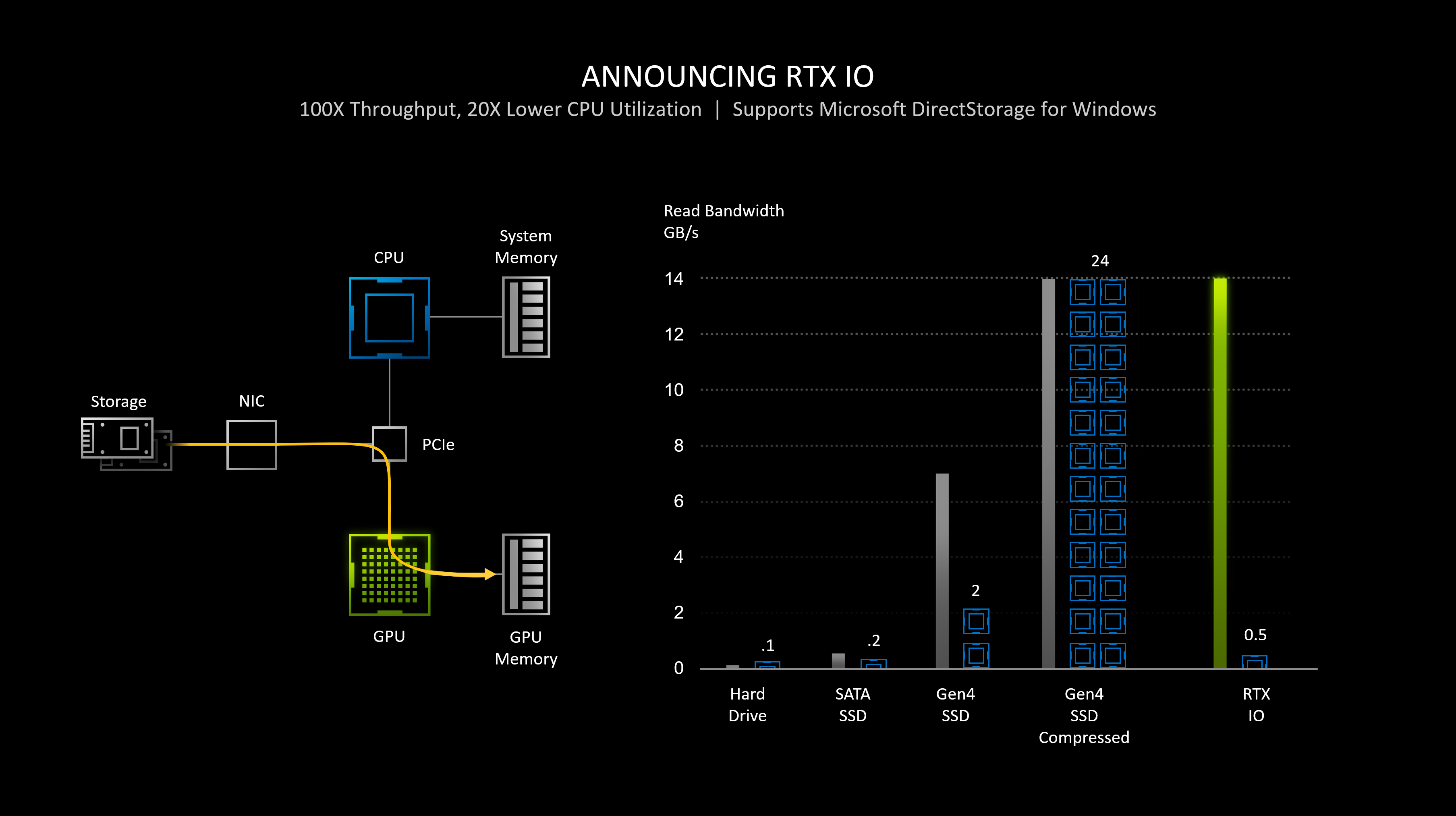

The whole point of DirectStorage and the PS5's custom I/O block is basically to alleviate strain on the CPU.

Until Devs start using GPU de/compression on PC.

Well, if they use GPU decompression, they are going to take away GPU resources, which could still potentially cause framerate drops during gameplay. Depending on the GPU headroom, those could be less impactful than what we see in Spider-Man on PC.

On PS5, I/O (done properly) takes no resources on either the CPU or the GPU.

nah, if that day ever comes I sure hope someone connects a stock PS4 HDD via a USB adaptor and runs it on that.

that's the first thing I'd do, mainly because I literally have a stock PS4 HDD connected to my PC via USB

(when I bought my PS4 Fat I instantly removed the stock HDD and replaced it with a 2TB one. I did the same with my Pro, whose stock HDD is now my Emulation drive on the Series X)

I uploaded a video in an older thread where I ran the Matrix UE5 demo on that drive... and it ran basically the same as on my internal Samsung SSD, maybe some small load stutters.

but the initial load took ages lol

play Titanfall 2's time travel level.

2 different level layouts loaded in at the same time, hit the crystal, your character gets teleported to the other version of the level.

both versions of the level use similar assets, which saves VRAM space, and the destroyed version of the level uses sparsely placed terrain at close proximity instead of the way more complex version in the intact version of it, meaning the destroyed version most likely needs way less VRAM space.

so one way you could do that is to have hidden background streaming of the needed assets from the other version every time you get close to one of the crystals.

the fact that you can't just switch any time you want makes this all way easier than if you could switch at any time like in Titanfall 2 for example.

Rift Apart can most likely have more detail in that level than it could have on a PS4 for example, but such a level can be done on last gen. it would simply need to be pared back a bit like any other game too.

smaller textures, some detail dialed back, and maybe a tiny transition animation if absolutely necessary.
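The background-streaming idea above can be sketched roughly like this. It is only a toy model, not how Titanfall 2 or Rift Apart actually do it: the `Streamer` class, the `prefetch_radius` value and the asset names are all made up for illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Streamer:
    """Toy asset streamer. Every name and number here is hypothetical."""
    prefetch_radius: float = 30.0                 # assumed trigger distance
    resident: set = field(default_factory=set)    # assets already in memory
    queued: list = field(default_factory=list)    # pending background loads

    def update(self, player_pos, crystals, other_version_assets):
        """Queue the other level version's assets once the player is near a crystal."""
        near = any(math.dist(player_pos, c) <= self.prefetch_radius for c in crystals)
        if near:
            for asset in other_version_assets:
                # Assets shared by both versions are already resident, so skip them.
                if asset not in self.resident and asset not in self.queued:
                    self.queued.append(asset)
        return near

    def pump(self, max_loads=1):
        """Simulate slow background I/O: finish at most max_loads requests per call."""
        for _ in range(min(max_loads, len(self.queued))):
            self.resident.add(self.queued.pop(0))
```

Calling `update()` every frame and `pump()` whenever the drive has bandwidth to spare means the swap only has to wait for whatever is still in `queued` when the player actually hits the crystal. A slower drive would just need a larger radius or a smaller asset set.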

DirectStorage/RTXIO would be implemented asynchronously.

Just like current de/compression is async (your CPU doesn't stop doing everything else to focus on de/compression), GPU de/comp would fit within the budget, just like the actual graphics/render queue. Part of the point of the DirectStorage API is to help devs not have to work "too hard" on figuring out how to avoid heavy penalties to their graphics/render queue.
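To illustrate the "async" point only: a minimal sketch where decompression runs on a worker while the main loop keeps ticking. This is not DirectStorage, RTX IO or GDeflate. It swaps in plain `zlib` on a CPU thread as a stand-in, and `decompress_asset` and `run_frames` are hypothetical names.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


def decompress_asset(blob: bytes) -> bytes:
    """Stand-in decompressor (zlib on a CPU thread, NOT GDeflate on a GPU)."""
    return zlib.decompress(blob)


def run_frames(blob: bytes, frame_count: int = 5):
    """Kick off decompression, keep 'rendering', then collect the result."""
    rendered = 0
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(decompress_asset, blob)  # returns immediately
        for _ in range(frame_count):
            rendered += 1  # stand-in for game update + render work
        asset = pending.result()  # only blocks if the worker is not done yet
    return asset, rendered
```

The main loop never stops to wait for the decompressor. What the sketch does not show is the cost side of the argument: the worker still uses real hardware time somewhere.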

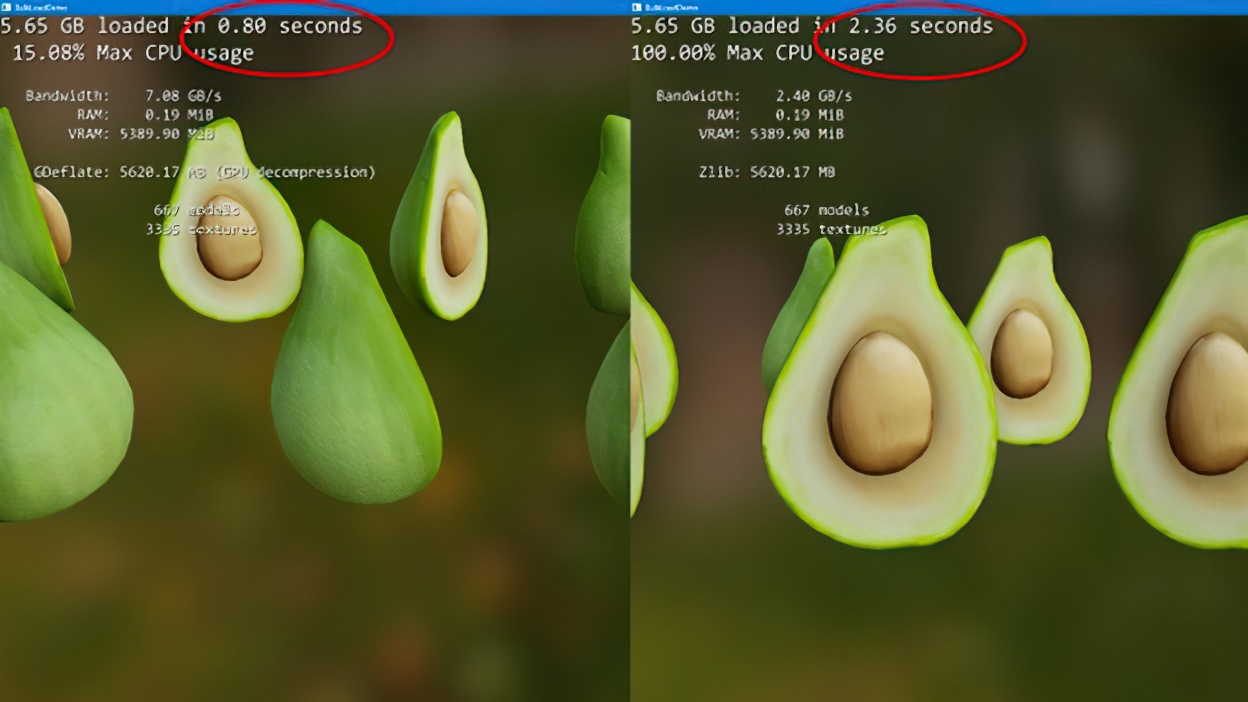

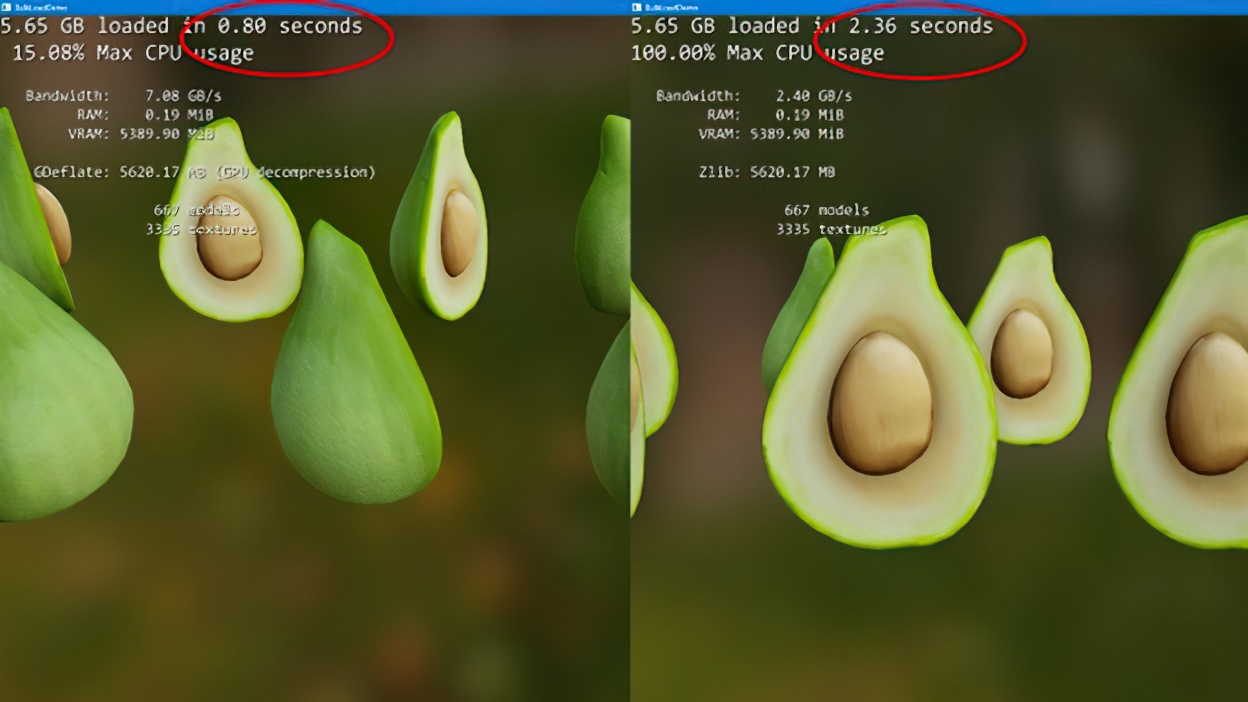

Second of all, the current build of GDeflate (the GPU decompression format in Microsoft's DirectStorage) does some 6 gigs in less than a second, nearly completely freeing the CPU in the process. Devs aren't exactly going to shut down the GPU to do that de/comp.

Devs still have to plan fallbacks for PCs that have slower drives but the hit to the GPU is minimal compared to the hit the CPU takes when it needs to do de/compression.

Third, I take it you didn't know that both AMD and Nvidia have dedicated async copy/compute capabilities?

No user can tell how much resource is being taken away from the current workload by any sort of async compute.

I agree that the GPU hit would be small, but it would still take GPU resources (and async compute has already been used pretty extensively in modern engines on consoles since the PS4 gen).

GPU decompression will be ideal for traditional static loading (or semi-static loading, like tight crawl spaces). But during gameplay in a very CPU/GPU-demanding open-world game like Spider-Man or Cyberpunk, the GPU resources used to decompress data will be subtracted from the usual image rendering (lighting, RT, textures, etc.).

That won't be a big problem on PC (people will buy bigger GPUs instead of bigger CPUs), but on consoles with much weaker GPUs (and CPUs) the resources (including async jobs) are usually already pretty much maxed out in many modern engines, so GPU decompression will still have a substantial cost.
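The headroom argument can be put as back-of-the-envelope arithmetic. This is a sketch with made-up numbers: `render_ms`, `decompress_ms` and the `overlap` fraction (how much of the decompression cost async compute manages to hide in otherwise idle GPU time) are all assumptions, not measurements from any real game or GPU.

```python
def gpu_frame_ms(render_ms: float, decompress_ms: float, overlap: float = 0.0) -> float:
    """GPU time per frame when only part of the decompression cost is hidden.

    overlap = 1.0 means async compute hides all of it, 0.0 means none of it.
    """
    if not 0.0 <= overlap <= 1.0:
        raise ValueError("overlap must be between 0 and 1")
    return render_ms + decompress_ms * (1.0 - overlap)


def drops_frame(render_ms: float, decompress_ms: float,
                overlap: float = 0.0, target_fps: int = 60) -> bool:
    """True if the frame no longer fits the frame-time budget for target_fps."""
    budget_ms = 1000.0 / target_fps
    return gpu_frame_ms(render_ms, decompress_ms, overlap) > budget_ms
```

With a 60 fps budget of about 16.7 ms, a GPU that renders in 12 ms absorbs a hypothetical 2 ms of decompression without trouble, while one already at 16 ms drops the frame unless nearly all of that cost overlaps with idle units. That is the PC-headroom versus maxed-out-console difference in one line each.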

In the future, the way to go for decompressing data (and storing it in VRAM) is clearly dedicated hardware (bypassing the CPU and GPU like on PS5), as I/O is clearly becoming the main bottleneck in big open-world games.

The video is timestamped at 28:42. This is just before Ratchet enters the portal.

28:47. Ratchet enters a disguised loading screen where you literally just ride on one rail and can't do anything else.

28:51. Ratchet leaves the rail set piece and enters another portal

28:54. Ratchet enters another disguised loading screen where you just slide down a wall

28:58. Ratchet enters another portal

29:02. Ratchet yet again enters another disguised loading screen where you have virtually no movement or control over your character whatsoever.

29:06. Ratchet enters another portal

29:08. Yet again, another disguised loading screen...

29:15. Another portal...

29:18. Another disguised loading screen where you can at least move about and fight but you're placed onto a puny little boat fighting against 4 pirates

https://youtu.be/beaH_CCw-vA?t=1767

29:27. Fight ends, cutscene begins.

https://youtu.be/beaH_CCw-vA?t=1941

32:21. Finally you are free and not in a scripted on rails set piece anymore. The game has finally loaded a level.
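For anyone checking the links against the timeline above: the `t=` value in a YouTube URL is just seconds from the start. A quick sketch of the conversion (the helper names are made up):

```python
def to_clock(seconds: int) -> str:
    """Convert a t= value in seconds to the m:ss form used in the timeline."""
    minutes, secs = divmod(seconds, 60)
    return f"{minutes}:{secs:02d}"


def to_seconds(clock: str) -> int:
    """Convert an m:ss timestamp back to a t= value in seconds."""
    minutes, secs = clock.split(":")
    return int(minutes) * 60 + int(secs)
```

`to_clock(1767)` gives `29:27` and `to_clock(1941)` gives `32:21`, so both links line up with the entries they sit next to.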

The whole point of DirectStorage and the PS5's custom I/O block is basically to alleviate strain on the CPU.

Until Devs start using GPU de/compression on PC.

And what if devs don't take advantage of it? That's my point: doesn't it then become CPU-related?

What are you talking about, man?

You are aware this is a different thread than the one talking about the PC version of Spider-Man, right?

Multi-quote so I can at least follow your train of thought. You are randomly saying shit, talking about RTX 2060s and RTX 3070s and CPU-related issues on consoles, tasks being offloaded to other parts. What the fuck, man?

Do you mean console devs not using the PS5's I/O block and the XSX's DirectStorage decomp?

If the devs are already within the budget of their scope, you can't force them to do anything.

If they aren't dropping frames due to CPU decomp, then that's that: they will use CPU decomp.

If a dev doesn't mind a loading screen... guess what, we get a loading screen.

If an engine currently can't maximize the I/O of current-gen consoles but a dev still forces super-high-speed sections, pop-in is likely to happen.

It is very much possible to actually outrun an engine.

Makes sense.

Yes, which is a (probably THE) reason why we still see pretty long load times in games that haven't been made to take advantage of the new APIs (just like PS4 games running on PS5).

Games like Demon's Souls and Miles Morales show what happens when you DO take advantage of that stuff (2 second loading fade-outs).

Various Sony devs and 3rd party shills all kept jerking their dicks off over how it's going to be the biggest gaming leap since 3D and revolutionise game design completely. So far all we've seen is fast loading times.

There was an element of fanboys trying to use the PS5 SSD as some sort of graphics equaliser over the XSX's higher specs.

I blame people vastly overhyping the SSD.