


Quantum Break PC performance thread

- Thread starter GroinShooter


dr_rus

Member

Guys, I still can't play this at 3440x1440, even with an overclocked Pascal Titan X.

What settings should I turn down to get some frames without it affecting the quality too much? I cannot stand the Vaseline-smeared upscaling option.

Start with Volumetric Lighting.

LukasTaves

Member

XONE - 1.3 TFLOPS

Fury X - 8.6 TFLOPS (6.6x faster, not counting the massively faster CPU in PCs).

What about memory and bandwidth? The game has some heavy buffers for all the lighting, and their size is directly tied to the resolution.

The fact that performance on PC only falls short of expectations at higher resolutions and quality settings is pretty telling: it's not a bad port, just a demanding game. Especially since, in terms of memory and bandwidth, the improvement has been far more modest than in FLOPS.
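The 6.6x figure above is just the ratio of the two peak FP32 numbers. A minimal sketch of that arithmetic, using only the TFLOPS values quoted in the post; note that this ratio says nothing about memory capacity or bandwidth, which is exactly the objection raised in reply:

```python
# Peak-compute comparison using the TFLOPS figures quoted in the thread.
# Peak FLOPS ignores memory bandwidth, CPU, and API overhead entirely.

XONE_TFLOPS = 1.3
FURY_X_TFLOPS = 8.6


def compute_ratio(faster_tflops: float, slower_tflops: float) -> float:
    """Return how many times more peak FP32 throughput the faster GPU has."""
    return faster_tflops / slower_tflops


ratio = compute_ratio(FURY_X_TFLOPS, XONE_TFLOPS)
print(f"Fury X vs Xbox One peak compute: {ratio:.1f}x")
```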

Kikorin

Member

Started the game last night on my new PC and I have to say it's awesome. I was out of PC gaming for so long, but now I've built a powerful PC for work and obviously I'll use it for gaming too.

I have a GTX 1080; I tried 1080p with everything on max and it runs at 60fps. After a loooong time of console gaming, this is a huge jump.

I have a 4K TV anyway, so what do you think? Should I try a higher resolution with lower details, or is 60fps with everything maxed at 1080p better?


sertopico

Member

Guys, I still can't play this at 3440x1440, even with an overclocked Pascal Titan X.

What settings should I turn down to get some frames without it affecting the quality too much? I cannot stand the Vaseline-smeared upscaling option.

As written above, set volumetric lighting to medium (you can gain up to 30 fps), then try lowering effects quality (though in my opinion the difference between those two presets is quite noticeable), then shadow quality and antialiasing (10-20 fps more when both are lowered).

Start with Volumetric Lighting.

As written above, set volumetric lighting to medium (you can gain up to 30 fps), then try lowering effects quality (though in my opinion the difference between those two presets is quite noticeable), then shadow quality and antialiasing (10-20 fps more when both are lowered).

Thanks!

Setting Volumetric Lighting and Screen Space Reflections to medium lets me keep everything else at max without upscaling at 3440x1440 and still play smoothly. FPS is still all over the place, but the minimum is now high enough and G-Sync smooths out the inconsistencies.

I have a 4K TV anyway, so what do you think? Should I try a higher resolution with lower details, or is 60fps with everything maxed at 1080p better?

That depends on what you prefer. I'd stick to 1080p, especially since it upscales nicely to 4K without losing quality.

dr_rus

Member

What about memory and bandwidth? The game has some heavy buffers for all the lighting, and their size is directly tied to the resolution.

The fact that performance on PC only falls short of expectations at higher resolutions and quality settings is pretty telling: it's not a bad port, just a demanding game. Especially since, in terms of memory and bandwidth, the improvement has been far more modest than in FLOPS.

If an approach works on XBO but produces subpar results (in both performance and quality) on PC h/w when scaled up, then it is a bad approach for the PC version of the renderer, and thus by definition a bad port. QB runs way worse than it should for the graphics it outputs on high and ultra settings, and even on medium in the case of NV h/w. I also find it rather strange that there's any performance change at all when modifying the geometric detail setting on my 980 Ti, as I can't see how GM200 isn't enough for QB's geometry. It is a badly ported console engine.

Inspectah_Deck

Member

Now I wanted to compare FPS before and after the Anniversary Update, because of this:


Faster D3D12 runtime

Draw and Dispatch APIs are up to ~10% faster on CPU. Developers will see this performance gain without any code change.

But it seems like the Dxtory OSD no longer works in QB.

Also, QB has been less stable since the Anniversary Update; I've already had 3 crashes, compared to only one before.

effects quality (but the difference between the two presets is quite noticeable in my opinion)

Yes, effects quality on high cost me around 20fps in the bridge level.

But on the other hand, for me, effects on high was too much in this level.

There was so much going on that I found it too straining on the eyes; normal looked better IMO (not kidding).

I have a 4K TV anyway, so what do you think? Should I try a higher resolution with lower details, or is 60fps with everything maxed at 1080p better?

Go 4K with medium settings, AA off, textures on ultra, geometry on high and upscaling on.

That will look glorious and I think the 1080 will be able to handle it.

I think it will look more glorious with almost all the graphical settings maxed out in 1080p rather than with everything on medium in 4K.

I'd agree with you in most games, but in this there is barely any difference between medium and high whereas 4 times the res will definitely be noticeable.

Inspectah_Deck

Member

I'd agree with you in most games, but in this there is barely any difference between medium and high whereas 4 times the res will definitely be noticeable.

This!

Ok, Dxtory now works again with the latest build (2.0.135).

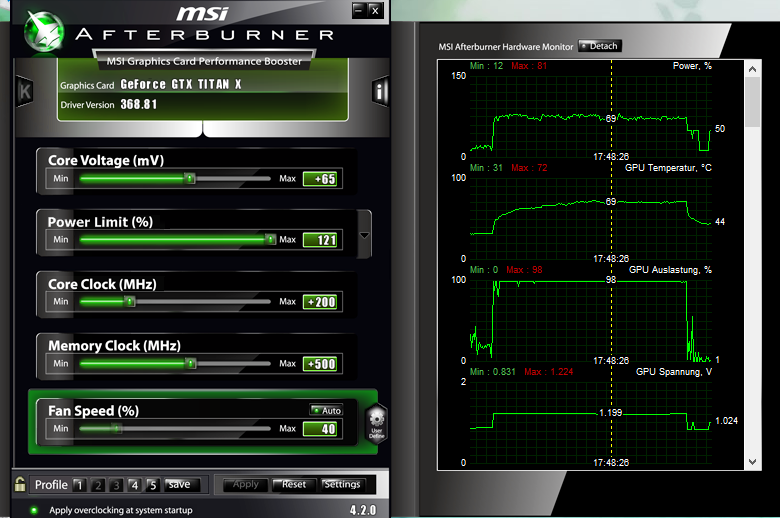

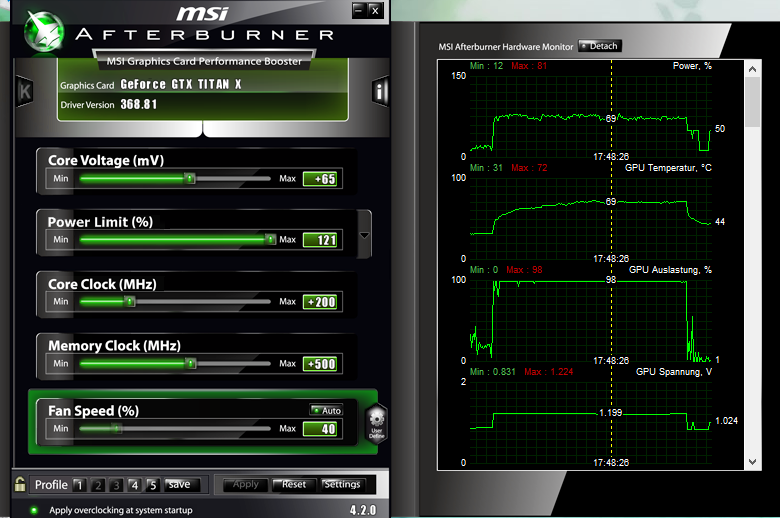

Before anniversary update:

After anniversary update

One FPS is one FPS I guess.

Need to test some other areas when I have time.

FunkyDealer

Banned

Is this the new Crysis?

icecold1983

Member

Yeah, comparing a Titan X/1080 to the GPU in the XB, I don't see how anyone can say this is a well-optimized port given the above performance.

Alethebeer

Member

I keep getting a mini freeze every couple of minutes with a GTX 1070, i7 6700 and 16GB of RAM. What could it be?

Alethebeer

Member

What is a "mini freeze"? It may be a driver version that QB does not like, or an after-effect of the Anniversary Update (if you installed it).

The screen freezes for about 0.5 seconds, and the frames that should fall within that 0.5 seconds are just skipped. E.g. if I'm running in a straight line, the screen freezes at point A, but at the end of the freeze I'm not starting from point A but from point B, which is a bit farther along.

Hope I was clear, I'm Italian :/

PS: No Anniversary Update, and I have the latest drivers.

LukasTaves

Member

Yeah, comparing a Titan X/1080 to the GPU in the XB, I don't see how anyone can say this is a well-optimized port given the above performance.

4 times the screen resolution at almost twice the framerate for the Titan X, and at higher settings, which involve volumetric data.

The resolution/framerate combination works out to close to 8 times the performance, not taking into account the higher settings, which make it an even bigger jump... Dunno what you were expecting.

But it does not scale linearly. If you have a game that runs at (say) 30fps in 4K, it almost never runs at 120fps in 1080p, usually less. In other words, increasing the resolution 4 times does not drop the framerate to 1/4th of its previous value.

https://www.techpowerup.com/reviews/NVIDIA/Titan_X_Pascal/8.html

108 fps in 1080p - according to your logic fps in 4K should be 108/4=27. But it's actually 67, over twice as much.

Or if we reverse it, if a game does 67 fps in 4K, then by your logic it should do 268 in 1080p - but it actually does 108.

Maybe Syndicate is not the best example, so here's one of the best-optimized games I've ever played, BF4:

https://www.techpowerup.com/reviews/NVIDIA/Titan_X_Pascal/10.html

1080p - 193fps, so in 4K it "should" be 48, but it's not, it's 66.
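The naive "divide by the pixel ratio" estimate and the measured numbers from the post can be compared directly. The sketch below also fits a simple two-term model, frame time = fixed cost + per-pixel cost x pixels, through each pair of measurements. That model is an assumption made here for illustration (real frame costs do not split this cleanly), but it shows how a large resolution-independent share of each frame keeps 4K well above the 1/4 estimate. The fps values are only the ones quoted above from TechPowerUp's Titan X (Pascal) review.

```python
# Compare a naive "fps scales with 1/pixels" estimate against measured numbers,
# then fit a hypothetical two-term frame-time model through each measurement pair.

PIXELS_1080P = 1920 * 1080
PIXELS_4K = 3840 * 2160

# (fps at 1080p, fps at 4K) as quoted in the post from TechPowerUp's review
MEASURED = {
    "Syndicate": (108, 67),
    "BF4": (193, 66),
}


def naive_fps(fps: float, from_pixels: int, to_pixels: int) -> float:
    """Assume frame time is purely proportional to pixel count."""
    return fps * from_pixels / to_pixels


def fit_frame_time(fps_a: float, pixels_a: int, fps_b: float, pixels_b: int):
    """Fit frame_ms = fixed_ms + per_pixel_ms * pixels through two points."""
    t_a = 1000.0 / fps_a
    t_b = 1000.0 / fps_b
    per_pixel_ms = (t_b - t_a) / (pixels_b - pixels_a)
    fixed_ms = t_a - per_pixel_ms * pixels_a
    return fixed_ms, per_pixel_ms


for game, (fps_1080p, fps_4k) in MEASURED.items():
    estimate = naive_fps(fps_1080p, PIXELS_1080P, PIXELS_4K)
    fixed_ms, _ = fit_frame_time(fps_1080p, PIXELS_1080P, fps_4k, PIXELS_4K)
    print(
        f"{game}: naive 4K estimate {estimate:.1f} fps, measured {fps_4k} fps; "
        f"modelled resolution-independent cost ~{fixed_ms:.1f} ms "
        f"of a {1000.0 / fps_1080p:.1f} ms 1080p frame"
    )
```

Under this toy model, the fitted fixed cost is simply whatever part of the 1080p frame time would not shrink if the pixel count went down (CPU work, geometry, shadow passes and so on), which is why the naive estimate undershoots.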


The screen freezes for about 0.5 seconds, and the frames that should fall within that 0.5 seconds are just skipped. E.g. if I'm running in a straight line, the screen freezes at point A, but at the end of the freeze I'm not starting from point A but from point B, which is a bit farther along.

Hope I was clear, I'm Italian :/

PS: No Anniversary Update, and I have the latest drivers.

Is the game installed on SSD or HDD? (should be on SSD).

Alethebeer

Member

Is the game installed on SSD or HDD? (should be on SSD).

Unfortunately it's on an HDD. I have an SSD for games, but I can't figure out why I didn't install it there. By the way, I turned on V-Sync and the freezes seem to have stopped (I had V-Sync off because G-Sync is always on); I don't know why.

4 times the screen resolution at almost twice the framerate for the Titan X, and at higher settings, which involve volumetric data.

The resolution/framerate combination works out to close to 8 times the performance, not taking into account the higher settings, which make it an even bigger jump... Dunno what you were expecting.

Dictator93

Member

Not to mention the game pushes a ton of super heavy effects in general. Reflections everywhere and GI.


Yeah, comparing a Titan X/1080 to the GPU in the XB, I don't see how anyone can say this is a well-optimized port given the above performance.

Still, something is not right in QB on NV cards, at least from my personal experience. Although I get high nominal GPU utilisation, I get the distinct impression that the game is not actually properly using all of the card's registers and hardware units.

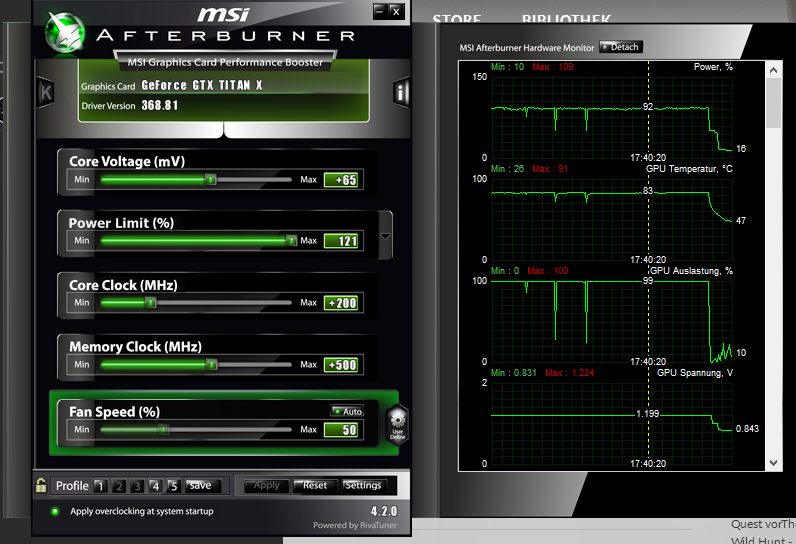

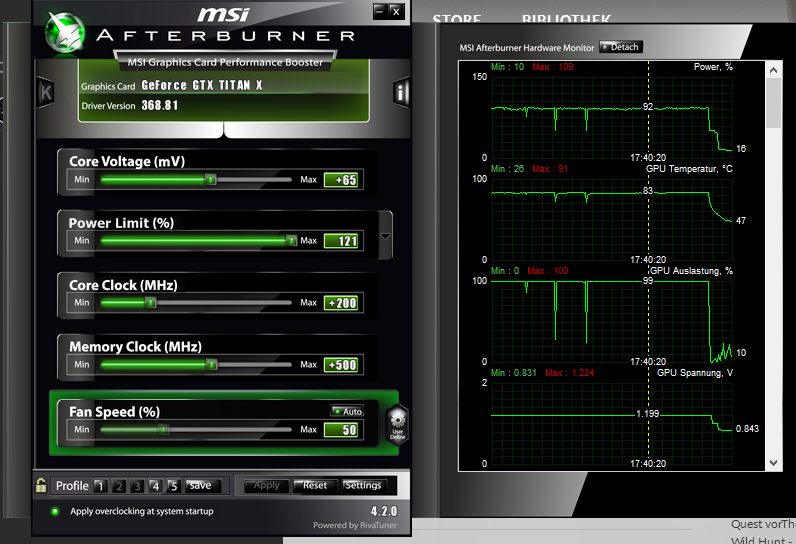

For example here is TW3's power utilsation uncapped @ 4K:

Here is QB's:

20% difference in power usage even at the punishing 4K resolution. One would imagine a DX12 game would increase power utilisation due to uncapped CPU headroom and better hardware unit utilisation and management...

icecold1983

Member

4 times the screen resolution at almost twice the framerate for the Titan X, and at higher settings, which involve volumetric data.

The resolution/framerate combination works out to close to 8 times the performance, not taking into account the higher settings, which make it an even bigger jump... Dunno what you were expecting.

Where are you getting 4x the screen res? Wasn't that video at 1080p with no upscaling = 2.25x the res?
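The pixel-count arithmetic behind this correction is easy to check. A minimal sketch, assuming the Xbox One version renders internally at 1280x720 (which is what the 2.25x figure implies) and taking "almost twice the framerate" as exactly 2x purely for illustration:

```python
# Pixel-count ratios behind the "4x vs 2.25x" correction.
# Assumption: Xbox One internal resolution of 1280x720, implied by the 2.25x figure.

RES_720P = (1280, 720)
RES_1080P = (1920, 1080)
RES_4K = (3840, 2160)


def pixels(res: tuple[int, int]) -> int:
    width, height = res
    return width * height


def pixel_ratio(higher: tuple[int, int], lower: tuple[int, int]) -> float:
    """How many times more pixels `higher` has than `lower`."""
    return pixels(higher) / pixels(lower)


FPS_RATIO = 2.0  # illustrative stand-in for "almost twice the framerate"

claimed = pixel_ratio(RES_4K, RES_1080P) * FPS_RATIO   # a true 4x pixel jump
actual = pixel_ratio(RES_1080P, RES_720P) * FPS_RATIO  # 720p -> 1080p is 2.25x pixels
print(f"4x-res reading: {claimed:.2f}x pixel throughput; 720p->1080p: {actual:.2f}x")
```

With a 2.25x pixel jump instead of 4x, the combined pixel-throughput gain roughly halves, which is the gap between the "close to 8 times" estimate and reality.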

dr_rus

Member

20% difference in power usage even at the punishing 4K resolution. One would imagine a DX12 game would increase power utilisation due to uncapped CPU headroom and better hardware unit utilisation and management...

This is certainly an indication that something fishy is going on on NV h/w. Too bad that Remedy seems to have stopped patching the game and moved to that Korean shooter sequel.

blastprocessor

The Amiga Brotherhood

I wanna play this but it's still £44.99!

For example, here is TW3's power utilisation uncapped @ 4K:

Here is QB's:

20% difference in power usage even at the punishing 4K resolution. One would imagine a DX12 game would increase power utilisation due to uncapped CPU headroom and better hardware unit utilisation and management...

Were the clocks similar in both cases?

Dictator93

Member

This is certainly an indication that something fishy is going on on NV h/w. Too bad that Remedy seems to have stopped patching the game and moved to that Korean shooter sequel.

Yeah, it definitely means something is not right.

Were the clocks similar in both cases?

Yeah, you can even see so based upon the OC on the left as well as the voltage.

icecold1983

Member

Yeah, it definitely means something is not right.

Yeah, you can even see so based upon the OC on the left as well as the voltage.

I think the temperature is also pretty telling. And what is your GPU's ASIC quality? Seems like you got quite the sample there.

Titan X throttles horribly on this shitty FE cooler from the 1080. Nvidia remembered to raise the Titan's price by $200, but forgot to include a better cooling solution. The 1080 has a 180W TDP, the Titan X 250W, and they both use exactly the same cooler. Yep.

When properly cooled, Titan X can be OCed to almost 2GHz clock, just like 1080. But for that you either need to become deaf and set the fan to 100%, or put a waterblock on it.

https://www.ekwb.com/news/ek-announces-nvidia-geforce-gtx-titan-x-pascal-water-blocks/


Dictator93

Member

I meant actual effective clocks while playing. I think that when clocks are lower, power utilisation is also lower. So if for some reason clocks were lower in QB than in W3, that would explain the power difference.

I have a modded BIOS, and the clocks (unless I am thermally throttling by running FurMark at 90°C or something) are always at about 1429 MHz.

I think the temperature is also pretty telling. And what is your GPU's ASIC quality? Seems like you got quite the sample there.

Yeah, the temperature difference just shows how poorly the game uses the GPU compared to The Witcher 3 (which is not even the craziest thing you can do to a GPU, of course). My ASIC quality is 72.8 (which is average, right?), but I also have an Arctic Accelero Xtreme slapped on it, which keeps it rather cool under normal circumstances... summer, though.

Titan X throttles horribly on this shitty FE cooler from the 1080. Nvidia remembered to raise the Titan's price by $200, but forgot to include a better cooling solution. The 1080 has a 180W TDP, the Titan X 250W, and they both use exactly the same cooler. Yep.

When properly cooled, Titan X can be OCed to almost 2GHz clock, just like 1080. But for that you either need to become deaf and set the fan to 100%, or put a waterblock on it.

https://www.ekwb.com/news/ek-announces-nvidia-geforce-gtx-titan-x-pascal-water-blocks/

As I said above, my Titan X (Maxwell) uses a nice aftermarket cooler. So no throttling!

icecold1983

Member

I have a modded bios, and the clocks (unless I am thermally throttling by furmarking @ 90 C or something) are always at about 1429 mhz.

Yeah, the temperature difference just shows how poorly the game is using the GPU in comparison to the Witcher 3 (which is not even the craziest thing you can do to a GPU of course). My ASIC Quality is 72.8 (which is average, right?) but I also have the Arctic Accelero Xtreme slapped on it, which keeps it rather cool under normal circumstances... summer though

As I said above, my Titan X (Maxwell) is using a nice after market cooler. So no throttling!

That's slightly above average but not amazing (I think average is the mid to high 60s). I wasn't aware you had an aftermarket cooler. Also not sure how the BIOS affects things. Your power readings in Witcher at 4K just seem low; that's why I thought you had a great sample.

Dictator93

Member

That's slightly above average but not amazing (I think average is the mid to high 60s). I wasn't aware you had an aftermarket cooler. Also not sure how the BIOS affects things. Your power readings in Witcher at 4K just seem low; that's why I thought you had a great sample.

The modded BIOS tweaks the max wattage and the max power percentage (up to 120%), so the readings should generally appear lower.

Yeah, I got the aftermarket cooler not too long ago and have honestly loved it.

SlaughterX

Banned

Anyone else having trouble getting the new Bluetooth S controller to work with this game?

I just started gaming on a PC and would like to play this game. How is this game with a GTX 1070 and a Core i7 6700K (OCed to 4.6GHz) running at 1440p?

The Nvidia Experience says I should run most of the settings at medium (think there is 1 setting in there set to high). Would this give me 60fps and how would it look compared to the Xbox One version (if you ignore the resolution difference)?


I just started gaming on a PC and would like to play this game. How is this game with a GTX 1070 and a Core i7 6700K (OCed to 4.6GHz) running at 1440p?

The Nvidia Experience says I should run most of the settings at medium (think there is 1 setting in there set to high). Would this give me 60fps and how would it look compared to the Xbox One version (if you ignore the resolution difference)?

You'll probably have a good time if you leave upscaling on, but not with it disabled.

icecold1983

Member

I just started gaming on a PC and would like to play this game. How is this game with a GTX 1070 and a Core i7 6700K (OCed to 4.6GHz) running at 1440p?

The Nvidia Experience says I should run most of the settings at medium (think there is 1 setting in there set to high). Would this give me 60fps and how would it look compared to the Xbox One version (if you ignore the resolution difference)?

You can run at 60 fps with Xbox settings (1080p). That's all medium, except textures, which are on one of the higher levels I think. Raising the settings beyond medium hits performance hard and doesn't bring much visual improvement. Upscaling off adds some clarity, but you'll have to play at 30 fps. You won't be getting 60 fps at 1440p with Xbone settings.

https://www.youtube.com/watch?v=h9z3rII_RvU

That's 1080p ultra settings with upscaling off. Best I could find.

Inspectah_Deck

Member

I just started gaming on a PC and would like to play this game. How is this game with a GTX 1070 and a Core i7 6700K (OCed to 4.6GHz) running at 1440p?

The Nvidia Experience says I should run most of the settings at medium (think there is 1 setting in there set to high). Would this give me 60fps and how would it look compared to the Xbox One version (if you ignore the resolution difference)?

Everything on medium, except textures on ultra and geometry on high, upscaling on, AA off.

Should be an easy 60fps at 1440p with a 1070.

My RX 480 pushes mid to high 50s with these settings at 3440x1440, which is more demanding, and your 1070 is much more powerful.

The game should look a lot better than on Xbox, with the resolution bump and higher-res textures.
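To put a rough number on "3440x1440 is more demanding": a quick pixel-count comparison against standard 2560x1440 (the 1070 owner's target) and the other resolutions mentioned in the thread. Pixel count is only a rough proxy for GPU load, since, as argued earlier in the thread, performance does not scale linearly with resolution.

```python
# Rough pixel-count comparison for the resolutions mentioned in the thread.
# Pixel count is only a proxy: frame time does not scale linearly with it.

RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "3440x1440": (3440, 1440),
    "4K": (3840, 2160),
}


def megapixels(width: int, height: int) -> float:
    return width * height / 1_000_000


base_w, base_h = RESOLUTIONS["1440p"]
for name, (w, h) in RESOLUTIONS.items():
    ratio = (w * h) / (base_w * base_h)
    print(f"{name:>10}: {megapixels(w, h):.2f} MP, {ratio:.2f}x the pixels of 1440p")
```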

I have a modded bios, and the clocks (unless I am thermally throttling by furmarking @ 90 C or something) are always at about 1429 mhz.

Are you downclocking the card? Titan X can reach an effective boost clock of over 1900MHz.

https://www.techpowerup.com/reviews/NVIDIA/Titan_X_Pascal/27.html

Dictator93

Member

Are you downclocking the card? Titan X can reach an effective boost clock of over 1900MHz.

https://www.techpowerup.com/reviews/NVIDIA/Titan_X_Pascal/27.html

Please note how I typed "Titan X (Maxwell)".

Greatest Ever

Member

Unrelated, but this game looks really bad at times on the One. The effects and filters just mess with any textures and the game has some of the worst motion blur I've ever seen.

LukasTaves

Member

But it does not scale linearly. If you have a game that runs at (say) 30fps in 4K, it almost never runs at 120fps in 1080p, usually less. In other words, increasing the resolution 4 times does not drop the framerate to 1/4th of its previous value.

https://www.techpowerup.com/reviews/NVIDIA/Titan_X_Pascal/8.html

108 fps in 1080p - according to your logic fps in 4K should be 108/4=27. But it's actually 67, over twice as much.

Or if we reverse it, if a game does 67 fps in 4K, then by your logic it should do 268 in 1080p - but it actually does 108.

Maybe Syndicate is not the best example, so here's one of the best-optimized games I've ever played, BF4:

https://www.techpowerup.com/reviews/NVIDIA/Titan_X_Pascal/10.html

1080p - 193fps, so in 4K it "should" be 48, but it's not, it's 66.

Yeah, it shouldn't scale perfectly. My point was more that it had already increased a lot, which turned out to be a wrong assumption, as pointed out below: 1080p is about twice 720p (2.25x), not 4 times XD

Anyway, I still believe the game's performance is heavily tied to the voxel data they use for the dynamic GI. It's very expensive, and being volumetric, I would assume it's also heavy on memory/bandwidth. They even use the voxel solution only locally, because at large scale it would be impractical for real-time rendering (at least on Xbone).

Where are you getting 4x the screen res? Wasn't that video at 1080p with no upscaling = 2.25x the res?

Now that's a math error on my part. It does indeed seem that it's underperforming.

cereal_killerxx

Junior Member

So, here's my setup:

- i5-3570K
- 16GB DDR3-1600 RAM
- 250GB SSD and 1TB HDD
- Nvidia GeForce GTX 1060 6GB

Would this game run decently enough, or should I just wait and get the One version?
