I didn't realize they were so close in price... the 6950 XT is a no-brainer at only $60 more, at least that's what I'm seeing on Amazon.

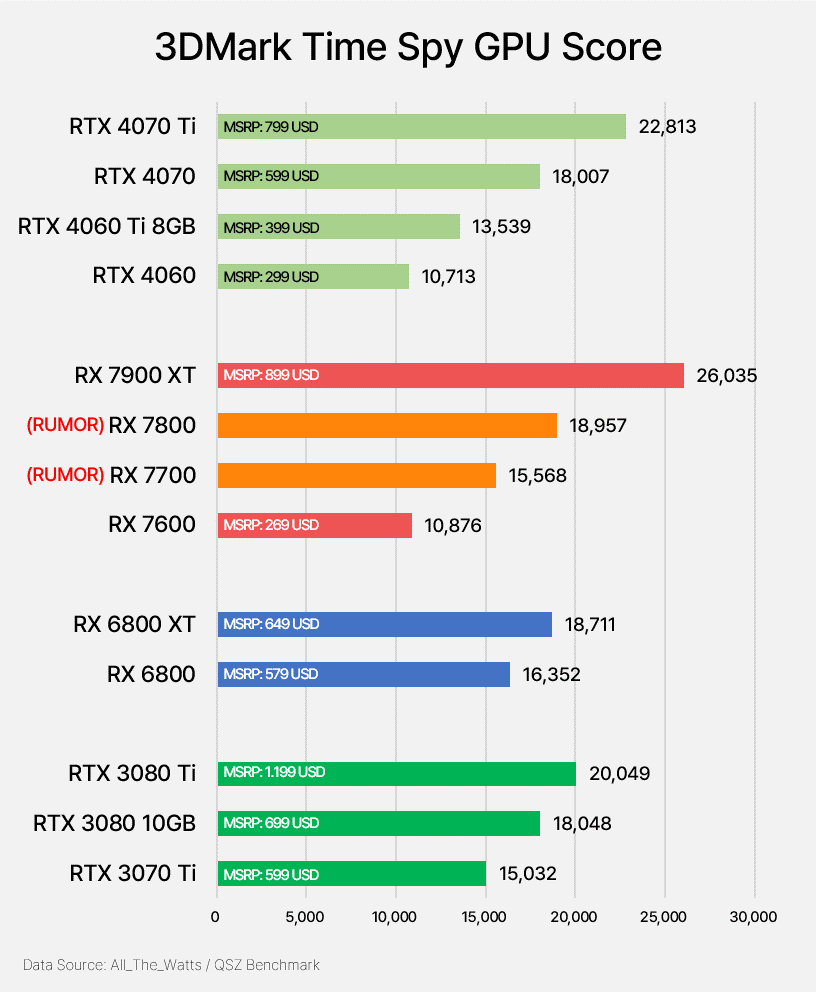

How much is the 7800 XT supposed to cost?

I guess RDNA 3 will outperform the old stuff in RT, but if you don't care about that...

If the 7800 XT, or whatever they call it, comes in at anything above $449, it's dead on arrival. 60 CU means it's a direct replacement for the non-XT 6800, which can currently be had for $400-$450. The 7800 XT will more than likely be around 10% better than the non-XT 6800, like I said in my first post, but fall short of a 6800 XT.

CU for CU, RDNA 3 performs almost the same as RDNA 2, as shown by the performance data for both the 7600 (32 CU) vs 6650 XT (32 CU) & 7900GRE (80 CU) vs 6800 XT (72 CU). The 7900GRE (aka 6900 XT) is only 10% faster than a 6800 XT in RT while having 8 more CUs & a higher in-game clock. The 7900GRE is essentially what the 7800 XT should've been at the very least, yet here we are.

This notion that RDNA 3 has any meaningful improvements in RT performance vs RDNA 2 CU for CU was shot down when the 7600 released & subsequently the 7900GRE (80 CU, 256-bit bus, China only). We can already extrapolate how a 60 CU 7800 XT will perform in RT simply by analyzing the other two cards. It'll go head to head (+10% from higher clocks/bandwidth) w/ a non-XT 6800 (60 CU). The 7900 XT & 7900 XTX will be the only ones w/ meaningful RT improvements due to the higher CU counts w/ wider buses (320-bit & 384-bit).
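For anyone who wants to check the math, here's a rough back-of-the-envelope sketch of that extrapolation in Python. It only uses the numbers quoted above (80 CU vs 72 CU, +10% in RT, and the guessed +10% from clocks/bandwidth). The function names are made up for illustration, and the big assumption is that RT performance scales linearly with CU count, which real cards only approximate.

```python
# Back-of-the-envelope CU-for-CU comparison using the figures quoted in this thread.
# Assumption (not a measured fact): RT performance scales linearly with CU count.

def per_cu_ratio(perf_ratio: float, cu_new: int, cu_old: int) -> float:
    """Performance per CU of the new card relative to the old card."""
    return perf_ratio / (cu_new / cu_old)

def naive_estimate(cu_new: int, cu_ref: int, uplift: float) -> float:
    """Estimated performance vs a reference card, assuming per-CU parity
    plus a flat uplift factor (e.g. from clocks/bandwidth)."""
    return (cu_new / cu_ref) * uplift

# 7900GRE (80 CU) is ~10% faster in RT than a 6800 XT (72 CU), per the post above.
gre_vs_6800xt = per_cu_ratio(1.10, cu_new=80, cu_old=72)

# Hypothetical 60 CU 7800 XT with the guessed +10% uplift:
vs_6800 = naive_estimate(60, 60, uplift=1.10)    # against the 60 CU non-XT 6800
vs_6800xt = naive_estimate(60, 72, uplift=1.10)  # against the 72 CU 6800 XT

print(f"7900GRE per-CU RT vs 6800 XT: {gre_vs_6800xt:.2f}x")
print(f"60 CU 7800 XT vs 6800:        {vs_6800:.2f}x")
print(f"60 CU 7800 XT vs 6800 XT:     {vs_6800xt:.2f}x")
```

Under those assumptions, the per-CU ratio lands right around 1.0 (no real per-CU gain), and the 60 CU part ends up about 10% ahead of a non-XT 6800 but below a 6800 XT. Linear scaling overstates the gap between the 60 CU and 72 CU cards, so treat the last number as a lower bound on a guess, not a benchmark.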

If AMD is really calling the RDNA 3 60 CU part a 7800 "XT", then I really hope the media calls them out for it like they did w/ Nvidia & the two "4080s". Regardless of its pricing, it's only going to confuse consumers into thinking it's better than a 6800 XT (which is probably their intention). I digress though, it is what it is.