Why 792p on XBO?

- Thread starter andromeduck

NeoGAF is the best and worst thing to ever happen to my gaming life.

Before GAF: "Damn this is fun and the graphics are pretty sweet." After GAF: "I'm not buying that game."

Curse you NeoGAF....curse you.

You're still playing games and not spending game time posting?

Sword Of Doom

Member

Probably the best res if you can't get a stable 900p at 30fps but want to stay above 720p.

But when games like Titanfall have performance issues at 792p then it doesn't make sense. Why not 720p at that point?

Edit: I guess better scaling makes sense

If they want a pixel ratio of exactly 16:9, they would have to be able to divide (in this instance) 792 with 9 and multiply it with 16 and still get an integer. Which they can. But that doesn't work with 791p or 793p, so you would have to either increase the resolution to 801p or decrease it to 783p. Maybe 792p was the sweet spot for both games?
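For anyone who wants to check that arithmetic, here is a quick Python sketch. The function name `exact_16_9_heights` and the search range are made up for illustration; nothing here comes from Respawn or Ubisoft.

```python
def exact_16_9_heights(lo, hi):
    """Return (width, height) pairs with lo <= height <= hi where
    height * 16 / 9 is a whole number, i.e. an exact 16:9 frame."""
    return [(h * 16 // 9, h) for h in range(lo, hi + 1) if (h * 16) % 9 == 0]

# Heights around 792 that give an integer width at exactly 16:9.
print(exact_16_9_heights(783, 801))
# → [(1392, 783), (1408, 792), (1424, 801)]
```

Since 16 and 9 share no factors, this is the same as asking for heights divisible by 9, which is why 791 and 793 drop out.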

Haha, what are you talking about? At the very least it needs to be divisible by 8 so the image can be stretched to 1080p.

I meant from a TV that'll downscale from 1080p, my brother has one of those that WILL see and process a 1080p signal but downscales it to the TV's resolution.

Doesn't the image still get sent as 720p if the TV reports as 720p?

You definitely lose some sharpness whenever you scale, especially at non integer ratios since you're losing some of that data whenever you mix.

There is the question possibly though if it actually outputs a 768'p' picture from that or if it just sticks to 720p and leaves the full resolution for PC monitor usage. I'd need to see if people did some testing with 1080p versus 720p console settings.

SwiftDeath

Member

It really looks like a specific PR sanctioned move from where I sit. Apparently XB1 games being 720p is not good for a next gen console or something

Because it's not 720p.

I wonder when that patch for Titanfall comes out making it 1080p. I wonder if Respawn is still working on that...

I'm sure they're already working on it now that they might have a whopping 2% more performance. Any minute now... Just you wait...

Sword Of Doom

Member

Wait until devs get a hold of Xbone's Kinectless power. 1200p confirmed.

Then we'll see something like 832p. I'm calling it

GeometryHead

Neo Member

Haha, what are you talking about? At the very least it needs to be divisible by 8 so the image can be stretched to 1080p.

You mean like 900p?

Someone inform our new friend how hive minding affects one's future

NeoGAF is the best and worst thing to ever happen to my gaming life.

Before GAF: "Damn this is fun and the graphics are pretty sweet." After GAF: "I'm not buying that game."

Curse you NeoGAF....curse you.

Hydrargyrus

Member

Like CDPR says: "It's PR differentiation".

does it really make that much of a difference? even 900p isn't that much more and 1080p looks waaaay bigger on such a comparison chart ôo

also: if you search for 900p all you find is Watch_Dogs <.<

It makes MS happy that people can't call the Xbox One the Xbox 720.

Then we call it Xbox 790

That might be it. Still strange .... but interesting

Is it not to do with the maximum frame buffer size they could fit into the ESRAM?

I remember Respawn mentioning it when quizzed by Eurogamer about their choice with the resolution.

http://www.eurogamer.net/articles/digitalfoundry-2014-titanfall-ships-at-792p

cyberheater

PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 Xbone PS4 PS4

Because it's got a crap GPU that can't easily do 1080p. That is the only true answer.

andromeduck

Member

Why 900p on XBO?

Why 900p on PS4?

Why 792p on XBO?

Why 540p on PS3/360?

Why? Because these consoles aren't powerful enough to do what the developers want. Thus, a sacrifice is made. Resolution or frame rate, take your pick.

/rocketscience

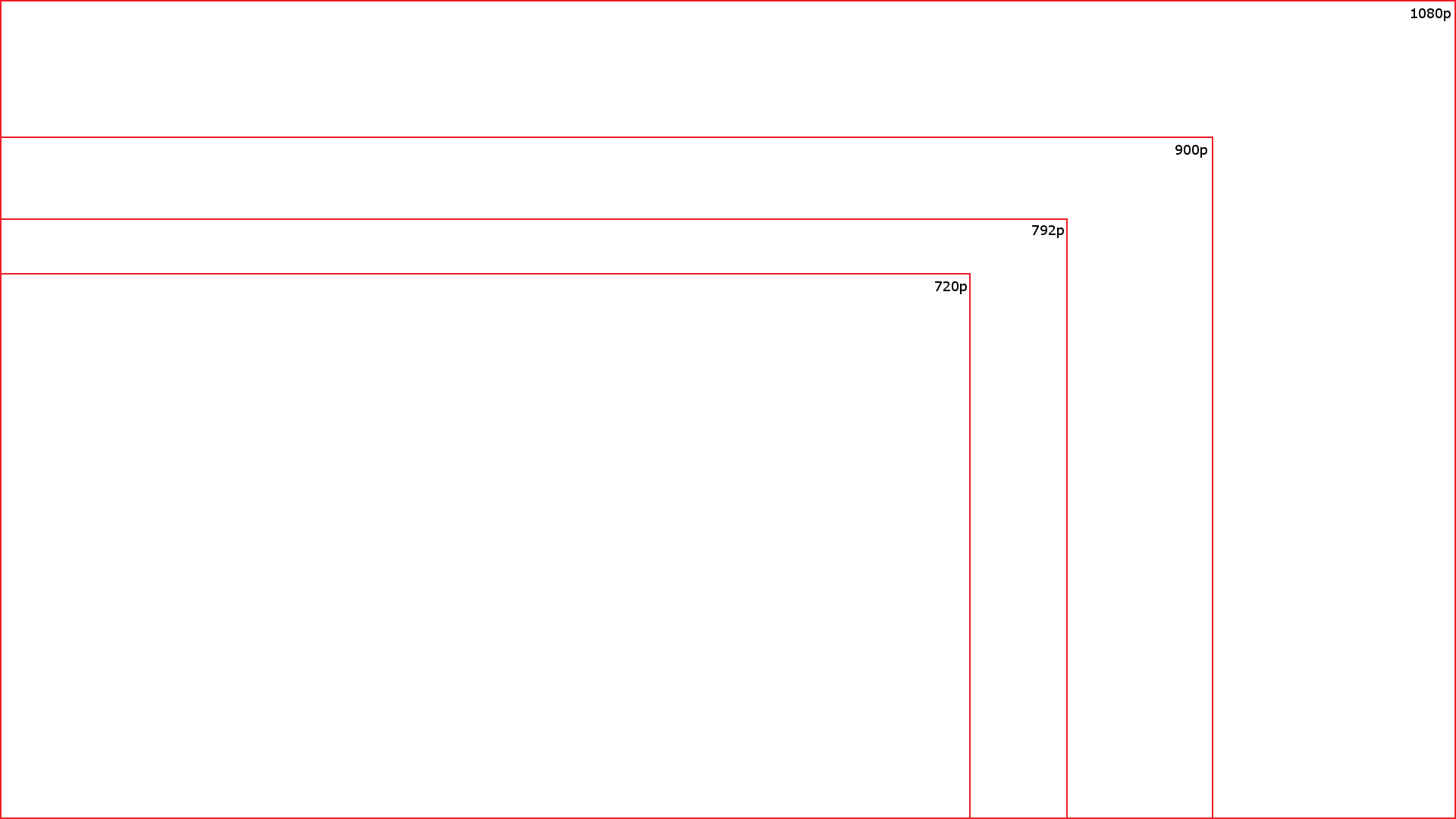

900/1080 = 5/6 which is easy scaling as it's a decent ratio

720/1080 = 2/3 which is a decent ratio to scale with

540/1080 = 1/2 which is one of the best ratios to scale with
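As a rough check on those ratios, and to see where 792p lands, here is a short Python sketch using the standard `fractions` module. The helper name `scale_ratio` is just for illustration.

```python
from fractions import Fraction

def scale_ratio(height, target=1080):
    """Reduce height/target to lowest terms."""
    return Fraction(height, target)

for h in (540, 720, 792, 900):
    r = scale_ratio(h)
    print(f"{h}/1080 = {r.numerator}/{r.denominator}")
# → 540/1080 = 1/2
# → 720/1080 = 2/3
# → 792/1080 = 11/15
# → 900/1080 = 5/6
```

So 792p works out to 11/15, which is a less tidy ratio than the other three.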

There's a great image out there that goes over the various image artefacts caused by up-scaling but I can't find it right now

EDIT: found it!

hooijdonk17

Member

I don't know why they use 792p instead of something like 720p with 8x SMAA. 720p should scale better and the SMAA would greatly smooth out the jaggies without making things blurry like the shitty FXAA some devs like to use.

While AA is good, it's not a replacement for resolution.

Resolution adds extra detail. AA basically removes detail from the image to make it look smoother. Best of all worlds is a high enough resolution that you don't even need AA. Low resolution plus AA actually makes things look pretty awful, especially at non-native resolutions.

Remember COD4:MW. I remember loading the first mission and seeing the power lines. Bled my eye, incredibly ugly.

Killroyskbg

Member

Someone inform our new friend how hive minding affects one's future

No. Real AA adds detail to the rendered image. Single-sample post-processing AA could be considered to remove detail.

Resolution adds extra detail. AA basically removes detail from the image to make it look smoother.

FeiRR

Banned

Someone inform our new friend how hive minding affects one's future

hooijdonk17

Member

No. Real AA adds detail to the rendered image. Single-sample post-processing AA could be considered to remove detail.

There's no such thing as 'Real' AA, they are all different kinds of AA, and unless you are doing something like full screen supersampling you are one way or another interpolating (and supersampling is not that much different than actually rendering at a higher resolution). That's not adding detail.

Why 900p on XBO?

Why 900p on PS4?

Why 792p on XBO?

Why 540p on PS3/360?

Why? Because these consoles aren't powerful enough to do what the developers want. Thus, a sacrifice is made. Resolution or frame rate, take your pick.

/rocketscience

How about paying attention to what OP is actually asking and tell us something we don't know....

That's just wrong. Read my article for details.

There's no such thing as 'Real' AA, they are all different kinds of AA, and unless you are doing something like full screen supersampling you are one way or another interpolating. That's not adding detail.

In short, any AA method which samples the scene at additional locations (so everything which isn't single-sample postprocessing AA) clearly adds detail to the final rendered image. That's not at all limited to supersampling, but also applies to multisampling (and coverage sampling for that matter).

And supersampling is only equivalent to higher resolution rendering if you are using an ordered grid, which is generally not nearly as effective as a sparse grid.

Monty Mole

Member

Those who own a XBO and Titanfall, do you find there is a noticeable difference between 720p and 792p? Genuinely very close to dropping the bucks on a XBO, but as a PS4 owner I'm just wondering how much of a step back it'll actually feel. Does it look like Xbox 360 levels of IQ, or is the AA improved compared to that too? In all honesty though, when I'm playing Peggle 2 and Sunset Overdrive, I probably won't give two shits about the resolution.

hooijdonk17

Member

That's just wrong. Read my article for details.

In short, any AA method which samples the scene at additional locations (so everything which isn't single-sample postprocessing AA) clearly adds detail to the final rendered image. That's not at all limited to supersampling, but also applies to multisampling (and coverage sampling for that matter).

And supersampling is only equivalent to higher resolution rendering if you are using an ordered grid, which is generally not nearly as effective as a sparse grid.

I will happily read that now. Thank you

andromeduck

Member

I have a friend who acts like he is a seasoned game programmer because he reads GAF and the mere thought of me possibly not buying a PS4 enrages him! I'm pretty sure he would stab me in my sleep if I ever bought an Xbox One.

However, if you put up two TVs with the same game running from a PS4 and XB1, I assure you he wouldn't be able to correctly pick the PS4 version. He's never even played an XB1 game but GAF tells him it's bad so it must be!

Riky

$MSFT

Those who own a XBO and Titanfall, do you find there is a noticeable difference between 720p and 792p? Genuinely very close to dropping the bucks on a XBO, but as a PS4 owner I'm just wondering how much of a step back it'll actually feel. Does it look like Xbox 360 levels of IQ, or is the AA improved compared to that too?

Titanfall looks a lot cleaner than Ghosts on X1, it's actually a substantial amount of extra pixels. Also if you compare Ghosts X1 to the 360 version it looks a lot nicer due to the higher resolution textures, so there is more to the image quality than just native resolution.

I think what you said earlier can generically apply to most large communities period, especially ones with people who can articulate opinions rather than it coming out like this. You just have to remember that someone, somewhere hates everything you love whether for good or bad reasons, and to keep that in mind when seeing some of these opinions, never mind that some people can go overboard.

Point taken...went over my head...

cyberheater

PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 PS4 Xbone PS4 PS4

NeoGAF is the best and worst thing to ever happen to my gaming life.

Before GAF: "Damn this is fun and the graphics are pretty sweet." After GAF: "I'm not buying that game."

Curse you NeoGAF....curse you.

I come here for the information and the community but I rarely agree with the vocal minority about games. You have to be strong and form your own opinion.

I come here for the information and the community but I rarely agree with the vocal minority about games. You have to be strong and form your own opinion.

Only the hivemind deals in absolutes DARTH!!!

oh wait...

Anyway, I don't think the majority of members here agree on enough anyway. Most of the threads that seem to be leaning towards one extreme, e.g. pro-Xbox, are actually just being posted in by people who are, in some capacity, very invested in the thread topic. There are lots of differing threads and posts and I don't post in all of them; I don't really like posting in the Xbox threads discussing upcoming games because I don't really have an opinion either way.

actually that's exactly the bottleneck

source

Ubisoft and Respawn must have found some use for the extra two bytes - probably related to some advanced rendering trick, anybody want to hazard a guess?

I'm not too familiar with deferred methods.

1408 x 792 x 20 bytes = 22,302,720 bytes = 22.3 MB

1408 x 792 x 8 bytes = 8,921,088 bytes = 8.9 MB

Total = 31.2 MB
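The sum in the post above can be reproduced in a few lines of Python. This is only a sketch. The 20 + 8 bytes-per-pixel split is taken from the post, treating the ESRAM as exactly 32 MiB is an assumption, and `buffer_bytes` is a made-up helper name.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # assumption: the 32MB of ESRAM treated as 32 MiB

def buffer_bytes(width, height, bytes_per_pixel):
    """Size in bytes of a buffer with a fixed per-pixel cost."""
    return width * height * bytes_per_pixel

gbuffer = buffer_bytes(1408, 792, 20)  # 20 bytes per pixel, from the post
extra = buffer_bytes(1408, 792, 8)     # the additional 8 bytes per pixel
total = gbuffer + extra

print(gbuffer, extra, total)   # → 22302720 8921088 31223808
print(total <= ESRAM_BYTES)    # → True

# The same 28 bytes per pixel at 1600x900 would not fit in that budget.
print(buffer_bytes(1600, 900, 28) <= ESRAM_BYTES)  # → False
```

Under those assumptions 792p squeezes in under 32 MB while 900p does not, which at least fits the ESRAM theory.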

Checks out but I'm not familiar either.

MarkMclovin

Member

It avoids the stigma of 720p.

You do realise that developers really don't care much about the stigma of this shit?

It's about performance, upscaling and other shit most of us here don't know about.

ZehDon

Member

Wait just a minute... this looks like an educated opinion! This guy knows what he's talking about! Quick - let's get him!!

...In short, any AA method which samples the scene at additional locations (so everything which isn't single-sample postprocessing AA) clearly adds detail to the final rendered image. That's not at all limited to supersampling, but also applies to multisampling (and coverage sampling for that matter)...

Kidding, of course. Great article!

I think the people who've discussed the maths of it are hitting the nail on the head, at least from my limited perspective. With a lot of the tech in the industry leaning towards deferred renderers, Microsoft's choice of an eSRAM-based solution appears to be little more than a choke chain. Sony might have lucked out with their GDDR5 gamble, but Cerny listened to the developers and followed the trends - deferred rendering, GPGPU, asset streaming - and in doing so they future-proofed their hardware, as much as any console can be. Microsoft weren't kidding when they said they didn't target high-end graphics. I really cannot see the Xbone lasting Microsoft's expected ten years.

Those who own a XBO and Titanfall, do you find there is a noticeable difference between 720p and 792p? Genuinely very close to dropping the bucks on a XBO, but as a PS4 owner I'm just wondering how much of a step back it'll actually feel. Does it look like Xbox 360 levels of IQ, or is the AA improved compared to that too? In all honesty though, when I'm playing Peggle 2 and Sunset Overdrive, I probably won't give two shits about the resolution.

I personally cannot tell the difference. I've played Ghosts on the PS4 and XBO, and the difference is very obvious. I've played Ghosts and Titanfall on the XBO, and I cannot tell the difference. Maybe, just maybe, the colors in TF seem more vibrant. Titanfall is not a looker, however.

That's why I don't get the whole 720p vs 792p...

Someone inform our new friend how hive minding affects one's future

Our new friend? He's not my new friend. Don't lump us all together as though we're some kind of hive mind.

Those who own a XBO and Titanfall, do you find there is a noticeable difference between 720p and 792p? Genuinely very close to dropping the bucks on a XBO, but as a PS4 owner I'm just wondering how much of a step back it'll actually feel. Does it look like Xbox 360 levels of IQ, or is the AA improved compared to that too? In all honesty though, when I'm playing Peggle 2 and Sunset Overdrive, I probably won't give two shits about the resolution.

The thing with Titanfall is that the game itself doesn't look too great in art anyway. I will say that the game does indeed look sharper than 720p games but overall, it pretty much looks like a really good looking last gen game.

I would need to see the same game in the two resolutions (720p and 792p) in person to make a more detailed comparison.

That was my suspicion as to the reason.

Modern g-buffers* in deferred renderers can get pretty damn huge, especially when they haven't been built with bandwidth in mind (i.e., they just keep getting bigger as people dump more stuff in them).

* ('geometry buffer' - basically writing out things like normal, diffuse color, etc to a set of intermediate render target buffers)

For comparison UE4's gbuffer has 4 32bit RGBA render targets, and a 64bit FP16 RGBA target for emissive contribution. When adding depth + stencil on top of that you're looking at a similar size per pixel.

Needless to say, smaller is better for bandwidth.

For a more extreme example, Infamous: SS has a very large g-buffer, at well over 40 bytes per pixel. That's over 80MB at FHD...
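To put rough numbers on those per-pixel sizes, here is a small Python sketch. The render-target list follows the UE4 description above. The 32-bit depth + stencil entry is an assumption (something like a 24-bit depth / 8-bit stencil format), and the helper names are made up.

```python
def bytes_per_pixel(target_bits):
    """Sum render-target sizes given in bits per pixel and convert to bytes."""
    return sum(target_bits) // 8

def size_mb(width, height, bpp):
    """Buffer size in decimal megabytes."""
    return width * height * bpp / 1_000_000

# Four 32-bit RGBA targets + one 64-bit FP16 RGBA target,
# plus an assumed 32-bit depth + stencil buffer.
ue4_bpp = bytes_per_pixel([32, 32, 32, 32, 64, 32])

print(ue4_bpp)                                 # → 28
print(f"{size_mb(1920, 1080, ue4_bpp):.1f}")   # → 58.1
print(f"{size_mb(1920, 1080, 40):.1f}")        # → 82.9
```

That 28 bytes per pixel matches the 20 + 8 split discussed earlier in the thread, and 40 bytes per pixel at 1920x1080 does come out to a bit over 80 MB.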

Can't you just keep some of that in the embedded RAM? I.e. the specific buffer you might be working on at that point, or the ones that get accessed the most? You wouldn't necessarily need to keep the final frame buffer there, for instance.

On PS4 and PC it doesn't really matter because all RAM is the same speed so you can clump it all together as one big buffer, but inevitably I think devs will start splitting it up for Xbox One. A pain at first but I'm sure it'll become simpler as time goes on.

Morphine_OD

Banned

This thread just made my day, I love you tech-gaf.

andromeduck, your theory is interesting.

If multiple games use 792p, it points to a common framebuffer usage (popular among various developers) that will fit within the ESRAM.

At the same time, it does not exclude the possibility of other resolutions, as we have seen. As long as there is a 32MB limit, it will always be a compromise between resolution, and per-pixel information (ultimately image quality).
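That trade-off can be sketched in Python: for a given per-pixel cost, find the tallest exact 16:9 frame that fits in the budget. It assumes every buffer has to sit in ESRAM at once (which, as pointed out above, isn't necessarily true) and treats 32MB as 32 MiB. The function name `max_16_9_height` is made up.

```python
ESRAM_BYTES = 32 * 1024 * 1024  # assumption: 32MB treated as 32 MiB

def max_16_9_height(bytes_per_pixel, budget=ESRAM_BYTES):
    """Largest height (a multiple of 9, so the 16:9 width is an integer)
    whose width * height * bytes_per_pixel still fits in the budget."""
    best = 0
    h = 9
    while (h * 16 // 9) * h * bytes_per_pixel <= budget:
        best = h
        h += 9
    return best

for bpp in (16, 20, 28, 40):
    h = max_16_9_height(bpp)
    print(f"{bpp} bytes/pixel -> {h * 16 // 9}x{h}")
```

With 28 bytes per pixel this gives 1456x819, so 792p would leave a little headroom under these assumptions; at 40 bytes per pixel the ceiling drops below 720p.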
