Ah, I found an interesting photo. It's not a die shot, but I'm sure it can be of some use.

Fab process: 40 nm

Core Speed: 550 MHz

Processing power (single precision): 352 GigaFLOPS

Polygon throughput: 550M polygons/sec

Unified Shaders: 320 (64 × 5)

Memory bandwidth (DDR3): 24.5–28.8 GB/s (Isn't the Wii U's RAM clocked at exactly half that? That would make it 28.8 GB/s with both used simultaneously. Just a thought.)

Texture Mapping Units: 16

Render Output Units: 8

Z/Stencil ROP Units: 32

Color ROP Units: 8

ATI Eyefinity multi-display technology: three independent display controllers

Maximum board power: 39 Watts
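As a quick consistency check on the list above, the peak figures can be derived from the clock and the shader count. This is a minimal sketch that assumes each of the 320 VLIW5 lanes retires one multiply-add (counted as 2 FLOPs) per clock and that the chip sets up one polygon per clock. Neither assumption is stated in the spec sheet, and the constant names are hypothetical:

```python
# Rough peak-rate check for the spec list above.
# ASSUMPTIONS (not from the spec sheet): 1 multiply-add (= 2 FLOPs) per
# shader lane per clock, and 1 polygon set up per clock.

CLOCK_HZ = 550e6               # Core Speed: 550 MHz
VLIW_UNITS = 64                # the "64" in 320 (64 x 5)
LANES_PER_UNIT = 5             # the "5": VLIW5, five ALU lanes per unit
FLOPS_PER_LANE_PER_CLOCK = 2   # assumed: one multiply-add per lane per clock
POLYGONS_PER_CLOCK = 1         # assumed setup rate


def shader_count(units: int, lanes: int) -> int:
    """Total shader lanes = VLIW units x lanes per unit."""
    return units * lanes


def peak_gflops(shaders: int, clock_hz: float, flops_per_clock: int) -> float:
    """Theoretical single-precision peak in GFLOPS."""
    return shaders * flops_per_clock * clock_hz / 1e9


def peak_polygons_per_sec(clock_hz: float, polys_per_clock: int) -> float:
    """Theoretical polygon setup rate per second."""
    return clock_hz * polys_per_clock


shaders = shader_count(VLIW_UNITS, LANES_PER_UNIT)
gflops = peak_gflops(shaders, CLOCK_HZ, FLOPS_PER_LANE_PER_CLOCK)
polys = peak_polygons_per_sec(CLOCK_HZ, POLYGONS_PER_CLOCK)

print(f"Shaders: {shaders}")
print(f"Peak single precision: {gflops:.0f} GFLOPS")
print(f"Peak setup: {polys / 1e6:.0f}M polygons/sec")
```

Under those assumptions the script reproduces the listed 320 shaders, 352 GFLOPS and 550M polygons/sec.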

I also recall people suggesting a while back that it could be based on an E6760. It's also 40 nm. I wonder how likely that is.

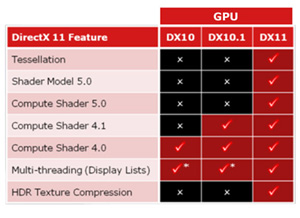

Also, maybe that thing the Toki Tori devs found that saved them 100 MB of memory was HDR texture compression.

Can someone tell me what "Order-independent transparency" means for a game?