This SSD has been analyzed thoroughly, and there is no particular "black tech" in it. Every design choice is tailored to the console use case. In theory its cost is not high, and it may well be cheaper than the Xbox Series X SSD.

Yes, it is more than twice as fast, yet in theory it is cheap. Why?

Because the SSD controller is actually very cheap. Its main role is to let manufacturers segment their pricing; a high-end controller and a low-end controller differ in price by only a few dollars. However, a good horse deserves a good saddle: high-end PC SSDs are usually paired with a small DDR DRAM cache that stores the address lookup table (LUT), and that is about it.

Suppose you want to build a 1TB drive out of 64GB flash chips. The fastest layout is 16 channels with one flash chip per channel: 16 × 64GB = 1024GB, and we can call its speed 16X.

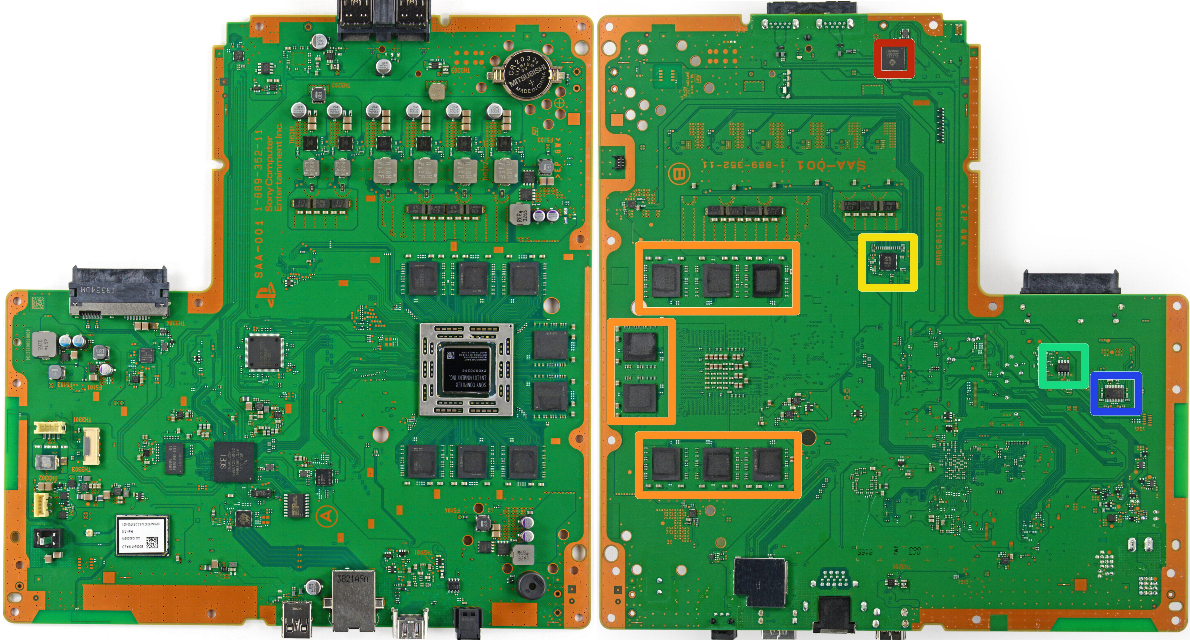

The PS5 uses exactly this kind of design: 12 channels with one 64GB chip each, 12 × 64GB = 768GB. Converting from binary (1024-based) to decimal (1000-based) units gives the advertised 825GB. Its speed counts as 12X.

XSX has only 4 channels, but several chips can be stacked on each channel, so it is 4 × 4 × 64GB = 1024GB, with only 4X speed. Looking at the raw speeds, 5.5GB/s vs 2.4GB/s, the difference is about 2.3×. Why not 3×? Because a single 64GB flash chip cannot saturate a channel on its own; channel bandwidth is utilized more fully when several chips are stacked on the same channel.
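The layout arithmetic above can be checked with a few lines of Python. This is a sketch that assumes the "64GB" chips are binary-unit (64 GiB) parts, so the totals come out in GiB and are then converted to the decimal GB used on retail boxes; "parallelism" here just counts channels, as the text does.

```python
GIB = 1024**3  # binary gigabyte (assumed unit for the chip sizes in the text)
GB = 1000**3   # decimal gigabyte (unit used for advertised capacity)

def layout(channels: int, chips_per_channel: int, chip_gib: int = 64):
    """Return (capacity in GiB, capacity in decimal GB, relative parallelism)."""
    capacity_gib = channels * chips_per_channel * chip_gib
    capacity_gb = capacity_gib * GIB / GB
    return capacity_gib, capacity_gb, channels

for name, ch, per_ch in [("16-channel example", 16, 1), ("PS5", 12, 1), ("XSX", 4, 4)]:
    gib, gb, par = layout(ch, per_ch)
    print(f"{name}: {gib} GiB = {gb:.0f} GB decimal, {par}X")
```

The PS5 line prints 768 GiB = 825 GB, which is where the advertised figure comes from; 768/1024 is also the 1/4 capacity saving mentioned later.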

This is also why Phison's 8-channel E16 controller can reach 5GB/s reads while the PS5's 12-channel controller only reaches 5.5GB/s: a single flash chip per channel leaves channel bandwidth underused, which wastes some of it.
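Dividing each drive's sequential read speed by its channel count makes the "underused channel" argument concrete. This sketch uses only the speeds and channel counts quoted in the text; `per_channel` is a hypothetical helper, and the averages say nothing about how many chips the E16 reference drive actually uses per channel.

```python
drives = {
    "PS5 (12 ch, 1 chip/ch)": (5.5, 12),
    "XSX (4 ch, 4 chips/ch)": (2.4, 4),
    "Phison E16 (8 ch)": (5.0, 8),
}

def per_channel(read_gb_s: float, channels: int) -> float:
    """Average sequential read bandwidth delivered per channel, in GB/s."""
    return read_gb_s / channels

for name, (speed, ch) in drives.items():
    print(f"{name}: {per_channel(speed, ch):.3f} GB/s per channel")

channel_ratio = 12 / 4   # PS5 has 3x the channels of XSX
speed_ratio = 5.5 / 2.4  # but only about 2.29x the speed
print(f"channels {channel_ratio:.1f}x, speed {speed_ratio:.2f}x")
```

Each PS5 channel averages roughly 0.46 GB/s against about 0.6 GB/s for XSX and E16, which is the gap between 3× and 2.3×.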

Of course, another possibility is that the PS5 controller's per-channel interface rate (MT/s) is lower; in other words, each channel on the controller has a lower speed ceiling. But that also saves money...

===================================

The PS5's controller is DRAM-less, which is also critical to the savings.

Sony uses large data blocks to reduce the total number of block addresses, which shrinks the LUT mentioned earlier from the usual GB level down to the KB level, small enough to fit in a small SRAM cache. That saves the cost of 1GB of DRAM. Don't look down on that 1GB of DRAM: reflected in the total cost, it can save a dozen-odd dollars.
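Why a coarser mapping unit shrinks the LUT so dramatically can be shown with a flat logical-to-physical table. This is a sketch under stated assumptions: 4-byte entries, 4 KiB as the typical PC mapping unit, and 1 MiB / 64 MiB as purely hypothetical coarse units; the text does not say what block size Sony actually uses.

```python
KIB, MIB, GIB, TIB = 1 << 10, 1 << 20, 1 << 30, 1 << 40
ENTRY_BYTES = 4  # assumption: one 32-bit physical address per mapping unit

def lut_bytes(capacity: int, unit: int, entry_bytes: int = ENTRY_BYTES) -> int:
    """Size of a flat logical-to-physical table with one entry per mapping unit."""
    entries = -(-capacity // unit)  # ceiling division
    return entries * entry_bytes

def human(n: int) -> str:
    """Format a byte count with the largest fitting binary unit."""
    for name, size in (("GiB", GIB), ("MiB", MIB), ("KiB", KIB)):
        if n >= size:
            return f"{n / size:g} {name}"
    return f"{n} B"

print("1 TiB @ 4 KiB:", human(lut_bytes(TIB, 4 * KIB)))
for unit in (4 * KIB, 1 * MIB, 64 * MIB):  # 1 MiB and 64 MiB are hypothetical
    print(f"768 GiB @ {human(unit)}:", human(lut_bytes(768 * GIB, unit)))
```

The 4 KiB case reproduces the familiar "1GB of DRAM per 1TB" rule; only with mapping units in the megabyte range does the table drop to the KB level.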

Simply put, access works as address plus offset. The map you use in daily life only shows "Building XX", not "Building XX, Room XX", right? A traditional PC SSD's address table is like a map accurate to the floor, while the PS5's address table is like a map accurate only to the district. So obviously the PS5's map is much smaller and easier to fit in your pocket.

The rest is the offset. On an ordinary PC SSD the offset is "Room XX"; on the PS5 it is "Building XX, Floor XX, Room XX"... However, this has little effect on read speed.
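The "map plus offset" idea corresponds to splitting an address into a block index and an offset. Below is a conceptual sketch, not how any real controller firmware is written: the 64 MiB block size is hypothetical, and `translate` and the toy `lut` are illustrative names only.

```python
BLOCK_SIZE = 64 * 1024 * 1024  # hypothetical coarse mapping unit

def translate(logical_addr: int, lut: list[int], block_size: int = BLOCK_SIZE) -> int:
    """Map a logical byte address to a physical one via a coarse block table."""
    block_index, offset = divmod(logical_addr, block_size)
    physical_block = lut[block_index]              # the "district-level map"
    return physical_block * block_size + offset    # the offset finds the "room"

lut = [7, 3, 0, 5]  # toy table: logical block i lives in physical block lut[i]
addr = 1 * BLOCK_SIZE + 12345
print(translate(addr, lut))  # lands in physical block 3 at the same offset
```

Reads stay cheap because the offset is plain arithmetic; only the table lookup depends on the map, and a smaller map is easier to keep on chip.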

Writes can be thought of as a fire drill. On a PC, a write is like holding a fire drill for everyone in "District XX, Building XX, Floor XX"; on the PS5 it is a fire drill for everyone in "District XX". In other words, the latter takes far more effort. But this has little impact in the console use case.
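The fire-drill analogy is essentially write amplification. This is a worst-case sketch assuming an aligned write and that every mapping unit it touches is rewritten in full; real firmware has tricks to avoid this, and 64 MiB is only a hypothetical coarse unit. It also hints at why consoles care little: game installs and patches are large sequential writes.

```python
KIB, MIB, GIB = 1 << 10, 1 << 20, 1 << 30

def worst_case_write_amplification(write_bytes: int, mapping_unit: int) -> float:
    """Bytes physically rewritten per byte requested, assuming an aligned write
    and that every mapping unit it touches must be rewritten in full."""
    units_touched = -(-write_bytes // mapping_unit)  # ceiling division
    return units_touched * mapping_unit / write_bytes

for unit in (4 * KIB, 64 * MIB):  # 64 MiB is a hypothetical coarse unit
    small = worst_case_write_amplification(4 * KIB, unit)
    install = worst_case_write_amplification(1 * GIB, unit)
    print(f"unit {unit // KIB} KiB: 4 KiB update x{small:g}, 1 GiB install x{install:g}")
```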

So the PS5's SSD design is not more expensive than the XSX's; it is likely a little cheaper. Because the drive is smaller, it needs four fewer flash chips (12 instead of 16). Four 64GB flash chips cost a lot, much more than a higher-end SSD controller...

====================================

So how should we read this? Quite simply, Sony's design goal was to save money, not to beat its competitors on performance. The high performance is a by-product. As said before, you cut capacity by 1/4 and the cost drops a lot; if you didn't add a selling point, wouldn't players flame you? So Sony did this: a controller with more channels, which isn't actually much more expensive, and the controller isn't the main cost anyway. That's all.

Unless it is forced deliberately, GPU page swapping will never need more bandwidth than an SSD of ≥2GB/s can supply. In a typical game scene, most data should already be resident in memory; only in extreme cases (such as a spaceship jump) is a massive swap needed. But even during a massive swap, a 5.5GB/s SSD can't open much of a gap over a 2.4GB/s SSD, because the whole memory space available to a game is only about 13GB, much of what is stored there never needs to be swapped, and the actual maximum swap is on the order of a gigabyte. You can't design scenes that make players jump back and forth just to saturate 5.5GB/s. Do you want to trigger photosensitive epilepsy in your players? (((
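The "about a gigabyte" swap can be turned into time and per-frame budgets. This sketch takes the 1 GB swap size and the two drive speeds from the text and assumes 30fps; it uses raw speeds and ignores the effect of compression.

```python
def transfer_seconds(gigabytes: float, gb_per_s: float) -> float:
    """Time to move a given amount of data at a sustained rate."""
    return gigabytes / gb_per_s

def per_frame_budget_gb(gb_per_s: float, fps: int) -> float:
    """How much data the drive can deliver within one frame."""
    return gb_per_s / fps

for speed in (5.5, 2.4):
    t = transfer_seconds(1.0, speed)
    print(f"{speed} GB/s: 1 GB swap in {t * 1000:.0f} ms, "
          f"{per_frame_budget_gb(speed, 30) * 1000:.0f} MB per frame at 30fps")
```

Roughly 0.18 s versus 0.42 s for a full 1 GB swap: noticeable in a one-off jump, but not something a normal scene keeps doing.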

In addition, Virtual Geometry and Virtual Texture data are essentially image-format assets (VG stores geometric models in an image format), and there are many very efficient compression algorithms for them that support direct addressing. That is why both PS5 and XSX added a hardware decompression unit: not only to free up the CPU, but also to enable ultra-low-latency asset swapping. What does that mean? Normally, when you use WinRAR to extract an archive of 1024 "little sister" photos, the CPU does all the work. Now the hardware does it, with high bandwidth and low latency (and the latency is predictable, which is critical for a stable frame rate!), and you can reach directly into the archive for the one photo you want without extracting the whole thing. That is why Sony calls it revolutionary for console game development: round it off and it's virtual memory dedicated to games, friends. Direct addressing plus predictable latency means developers can access almost any asset they need at any time, and can prefetch large amounts of data in advance, hiding most (though not all) of the latency.
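"Reaching into the archive for one photo" works when each item is compressed independently and an index records where it lives. The sketch below uses Python's standard `zlib` on the CPU purely as a stand-in; it is not the format or hardware either console uses, and `pack` / `read_one` are hypothetical helpers.

```python
import zlib

def pack(files: dict[str, bytes]) -> tuple[bytes, dict[str, tuple[int, int]]]:
    """Compress each file independently and record its (offset, length)."""
    blob = bytearray()
    index = {}
    for name, data in files.items():
        packed = zlib.compress(data)
        index[name] = (len(blob), len(packed))
        blob += packed
    return bytes(blob), index

def read_one(blob: bytes, index: dict[str, tuple[int, int]], name: str) -> bytes:
    """Random access: decompress only the requested entry."""
    offset, length = index[name]
    return zlib.decompress(blob[offset:offset + length])

# 1024 toy "photos" of 4 KiB each
files = {f"img_{i:04d}.raw": bytes([i % 256]) * 4096 for i in range(1024)}
blob, index = pack(files)
photo = read_one(blob, index, "img_0513.raw")
print(len(blob), "compressed bytes; first bytes of one photo:", photo[:4])
```

Compressing per item costs some ratio compared with one solid archive, but it is what makes direct addressing and predictable per-request latency possible.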

=====================================

Next, to answer the specific questions asked:

Will the small improvement in memory next generation become a bottleneck?

Yes. The problem is even more obvious with ray tracing. Ray tracing, 4K, 60-120fps, denoising: which of these isn't a memory hog? They are all memory-bandwidth killers. 448GB/s and 560GB/s have, to some extent, already set the ceiling for next-generation game visuals. The SSD's benefit is limited to capacity, not bandwidth.
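Dividing memory bandwidth by frame rate shows how tight the per-frame budget is, and how small the SSD's contribution looks next to it. The bandwidth figures come from the text; the per-pixel number assumes a native 3840×2160 frame and is only a rough scale indicator.

```python
def gb_per_frame(bandwidth_gb_s: float, fps: int) -> float:
    """Data that can move through memory (or the SSD) in one frame."""
    return bandwidth_gb_s / fps

def bytes_per_pixel_per_frame(bandwidth_gb_s: float, fps: int,
                              width: int, height: int) -> float:
    """Average memory traffic available per output pixel per frame."""
    return bandwidth_gb_s * 1e9 / fps / (width * height)

for bw in (448, 560):
    for fps in (60, 120):
        print(f"{bw} GB/s @ {fps} fps: {gb_per_frame(bw, fps):.2f} GB/frame, "
              f"{bytes_per_pixel_per_frame(bw, fps, 3840, 2160):.0f} B/pixel at 4K")
print(f"SSD 5.5 GB/s @ 60 fps: {gb_per_frame(5.5, 60):.3f} GB/frame")
```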

We know that streaming techniques like MegaTexture and virtual texturing can save a lot of memory, but the bottleneck lies in disk I/O. Can the PS5's disk I/O of up to 8-9GB/s solve this bottleneck?

It depends on how you define the bottleneck. MegaTexture and today's virtual texture streaming are the same dish cooked two ways. You can say the bottleneck is disk I/O, but forget 8-9GB/s: even a 1GB/s drive is enough. Even with a SATA 3 SSD you won't see assets failing to load in most games. The biggest bottleneck of virtual texture streaming is random read/write speed, which is why games with linear levels generally use virtual texturing freely without visible loading stutter: linear levels rarely do random reads. Open-world games are different; a burst of random reads instantly drags down the whole game. On top of that, both the PC and the PS4 use the CPU to decompress what they read, so heavy reads also load the CPU and make frame times unstable.
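Why random reads, rather than peak bandwidth, are the limit can be seen with a simple queue-depth-1 model in which every request pays a fixed access latency before transferring. This is a simplified sketch: 550 MB/s approximates the SATA 3 ceiling, while the 100 µs latency is a hypothetical figure; real drives with deeper queues behave better.

```python
def effective_throughput_mb_s(request_kib: float, latency_us: float,
                              seq_mb_s: float) -> float:
    """Queue depth 1: each read pays a fixed access latency plus transfer time."""
    request_mb = request_kib * 1024 / 1e6  # KiB -> decimal MB
    seconds = latency_us * 1e-6 + request_mb / seq_mb_s
    return request_mb / seconds

SATA_SEQ_MB_S = 550  # roughly the SATA 3 practical ceiling
LATENCY_US = 100     # hypothetical per-request access latency

for size_kib in (4, 64, 1024):
    mb_s = effective_throughput_mb_s(size_kib, LATENCY_US, SATA_SEQ_MB_S)
    print(f"{size_kib:>5} KiB reads: ~{mb_s:.0f} MB/s")
```

Small scattered reads land far below the sequential figure, while large linear reads approach it, which matches the linear-level versus open-world contrast above.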

Of course, the hot take above applies to PC at 1440p and below. At 4K the requirement should be scaled up by about 2×. But next generation starts at 2.4GB/s with hardware decompression, so it's fair to say the problem has been solved essentially perfectly, at some cost.

Can such a fast SSD help improve graphics performance and make up for the roughly 15% floating-point performance gap with Microsoft's XSX?

No. Graphics performance depends on the bandwidth of the entire data path. On the surface, the PS5 is only about 2 TFLOPS lower in floating-point performance, but look underneath:

- Memory bandwidth: 448GB/s vs 560GB/s, so XSX has 25% more (PS5 has 20% less), a gap of 112GB/s. That number is far scarier than 9GB/s. Of course, I'm just trying to scare you (runs away)

- WGP-level caches such as LDS and L0: XSX has 44% more in total (they scale with CU count, 52 vs 36 CUs). L2: XSX has 25% more. L1 is unknown, but XSX's is probably larger as well (otherwise it would struggle to keep its CUs fed). For GPU workloads, cache size matters far more than frequency. The RTX 2070 Super, with 40 SMs at 1.77GHz, still beats the 5700 XT with 40 CUs at 1.9GHz (of course, this is just an analogy to scare you. In reality there are many reasons Turing outperforms RDNA1 at equal TFLOPS; one of the main ones is that NVIDIA's data path is more efficient, with more generous cache per stream processor than RDNA1 cards). In plain words: beyond a certain point, the performance gained from larger cache capacity far exceeds what you gain from higher frequency. Being faster at the same tier makes you at most twice as fast as someone else, right? But every cache miss costs more than ten times as long as an access that hits in cache (

- Now you can see why this UE5 demo only runs at 1440p 30fps: both Nanite and Lumen actually consume memory bandwidth (rather than memory capacity) and put heavy demands on the path from memory to the CUs.
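Percentage gaps depend on which console you take as the base, which is easy to mix up. This sketch computes both directions for the figures discussed here. The bandwidth, CU count, and TFLOPS values are the consoles' published specs; the L2 sizes (4 MB vs 5 MB) are an assumption chosen to match the 25% figure in the text.

```python
def pct_more(a: float, b: float) -> float:
    """How much larger a is than b, in percent."""
    return (a / b - 1) * 100

def pct_less(a: float, b: float) -> float:
    """How much smaller a is than b, in percent."""
    return (1 - a / b) * 100

specs = {  # (PS5, XSX)
    "memory bandwidth (GB/s)": (448, 560),
    "CUs (LDS/L0 scale per CU)": (36, 52),
    "L2 (MB, assumed)": (4, 5),
    "FP32 TFLOPS": (10.28, 12.15),
}

for name, (ps5, xsx) in specs.items():
    print(f"{name}: XSX +{pct_more(xsx, ps5):.1f}%, PS5 -{pct_less(ps5, xsx):.1f}%")
```

For example, the "15%" floating-point gap is PS5 measured against XSX; measured the other way, XSX is about 18% ahead.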

Overall, XSX's data-path bandwidth from memory to the CUs is higher (by 25-50%, depending on the scenario), but even so I don't think XSX could run the UE5 demo at 4K 60fps. The point of this demo is to show "look, I can make something this flashy without much optimization", not "look, I'm flashier than everyone else". Take Lumen in particular: Lumen is really SSGI plus coarse reflections plus a sparse, tracing-based GI, and its coarse-reflection and tracing parts don't use hardware ray-tracing acceleration at all. I really don't understand why; I can't tell whether the API isn't ready or Epic is just being quirky. After all, DXR can accelerate ray tracing. In short, it's pretty wild. Wouldn't it be better to save some performance and raise the resolution a bit (

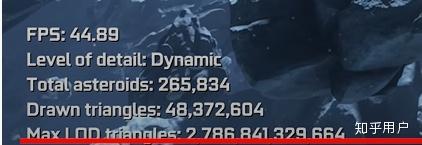

Take Nanite: it doesn't use mesh shaders, which is to be expected since the PS5 doesn't have them. When optimized, XSX's geometry performance is much higher than a 2080 Ti's, and a 2080 Ti running mesh shaders can render around 50M triangles and still stay above 30fps. Nanite in this demo renders about 20M triangles at 30fps, which is rather embarrassing:

2080 Ti running mesh shaders: 45fps, 48M triangles actually drawn, 4K resolution

If you drop to 20M drawn triangles like the UE5 demo, or down to 1440p, you save a lot of pixel-shading resources and performance improves. Round it off and 60fps is no problem (
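Putting the two geometry figures on the same scale, and comparing the pixel counts of 4K and 1440p, shows how much headroom the text is pointing at. The triangle counts and frame rates are the ones quoted above; treating them as sustained triangles per second is a simplification.

```python
def tris_per_second(triangles_millions: float, fps: float) -> float:
    """Triangles drawn per second at a given per-frame count and frame rate."""
    return triangles_millions * 1e6 * fps

mesh_shader_2080ti = tris_per_second(48, 45)  # 48M triangles, 45fps, 4K
nanite_demo = tris_per_second(20, 30)         # about 20M triangles, 30fps
pixels_4k = 3840 * 2160
pixels_1440p = 2560 * 1440

print(f"{mesh_shader_2080ti / 1e9:.2f} vs {nanite_demo / 1e9:.2f} billion triangles/s")
print(f"4K has {pixels_4k / pixels_1440p:.2f}x the pixels of 1440p")
```

That is roughly 3.6× the triangle throughput, at 2.25× the pixel count.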

As I've said before, this proves once again that the PS5 doesn't have mesh shaders. Its geometry performance is still limited by the parallelism of its primitive shaders.

So don't expect too much from hardware whose main design goal is saving money, whether you're a fan or the manufacturer... And if it really ends up at $399, wouldn't that be irresistible...