So it's still 20GB/s for PS4 vs 30GB/s Xbone? Or am I misunderstanding something?

I'm not sure either. I thought it was 20GB with 10 of that being more direct access but it seems I may have been mistaken.

Instead of twisting numbers, you should just show your first party games a bit more (FM5 in particular has had very little media shown - only two tracks two months before the release?) and some popular third party games running on your box (FIFA, COD, BF4 etc.) to instill people's confidence that you're going to provide a capable machine, regardless of what Sony are doing.

Sounds pretty respectable to me.

Onion+ has an additional 10GB/s I believe.

Haha! Well, we sure as hell probably helped design all the 180s.

Xbox 360 > Xbox One confirmed.

We're all "Technical Fellow" material here.

I see my statements the other day caused more of a stir than I had intended. I saw threads locking down as fast as they pop up, so I apologize for the delayed response.

I was hoping my comments would lead the discussion to be more about the games (and the fact that games on both systems look great) as a sign of my point about performance, but unfortunately I saw more discussion of my credibility.

So I thought I would add more detail to what I said the other day, so that perhaps people can debate those individual merits instead of making personal attacks. This should hopefully dispel the notion that I'm simply creating FUD or spin.

I do want to be super clear: I'm not disparaging Sony. I'm not trying to diminish them, their launch, or what they have said. But I do need to draw comparisons, since I am trying to explain that the way people are calculating the differences between the two machines isn't completely accurate. I think I've been upfront that I have nothing but respect for those guys, but I'm not a fan of the misinformation about our performance.

So, here are a couple of points about some of the individual parts for people to consider:

18 CUs vs. 12 CUs =/= 50% more performance. Multi-core processors have inherent inefficiency with more CUs, so it's simply incorrect to say 50% more GPU.

Adding to that, each of our CUs is running 6% faster. It's not simply a 6% clock speed increase overall.
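A back-of-the-envelope check on the two claims above, using only the figures quoted in the thread (18 vs. 12 CUs, a 6% per-CU clock advantage). For illustration it assumes raw throughput scales linearly with CU count times clock, which is exactly the assumption being disputed, so treat the output as an on-paper figure rather than a performance prediction. `naive_ratio` is a hypothetical helper, not anything from either console's SDK.

```python
# On-paper GPU throughput ratio from the figures quoted in the thread.
# ASSUMPTION: throughput scales linearly with CU count x clock; any
# multi-CU "inefficiency" is deliberately ignored.

PS4_CUS = 18
XB1_CUS = 12
XB1_CLOCK_ADVANTAGE = 1.06  # "each of our CUs is running 6% faster"


def naive_ratio(cus_a: int, cus_b: int, clock_b_over_a: float = 1.0) -> float:
    """Raw throughput of machine A divided by that of machine B."""
    return cus_a / (cus_b * clock_b_over_a)


if __name__ == "__main__":
    cu_only = naive_ratio(PS4_CUS, XB1_CUS)
    with_clock = naive_ratio(PS4_CUS, XB1_CUS, XB1_CLOCK_ADVANTAGE)
    print(f"CU count alone:     {cu_only:.3f}x")     # 1.500x
    print(f"CU count and clock: {with_clock:.3f}x")  # 1.415x
```

Under these assumptions the 6% clock advantage narrows the on-paper gap from 50% to roughly 41.5%; how much real workloads lose to scaling inefficiency on top of that is the open question in the thread.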

We have more memory bandwidth. 176GB/sec is peak on paper for GDDR5. Our peak on paper is 272GB/sec (68GB/sec DDR3 + 204GB/sec on ESRAM). ESRAM can do read/write cycles simultaneously, so I see this number misquoted.
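The arithmetic behind the "peak on paper" comparison, using the post's figures in GB/s. Summing the two pools assumes both are saturated at the same moment, which is the point challenged later in the thread; `combined_peak` is just an illustrative helper.

```python
# Peak-on-paper bandwidth figures as quoted in the post (GB/s).
# These are stated peaks, not measurements.
DDR3_PEAK = 68
ESRAM_PEAK = 204   # stated figure, counting simultaneous read/write
GDDR5_PEAK = 176


def combined_peak(*pool_peaks: float) -> float:
    """Sum of per-pool peaks; only reachable if every pool is busy at once."""
    return sum(pool_peaks)


if __name__ == "__main__":
    xb1 = combined_peak(DDR3_PEAK, ESRAM_PEAK)
    print(f"XB1 combined peak: {xb1} GB/s")               # 272 GB/s
    print(f"Ratio vs. GDDR5:   {xb1 / GDDR5_PEAK:.2f}x")  # 1.55x
```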

We have at least 10% more CPU. Not only a faster processor, but a better audio chip also offloading CPU cycles.

We understand GPGPU and its importance very well. Microsoft invented DirectCompute, and has been using GPGPU in a shipping product since 2010 - it's called Kinect.

Speaking of GPGPU - we have 3X the coherent bandwidth for GPGPU at 30GB/sec, which significantly improves the CPU's ability to efficiently read data generated by the GPU.
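How large "3X" looks depends entirely on which PS4 figure is used as the baseline. The sketch below runs the ratio against the three PS4 numbers floated in this thread: 10GB/s for Onion+ alone, 20GB/s, and the 20 + 10 = 30GB/s Onion plus Onion+ reading. None of these splits is a confirmed spec; they are the posters' figures.

```python
# Coherent bandwidth comparison using figures floated in this thread (GB/s).
# The PS4 baselines are forum readings of Onion/Onion+, not confirmed specs.
XB1_COHERENT = 30.0

PS4_BASELINES = {
    "Onion+ only": 10.0,
    "20GB/s reading": 20.0,
    "Onion + Onion+": 20.0 + 10.0,
}


def advantage(xb1: float, ps4: float) -> float:
    """How many times the XB1 figure exceeds the chosen PS4 figure."""
    return xb1 / ps4


if __name__ == "__main__":
    for label, ps4 in PS4_BASELINES.items():
        ratio = advantage(XB1_COHERENT, ps4)
        print(f"vs. {label:<15} ({ps4:.0f} GB/s): {ratio:.1f}x")
```

Only the Onion+-only baseline reproduces the 3X figure; the other two readings give 1.5x and 1.0x.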

Hopefully with some of those more specific points people will understand where we have reduced bottlenecks in the system. I'm sure this will get debated endlessly but at least you can see I'm backing up my points.

I still believe that we get little credit for the fact that, as a SW company, the people designing our system are some of the smartest graphics engineers around; they understand how to architect and balance a system for graphics performance. Each company has its strengths, and I feel that our strength is overlooked when evaluating both boxes.

Given this continued belief in a significant gap, we're working with our most senior graphics and silicon engineers to get into more depth on this topic. They will be more credible than I am, and can talk in detail about some of the benchmarking we've done and how we balanced our system.

Thanks again for letting me participate. Hope this gives people more background on my claims.

According to the available figures, that is correct. This bandwidth is, in both systems, cache-coherent in the sense that the GPU always "sees" data that the CPU sees without the need for explicit synchronization by flushing the caches. Both systems seem to behave the same way in this respect. For the CPU to "see" data that the GPU sees, both systems have to flush caches. The PS4 can selectively flush individual cache lines, while the XB1 apparently needs to flush the entire GPU cache. That is the evidence so far.
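A toy model of the difference described above between flushing individual cache lines and flushing the whole GPU cache. `ToyGpuCache` is hypothetical and deliberately simplistic: it only counts how many dirty lines must be written back before the CPU can safely read GPU-written data, and it does not model either console's real cache hierarchy or timing.

```python
# Toy contrast between selective (per-line) and full cache flushes.
# Illustration only: no timing, associativity, or real hardware behaviour.
# Requires Python 3.9+ for the built-in set[int] annotation.
from dataclasses import dataclass, field


@dataclass
class ToyGpuCache:
    dirty_lines: set[int] = field(default_factory=set)  # dirty line addresses

    def write(self, line: int) -> None:
        """GPU writes to a line, leaving it dirty in the cache."""
        self.dirty_lines.add(line)

    def flush_lines(self, lines: set[int]) -> int:
        """Write back only the requested lines; return how many were written."""
        to_flush = self.dirty_lines & lines
        self.dirty_lines -= to_flush
        return len(to_flush)

    def flush_all(self) -> int:
        """Write back every dirty line; return how many were written."""
        count = len(self.dirty_lines)
        self.dirty_lines.clear()
        return count


if __name__ == "__main__":
    selective, full = ToyGpuCache(), ToyGpuCache()
    for line in range(1000):          # GPU dirties 1000 lines in both caches
        selective.write(line)
        full.write(line)
    needed = set(range(8))            # the CPU only needs 8 of them
    print(selective.flush_lines(needed))  # 8
    print(full.flush_all())               # 1000
```

The asymmetry is the point being argued: with a full flush, write-back work scales with everything the GPU has dirtied, not with what the CPU actually needs.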

At Microsoft, we have a position called a "Technical Fellow". These are engineers across disciplines at Microsoft that are basically at the highest stage of technical knowledge. There are very few across the company, so it's a rare and respected position.

We are lucky to have a small handful working on Xbox.

I've spent several hours over the last few weeks with the Technical Fellow working on our graphics engines. He was also one of the guys that worked most closely with the silicon team developing the actual architecture of our machine, and knows how and why it works better than anyone.

So while I appreciate the technical acumen of folks on this board - you should know that every single thing I posted, I reviewed with him for accuracy. I wanted to make sure I was stating things factually and accurately.

So if you're saying you can't add bandwidth - you can. If you want to dispute that ESRAM has simultaneous read/write cycles - it does.

I know this forum demands accuracy, which is why I fact checked my points with a guy who helped design the machine.

This is the same guy, by the way, that jumps on a plane when developers want more detail and hands-on review of code and how to extract the maximum performance from our box. He has heard first-hand from developers exactly how our boxes compare, which has only proven our belief that they are nearly the same in real-world situations. If he wasn't coming back smiling, I certainly wouldn't be so bullish dismissing these claims.

I'm going to take his word (we just spoke this AM, so his data is about as fresh as possible) versus statements by developers speaking anonymously, and also potentially from several months ago before we had stable drivers and development environments.

Are you kidding? I mean really - are you kidding?

This is part and parcel of the territory here. You have to answer for your statements, especially if you're here in an official capacity. People get banned for being out of line, but poking holes in the arguments of other posters is well within the rules.

I've had my work here both praised and eviscerated, called out by numerous forum folks both publicly and via PM when I got stuff wrong, and I'm a goddamn admin. Guess what - I wouldn't have it any other way. That is what makes NeoGAF what it is.

There are many, many people who are more than capable of assessing, vetting and debunking technical claims and they have every right to do so. That's the price of doing business here. If we had official Nintendo or Sony reps on board, they would be subject to the same process.

If you're scared, buy a dog.

Good move.

Last post? Not even close. Sorry - still many more memes yet to come.

As was kindly suggested by someone in a PM, it's unlikely anything more I'm going to post on this topic will make it better. People can believe me and trust I'm passing on the right information, or believe I'm spreading FUD and lies.

When I first started coming on, I said what I wanted to do was speak more directly and more honestly with the community, clarifying what we could because you guys have more detailed questions than we had been dealing with.

Regarding the power, I've tried to explain areas that are misunderstood and provide insight from the actual engineers on the system. We are working with the technical folks to get more in-depth. As I said - they are more credible than I am, and can provide a lot more detail. Best I leave it to them.

Next stop - launch itself. Only then, when the games release and developers will inevitably be asked to compare the systems, will there be a satisfying answer.

Until then, as I have been, I'll try and answer what I can. But I'm not going to add more on this topic.

I agree. They should really just give up on the graphics argument at this point; it's a losing battle. Instead they need to try and convince me and others why, despite having lesser graphics, theirs is the box for me and not the graphically better station.

I think I would try something like this:

We concede that the PS4 does have slightly better specs. But Xbox One offers entertainment in ways that go beyond the graphical capabilities of a machine. Like the Kinect (explain), like the TV connectivity (explain), like our first party titles (explain).

We at Microsoft believe that graphics can only take you so far in video game entertainment and that there are better ways to improve user experiences.

Then back it up with things like Oculus Rift support and Forza glasses...

People like myself argue that there will be a difference at launch because there haven't been consoles as similar as these before.

I'll preface this post by saying that, having never owned a Sony console and thus never become invested in any of their franchises, I'm an Xbox fan. I've preordered an Xbox One and am very happy with that decision. That said, I'm fully willing to admit that the PS4 is a more technically capable machine on paper. I'm just not sure that the difference will manifest itself at launch.

When Penello says that the difference is not as great as you might think, he's referring to right now. Developers are still learning the intricacies of both systems. It makes sense that they'll be pretty comparable during the early stages. Heck, I'm pretty sure Sony itself has said it will likely be a couple years before the true potential of the PS4 is capitalized upon. As for right now, I'm content waiting for the actual launches before making assumptions about wide gaps in performance.

With all due respect, I don't believe Albert. Compare the 7850 (1.76TF) with the 7770 (1.28TF). The performance difference is staggering. Now both the PS4 and Xbox One have better GPUs than the aforementioned (1.84TF vs 1.31TF). However, the gap between the two console GPUs is greater than the gap between the two AMD GPUs that I have just mentioned.
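A quick check of the ratio argument above, using the poster's peak TFLOPS figures. Peak compute ignores bandwidth, ROPs and everything else that separates real GPUs, so this only confirms the arithmetic, not the real-world conclusion. `percent_gap` is an illustrative helper.

```python
# Percentage gap between peak TFLOPS figures as quoted in the post.
def percent_gap(faster_tf: float, slower_tf: float) -> float:
    """How much larger (in %) the faster GPU's peak is than the slower one's."""
    return (faster_tf / slower_tf - 1.0) * 100.0


PC_GAP = percent_gap(1.76, 1.28)       # Radeon HD 7850 vs. HD 7770
CONSOLE_GAP = percent_gap(1.84, 1.31)  # PS4 vs. Xbox One

if __name__ == "__main__":
    print(f"7850 vs. 7770: {PC_GAP:.1f}%")       # 37.5%
    print(f"PS4 vs. XB1:   {CONSOLE_GAP:.1f}%")  # 40.5%
    print("Console gap larger:", CONSOLE_GAP > PC_GAP)  # True
```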

Here is badb0y's writeup:

http://www.neogaf.com/forum/showpost.php?p=74541511&postcount=621

I do not see how Microsoft will mitigate that gap unless they're using some sorcery.

I don't have a problem with that; being called out is fine, but the passive-aggressive behaviour from some is a bit overboard, I think. There are ways to do it with a calmer approach. I have no problem whatsoever with people taking issue with his claims. It's this attitude which has led to many closed threads over the past few days.

Hmm I wonder if "Technical Fellow" is going to become a Gaf meme...

I really wanted to post "I'm an expert" lol

Ok just a quick run through of points...

- What is this inherent inefficiency you speak of? Can you elaborate? It is not something I've ever heard mentioned.

- Your second point contradicts your first. If 50% more CU performance is subject to inefficiencies, why would 6% extra performance not also be subject to the same thing?

- How did you arrive at the 204GB/s figure for the ESRAM? Can you elaborate? Also, you realise this is a very disingenuous claim. YES, the bandwidth can be added together in that the DDR3 and ESRAM can function simultaneously, but this tells only a small part of the full story. The ESRAM still only accounts for a meagre 32MB of space. The DDR3 RAM, which is the bulk of the memory (8GB), is still limited to only 68GB/s, whilst the PS4's GDDR5 RAM has an entire 8GB at 176GB/s of bandwidth. This is a misleading way to present the argument of bandwidth differences.

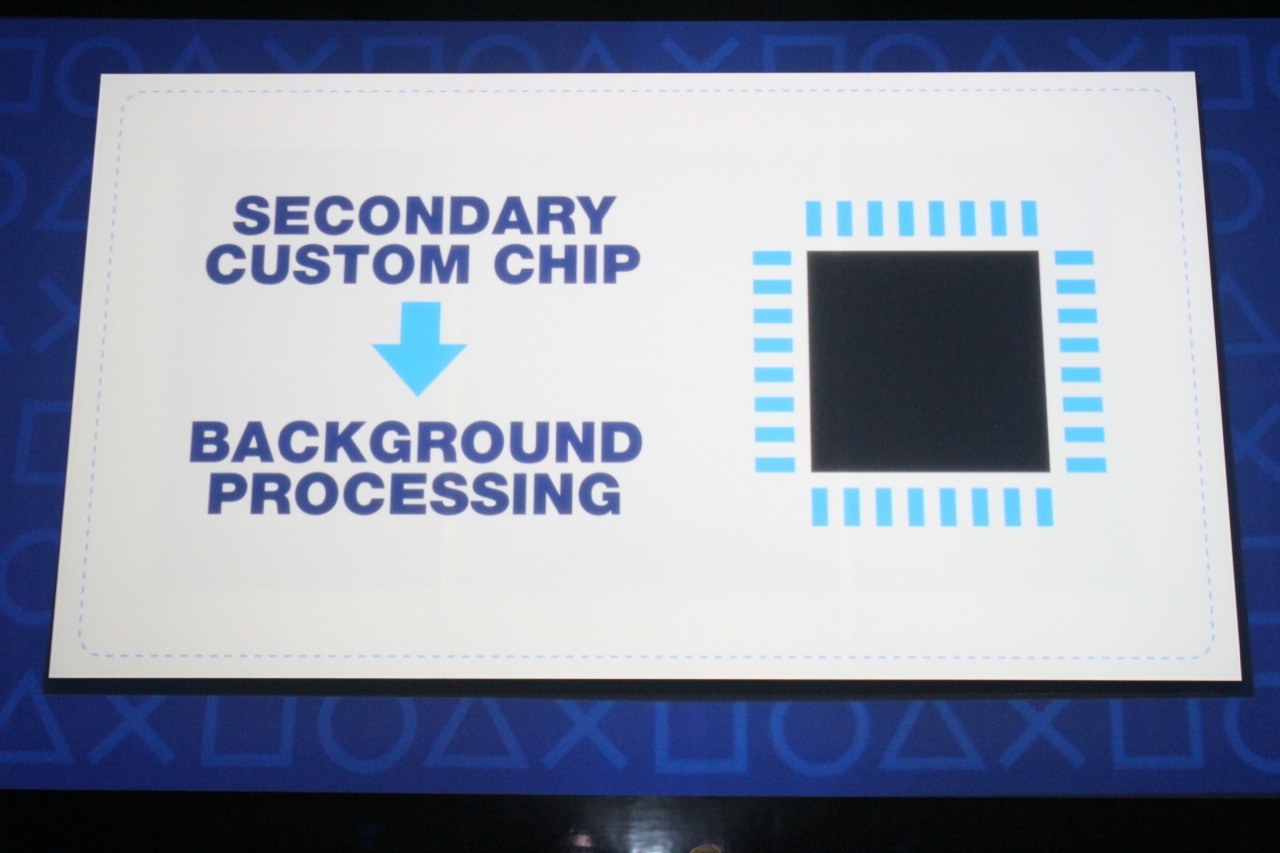

- How do you know you have 10% more CPU speed? You said you are unaware of the PS4's final specs, and rumours of a similar upclock have been floating around. It could also be argued that the XO has the more capable audio chip because the system's Kinect audio features are more demanding, something the PS4 does not have to cater to. Add to that, the PS4 does also have a (less capable) audio chip, along with a secondary custom chip (supposedly used for background processing). There's that to consider too.

- That's good that Microsoft understands GPGPU, but that does not take away from the inherent GPGPU customisations afforded to the PS4. The PS4 also has 6 additional compute units, which is a pretty hefty advantage in this field.

- This is factually wrong. With Onion plus Onion+, the PS4 also has 30GB/s of bandwidth.
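A rough model of the ESRAM point in the list above: summing 68GB/s and 204GB/s gives a real peak only when traffic is split between the two pools in just the right proportion. The sketch rests on assumptions not stated in the thread: a fixed fraction of all memory traffic goes to ESRAM, both pools run concurrently at their quoted peaks, and the slower pool determines completion time. It also ignores the 32MB capacity limit, which in practice constrains how much traffic can be steered to ESRAM at all.

```python
# Illustrative model of "adding" two memory pools that work concurrently.
# ASSUMPTIONS: fixed traffic split, both pools at quoted peak, and the
# slower side determines completion time. Capacity limits are ignored.
DDR3_PEAK = 68.0    # GB/s, quoted in the thread
ESRAM_PEAK = 204.0  # GB/s, quoted in the thread


def effective_bandwidth(esram_fraction: float) -> float:
    """Effective GB/s when `esram_fraction` of all traffic goes to ESRAM."""
    if not 0.0 <= esram_fraction <= 1.0:
        raise ValueError("esram_fraction must be in [0, 1]")
    # Time each pool needs for its share of 1 GB of total traffic.
    t_esram = esram_fraction / ESRAM_PEAK
    t_ddr3 = (1.0 - esram_fraction) / DDR3_PEAK
    return 1.0 / max(t_esram, t_ddr3)


if __name__ == "__main__":
    for f in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"{f:.2f} of traffic in ESRAM -> {effective_bandwidth(f):.0f} GB/s")
```

Under these assumptions the combined 272GB/s is reached only when exactly three quarters of traffic goes to ESRAM (204 / 272 = 0.75); every other split lands somewhere between 68GB/s and 272GB/s.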

Albert, I understand you're not an expert on the X1's hardware, so trying to argue against a whole forum with experts on the subject is suicidal. If you're gonna say that there is no point arguing because you say so, the least you could do is accept an AMA: just take a list of questions, give them to your tech guy at MS, and come back with the answers. Otherwise your reputation in here is pretty much damaged beyond repair; this is NeoGAF after all.

So you won't even try to defend your previous posts or elaborate on them? You're talking technical here; you can't just make factually incorrect statements and not try to further explain yourself when someone proves you wrong.

Then you didn't do anything and have left us in the same place as we were before.

The fact that you're not commenting on this is worrisome.

Try us. I mean it. People will understand, we're trying to understand, and you're not helping.

When the mass gaming press are leading most people to believe that the two systems are identical in power, they have absolutely no reason to concede to the PS4 being more powerful. They aren't going to risk that kind of admission going viral and further lowering interest in their console just to appease GAF.

512KB at 128 bytes / clock = 4096 clocks. Pretty simple maths man.
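The arithmetic above, spelled out. It takes the poster's premise that a full flush writes back the whole 512KB cache at 128 bytes per clock; that every line is dirty and that write-back throughput is the only cost are assumptions of the estimate, not measured behaviour. `flush_clocks` is a hypothetical helper.

```python
# Clocks needed to write back a cache at a fixed bytes-per-clock rate.
def flush_clocks(cache_bytes: int, bytes_per_clock: int) -> int:
    """Ceiling of cache_bytes / bytes_per_clock (a partial chunk still costs a clock)."""
    return -(-cache_bytes // bytes_per_clock)


if __name__ == "__main__":
    print(flush_clocks(512 * 1024, 128))  # 4096
```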

Yeah, and regardless of the name, there's no doubt that if you're able to get that title, you're a genius as far as computer science is concerned.

This isn't the technical fellow that Albert was talking about, but one of them who's working on the Xbox One is Dave Cutler:

Dave Cutler

Born: March 13, 1942 (age 71), Lansing, Michigan, USA

Occupation: Senior Technical Fellow at Microsoft

VMS

In April 1975, Digital began a hardware project, code-named Star, to design a 32-bit virtual address extension to its PDP-11. In June 1975, Cutler, together with Dick Hustvedt and Peter Lippman, were appointed the technical project leaders for the software project, code-named Starlet, to develop a totally new operating system for the Star family of processors... [snip]

...Xbox

As of January 2012, a spokesperson for Microsoft has confirmed that Cutler is no longer working on Windows Azure, and has since joined the Xbox team.[6] No further information was provided as to what Cutler's role was, nor what he was working on within the team.

In May 2013, Microsoft announced the Xbox One console, and Cutler was mentioned as having worked on the development of the host OS portion of the system running inside the new gaming device. Apparently Cutler's work was focused on creating an optimized version of Microsoft's Hyper-V host OS specifically designed for Xbox One.

Sorry, I think you've misunderstood what Albert meant by "Last post? Not even close".

I believe he was referring to a post earlier that said that Albert's last post would be his last post in the thread.

Just something I noticed while trying to catch up on this topic.