Kelli Lemondrop

Banned

Comparing two reference cards across a wide range of benchmarks is now "spreading FUD and horseshit". Ok, whatever you say.

I've been in this for a while, and reference cards are how GPUs have been compared for over two decades now.

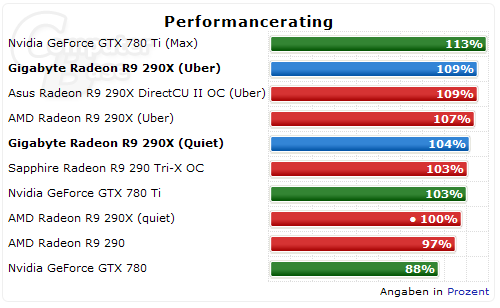

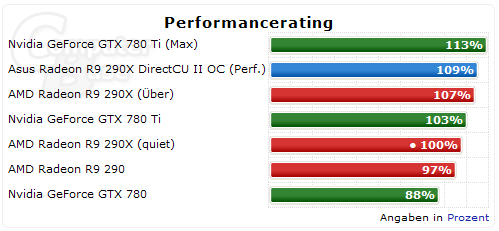

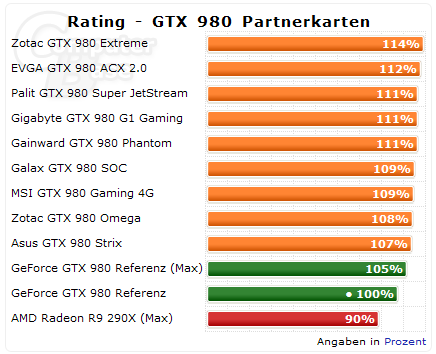

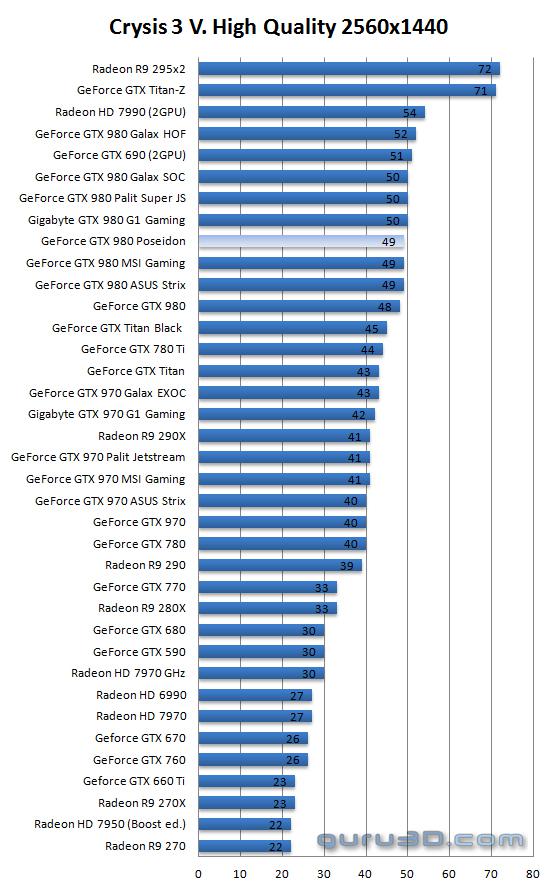

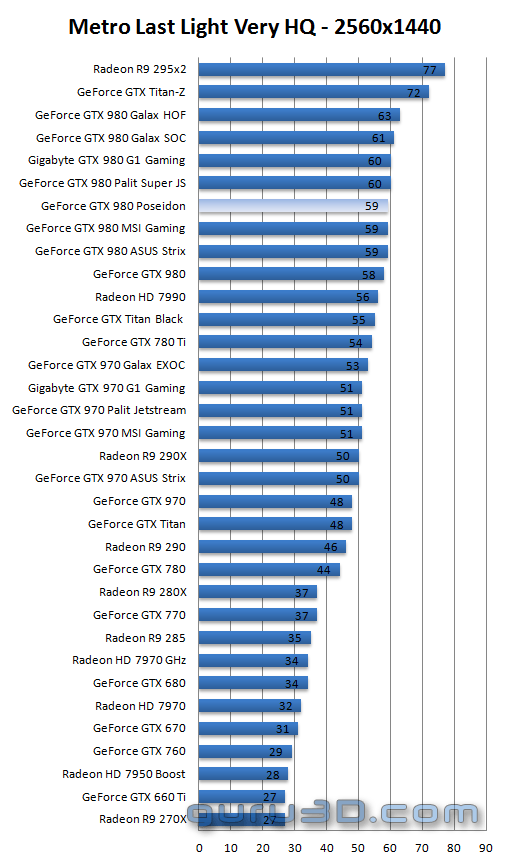

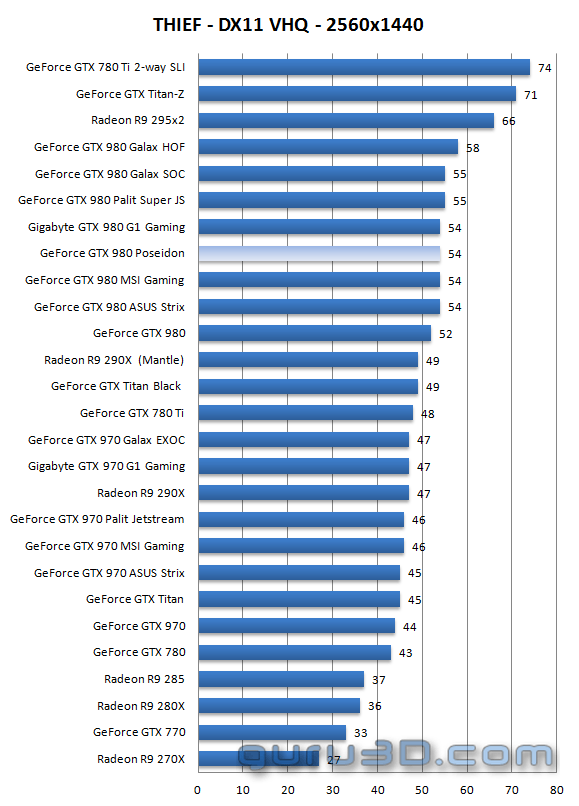

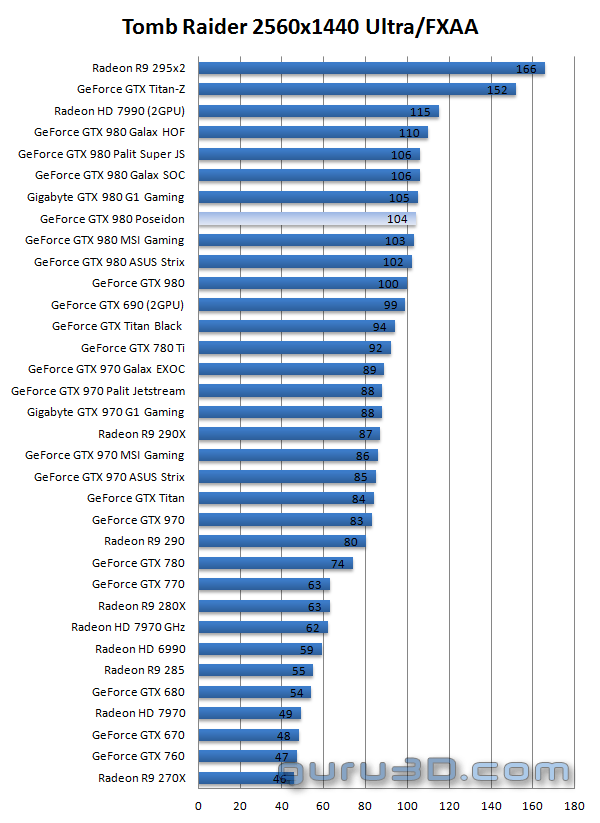

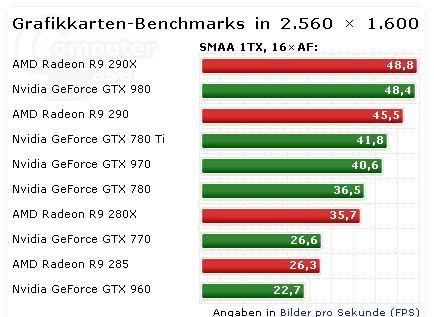

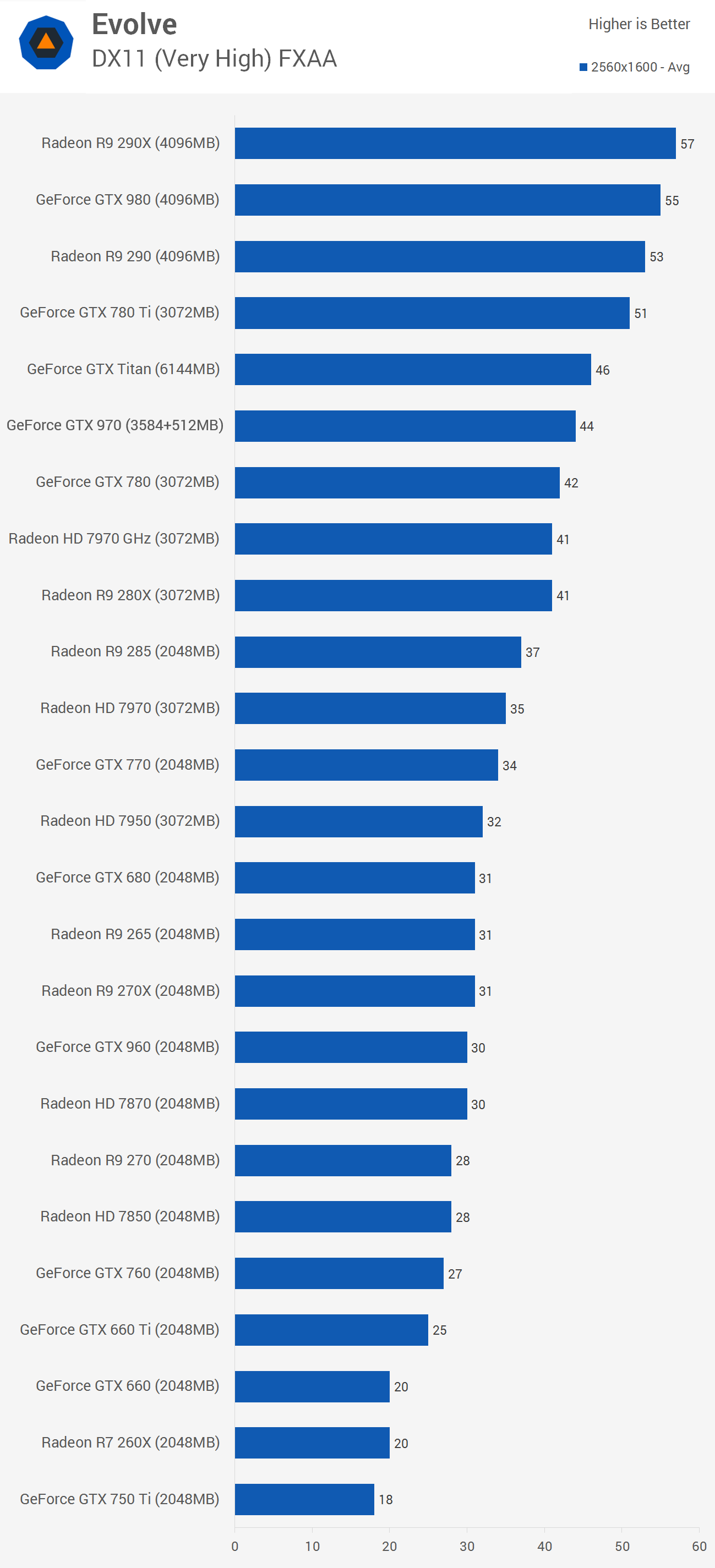

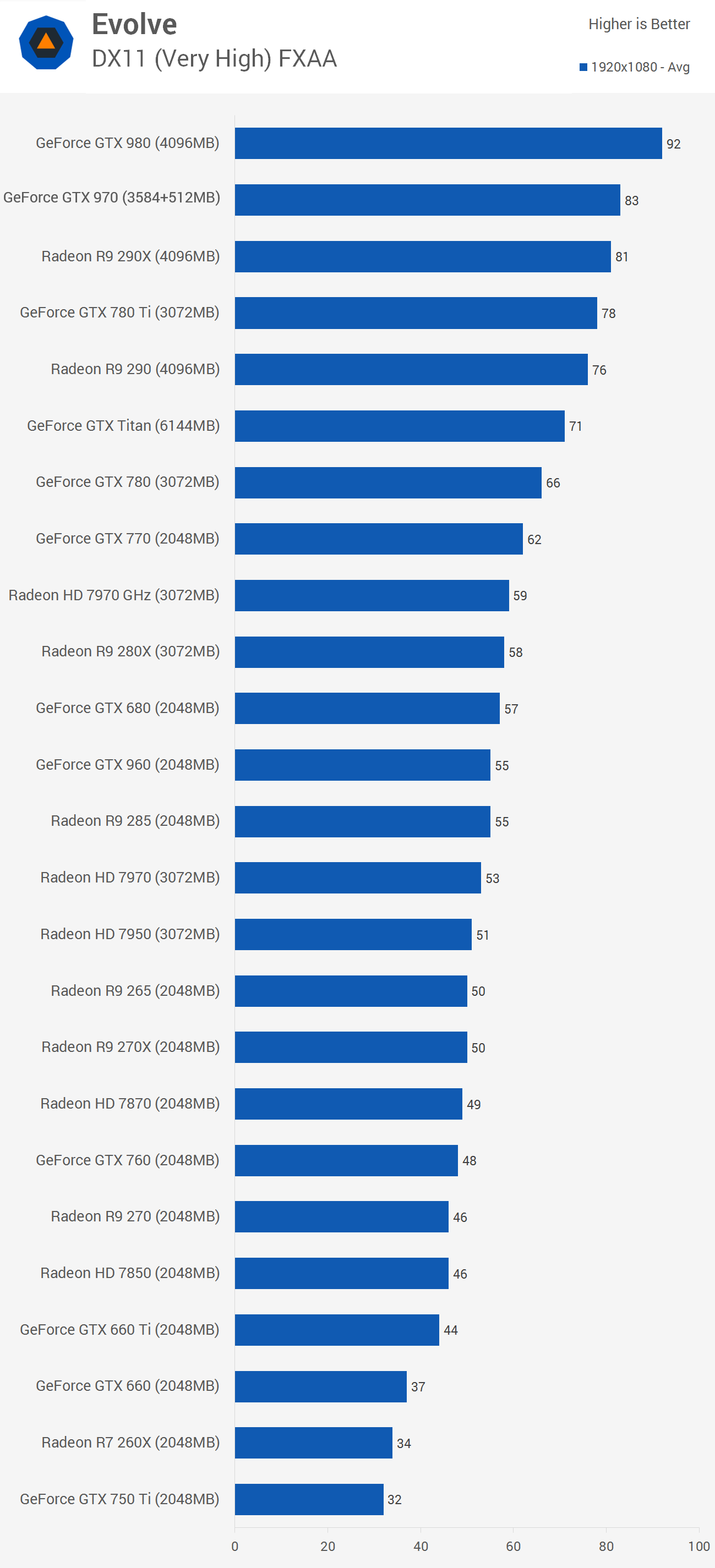

You are deliberately comparing a model you fucking KNOW throttles under load. Non-reference designs don't do that. The 980 is 10% faster than the 290X at 1080p, and less than that at 4K, when neither card is throttling and neither is overclocked. Hell, that bench shows the 290 only being 1% slower than the 290X. That's complete nonsense too, and I call into question the legitimacy of your source. I don't give a rat's ass about the reputation you have around here for your work on Dark Souls and GeDoSaTo; when you are full of shit, I'm going to call you out on it.