can we not do this fanboy nonsense

The title of the thread is already fanboy nonsense.

Does it work on modern versions of Windows? I think you'd need to use either DOSBox or a VM.

You can install DOS on modern systems; it's just more convenient to use DOSBox.

Yeah, that's always the problem. Companies aren't going to invest as much in games they charge 5-10 dollars for as in 60-dollar new games.

Plus controls, not many people have something like this, and touchscreen only limits the scope of the game.

^basically the NX?

Two of the cores are performance cores and the other two are power-saving cores, and only two cores are visible at a time. It's not clear whether the scheduler uses clustered switching or the in-kernel switcher (IKS), but it clearly is not Heterogeneous Multi-Processing (HMP).
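To show why "only two cores visible" rules out HMP but can't tell the other two apart, here is a toy model in C. It assumes a symmetric design (the same number of big and little cores), and the enum and function names are made up for illustration, not taken from any real kernel.

```c
/* Hypothetical names for the three big.LITTLE scheduling models. */
enum sched_model {
    CLUSTER_SWITCHING,  /* the whole cluster swaps: big OR little is active */
    IN_KERNEL_SWITCHER, /* each big core is paired with one little core */
    HMP                 /* every physical core is schedulable at once */
};

/* Number of logical cores the OS sees, assuming cores_per_cluster big
 * cores and the same number of little cores (an assumption of this sketch). */
unsigned visible_cores(enum sched_model model, unsigned cores_per_cluster)
{
    switch (model) {
    case CLUSTER_SWITCHING:
    case IN_KERNEL_SWITCHER:
        /* only one core of each big/little pair runs at any time */
        return cores_per_cluster;
    case HMP:
        /* both clusters are exposed simultaneously */
        return 2 * cores_per_cluster;
    }
    return 0; /* not reached for valid enum values */
}
```

For a 2+2 chip, both switching models expose two cores while HMP would expose four, so seeing two cores is consistent with either clustered switching or IKS.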

Isn't the Wii U GPU at over 300 GFLOPs?

Even the OP isn't relevant to the discussion. We don't even see any comparisons between the iPhone and the Wii U.

176. Different architecture anyway, so a direct comparison is pointless.

How is this relevant to the discussion?

I thought it could run at double that without over-volting or any risk of CPU/GPU damage.

EDIT: It might require safe overclocking, but on a home console that doesn't hurt battery life, only the power bill.

Doesn't really matter when the game doesn't even look fun.

Where'd you get that? It's built on a 45nm fabrication process and runs at 550MHz. Running at 1100MHz in the Wii U's form factor on 45nm...
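For anyone wondering where the 176 and "over 300" numbers come from, here is a rough sketch of the usual peak-throughput arithmetic in C. The 160 shader ALU count and the 2 FLOPs per ALU per cycle (one multiply-add) are assumptions, not figures from this thread; only the 550MHz and 1100MHz clocks are.

```c
/* Theoretical peak single-precision throughput in GFLOPS.
 * Assumes every shader ALU retires one multiply-add (2 FLOPs) per cycle,
 * which is an idealised upper bound, not a measured result. */
double peak_gflops(unsigned shader_alus, unsigned clock_mhz)
{
    const unsigned flops_per_alu_per_cycle = 2; /* multiply + add */

    /* ALUs * FLOPs/cycle * MHz gives MFLOPS; divide by 1000 for GFLOPS */
    return (double)shader_alus * flops_per_alu_per_cycle * clock_mhz / 1000.0;
}
```

Under those assumptions `peak_gflops(160, 550)` gives 176.0 and `peak_gflops(160, 1100)` gives 352.0, so the higher figure needs either double the clock or double the ALUs.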

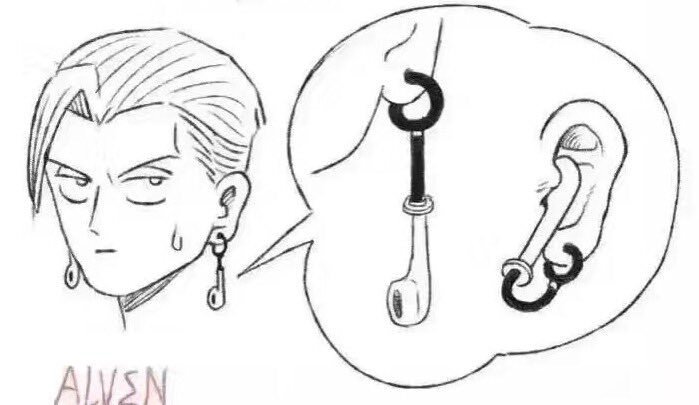

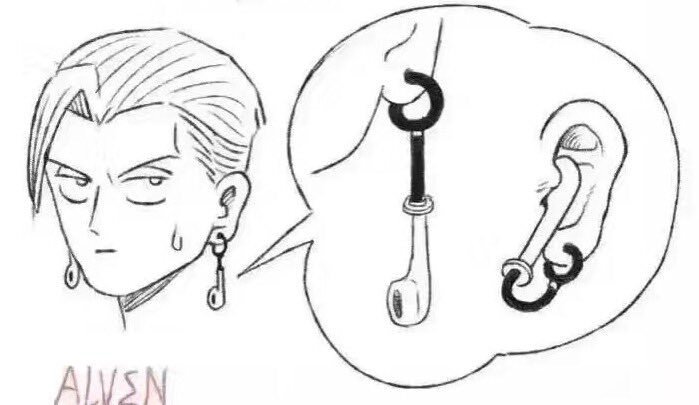

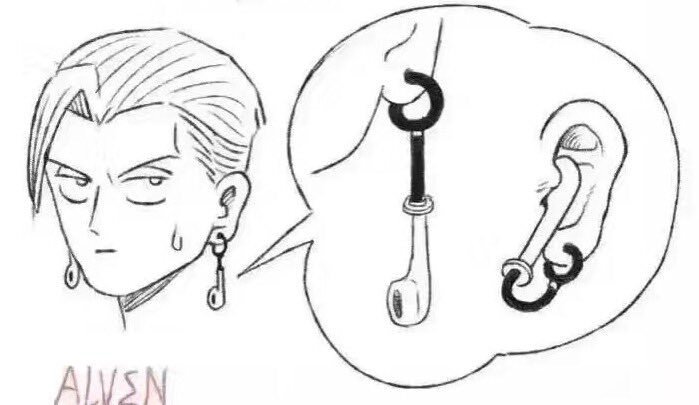

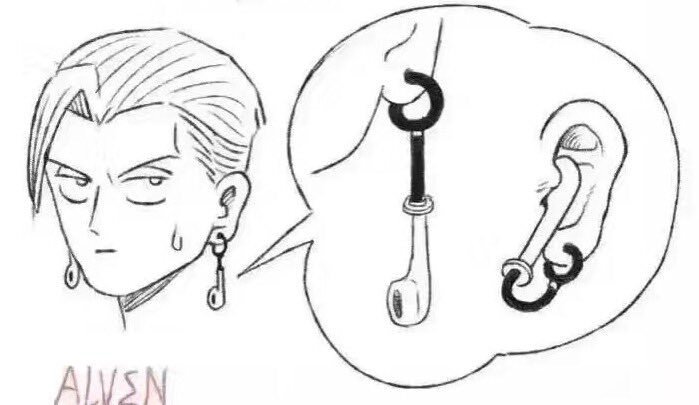

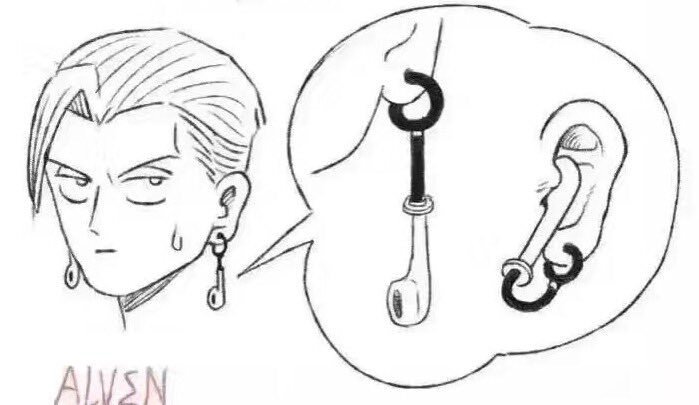

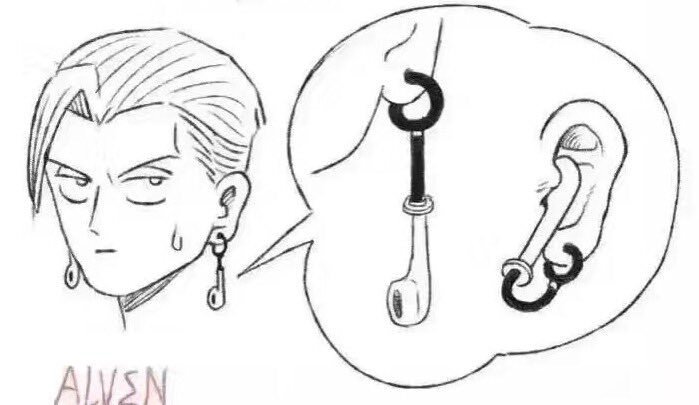

There's nothing quite like losing a $160 earpiece when it falls out down a storm drain, into someone's lawn, or any number of places these could end up lol.

What are you talking about? Apple's Metal graphics API is very advanced

Problem solved.

Not always true, as they bloat the OS with each revision, which bogs down the speed to some degree.

A lot of iOS features go wasted.

Makes sense. The A9X from last year matched the X1 for GPU performance.

Not really.

LOL, people are going to keep their wireless earpieces from getting lost by.......attaching a wire to them....aren't they.

Cracking up here.

Problem solved.

Can't wait for this meme to die. For pretty much anything other than GPU workloads, the Jaguar will destroy last gen CPUs.

emulators on pc exist

Amazing.

Jaguar is a last gen mobile CPU.

It won't destroy anything lol

It is weaker than the Intel Pentium M released in 2003.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

/* ... */
/* (elided in the post; vertex_t, TRUE and graph_load() are presumably
   declared here) */

/* the graph */
vertex_t *G;

/* number of vertices in the graph */
unsigned card_V;

/* root vertex (where the visit starts) */
unsigned root;

void parse_input(int argc, char **argv);

int main(int argc, char **argv)
{
    unsigned *Q, *Q_next, *marked;
    unsigned Q_size = 0, Q_next_size = 0;
    unsigned level = 0;

    parse_input(argc, argv);
    graph_load();

    Q      = (unsigned *) calloc(card_V, sizeof(unsigned));
    Q_next = (unsigned *) calloc(card_V, sizeof(unsigned));
    marked = (unsigned *) calloc(card_V, sizeof(unsigned));
    if (!Q || !Q_next || !marked)
        return 1; /* allocation failed */

    /* start from the root; mark it so it is never enqueued again */
    Q[0] = root;
    Q_size = 1;
    marked[root] = TRUE;

    while (Q_size != 0)
    {
        unsigned Q_index;
        unsigned *swap_tmp;

        /* scanning all vertices in queue Q */
        for (Q_index = 0; Q_index < Q_size; Q_index++)
        {
            const unsigned vertex = Q[Q_index];
            const unsigned length = G[vertex].length;
            unsigned i;

            /* scanning each neighbor of each vertex */
            for (i = 0; i < length; i++)
            {
                const unsigned neighbor = G[vertex].neighbors[i];

                if (!marked[neighbor]) {
                    /* mark the neighbor */
                    marked[neighbor] = TRUE;
                    /* enqueue it to Q_next */
                    Q_next[Q_next_size++] = neighbor;
                }
            }
        }
        level++;

        /* swap the queues: the next frontier becomes the current one */
        swap_tmp = Q;
        Q = Q_next;
        Q_next = swap_tmp;
        Q_size = Q_next_size;
        Q_next_size = 0;
    }

    free(Q);
    free(Q_next);
    free(marked);
    return 0;
}
```

This isn't a thread about iPhone vs other Mobile tech.

This is supposed to be a thread about iPhone vs consoles, Nintendo ones in particular.

There are still no relevant benchmarks in this thread.

But it's not advanced enough that it has beaten home consoles in performance and graphical quality of games.

Not really.

The closest we'll get to 1080p gaming from Nintendo (on the Plus line).

I'm excited to see where they head with the mobile market and how they deal with Android too.

Personally, I fell for the whole "Apple TV and mobile will kill consoles" idea, but I now realise it won't happen until battery technology at least catches up, and that's not going to happen either because they keep making them thinner.

It will take more than better batteries to kill consoles.

They already have that on the much weaker Wii U.

The Wii U is much weaker than what?

What are the chances of the iPhone 7 being more powerful than the NX?

I'll bite.

Come back when your Nexus 6 has acceptable read/write performance.

Just to make it clear, I'm not an Apple fanboy. I've owned and used phones as primary devices across webOS (HP Pre 3, Palm Pre 2), Windows Phone 7 (HTC Radar), Windows Phone 8 (Nokia Lumia 820, Lumia 620), Windows Phone 8.1 (HTC 8X), BlackBerry 10 (BlackBerry Z10), Android 4.4 (Motorola Moto X) and iOS (iPhone 5s) over the last 5 years, so I think I have a fair say when discussing software and hardware across devices these days.

Pretty high imo. Nintendo can't use components as expensive as Apple's.