Maybe the devs aren't wrong and teraflops aren't everything; maybe more factors decide whether a game performs better or worse.

Maybe teraflops are a theoretical peak indicator for certain tasks, not a good indicator of overall real-world performance.

CUs aren't the only difference between the consoles. The PS5 GPU runs at a higher clock speed. Cerny explained in his PS5 talk why they chose fewer CUs at a higher GPU frequency, and why their memory management and I/O optimizations matter too.

Having extra CUs helps in some areas but adds nothing in others, where the PS5's advantages, like its higher GPU frequency, matter more.
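For context on where the teraflop numbers come from, here is a rough sketch of the usual peak FP32 formula for RDNA 2 GPUs: CUs × 64 shader ALUs × 2 FLOPs per clock (one fused multiply-add) × clock speed. It assumes the commonly quoted specs (PS5: 36 CUs at up to 2.23 GHz; XSX: 52 CUs at 1.825 GHz). It only measures shader ALU peak, which is exactly why it says nothing about clock-bound work or memory and I/O.

```python
# Theoretical peak FP32 throughput for an RDNA 2 GPU (assumed formula):
# each CU has 64 shader ALUs, each doing one fused multiply-add (2 FLOPs) per clock.
def peak_tflops(compute_units: int, clock_ghz: float) -> float:
    """Peak TFLOPS = CUs x 64 ALUs x 2 FLOPs x clock (GHz) / 1000."""
    return compute_units * 64 * 2 * clock_ghz / 1000


# Commonly quoted console specs (assumption: PS5 uses its maximum variable clock).
consoles = {
    "PS5": (36, 2.23),   # 36 CUs, up to 2.23 GHz
    "XSX": (52, 1.825),  # 52 CUs, fixed 1.825 GHz
}

for name, (cus, clock) in consoles.items():
    print(f"{name}: {peak_tflops(cus, clock):.2f} TFLOPS peak at {clock} GHz")
```

This gives roughly 10.28 TFLOPS for PS5 and 12.15 TFLOPS for XSX, so XSX has about 18% more peak compute, while PS5 clocks about 22% higher. That higher clock speeds up any part of the GPU that scales with frequency rather than with CU count, which the teraflop figure doesn't capture at all.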

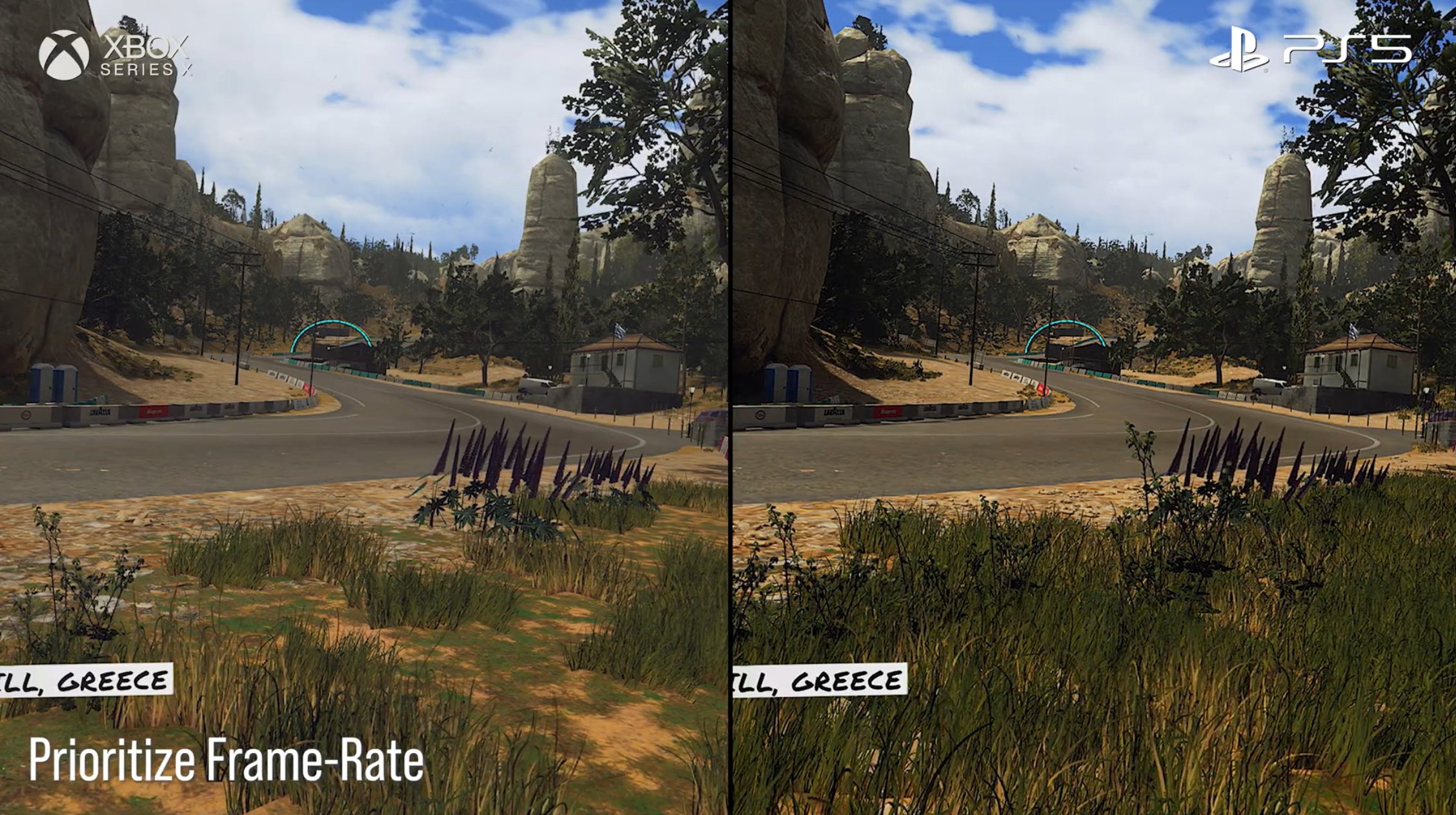

Their PC version settings showed that both console versions use pretty much the same settings, so this isn't the game holding back performance on one console to artificially force an unneeded parity. We're also seeing basically tied results in most multiplatform games, so it sounds like the real-world performance of both consoles is about the same.

Don't forget #puddlegate2.0!

Now seriously, I assume they'll patch that reflections issue, and the same goes for the texture filtering looking worse on XSX.