ZywyPL


Ant versus an elephant.

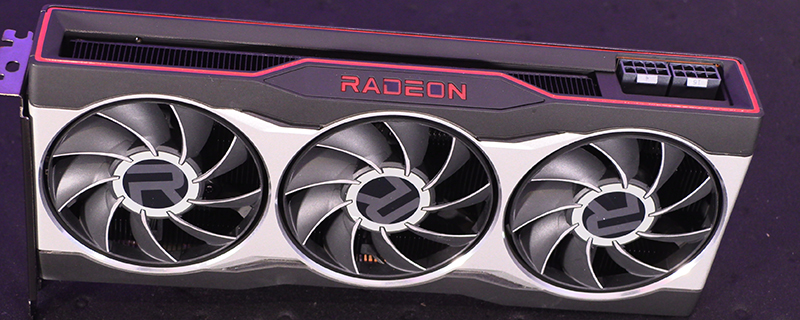

Ouch, the graphs are painful to watch... But I guess once games start to exceed 10GB of VRAM, the Radeons will close the gap.


It's more reasonable to expect that further optimisation can improve things, given that the performance shown here doesn't seem much better than what was demoed on the XSX.

Yeah, Minecraft RTX is kind of a worst-case scenario for these cards right now. Ampere has better RT capabilities in a general sense, and the architecture also seems to really shine in fully path-traced games, which Minecraft is.

In addition, the game is optimised for Nvidia's cards/RT solution, so it will perform even worse on AMD.

We already saw this earlier in the thread in the initial reviews where a few reviewers benchmarked with Minecraft to show RT performance.

Luckily for AMD, there are only 2 path-traced games right now (Minecraft and Quake). RT hardware in this generation of GPUs just isn't performant enough to allow path tracing with modern graphical quality/complexity; maybe 3 generations from now GPU hardware will be powerful enough to make it somewhat possible.

In the meantime we will pretty much only be seeing hybrid rendered titles.

What happens with a hybrid game like Cyberpunk that will use ray tracing for at least 5 different things?


This was probably posted at some point, but AMD is still miles behind in some CPU-heavy DX11 games. This was the reason I switched from a 290 to a 970: GPU performance was similar, but in some games the AMD card was just killing my 2600K:

This is not relevant for new games, but there are probably more titles like this (I wonder how FC4 does in the first village run, which is a massive CPU hog...).

Also, the 290 aged significantly better than the 970 in the DX11 generation, I'm similarly having a hard time believing your 2600k was causing significant enough issues to reverse that.

I addressed this before here:

Those results seem a little hard to believe - it's getting less than half the frames in Watch Dogs 2 as in its sequel? I assume Rattay City is the most demanding part of the game, because the 6800 XT gets beyond those 1080p numbers in 4K. If this is true, which I can't tell because no one else is benchmarking these games, this seems like a really limited scenario.


edit: wouldn't Total War be the gold standard for CPU-intensive games? It's within the margin of error of the 3080 in Warhammer II in DX11:

AMD Radeon RX 6800 and RX 6800 XT Review - OC3D

AMD have, to borrow a bit of sporting parlance, solved CPUs. The Ryzen range came out of the doors as excellent and the next versions only refined and enhanced them until we reach a stage with the Ryzen 5000 series where they are a match for anything the opposition can bring to the... (www.overclock3d.net)

Excuses, excuses, excuses. Ampere is simply better for ray tracing than RDNA2.


In pure RT/PT it definitely is:

By that time it will be 2 or 3 years and both cards will be too slow.

Excuses, excuses, excuses. Ampere is simply better for ray tracing than RDNA2.

What on earth are you talking about? I think you have spent so long staring at your Nvidia 3080/3090 desktop wallpaper today that it might have affected your reading comprehension.

I've never stated that RDNA2 has better RT performance than Ampere. I've repeatedly stated that Ampere simply has a more performant RT solution and that if you need the best RT performance you should buy the 3000 series this generation. Again, I've never stated otherwise. Nor has any reasonable person in this thread, so I have no idea where this take is coming from.

As for my quoted post you are replying to, I pretty much explain it all in there. You may want to re-read it without the Nvidia goggles as I clearly state that the huge improvement in path tracing performance is due to how the Ampere architecture handles RT and when not hybrid rendering the cards are really able to stretch their wings.

You see this also with Turing vs Ampere where the biggest gains in performance for Ampere over Turing with RT on are path traced games such as Minecraft and Quake.

I mentioned that this title was a worst-case scenario for RDNA2 vs Ampere, given that Ampere really shines in fully path-traced games; that is where we see the biggest gains from its RT solution. To amplify the already wide performance delta between the two in path-traced games, Minecraft RTX is only optimized for Nvidia's cards/RT solution, so it will perform even worse on RDNA2-based cards than it otherwise would. These are simply facts.

The perfectly reasonable point I'm making is that while Minecraft certainly performs badly on RDNA2 cards with RT enabled, the performance gap we see there is not representative of most real-world gaming scenarios, which use hybrid rendering instead. While the 3000 series is still better at RT in most cases, and we will see it ahead in most cases when RT is enabled, the gap is nowhere near as big as it is in Minecraft. The takeaway for any reasonable person is that Nvidia has a better RT solution, but not one that is 100%+ better in real-world scenarios.

Ampere is simply better for ray tracing than RDNA2.

I'm not going to waste my time reading this dissertation. You made excuses as to why RDNA2 performs worse than Ampere in ray tracing, such as games being optimized for Ampere. However, now you're backtracking.

I'm not backtracking on anything, nor did I make any excuses. If you are not even going to bother reading reasoned replies and posts on a discussion forum, then why are you here? Please stop putting words in my mouth, or fantasizing about what you would like me to be saying so that it's easier to argue your points, rather than addressing what I'm actually saying; it is very tiresome. Your hot take was handily slapped down by my reply, and now you are retreating with your tail between your legs.

Speaking of making excuses, aren't you the guy who bought both a 3080 and a 3090 and has a picture of them as your actual real-life desktop wallpaper? Didn't you then, after being triggered by RDNA2 being a more efficient architecture, make an entire thread trying to downplay its advantages and state that "No guys, for realz Ampere is actually secretly the more efficient architecture!"?

RDNA2 Isn't As Impressive As It Seems

RDNA2 is manufactured on the "7nm" manufacturing process of Taiwan Semiconductor Manufacturing Company (TSMC), whereas Ampere is manufactured on the "8nm" manufacturing process of Samsung. Despite the actual sizes of these manufacturing processes not being consistent with their marketing names... (www.neogaf.com)

Didn't you try to give this architectural overview while knowing almost nothing about how GPU architectures work, and without even being very proficient with computers/technology? You made a thread asking for help because you didn't even know which slots on your motherboard to put your RAM in. While that in and of itself is not a crime or something to be ridiculed over, it is only one in a long line of posts from you asking beginner desktop PC questions, which makes it hilarious that you would then try to act as a GPU architecture expert, or as someone with enough knowledge to give any kind of comparison or breakdown.

It was simply a fanboy thread designed to put in a lot of:

Excuses, excuses, excuses. RDNA2 is simply better for efficiency than Ampere.

Ampere is better in ray tracing combined with rasterization as well.

Also, in regard to Dirt 5 - the one game in which RDNA 2 performs better in ray tracing - it's been proven that RDNA 2 cards render the game with worse image quality than Ampere cards when ray tracing is on and when it's off; it's also been proven that Ampere cards render the game with more effects that hamper performance (e.g. more shadows).

So, RDNA 2 cards cheat in order to perform better in Dirt 5 than Ampere.

I'm too smooth to engage in petty arguments based on ad hominems. This is for the rough jacks; I'm a smooth cat, baby!

Sure John:


Those are bullshit benchmark "results." Anyone can create a graph with "results."

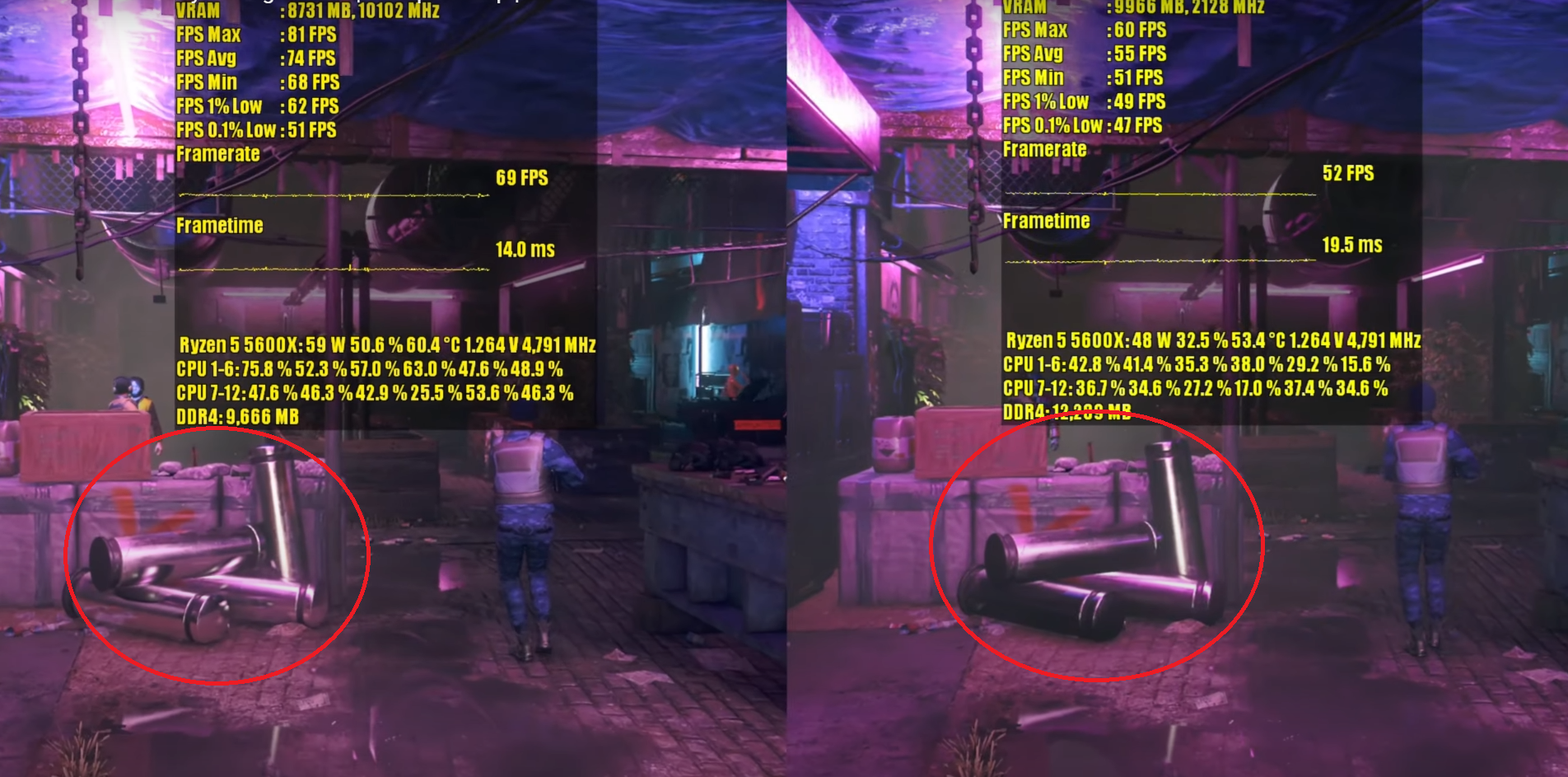

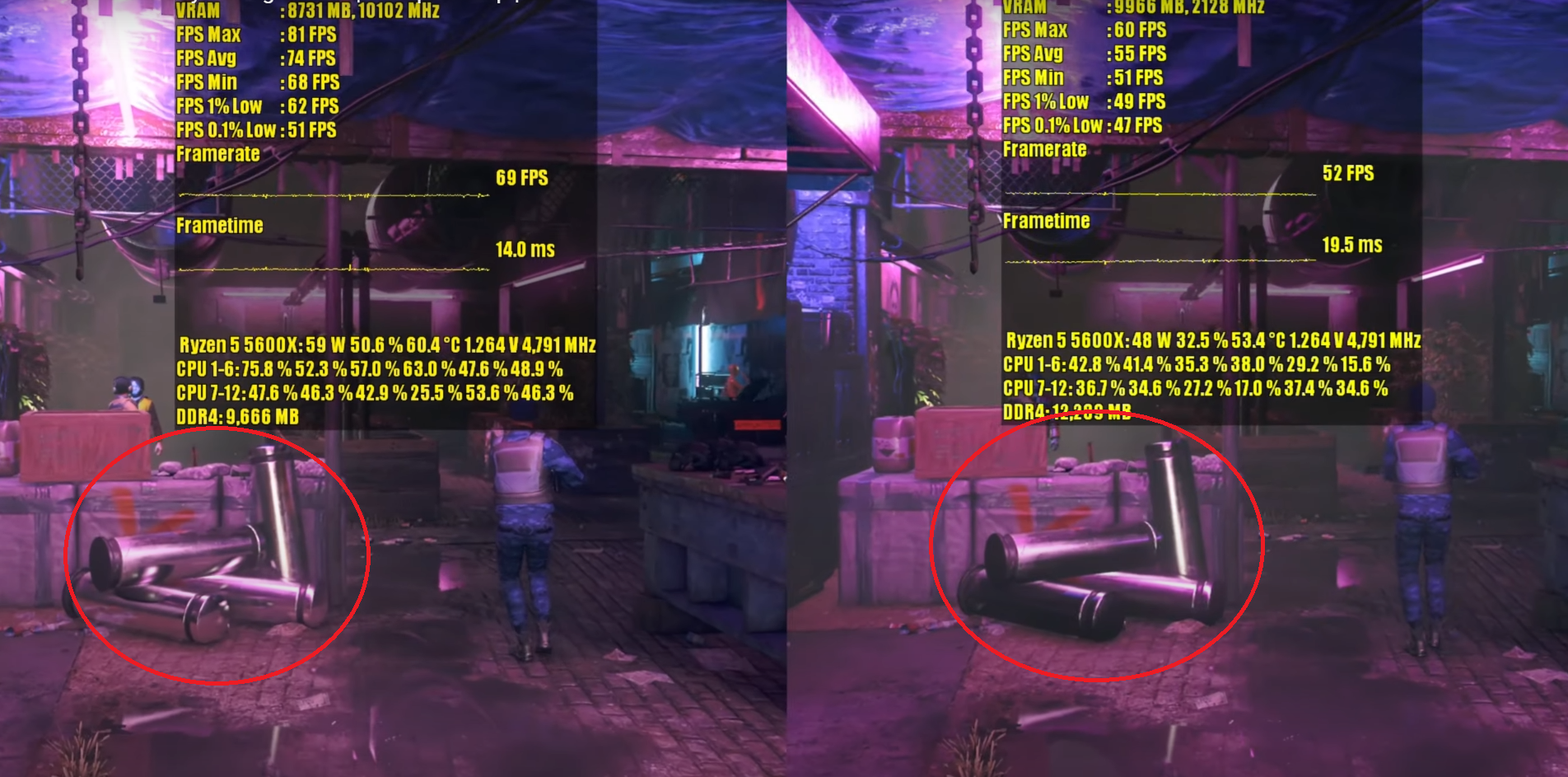

Here's actual footage of the RTX 3080 vs the RX 6800 XT in ray tracing across six games.

Go to 13:03 to see both cards running Watch Dogs: Legion at 1080p and 14:34 to see them running the game at 1440p.

It's AMD OPTIMIZED clearly, just look at the quality of it:


My understanding for this case was the driver was bugging out on AMD cards causing effects to not render properly. I believe this was called out in AMD's review guidelines/press deck if I'm remembering correctly. Presumably this will be fixed with a driver update (was it already fixed with the recent update? I can't remember). It is possible performance may drop on AMD cards once the driver update hits, we won't really know until then.

Yea, I mentioned a week or so ago that the reflections on the trash bins are missing in the RDNA 2 rendering of the game.

Ironically, RDNA 2 cards still run the game worse with ray tracing enabled despite producing inferior image quality.

Unbelievable.

I wonder if it's this or the same thing they did on consoles, where many materials (like some metal objects) just don't reflect, to save performance.

Yeah, WD:L can't be used for any benchmarking with RT on.

Now moving on to Godfall RT: this looks like some screen-space bullshit and not RT.


2020: Lisa did such things to Huang that some people feel the urge to claim ComputerBase is not a well-known German source with a hell of a strong reputation, but just "anyone" who can "create a graph with 'results'".

Dirt 5 is a DXR 1.1 game; I don't know if NV even supports it.

If not, it's not even an apples-to-apples comparison (and good job, Huang).


Yikes.

Shit is in stock on Mindfactory, to whom it may concern:

6800 - 769€+ (claimed $579)

For reference (also all in stock):

3070 - 689€+ (claimed $499)

3080 - 1019€+ (claimed $699)

3090 - 1779€+ (claimed $1499)


Those are bullshit benchmark "results." Anyone can create a graph with "results."

Also, in regard to Dirt 5 - the one game in which RDNA 2 performs better in ray tracing - it's been proven that RDNA 2 cards render the game with worse image quality than Ampere cards when ray tracing is on and when it's off; it's also been proven that Ampere cards render the game with more effects that hamper performance (e.g. more shadows).

So, RDNA 2 cards cheat in order to perform better in Dirt 5 than Ampere.

Go to 3:30 and watch until 5:56.

www.techspot.com


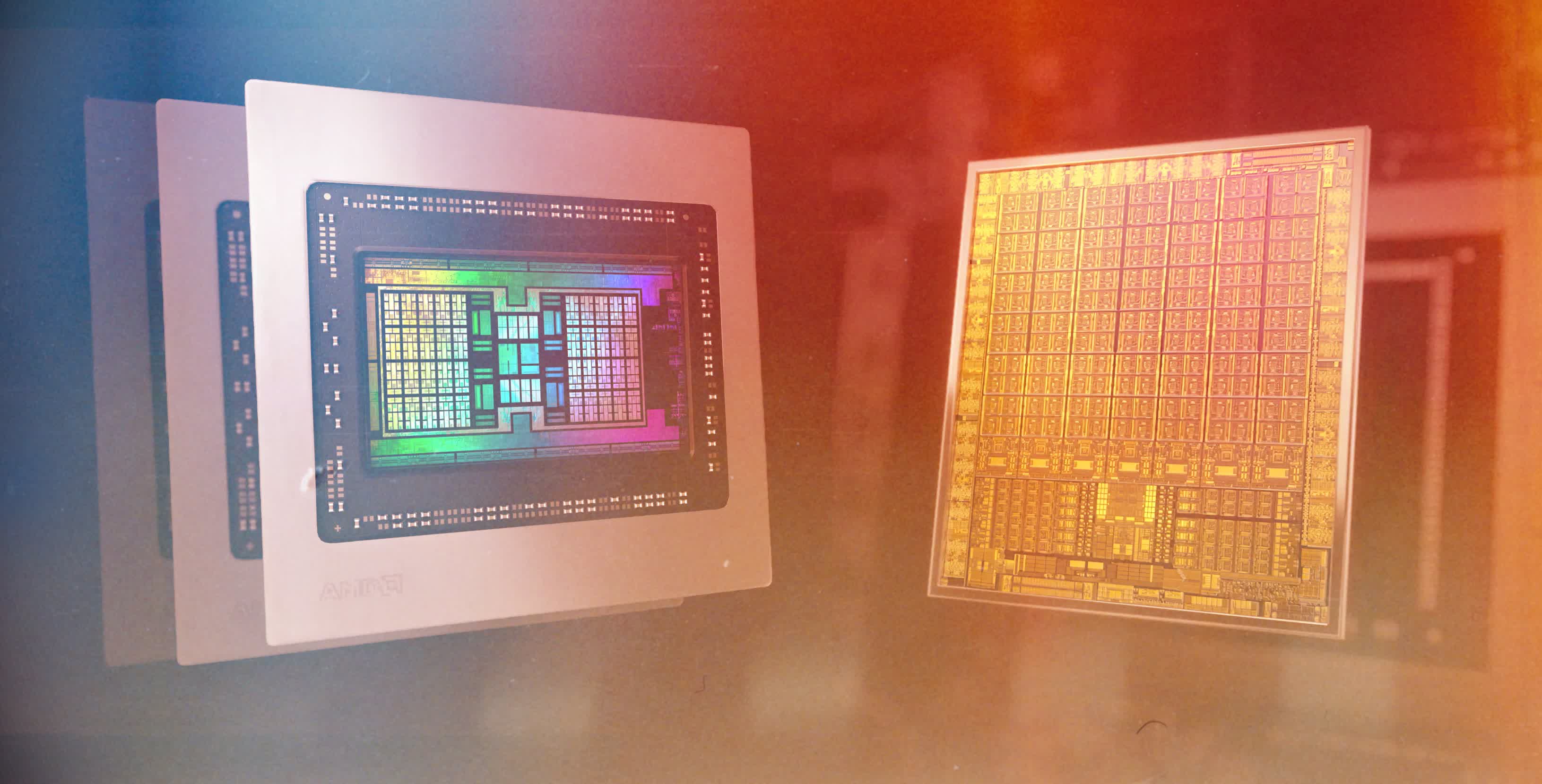

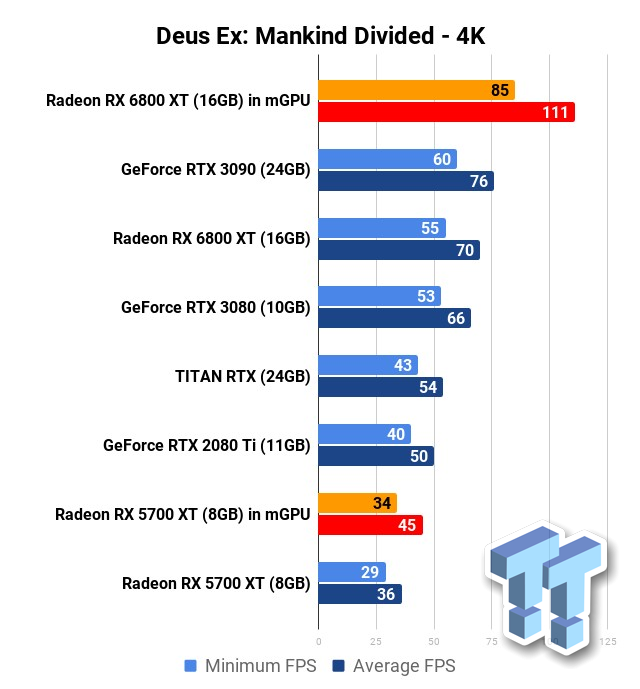

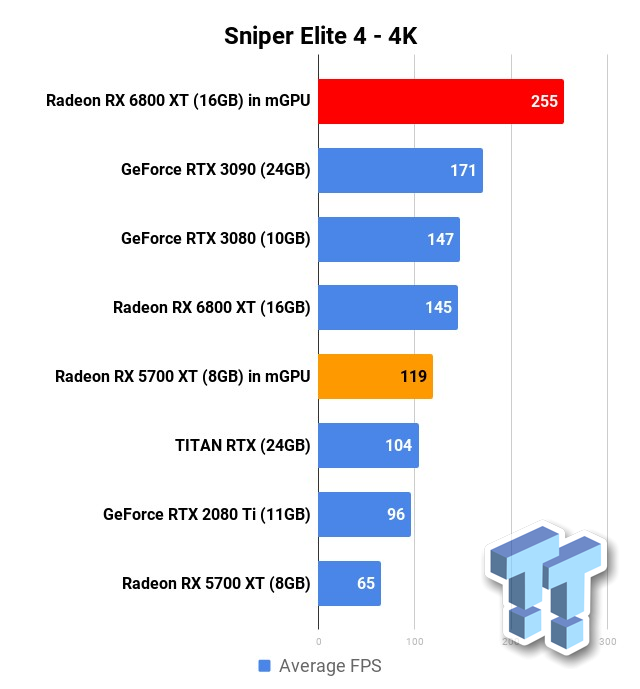

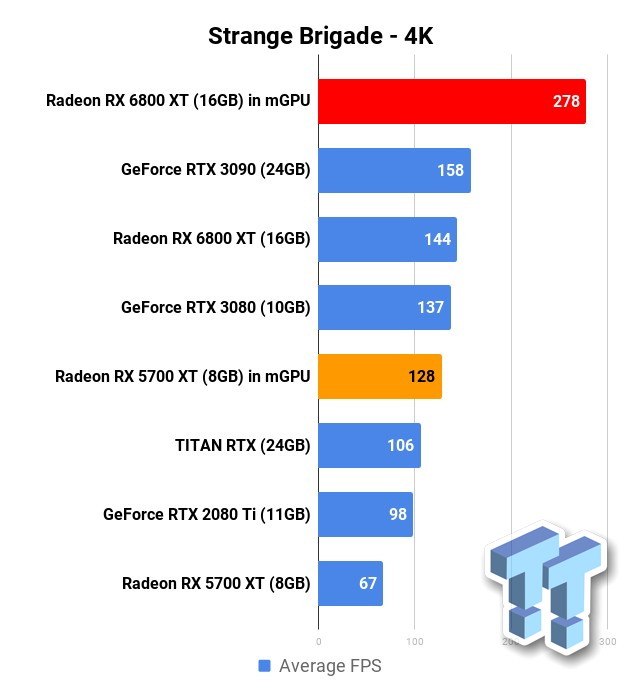

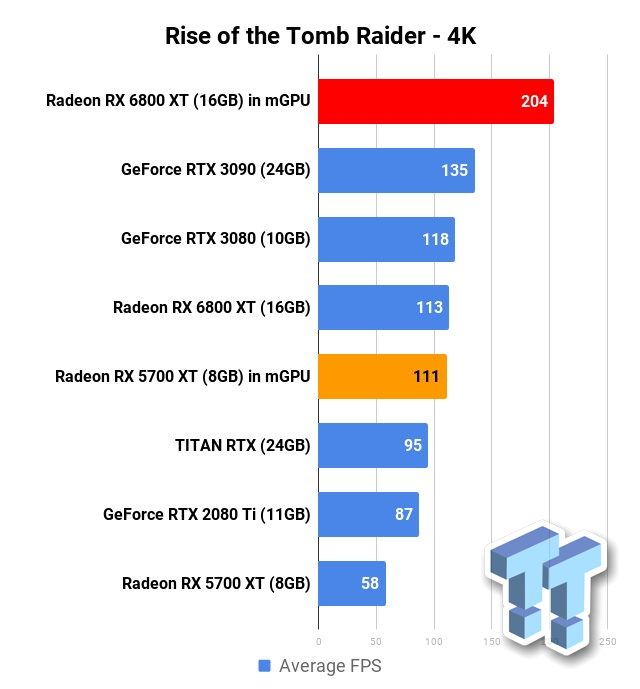

Great results in mGPU. It is a pity that GPU manufacturers/developers didn't pursue that avenue a bit more but I suppose the kind of person who would buy two high end GPUs to use together is only a tiny segment of the market so for developers who are already on crazy crunch and pushed to the limit with budgets and manpower it just didn't make enough financial sense.

Similarly, I know there are some technical hurdles with VRAM and micro-stuttering. From a GPU manufacturer's point of view, it may also remove the incentive for users to upgrade to your latest GPU if two of your older ones can give similar or better performance.

Still, it would have been interesting to see an alternate timeline where manufacturers/developers went all in on multi GPU setups for gaming.

I'm still salty about that DX12 promise to natively see multiple GPUs as one big GPU, even stacking the VRAM. But then again, seeing how far single-GPU performance has come in recent years, I can't really blame the devs or NV/AMD for dropping mGPU tech.

Just to be clear, I'm pretty sure Nvidia supports the DXR 1.1 spec/features.

That is far from obvious, and I'm talking about "support" as in "actually doing it", not formally accepting the call but executing it the 1.0 way.

It is certainly possible; it could be a way of saving performance on AMD cards, since their RT performance is lower than Ampere's. But that seems like a weird thing to do in the PC space: if they were going to do that kind of thing, they would probably also detect a weaker Nvidia card, such as a 2060, and drop effects there to save performance too.

Generally on PC they just give you all the effects as-is, and it is up to the user to lower effects or quality settings for better performance. So I'm almost 100% convinced this is related to the driver rather than the game purposely rendering fewer or lower-quality effects, but I suppose we will find out soon: once an updated driver sorts out the issue, I'm sure people will rebenchmark to see whether it is fixed.

EDIT: Oh I found the "known issues" in the press drivers which calls out the lack of reflections:

So like I mentioned earlier, it looks like a driver issue that AMD is aware of. It would be pretty unprecedented for RT effects to be deliberately disabled or lowered in quality on AMD cards; that type of thing doesn't really happen in the PC space. If your current hardware is not capable enough, developers kind of say "tough luck! We are doing it anyway! Performance may tank with your current card, but hopefully your future card runs it better!".

As I wrote pages before, WD:L renders exactly the same RT quality on my 3080 and 6800 as long as it does not bug out, which it does occasionally (but definitely not constantly).

AMD's very own patch notes state that the RT effects ARE not rendering as expected and MAY be missing altogether. They don't state "may be rendering incorrectly".

When are the 6900 XT reviews supposed to be up?

Go to page one, first post of the thread. I have a bunch of reviews embedded and a link to a roundup of reviews.

I see that, but those are the 6800 XT and 6800. I had assumed the 6900 XT reviews would be up, since the card releases tomorrow?