Yes, yes, let's give devs even more excuses not to optimize their games.

Fuck me... It's okay to prefer one graphics card manufacturer over the other, and Nvidia provides a ton of benefits over AMD for sure, but celebrating tech that basically amounts to a band-aid on an industry-wide problem is a bit "eh", to say the least lol.

That said, I guess this is the new reality of video games: the tech people behind them aren't getting any better at optimization because they're no longer working under the constraints that were typical in the past.

What's going on here is out of the developers' control. The DX12 API has very basic function calls; how the shaders get compiled and how the work is ordered by the GPU's scheduler is on the shoulders of the GPU vendors. Nvidia made an optimization in that area, and there's no way for devs to optimize at that level.

Just like AMD's compiler decided to produce a ridiculous 99 ms ubershader in Portal RTX when the game doesn't call for it.

@JirayD: Portal with RTX has the most atrocious profile I have ever seen. It's performance is not (!) limited by it's RT performance at all, in fact it is not doing much raytracing per time bin on...

threadreaderapp.com

Everyone thought Nvidia was sabotaging performance on AMD cards in Portal RTX; turns out AMD's compiler is just drunk.

So devs don't control every parameter.

But I'm not excusing them for most of the laziness I've already criticized them for in the past.

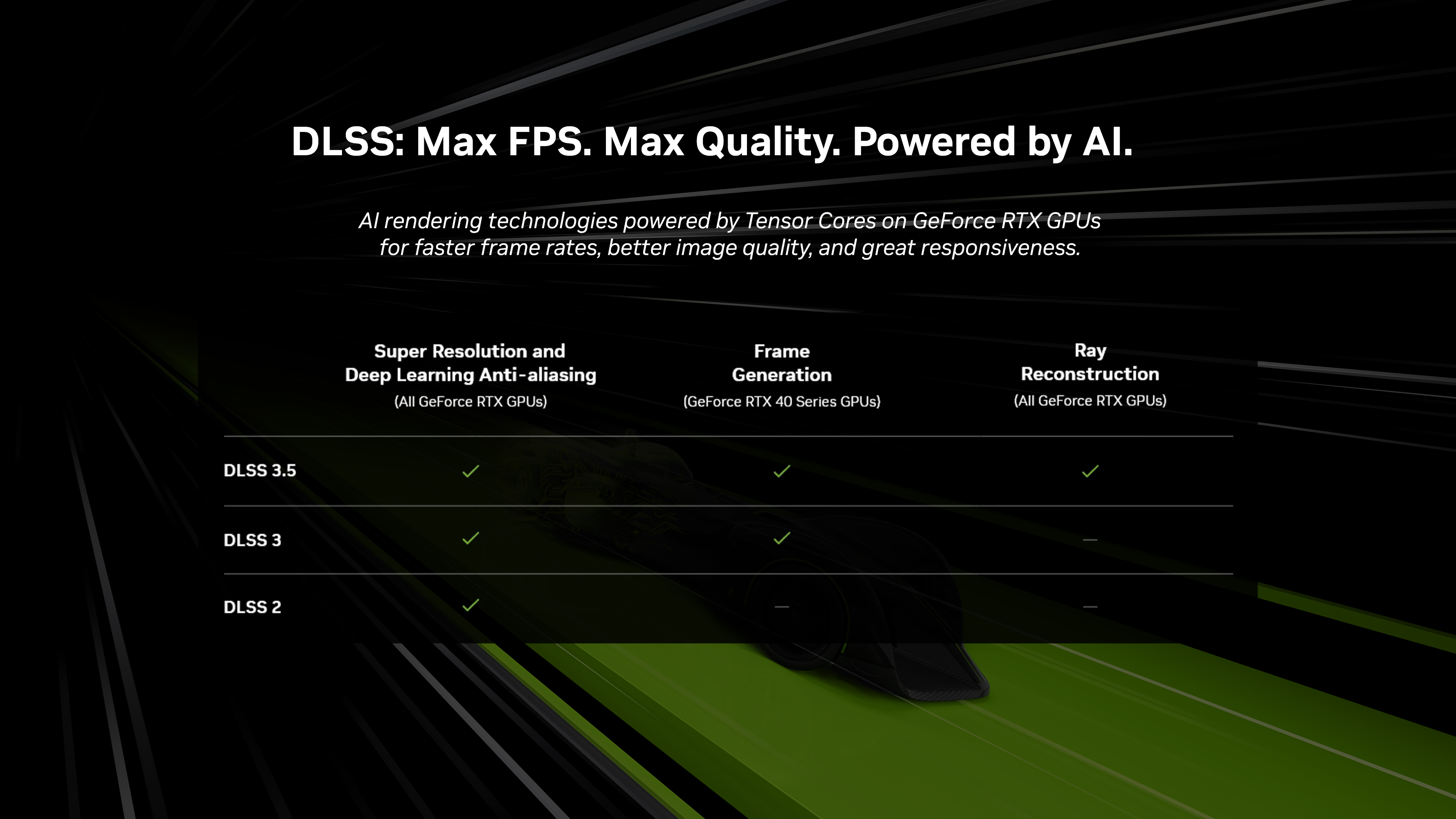

As for DLSS, yeah, as we saw with Remnant 2, targeting a game's performance around upscaling rather than native performance is a bad idea.