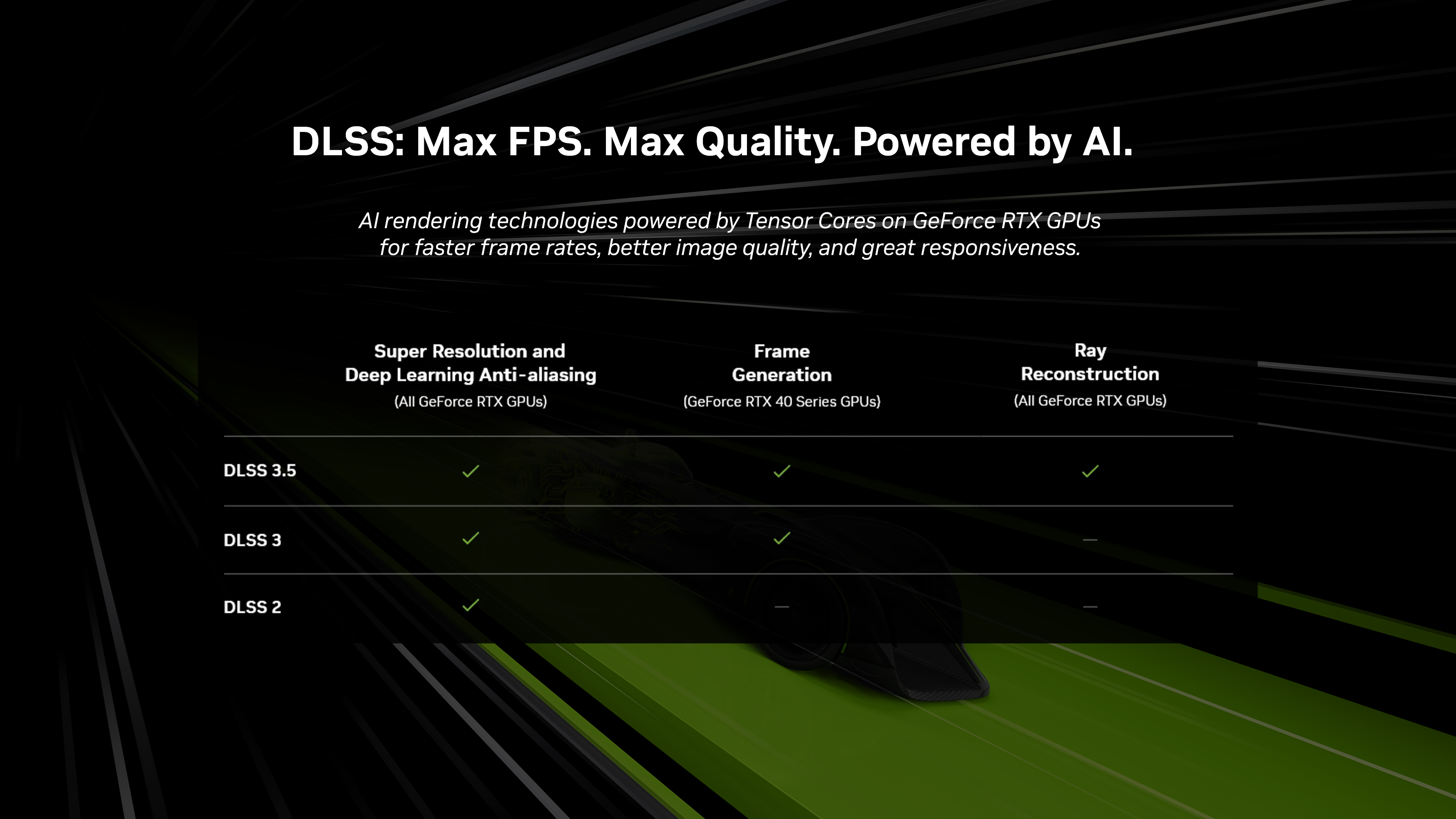

Well, DLSS 3.5 runs just fine on 2000- and 3000-series cards, so obviously Nvidia isn't blocking new features from working for no reason.

Can Nvidia get frame generation to work on previous-gen cards? Maybe, but it would likely lead to a drop in visual fidelity that makes enabling it rather pointless. Depends on how FSR3 stacks up, I guess.

it is delusional to think frame gen would work fine on 3000/2000 cards.

look at how wonky it is on the 8 GB 4060/4060 Ti. it will be UNUSABLE in the games where you will actually REQUIRE it, in other words, games that stress the card a lot. and what happens in games that stress a GPU like the 4060 Ti? they tend to gobble up VRAM like there's no tomorrow. and surprise surprise, everything from the 3060 Ti to the 3080 10 GB lacks VRAM.

even the 4070 gets hammered in hogwarts legacy at 1440p with ray tracing when frame gen is enabled: extra frame drops and stutters appear because the card runs out of VRAM.

frame gen will be unusable on 8-12 GB cards going forward, considering the steep VRAM consumption. devs won't really care whether you have enough VRAM to run it or not; it is just some shiny NVIDIA tool they can quickly implement. if their game chomps through upwards of 10.5 GB of VRAM at 1440p with modest ray tracing settings, kiss goodbye to frame generation being viable on a 12 GB card, since frame gen needs extra VRAM on top of that.

and simply forget about it on 8 GB cards.

there's a reason NVIDIA invested a lot in cyberpunk. it is a game that is super easy on 6 GB cards. heck, you can run RTGI at 1080p with DLSS Quality and still be fine in terms of VRAM usage. naturally, you will see quite nice bumps in that game with DLSS3, because there's enough VRAM for the game to operate within an 8 GB budget.

but it is all smoke and mirrors. it would be, for example, unusable in jedi survivor with ray tracing.

and without ray tracing, all RTX 3000 cards are super fast and do not really require DLSS3.

DLSS3 could be meaningful in CPU-bound cases, but surprise surprise, ray tracing itself creates immense CPU-bound situations. funny, right? not that funny, but it is what it is.

potentially it could work okay-ish on the 24 GB 3090 and maybe the 12 GB 3080/3080 Ti in NICHE situations, but that would open another can of worms.