Wishmaster92

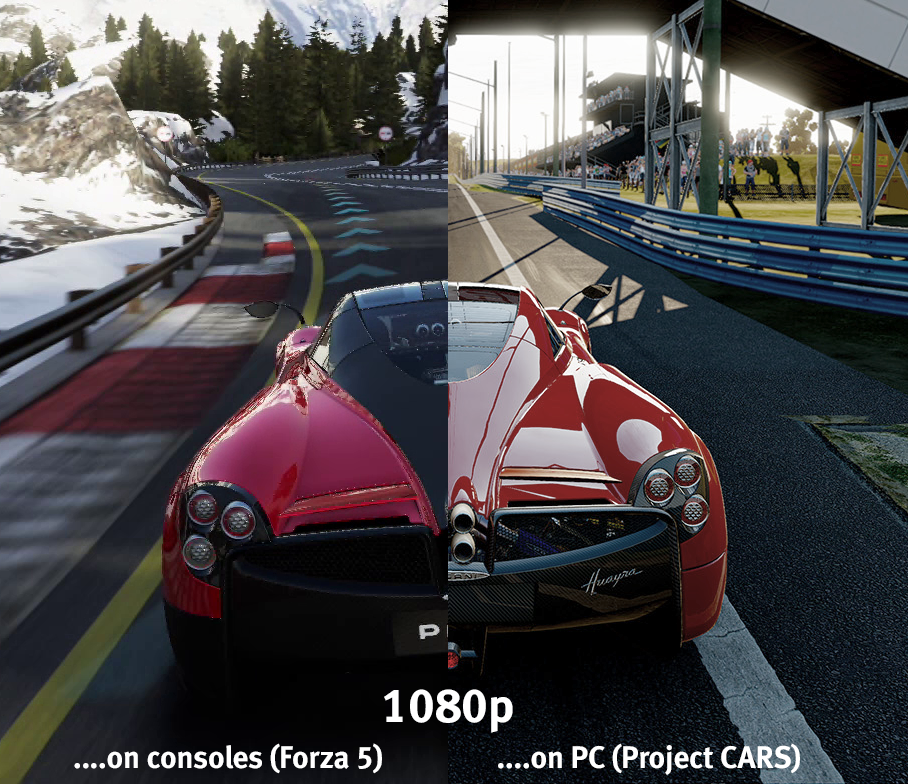

I guess this is what was happening here:

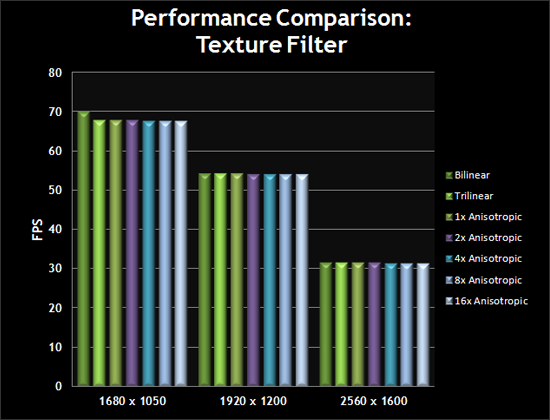

I remember a discussion about these pictures in a Killzone thread, where many pointed out that the only differences between the spotlight model and the in-game model are:

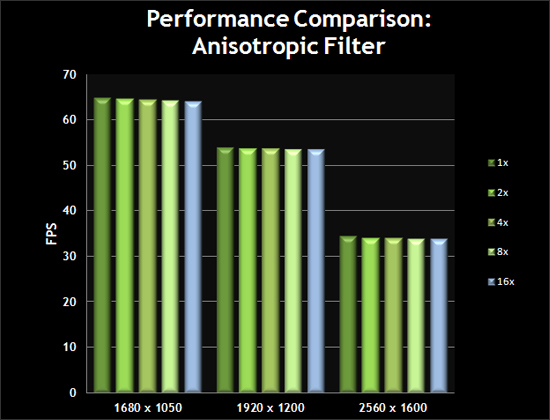

Better AA

Much better AF

The difference is noticeable.
