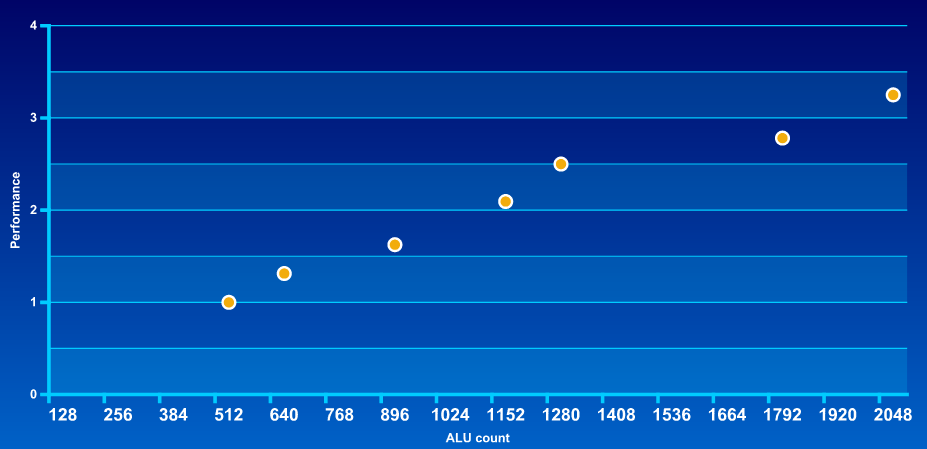

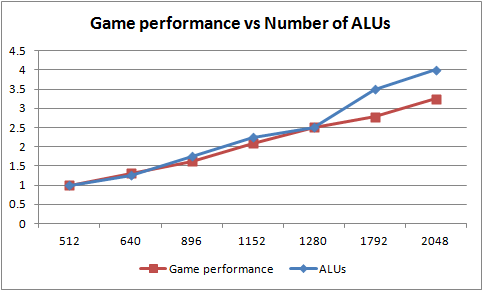

So, trying to get the discussion back on topic, I went around mapping out the GCN family GPUs and their resources, to see whether any of them were balanced (or unbalanced):

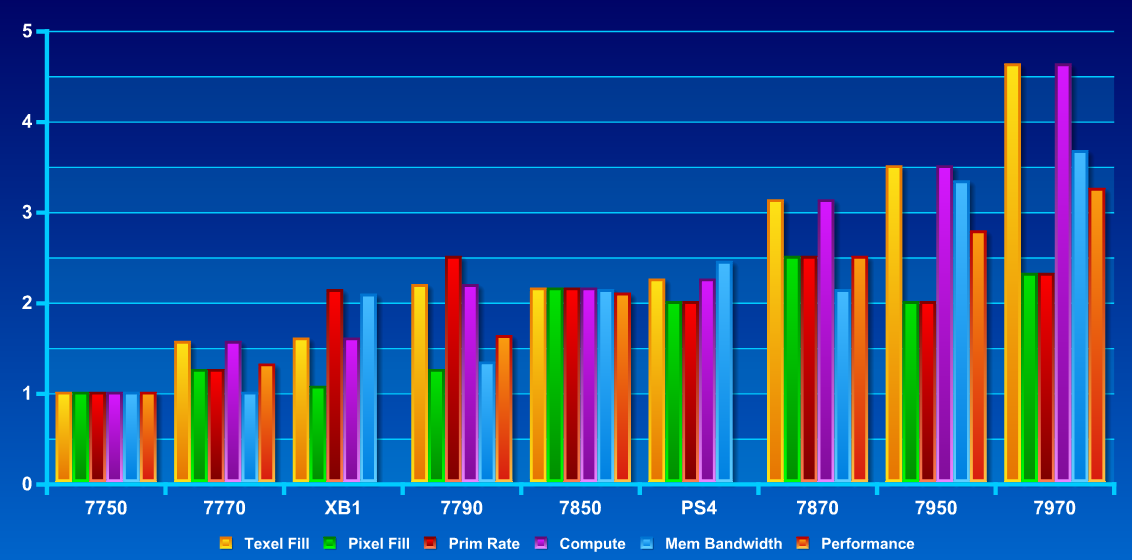

(Edited to add XO/PS4 figures, ESRAM-based and DDR3-only)

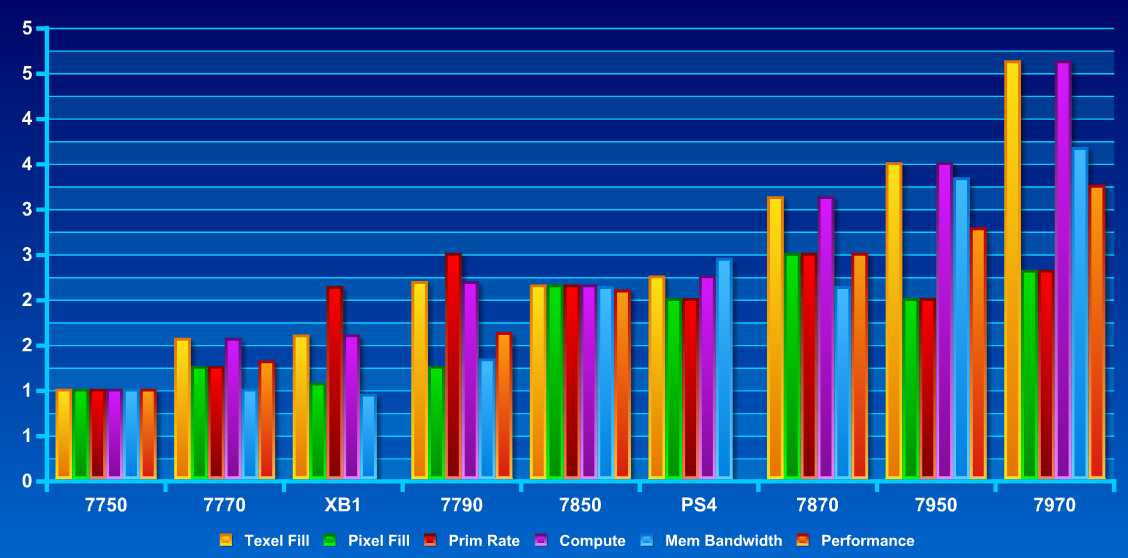

From the above you can see that prim rate doesn't really affect overall performance that much: see how the 7790 has more triangle output than the 7850, and the same goes for the 7870 over the 7950/7970.

Second, an excess of pixel fill (ROP) isn't of tangible benefit either: the 7870 has 25% more pixel fill than the 7950, yet that doesn't translate well in games because it's lacking in other areas, namely compute and texel fill.
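For anyone who wants to check the arithmetic behind those two points, here is a rough sketch of how the theoretical peaks are usually derived: units per clock multiplied by clock speed. The unit counts and clocks are the commonly listed reference launch specs, which I'm assuming here rather than reading off the charts, and the `Gpu` class and `ratio` helper are just names made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    clock_mhz: int
    shaders: int
    tmus: int
    rops: int
    prims_per_clock: int

    def peak_rates(self) -> dict:
        """Theoretical peaks: per-clock unit count times clock in GHz."""
        ghz = self.clock_mhz / 1000
        return {
            "prim_gtris": self.prims_per_clock * ghz,   # Gtriangles/s
            "pixel_gpix": self.rops * ghz,              # Gpixels/s
            "texel_gtex": self.tmus * ghz,              # Gtexels/s
            "compute_gflops": self.shaders * 2 * ghz,   # 2 FLOPs per FMA
        }


# Assumed reference specs (commonly listed launch figures, not from the charts).
GPUS = {
    "7790": Gpu("HD 7790", 1000, 896, 56, 16, 2),
    "7850": Gpu("HD 7850", 860, 1024, 64, 32, 2),
    "7870": Gpu("HD 7870", 1000, 1280, 80, 32, 2),
    "7950": Gpu("HD 7950", 800, 1792, 112, 32, 2),
    "7970": Gpu("HD 7970", 925, 2048, 128, 32, 2),
}


def ratio(a: str, b: str, metric: str) -> float:
    """How many times higher card a's peak is than card b's for one metric."""
    return GPUS[a].peak_rates()[metric] / GPUS[b].peak_rates()[metric]


for gpu in GPUS.values():
    print(gpu.name, gpu.peak_rates())
```

With these assumed specs, `ratio("7870", "7950", "pixel_gpix")` comes out at 1.25, while the same call for `"compute_gflops"` or `"texel_gtex"` comes out below 1, which is the imbalance described above.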

These are the two areas that Microsoft decided to strengthen by going with the upclock, and the two areas they gave away were texel fill and compute.
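To put rough numbers on that trade-off, here is a small sketch comparing the two options. It assumes the widely reported figures (a clock bump from 800 MHz to 853 MHz with 12 CUs active, versus staying at 800 MHz with 14 CUs), and it assumes that prim rate and pixel fill scale with clock only while texel fill and compute scale with clock times CU count. The `scaling` function is a name made up for this example.

```python
def scaling(base_mhz: float, new_mhz: float, base_cus: int, new_cus: int) -> dict:
    """Relative gain per resource versus the baseline configuration.

    Assumption: ROPs and geometry engines sit outside the CUs, so pixel
    fill and prim rate follow the clock alone; TMUs and ALUs live inside
    the CUs, so texel fill and compute follow clock * CU count.
    """
    clock_gain = new_mhz / base_mhz
    cu_gain = new_cus / base_cus
    return {
        "prim": clock_gain,
        "pixel": clock_gain,
        "texel": clock_gain * cu_gain,
        "compute": clock_gain * cu_gain,
    }


# Assumed figures: baseline is 12 CUs at 800 MHz.
upclock = scaling(800, 853, 12, 12)    # what shipped
extra_cus = scaling(800, 800, 12, 14)  # the alternative that was passed over

for name in ("prim", "pixel", "texel", "compute"):
    print(f"{name:8s} upclock x{upclock[name]:.3f}   14 CUs x{extra_cus[name]:.3f}")
```

Under those assumptions the upclock is worth about 6.6% on everything, whereas the two extra CUs would have been worth about 16.7% on texel fill and compute and nothing on prim rate or pixel fill.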

How, again, is this a more balanced design? Oh yeah, those numbers that they ran on current titles, ones which will never be in the public domain. ¬_¬

*Performance source