Oh, trust me, I know that already. Some people were never actually interested in hearing anything about the system, which is why so much of what is said in this article is either being ignored altogether or twisted to mean something entirely different from what was actually said. Fact is, people who wanted these details out of genuine curiosity about the system, and weren't just looking for something to troll and make fun of, have a lot of interesting and detailed information to reference in this article. Some of the more negative reactions this article has been getting prove that no information or clarification Microsoft could have had the engineers or architects who actually worked on this system provide would ever have satisfied those people.

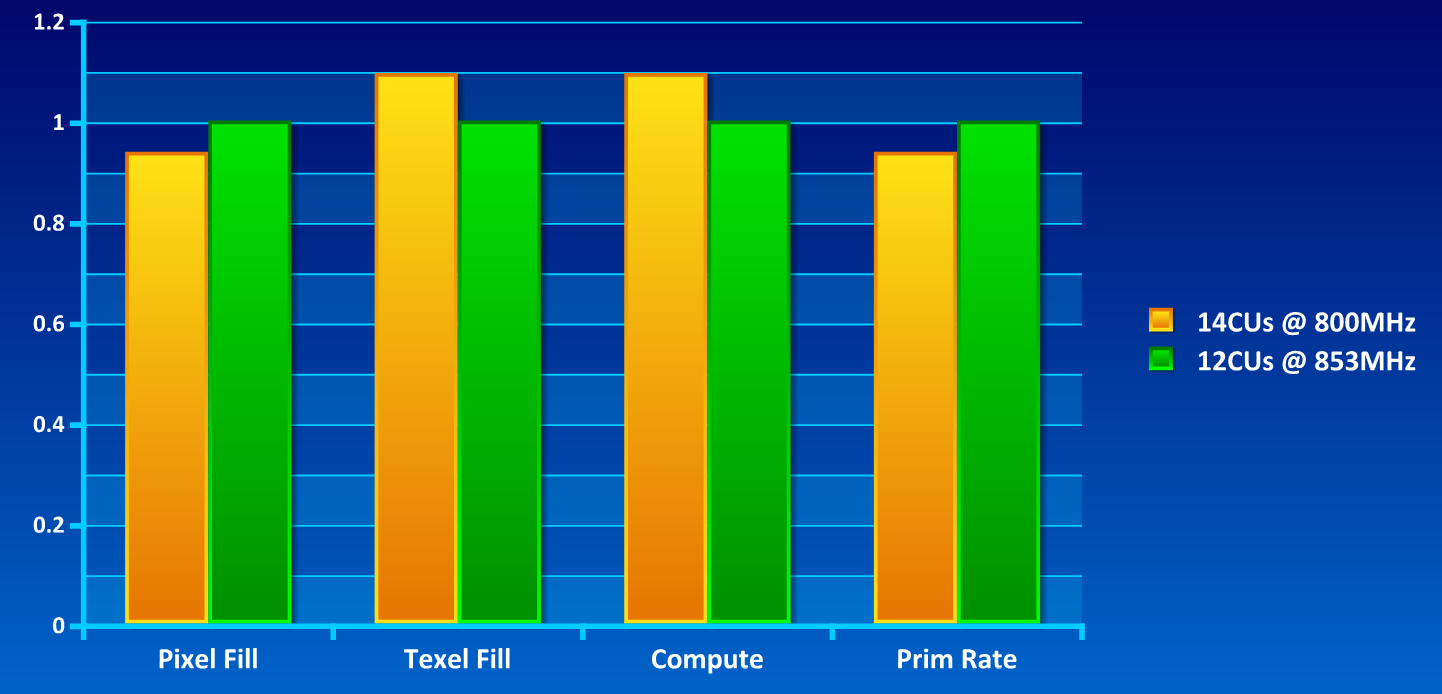

Never mind the fact that some of the more strongly held beliefs or claims constantly made by posters about the Xbox One architecture were completely shut down as false. None of that matters, apparently. What would one of those things be? For a good long while I've been saying that comparing the Xbox One GPU to the 7770 made very little sense, and that it made much more sense to compare it to Bonaire, or the 7790 -- something I was often mocked for saying -- and as it now turns out, the Xbox One GPU is based on the Sea Islands architecture and, as such, is a lot more similar to Bonaire than many thought.

And that's just a tiny fraction of the information shared in this article. Again, for people who were looking forward to this, there are lots of cool bits of info, and some things were finally cleared up. I look forward to seeing what some tech sites say about the information shared. I'd especially be interested in seeing what Anandtech and some other sites have to say about the revelation that the ESRAM layout is actually 32 individual modules.