Graphics needs to advance not in how it renders a single frame (photo modes in games that don't add any extra processing clearly look amazing already) but in how it holds together when the camera or the world is moving.

It isn't frames per second either. Nor is it resolution increases.

You can build a hugely persuasive 3d moving image at 30fps and 1080p, or even 720p.

What needs to improve is the little things that scream "fake" within 20 seconds of play time:

- visible level of detail jumps

- inaccurate or lower resolution shadows

- insufficient number of lights or fakery with multiple lights

- mirrors that don't work (GTA5 has wonderful indoor mirrors! but fails with outdoor ones)

- geometry and textures that use too many tricks to fool the eye in a static shot but fall apart upon inspection

- aliasing solutions that don't work everywhere (downsampling is probably necessary)

- visible switches in texture quality from near to far

- visible trickery in rendering lots of NPCs or lots of moving vehicles (you can create a traffic jam in GTA5, that is great! but if you turn 180 degrees to look elsewhere, then turn back, half the cars have vanished).

- caching algorithms for assets that reveal themselves instead of working behind the scenes

etc etc. I'd trade resolution and frame rate for applying floating point operations to improvements in these areas. I want GTA5 in motion to look as persuasive as it does in a snapmatic photo. It already looks better than anything else out there in a single frame grab because of the art direction and lighting and assets. Stop improving that and start improving how things look in motion.

Games should sell their graphics (if graphics is their main thing) from 20 seconds of uncompressed video not photos - even if they are actual frame grabs.

Sadly, all of the things you list are performance related. Either CPU, GPU, or bandwidth. The only reason they exist is because they're pushing too much, so they need to swap models, occlude objects, etc...

If you don't want that, you need better hardware, or better engines (ones that hide it better, or are more efficient), or games that drop down in scale.
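As an illustration of "hiding it better": one widely used trick for level-of-detail swaps is to cross-fade between the two models over a distance band instead of switching at a single threshold. This is only a minimal sketch; `lod_blend`, `switch_distance` and `fade_band` are made-up names, and it is not a claim about how GTA5 or any particular engine does it.

```python
def lod_blend(distance: float, switch_distance: float, fade_band: float) -> float:
    """Return the weight (0..1) of the LOW-detail model at a given distance.

    Hypothetical helper: instead of popping from high to low detail at
    exactly `switch_distance`, the weight ramps linearly across a band
    of width `fade_band` centred on that distance.
    """
    fade_start = switch_distance - fade_band / 2.0
    t = (distance - fade_start) / fade_band
    return min(1.0, max(0.0, t))  # clamp to [0, 1]


def blended_color(high_detail: float, low_detail: float, weight_low: float) -> float:
    """Mix the two models' shaded results for one pixel (grayscale)."""
    return (1.0 - weight_low) * high_detail + weight_low * low_detail
```

The catch is the one already raised above: during the fade both models are drawn, so the smoother transition costs extra GPU time.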

You push off framerate/motion, but actually, I think that is one area videogame renderers really need to focus on to give a more realistic look. You're right, still frames often look good, but the way game renderers are set up, they produce a sequence of still images. Sure, we have some motion blur algorithms, some better than others, but I feel that better motion blur is really a key to giving a convincing visual experience.

People harp on games that drop to 24fps, har har, they're more "filmic". It's true that 24fps is filmic, but in videogames it isn't, because it's equivalent to a 24fps movie shot at a 1/1000th sec shutter. The motion is very hard on your eyes. In film, people aim for something like a 1/180 or slower shutter, so that the exposure gives a natural blur within each frame that meets up with the next frame. 60fps, or even 48fps, is equated with the soap opera look, uncannily real.
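To put rough numbers on the shutter point, here is a small sketch using the usual rotary-shutter relation, exposure = (shutter angle / 360) / fps. The function names and the example speed are made up for illustration; a 1/1000 s shutter at 24fps works out to a shutter angle of about 8.64 degrees.

```python
def exposure_time(fps: float, shutter_angle_deg: float) -> float:
    """Seconds the shutter is open per frame (rotary-shutter model)."""
    return (shutter_angle_deg / 360.0) / fps


def blur_length_px(speed_px_per_s: float, fps: float, shutter_angle_deg: float) -> float:
    """How far an object moving at constant speed smears within one frame."""
    return speed_px_per_s * exposure_time(fps, shutter_angle_deg)


def gap_between_frames_px(speed_px_per_s: float, fps: float, shutter_angle_deg: float) -> float:
    """Distance the object travels while the shutter is closed.

    A large gap relative to the blur length is what reads as strobing.
    """
    per_frame = speed_px_per_s / fps
    return per_frame - blur_length_px(speed_px_per_s, fps, shutter_angle_deg)
```

For an object crossing the screen at an assumed 960 px/s, a 180 degree shutter at 24fps smears it over half of the distance it travels per frame, while a 1/1000 s exposure smears it over roughly one pixel and leaves almost the whole per-frame jump as a hard gap.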

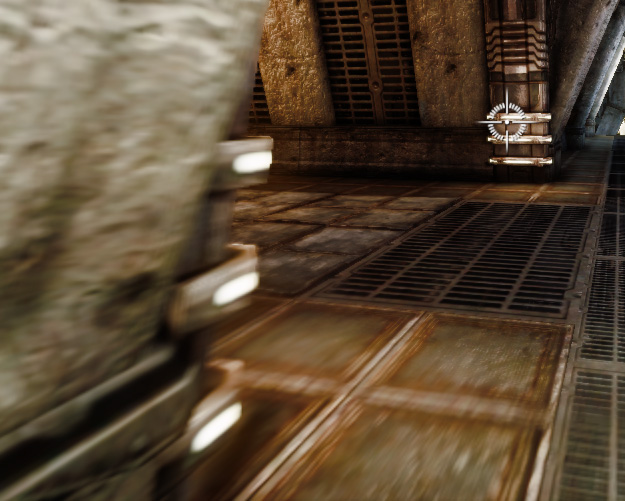

There are post-processing motion blurs like this one:

http://udn.epicgames.com/Three/MotionBlur.html

But as a post effect, it can have artifacts which can be quite jarring, as you can see where the blur meets a non-blurred area.
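A toy 1D version shows where that hard edge comes from. This is only a sketch of a generic "gather" style blur, where each pixel is smeared along its own velocity; it is not the UDK implementation, and `gather_blur_row` and the test scene are made up.

```python
def gather_blur_row(colors, velocities, taps=8):
    """Blur a 1D row of grayscale pixels, each along its OWN velocity.

    colors:     brightness per pixel
    velocities: screen-space motion per pixel, in pixels per frame
    taps:       number of samples taken along the motion vector
    """
    n = len(colors)
    out = []
    for x in range(n):
        v = velocities[x]
        total = 0.0
        for i in range(taps):
            # Spread the samples over [-v/2, +v/2] around the pixel.
            t = (i + 0.5) / taps - 0.5
            sx = min(n - 1, max(0, round(x + t * v)))
            total += colors[sx]
        out.append(total / taps)
    return out


def moving_object_scene(width=20, first=8, last=11, speed=6.0):
    """Black static background with one bright object moving at `speed`."""
    colors = [0.0] * width
    velocities = [0.0] * width
    for x in range(first, last + 1):
        colors[x] = 1.0
        velocities[x] = speed
    return colors, velocities
```

Background pixels have zero velocity, so they only ever sample themselves and stay perfectly sharp. The object gets softer inside its own silhouette, but the streak never spills onto the background the way a real exposure would, which is the blur-meets-non-blur seam.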

There is also vector/directional motion blurring, but that is based on simply blurring multiple renders together (sometimes lower-resolution renders), and the greater the blur, the greater the chance of the effect looking poor.
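A rough sketch of why multi-render blurring degrades as the blur gets longer, under toy assumptions (a 1D "screen", a 3 pixel wide object, made-up function names): averaging a handful of sharp renders gives a continuous streak only while the object moves less than its own width between samples.

```python
def render_row(width, center, half_width=1.0):
    """Render one sharp 1D 'frame': a bright object on a black background."""
    return [1.0 if abs(x - center) <= half_width else 0.0 for x in range(width)]


def accumulate_blur(width, start, end, samples):
    """Average `samples` sharp renders spread evenly across the exposure."""
    acc = [0.0] * width
    for i in range(samples):
        t = (i + 0.5) / samples              # sample time within the frame
        center = start + t * (end - start)   # object position at that time
        frame = render_row(width, center)
        for x in range(width):
            acc[x] += frame[x] / samples
    return acc


def dark_gaps(row, first, last):
    """Count fully dark pixels inside the span the object travelled."""
    return sum(1 for v in row[first:last + 1] if v == 0.0)


if __name__ == "__main__":
    # Same 24 pixel move, rendered with few and with many sub-frame samples.
    for samples in (4, 16):
        row = accumulate_blur(40, 8, 32, samples)
        print(samples, "samples:", dark_gaps(row, 10, 30), "dark pixels in the streak")
```

With 4 samples the result is four separate ghost copies with dark holes between them; with 16 the copies overlap into one streak. Doubling the speed would need roughly double the renders again.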

So is the only solution to have more horsepower to do more renders to bridge the gap between blurs? Or is there a better algorithm out there for realistic motion blur?

Here is an interesting read on vector space motion blur with ray tracing:

http://www.kunzhou.net/2010/mptracing.pdf