Silver Wattle

Gold Member

> 6900XTX is the halo card.

So far those rumours are lacking credibility; only the 6900XT rumours are credible.

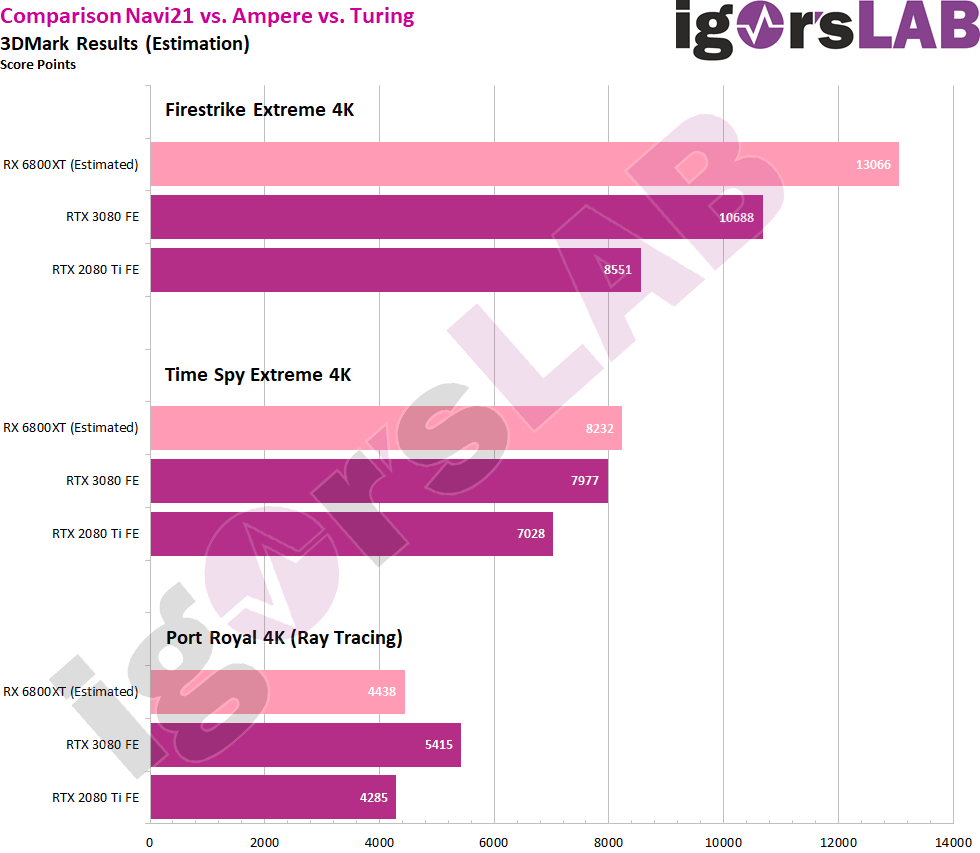

> Fire Strike Ultra is irrelevant. Time Spy Extreme and Port Royal matter more.

There's a big difference between something being irrelevant and something meaning a lil less than the others.

> There's a big difference between something being irrelevant and something meaning a lil less than the others

These benchmarks seem pretty showing off performance so far.

Fucking lol at the drag time argument

I don't know about any GPUs, just here for the cars.

I drive a Stinger!!

Am I a bad person for really wanting AMD to do really well this cycle just so Nvidia drop their prices?

Yes.

But speaking seriously for a moment: if CUDA is important to you then fair enough, but this attitude only hurts competition in the market. AMD need to actually make a profit to invest in R&D and stay competitive, and they also need to expand market share to put pressure on Nvidia.

If a company releases a good product then they should be rewarded with success, otherwise why would they continue to bother? Also you are sending a bad message to Nvidia that you will buy their products no matter what, which means stagnation and high prices.

Manage stock.

Price it at or around RTX 3070.

> Performance between 3070 and 3080 with a price between 3070 and 3080, is that supposed to be impressive?

I'd say that's more impressive than a 3070 and not quite as impressive as a 3080. Not much more to read into that really.

"Rice burners" are good cars, reliable and with good economy vs performance, unlike most American cars, which have a lot of faults, are expensive to maintain, are laughably big, and have big motors that are weak and drink way too much. (When you need a huge V8 to get the same amount of HP as a tuned 2.0 engine, they are weak.)

At least that is how it is seen outside of the US.

Maybe AMD cards do the same now: fewer TFLOPS but similar performance.

> Cy.... uhm... Cyber... punk...?
>
> also many upcoming games in general? Watch Dogs for example.

Oh I'm sure there are games, but I haven't seen shit that says "rt is the best way to play the game"

> why would you buy that?

If one is in stock and the other is a paper launch, the choice is obvious.

> If they can price it at 3070 levels then they've got me interested.
>
> Otherwise 100+ dollars for a bit more performance, but losing out on DLSS and dedicated RT hardware doesn't sound like a good deal.
>
> Because once I reach 600 dollars I could stretch my budget to 700 and get a 3080.
>
> They've got to do what Ryzen 3000 did in terms of pricing, basically have much better bang for buck.
>
> Because beating the RTX 3080 in bang for buck is going to be hard with a card that's marginally faster than a 3070 but 100+ dollars more expensive.

It's still a good deal considering the 3080 is just a paper launch for the foreseeable future and considering it will have more VRAM. Of course that might change if the 3070 is available at 500, but even then the extra VRAM and potential extra performance will still make the 6800 a good deal.

I'd still wait on 30XX cards if this thing can't compete honestly. If it's better in rasterization than even a 3080, but drops down to a potential RTX 3060 because no answer to DLSS 2.1 and worse raytracing performance, it wouldn't be worth it IMO.

> Now we need to see what they're bringing for raytracing and a DLSS competitor.

You guys need to start using more of your own neurons when watching DF videos.

What did Death Stranding comparison reveal, when done not by paid shills?

honestly, the RTX cards will most likely still be a way better value.

most likely better RT performance and DLSS2.0 are just 2 factors in favour of Nvidia right now.

have fun trying to play any modern game at reasonable framerates and raytracing on RDNA2 cards.

DLSS quality

> DLSS quality

Oh god.

> Baseless trashing of DF really does get old.

Yeah. That legit "exclusive preview" thing was totally not "problematic".

> any kind of answe

It does not matter what you call things.

> Yeah. That legit "exclusive preview" thing was totally not "problematic"

I think it's reasonable to criticise preview content such as that, which comes without the proper context. But I don't see any issues with DF's 3080 review itself (which correctly highlighted that some of the 3080's advantage in Doom Eternal was due to VRAM at max settings).

Raindrops being wiped out by NV upscaling was noted 15 minutes into the thread, by some random poster here.

When someone tells you "but from a distance, you won't see any difference" your bullshitmeter should start ringing.

The only answer AMD needs is to overbook more paid shills.

Anyway, the tech is quite young; it wasn't even good until it reached its 2.0 iteration.

> ...correctly highlighted that some of the 3080's advantage in Doom Eternal was due to VRAM at max settings)...

So very kind of them, totally not fishy. Did they mention how much of "some" it was?

> So very kind of them totally not fishy. Did they mention how much of "some" it was?

Yes!

Technically those V8 engines are quite small (OHV); a 7.0L V8 Corvette Z06 engine is much smaller than a Nissan 350Z V6 engine, but they are extremely fragile and unreliable and have plenty of valve issues. Ford, though, has gone with the modern world and moved to DOHC lately (pretty big engines, but have 2 cam reads instead of static, outdated hot rod, and much more reliable by default).

> Uh, give me a break on "iterations".
>
> DLSS 2.0 has about as much in common with 1.0 as it does with, perhaps, FidelityFX.
>
> 1.0 was actual AI upscaling with NN training at higher resolution.
>
> 2.0 is TAA with some static quirks.
>
> And if what I just stated is shocking for you, perhaps you need to learn a bit more about the tech in question; AnandTech has excellent articles, among others.
>
> So let me ask again: of a handful of Death Stranding upscaling reviews, DLSS 2.0 vs FidelityFX, are there none that prefer the latter?

Let's keep this civil. Why not inform us of what we're not seeing? Do you get the same or better performance with FidelityFX than DLSS 2.0 on equivalent GPUs? Will the upcoming GPUs have better RT than Turing, or even Ampere? We don't know, but is it not safe to go by the past 7 or so years? It's fine that you prefer AMD, and I'd even go so far as to say that you absolutely hate Nvidia (for God knows what reason, maybe you're an AMD employee sent out to damage control forums?).

> I want AMD to do well for the price drop all round.
>
> When AMD gets supported and/or finds a way to allow AMD acceleration, or whatever they'll call it, in Octane, Redshift and Substance, I would fully switch over to AMD.
>
> But for productivity, almost everything I use has some sort of reliance on Nvidia GPUs.
>
> Indigo is OpenCL so AMD works, but a lot of other apps rely on CUDA.

I have exactly the same issue as you. I love AMD and wish I could buy their cards, but I'm stuck because of offline rendering with GPU. Also need them CUDA cores.

> Yes!

That's cool, although I think I was talking about the DF video, not some site.

> Do you get the same or better performance with FidelityFX than DLSS 2.0 on equivalent gpu's?

In general, you get better performance by lowering resolution; what kind of question is this?

> Will the upcoming gpu's have better RT than Turing, or even Ampere?

I don't know and, frankly, don't care.

> I just can't understand preferring them with their current lineup compared to Nvidia's, unless you don't care about performance or raytracing.

I'm more into voting with my wallet than an average user, but on top of that, hardware RT is not something that is widely used for anything but basic gimmicks, and that's not going to change.

> drivers

The 5700 series had hardware QA issues (which is also shitty on AMD's part) that are mistaken for software issues.

> That's cool, although I think I was talking about DF video, not some site.

That's the written version of the DF video review. However, since you asked: at 13:46 they give the performance with texture quality reduced on the 2080.

How can anyone believe all this is so reliant on AI and yet the per-game model is worse than the general one? Use your brain ffs this is never the case in any ML instance.

Am I a bad person for really wanting AMD to do really well this cycle just so Nvidia drop their prices because I need dem CUDA cores?

> That's the written version of the DF video review.

That's not the video in question. This one is:

The only advantage is that Nvidia pays full-time engineers and even pays the devs themselves for integration. But if they wanted to, the devs themselves could make a non-shit TAA; most just don't bother.

That's it. That's all "advantage" DLSS 2.0 has. Tensor cores need not apply.

I'm more into voting with my wallet than an average user [...]

People just don't understand DLSS at all

Possible raytracing benchmarks:

3DMark in Ultra-HD - Benchmarks of the RX 6800XT with and without Raytracing appeared | igor´sLAB

As always, you have to be careful with such benchmarks, even if the material I received yesterday seems quite plausible. Two sources, very different approaches or settings and yet in the end a certain…

www.igorslab.de

Looks like there is a chance the 6800 XT will be competitive with the 3070 in raytracing.

> This is big, if true. I mean the non RTX performance, really impressive.

If they can offer much better rasterisation performance than the 3070, double the memory, and equivalent RTX performance, for a price in between the 3080 and 3070, then I think it will be super competitive.
