MasterCornholio

Member

Xbox gamertag: ICPEE1

Well I hope you didn't take a whiz in public when we had that winter storm.

Bruh, I'm talking to you about actual facts, let's use examples:

Did you test the power draw on these games? You say Xbox has "no next gen games", however Gears 5 runs at 60-120fps at PC Ultra settings with VRS and Global Illumination at up to 4K. It's very demanding on PC. It draws a lot of power, so it's a good benchmark of how the machines react under load.

Mate. Loading a level consists of many things, not just textures. Some of them are CPU-limited, like logic, AI, physics and so on. Series X and PS5 can load a level in about the same time, with PS5 being a bit faster, but the real bottleneck these SSDs were supposed to fix was textures and cold data like meshes and sound. Now if Series X can't even handle textures, this means the SSD isn't solving the problem.

When Mark Cerny said no loading screens, he didn't just mean instant level loading or 2-second loading screens. He meant streaming data so fast that if a player turns around, the old data that was resident in RAM is deleted and new data is loaded seamlessly without pop-in. This means you can have scenes with more data than you could hold in RAM, meaning more detailed worlds, an advantage you've seen in Unreal 5: scenes with 100+ GB full of billions of polygons rendered on just a 16 GB GPU. And Ratchet streaming worlds in a second. This is what's revolutionary on PS5 and why they call it the most revolutionary console since 3D.

The Xbox meanwhile can't even handle pop-in on cross-gen games.

This means it'll be left behind when PS5 and PC games have more detail. You'll basically have to cut down detail on Xbox, which defeats the point of calling it next-gen; it's more like an Xbox One X upgrade.

it happened a few times for me... I don't think it's a widespread issue and I couldn't find any posts about it

Never saw that in the many hours I put into Gears 5. Weird.

Nope, just your average gamer here lol. It happened a few times, had to restart it a few times to resolve it. It was really weird.

Are you on an insider build by some chance? Never seen it like that, however I had some issues with other games, especially because skip-alpha is a piece of shit. But I do like living on dat edge.

You should let us know in a separate thread, since you already have the `thing`

My kids play Dead by Daylight and it gets so hot on the vent but you never hear it. Their old PS4 and Xbox One fat were stupidly loud.

Fuck me. Series S is silent as in fucking dead quiet playing Division 2.

This game would be howling on my now deceased PS4 Pro with fans near max.

No fan noise on Series S even after gaming for more than 2 hours now.

Have to hand it to Xbox here. This thing is amazing.

Nothing about that was clear. It's as broad a PR statement as it gets. From that they can do pretty much anything and those statements will still be true.

I didn't think we'd get the clarity like that today.

Very clear.

Good to see MS support their platform. It never made sense to buy all those IPs and studios and give them to PlayStation. Sony would not buy studios to give Xbox games, why should MS? Hopefully this effort will put to bed questions about MS' commitment in the video game space. I don't expect any Sega-like 3rd party moves from MS at this point. I'm interested to see what they do next.

I didn't think we'd get the clarity like that today.

Very clear.

Current contractual obligations and current games with communities isn't clear?

Nothing about that was clear. It's as broad a PR statement as it gets. From that they can do pretty much anything and those statements will still be true.

I have this vision of a folded up body crammed into a PS5 box now.

You'd see me on the 10 o'clock news, mate. It wouldn't be pretty.

Those thousand users were always around, they were just hanging in that buried thread in the communities section

Like 15 threads on Bethesda exclusivity today... it’s scary out there boys...

Yes, good thing I have game pass

Soooo the deal is about XBOX GAMEPASS and nothing else

Do you know what unlimited means?

Yes, good thing I have game pass

for only 12 euros a month, that’s about 140 euros a year, I have an unlimited supply of games.. sounds good, right?

Sure.. but I like to make it big for a better story..

Do you know what unlimited means?

Lol years? If you play every single game sure.

Sure.. but I like to make it big for a better story..

It’s still enough for me to game for years

Isn't the PS5 Tempest Engine similar to the Cell Processor?

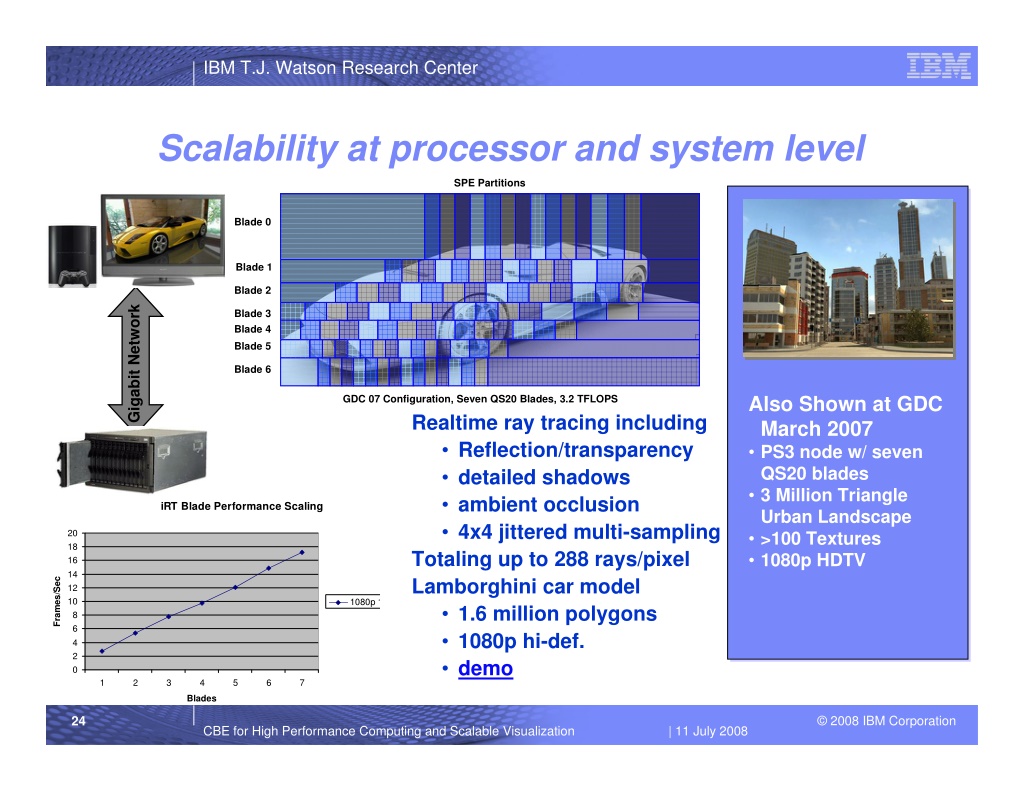

"Even though the PS3’s RSX is inaccessible under Linux the smart little system will reach out across the network and leverage multiple IBM QS20 blades to render the complex model, in real-time, with software based ray-tracing. Using IBM’s scalable iRT rendering technology, the PS3 is able to decompose each frame into manageable work regions and dynamically distribute them to blades or other PS3s for rendering. These regions are then further decomposed into sub-regions by the blade’s Cell processors and dynamically dispatched to the heavy lifting SPEs for rendering and image compression. Finished encoded regions are then sent back to the PS3 for Cell accelerated decompression, compositing, and display." -- Barry Minor

Oh for sure. The CELL set the entire gaming industry up for the future it's currently pursuing.

Even the need for all-encompassing middleware engines like Unreal exploded due in part to the CELL's unconventional architecture.

Yeah, this thing is essentially the logical conclusion for all GPUs.

You can see the trends with UE5 moving to software rasterizers for micro-polygons, (mostly) software RT, the move to a more and more fully programmable GPU rendering pipeline (with Mesh/Primitive Shaders and Task/Surface shaders) etc etc... Most of the traditional fixed function units of the GPU pipeline will be replaced by software running on general-purpose cores. So you'll end up with a chip that looks remarkably like the above massively parallel CPU data streaming monster, but with select fixed function graphics hardware for stuff like RT, ML, ROPs, TMUs, command processor etc etc...

"It would be great to be able to write code for one massively multi-core device that does both general and graphics computation in the system. One programming language, one set of tools, one development environment - just one paradigm for the whole thing: Large scale multi-core computing." -- Tim Sweeney

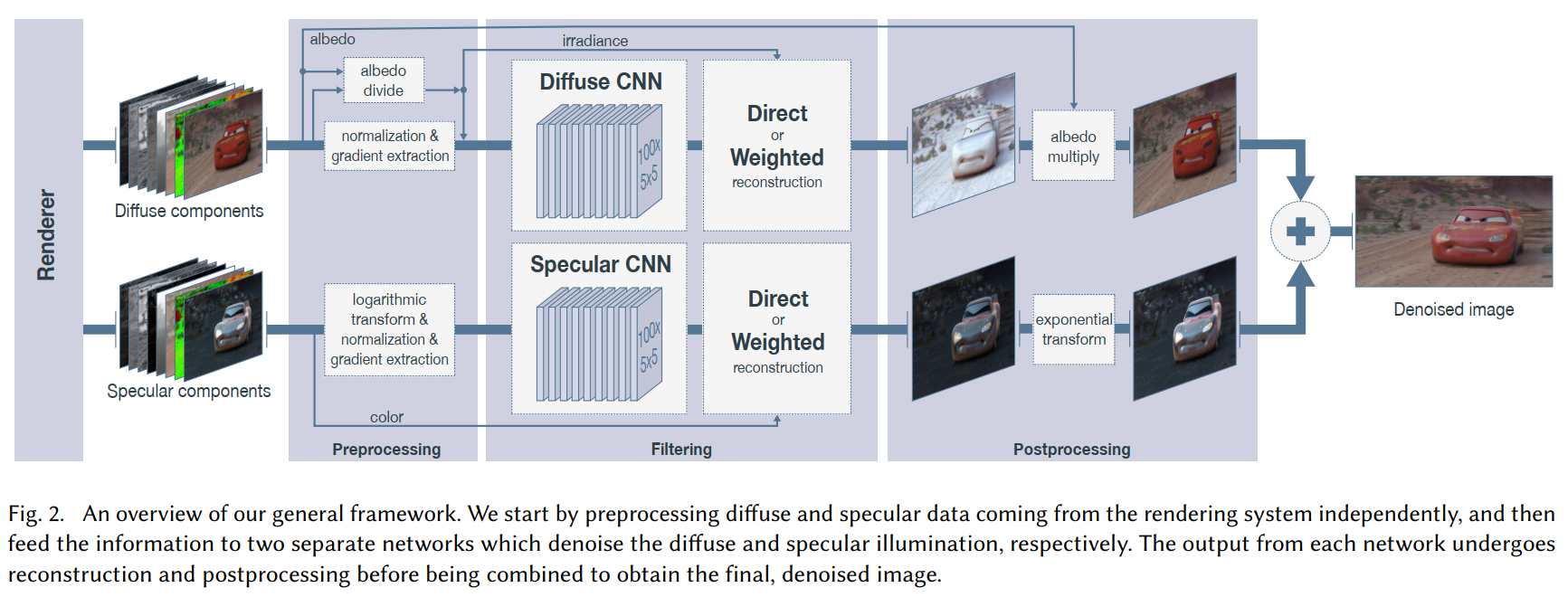

"Several rendering teams have developed (or are in the process of developing) GPU-based path tracers. GPUs have immense computational power, but have lagged behind CPUs in the available memory and also require a different algorithm execution style than CPUs." -- Pixar

"With the huge computational complexity of movies, it will be interesting to see which architecture wins. One possible outcome is that these architectures will merge over the next decade, in which case this becomes a moot point." -- Pixar

"Cell will evoke the appearance of graphics LSIs or southbridge chips, something almost like a PC, and that is the kind of business we want to start." -- Ken Kutaragi

Oh my! I feel like such a loser! For 50 bucks/year I have access to just 800 games on PS Now. And I can play them on my PS5 or stream them on PC.

Yes, good thing I have game pass

for only 12 euros a month, that’s about 140 euros a year, I have an unlimited supply of games.. sounds good, right?

Normally I would buy at least 4 games a year at the full price of 60 euros.

Every euro counts in corona time.

Just making sure we didn't forget.

You know, like a "hello, im still here."

Yeah well clearly you didn't use enough alcohol.

Hello my favorite people on my favorite forums in my favorite thread.

Heading out this weekend to the Bahamas to start a week long fishing charter with some good friends and lots of alcohol.

Hope to come back with lots of fish and stories to tell, though I have already been told I can't share a few things until early to mid April

I shall return in a few weeks.

Cringe cranked up to 11

Is this a collage of how Western civilization dies?

I guess Sony could give away a bunch of last gen games nobody wants to pay for outright.

I mean Sony has to counter MS with a one-two punch and I'm afraid that Returnal and Ratchet at 80 euros each is not enough.

The value of Game Pass is unbelievable. Even I'm starting to think about getting an XSX at some point. Plus MS is really doing something with backwards compatibility and they have the Elite controller, which I'm envious of.