As funny as his videos are, this might be the funniest.

"One titty" ? Lmao!!!

Thanks for this, I am crying...lol.

Yes, we know everything about clocks and the advantages they give. I'm from a generation where we were overclocking everything. You should go to AMD and Nvidia and explain all of this, because their past, present and future projects are all based on even bigger parallelism (same goes for CPUs). Probably after the 36 CU PS5 and this post they will change plans.

This is really not true - the equation is very complex.

The performance gain from frequency increase is linear for the individual component. Going wider has increasing diminishing returns (each additional CU adds less than the last CU added).

However, at some point a frequency increase causes power and thermals to rise exponentially and manufacturing yields to go down. In addition, unless the other components of the system (CPU, memory, etc.) match the frequency increase of the individual component, you get synchronisation problems (which hurt performance even more).

Wider is not always a good thing. You can see this with SLI data. With games that supported it adding +100% CUs gave roughly 30% increase in performance. Clear diminishing returns.

In a controlled environment such as a console - frequency is probably your most powerful tool as long as you can keep power and thermals in check.
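To put rough numbers on the scaling argument above, here is a minimal Python sketch. The helper name `scaling_efficiency` is hypothetical, the SLI figure is the rough one quoted in the post, and the clock case simply assumes the idealized linear scaling the post describes; none of this is measured data.

```python
def scaling_efficiency(resource_gain: float, perf_gain: float) -> float:
    """Fraction of the added resources that shows up as extra performance.

    Both arguments are relative gains, e.g. 1.00 for +100%, 0.30 for +30%.
    """
    if resource_gain <= 0:
        raise ValueError("resource_gain must be positive")
    return perf_gain / resource_gain


# Rough figure from the post: +100% CUs via SLI gave about +30% performance.
sli = scaling_efficiency(1.00, 0.30)

# Idealized assumption from the post: a clock bump on a single component
# scales linearly, so +10% clock gives +10% performance.
clock = scaling_efficiency(0.10, 0.10)

print(f"SLI: {sli:.0%} of the added hardware turned into performance")
print(f"Clock (idealized): {clock:.0%}")
```

An efficiency well below 100% is what "diminishing returns" means here. The sketch says nothing about the power, thermal and yield costs of higher clocks, which is the other half of the trade-off.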

Does anyone know what these are?

Honestly, I don't really know. At first I thought they could be some very big placeholder structures, but they are clearly too big and far fewer in number than usual. Big thermal vias? (Big connections from the substrate to the package using all metal layers.)

Why do you even write this? If you look at GPUs over the last 10 years there has been a clear increase in both frequency and parallelism - they have gone hand in hand. Frequency, however, only goes so far before yields become abysmal. If you look at cards with similar frequencies but different numbers of cores, you see a clear drop in benefit as well. Compare the 3080 and 3090 as an example: +20% cores/transistors vs. +10% FPS - clear diminishing returns, and at a massive cost.

Yes, we know everything about clocks and the advantages they give. I'm from a generation where we were overclocking everything. You should go to AMD and Nvidia and explain all of this, because their past, present and future projects are all based on even bigger parallelism (same goes for CPUs). Probably after the 36 CU PS5 and this post they will change plans.

Clock has its own advantages... being "wide" just has a lot more advantages. There's nothing to be upset about.

almost looks like small processors, maybe for shaders or other commands

The main discussion you felt compelled to answer started with a user giggling at the advantage of the XSX teraflops in comparison to the advantages brought by the clock.

Why do you even write this? If you look at GPUs over the last 10 years there has been a clear increase in both frequency and parallelism - they have gone hand in hand. Frequency, however, only goes so far before yields become abysmal. If you look at cards with similar frequencies but different numbers of cores, you see a clear drop in benefit as well. Compare the 3080 and 3090 as an example: +20% cores/transistors vs. +10% FPS - clear diminishing returns, and at a massive cost.
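For reference, the teraflops figures being argued over are just width multiplied by clock. The sketch below uses the usual peak FP32 formula for these GPUs (64 shader lanes per CU, 2 FLOPs per lane per clock for a fused multiply-add). The function name is hypothetical, and the CU counts and clocks are the publicly quoted specs rather than numbers taken from this thread, apart from the 36 CUs and the roughly 12 TF mentioned above.

```python
def fp32_tflops(compute_units: int, clock_ghz: float,
                lanes_per_cu: int = 64, flops_per_lane: int = 2) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes every lane issues one fused multiply-add (2 FLOPs) per clock,
    which real workloads do not sustain.
    """
    gflops = compute_units * lanes_per_cu * flops_per_lane * clock_ghz
    return gflops / 1000.0


# Publicly quoted specs (assumptions for this sketch):
ps5 = fp32_tflops(compute_units=36, clock_ghz=2.23)    # narrower, faster
xsx = fp32_tflops(compute_units=52, clock_ghz=1.825)   # wider, slower

print(f"PS5 peak: ~{ps5:.2f} TF")
print(f"XSX peak: ~{xsx:.2f} TF")
```

The formula treats a CU and a MHz as interchangeable, which is exactly the assumption the posts above are disputing: it is a peak number and does not capture how well either design keeps its units busy.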

If PS5: Titties

Does anyone know what these are?

That one is really really better.

Cerny's secret sauce.

Does anyone know what these are?

AMD changed how the ACEs work, so now you have only one that does more than the 8 in the past.

So where are the ACE/HWS blocks located, within the central GCP+GE cluster? Maybe they contribute to the size difference, especially if PS5 has more ACEs.

Yeah did a little more thinking and looking at a Navi 21 GPU reference again...99% sure PS5 doesn't have Infinity Cache.

Does anyone know what these are?

Wow, that picture sure will help tech-savvy guys understand better.

Thought about sensors for frequencies, too.

Honestly, I don't really know. At first I thought they could be some very big placeholder structures, but they are clearly too big and far fewer in number than usual. Big thermal vias? (Big connections from the substrate to the package using all metal layers.)

That's a good catch. So one per Shader Array, but the PS5 also has one in the GPU frontend.

We also have it in the XsX and XsS dies; it seems to be used in the same cells. We have one on XsS, two on XsX, and... three on PS5. More redundancy?

Please note: this is just speculation.

Agreed. There needs to be some form of glue / Infinity Fabric. After taking that into account, the area available for substantial amounts of LLC for the GPU would be almost null.

The space between the GDDR6 PHYs seems thicker than usual, but it could be just Infinity Fabric that AMD seems to put "wherever there's some space left".

As for the space between the CPU and GPU, I wonder if there's enough space for AMD/Sony to have implemented some sort of ring bus between the CCXs and that would justify the performance advantage at high framerates.

If they did and it has a similar behavior to what Intel has on their Low-Core-Count server CPUs (and most recently Comet Lake 8-core client), then it wouldn't be as good as Zen3's truly unified cache but it would be substantially better than Zen2.

Here are the access times on Zen2 with separate L3 between CCXs:

On Zen3 with unified L3:

On the 8-core Comet Lake with separate L3 on each core, connected by a ring bus:

This isn't a change that is very important if the goal is to make games that run great at 30 to 60FPS, but it makes a substantial difference when/if the CPU becomes a bottleneck at >100 FPS.

Microsoft wouldn't be very interested in this optimization but Sony could be, due to PSVR2.

It would also give some credence to the leaksters claiming "unified L3" on the PS5 CPU, since developers could assume the L3 is unified after looking at Comet Lake-like access times.
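A quick sketch of why cross-CCX latency would matter more at high framerates: the per-frame time budget shrinks as the framerate goes up, so any fixed CPU-side cost takes a larger share of it. The 1 ms overhead below is a purely hypothetical placeholder, not a measured figure for any of these CPUs, and the function names are made up for illustration.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def budget_share(cost_ms: float, fps: float) -> float:
    """Fraction of the frame budget consumed by a fixed per-frame cost."""
    return cost_ms / frame_budget_ms(fps)


# Hypothetical fixed per-frame cost attributed to slow cross-CCX cache access.
HYPOTHETICAL_OVERHEAD_MS = 1.0

for fps in (30, 60, 120):
    budget = frame_budget_ms(fps)
    share = budget_share(HYPOTHETICAL_OVERHEAD_MS, fps)
    print(f"{fps:>3} FPS: budget {budget:5.2f} ms, overhead share {share:.0%}")
```

The same fixed cost takes four times the share of the frame at 120 FPS as it does at 30 FPS, which fits the argument that this kind of optimization would mostly pay off for high-framerate targets such as VR.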

So 4 ACEs + 1 HWS as in RDNA1? I was thinking maybe PS5 could have more for BC compatibility reasons; I guess that's not needed then.

AMD changed how the ACEs work, so now you have only one that does more than the 8 in the past.

And yes they are together with the Command Processor.

RDNA has 4 ACEs I believe... need to check.

So 4 ACEs + 1 HWS as in RDNA1? I was thinking maybe PS5 could have more for BC compatibility reasons; I guess that's not needed then.

4 ACEs according to the white paper.

RDNA has 1 ACE I believe... need to check.

Great, now wait for your frames. It seems like it didn't help; all they got was a console about the same as their main competition at about the same retail price.

Xbox has RDNA2 because they waited until the end, because AMD finished this cutting-edge technology in May. That's why the Xbox developer kits were not ready until the last day, and because of that the first games were poorly optimized for Xbox Series.

A Closer Look at How Xbox Series X|S Integrates Full AMD RDNA 2 Architecture - Xbox Wire

We here at Team Xbox would like to congratulate and celebrate our amazing partners at AMD on today’s announcement of the Radeon RX 6000 Series of RDNA 2 GPUs. It was incredible to see AMD demonstrate the power and potential that the new AMD RDNA 2 architecture can deliver to gamers around the...

news.xbox.com

"In our quest to put gamers and developers first we chose to wait for the most advanced technology from our partners at AMD before finalizing our architecture."

Microsoft waited for AMD to finish RDNA2; Sony didn't.

I just looked at the pics again and that part really seems like RDNA/RDNA2 (4 ACEs).

4 ACEs according to the white paper.

Great, now wait for your frames. It seems like it didn't help; all they got was a console about the same as their main competition at about the same retail price.

Which is fine by the way.

Isn't it confirmed that the actual coil whine is coming from the power supply anyway and has nothing to do with the APU?

That's atrocious.

Edit: Sorry, that was a mosquito flying past, my bad.

Seriously, my X1 VCR can be a lot louder than that. Some GPUs can really scream; if you are going to make a video, make sure the mic picks the sound up.

Complete nonsense. Just because DirectX is the API Microsoft uses to expose their chip's ray tracing hardware to developers, that's no reason to claim PS5 by definition technically can't be full RDNA 2 on that sole basis alone.

Exactly. He literally says there that "RDNA2 includes hardware support for DIRECT X ray tracing"

So... PS5 by definition can't be this so called "full RDNA2" because PlayStation would never ever use fucking DirectX.

Wow the PS5 is not a PC. Shocker.

It's dishonest marketing from Microsoft. "Full RDNA2 must include DirectX" ... sure.

PS5 has a custom RDNA2 based GPU. Sony said that. AMD said that. Everyone knows this.

In response to Xbox Series X and PS5 being the same quality at the same price: Xbox Series has better quality, period, so it's normal for it to have a better architecture. Xbox Series has RDNA2 and PS5 doesn't.

The coil whine isn't in the APU though. Plus it's not an issue with all systems.

No idea what your point is.

Where is the proof that PS5 is lacking hardware to do Mesh Shaders, VRS and Sampler Feedback?

Complete nonsense. Just because DirectX is the API Microsoft uses to expose their chip's ray tracing hardware to developers, that's no reason to claim PS5 by definition technically can't be full RDNA 2 on that sole basis alone.

The reason it can't be considered full RDNA 2 has absolutely nothing to do with DirectX. It's because it's missing multiple major new hardware features of RDNA 2. Mesh Shaders, Variable Rate Shading (Tier 2) and Sampler Feedback.

Did Microsoft building DX12 right into Xbox One X stop PS4 Pro from being full Polaris?

Microsoft says it the way they do because their ray tracing API is the most widely known and marketed API for ray tracing, so why not take advantage of their brand's awareness for Xbox?

PS5 not being DirectX has nothing to do with why they're missing those hardware features, unless one or more of those hardware features are somehow totally Microsoft-owned intellectual property, in which case even an AMD semi-custom partner couldn't have them. I recall Microsoft continually stating that, for Xbox Series X, variable rate shading was their patented technique, but I never did get the reasoning behind them saying so. That said, this DirectX argument is a joke.

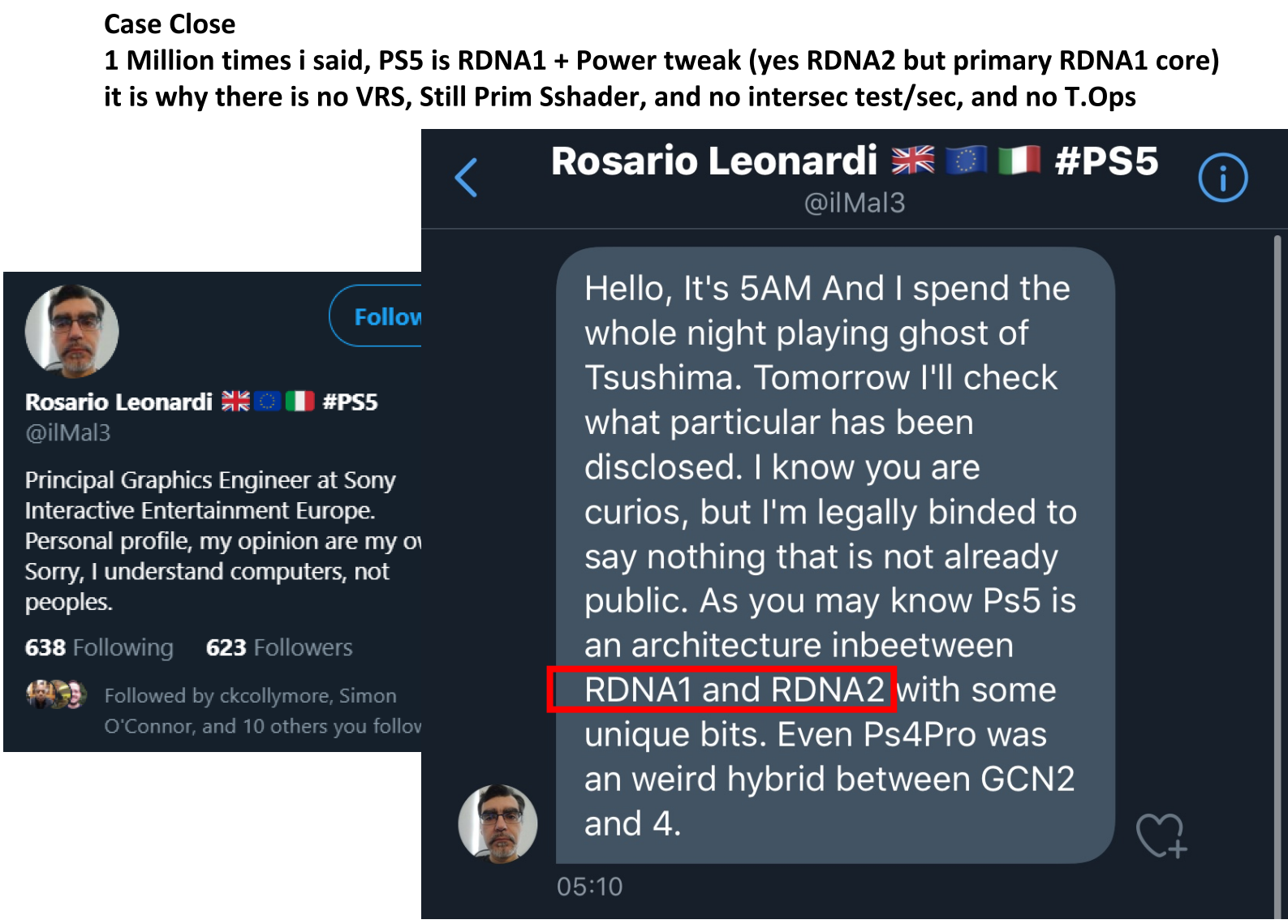

This is for you DJ12.

I highlighted the areas to see it better.

P.S. Before anyone comes at me for adding in Infinity Cache, this is all just speculation.

It might not be in the PS5 at all.

Where is the proof that PS5 is lacking hardware to do Mesh Shaders, VRS and Sampler Feedback?

Not just Sony... MS and AMD.

Next question is how much CPU and GPU time it takes away... from other rendering budgets...

Where is the proof that it has something equivalent... that's the mist Sony didn't clear up...

That’s still on Sony... they need to clear it up, otherwise we will have threads about this stuff for years.

Not just Sony... MS and AMD.

People keep saying PS5 doesn't have the hardware to do these tasks but it is still RDNA 2.

So what is the difference in silicon that makes these tasks possible on Series and RDNA 2 and not on PS5?

I mean it should be easy to explain if we know what makes it a hardware feature.

In response to Xbox Series X and PS5 being the same quality at the same price: Xbox Series has better quality, period, so it's normal for it to have a better architecture. Xbox Series has RDNA2 and PS5 doesn't.

Xbox Series has RDNA2 and PS5 doesn't

Isn't that what Geordieimp has been saying?

If infinity cache were in ANY console it would definitely be cut down. Microsoft told DF there was 76MB of SRAM in Series X. We have never identified it all, but since it hasn't actually been confirmed I'm just going to assume there is no infinity cache in Series X, but there's a lot of cache unaccounted for on the SoC.

Where is the proof that PS5 is lacking hardware to do Mesh Shaders, VRS and Sampler Feedback?

SX has 12 teraflops but we still haven't seen them.

In response to Xbox Series X and PS5 being the same quality at the same price: Xbox Series has better quality, period, so it's normal for it to have a better architecture. Xbox Series has RDNA2 and PS5 doesn't.

In response to Xbox Series X and PS5 being the same quality at the same price: Xbox Series has better quality, period, so it's normal for it to have a better architecture. Xbox Series has RDNA2 and PS5 doesn't.

If the "PS5 is not RDNA 2", neither is the Series X.

They just don't get it, it's hilarious.

So, what is PS5 then?

In response to Xbox Series X and PS5 being the same quality at the same price: Xbox Series has better quality, period, so it's normal for it to have a better architecture. Xbox Series has RDNA2 and PS5 doesn't.

You do realize....so is the Series X and S, right?