ReBurn


That's true. Hardware features can't make a game "fun", but features like mesh shaders and VRS can improve FPS, which can make a gaming experience more enjoyable.

Many SKUs being faster than my existing RTX 2080 Ti is good from my POV.

Sure, but I am talking about performance per mm² in comparison to Nvidia, not the overall cost. It's conceivable that, by having a huge cache, AMD is sacrificing performance per mm² for performance per watt, and maybe for a lower total cost overall.

I agree that AMD seems to have made the right choice, since otherwise they would have had to reduce absolute performance or increase power consumption, like Nvidia.

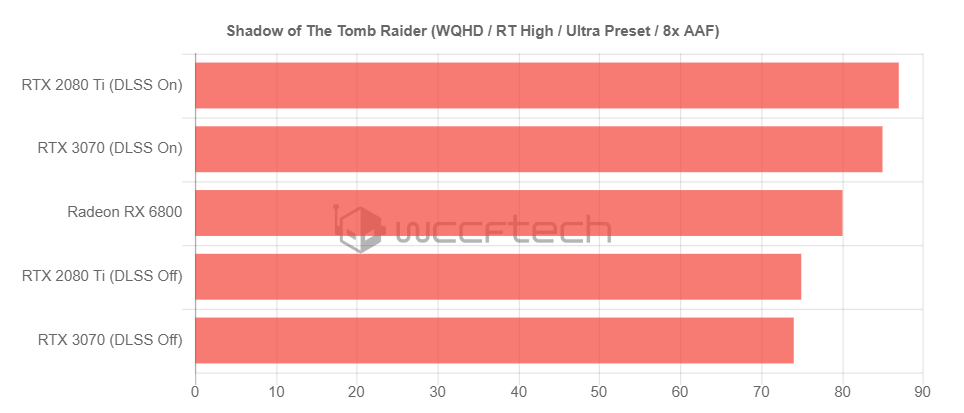

From https://wccftech.com/amd-radeon-rx-6800-rdna-2-graphics-card-ray-tracing-dxr-benchmarks-leak-out/

Without DLSS, the RX 6800 seems to be a reasonable GA102-level GPU.

I still plan to buy an RTX 3080 Ti AIB OC, and maybe an RX 6800 XT AIB OC for my second gaming PC rig. More selections = better.

So? I'm getting the video card that is available. Fuck the paper launch of Nvidia.

This is the kind of drivel hit piece I'd expect from Tom's Hardware. I'd argue RDNA2 is more efficient. A smaller bus width and still trading blows at 4K? The Infinity Cache seems to be a great innovation. How about we spin this as: Ampere isn't as efficient, because it needs more bandwidth and a bigger bus to keep up with RDNA2.
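The bus-width point can be made concrete: peak DRAM bandwidth is the bus width in bytes multiplied by the per-pin data rate. The sketch below assumes reference memory configurations that the thread itself doesn't list (RX 6800 XT: 256-bit GDDR6 at 16 Gbps; RTX 3080: 320-bit GDDR6X at 19 Gbps; RTX 3090: 384-bit GDDR6X at 19.5 Gbps). It deliberately leaves out the Infinity Cache, whose hits never reach DRAM, so it only shows the raw off-chip gap that the cache is meant to cover.

```python
def peak_dram_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak DRAM bandwidth in GB/s: bytes moved per transfer across the bus x per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed reference-board memory configs: (bus width in bits, per-pin data rate in Gbps).
# These figures are not quoted in the thread.
CARDS = {
    "RX 6800 XT": (256, 16.0),
    "RTX 3080": (320, 19.0),
    "RTX 3090": (384, 19.5),
}

if __name__ == "__main__":
    for name, (bus_bits, rate) in CARDS.items():
        # Raw DRAM figure only; on-die cache hits are not modeled here.
        print(f"{name}: {peak_dram_bandwidth_gbs(bus_bits, rate):.0f} GB/s")
```

Under these assumptions the AMD card has roughly two-thirds of the 3080's raw DRAM bandwidth, which is the gap the post credits the Infinity Cache with closing.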

I spent a lot of money on RTX 3080 and 3090 cards so I can't handle the fact these AMD cards might be better and cheaper so I'm gonna make a thread to shit on them and make me feel better.

By the way, you users who will react to this post and have reacted to my other posts with the childish "LOL" emoji seriously need to grow up.

You may think that that's the case, but it's not. I genuinely have no regrets about my purchases. If I wanted to, I could return my 3080 to Micro Center for a full refund, since the 30-day return period has not ended yet; however, I'm keeping the card because I'm genuinely satisfied with it.

I regret absolutely nothing.

55" screen. I sit two to three feet away from it.

You have the RTX cards as your desktop background, ffs; you're obviously a fanboy throwing a hissy fit and trying to shit on the competition.

Says the dude with a bunch of dolls next to his TV...

Part of the reason for the reaction to your posts is how you present them. You come off like a snob and a know-it-all. From what I’ve seen, you are also always keen to show off what you have any chance you get. If it’s that type of “show off your stuff” thread, cool, but, for example, nobody asked you for photo evidence of your 3080 and 3090 in here, yet you felt the need to post them. I’m sure there are many posters here who have a better system than you and who don’t feel the need to seek constant validation every chance they get.

Nobody likes to deal with people like that in real life, let alone online, so if you want more hospitable responses to your posts, maybe you need to address how you interact with others. A lot of your posts are tacky and cringe, bruh, just being real.

Dude, this is the worst way to handle criticism, very John Linneman-esque, and we all know what happened to him.

I don't care for your opinion or irrelevant diatribes; just ignore me if you don't like my posts and don't post in my threads. Also, I'm not your bruh.

I spent a lot of money on RTX 3080 and 3090 cards so I can't handle the fact these AMD cards might be better and cheaper so I'm gonna make a thread to shit on them and make myself feel better.

I thought GAF is saying you are responsible for yourself and companies can do no wrong, given the "why don't you just quit if you don't like crunching at CDPR" attitude of many posters here.

So what? I am still buying the 6800 XT card to shit on Nvidia's lousy practices, like charging 1,500 dollars for the 2080 Ti because there was no competition.

Question, though: would it be safe to say that AMD’s first two cards in the lineup are more “future-proof” than the 3080 and 3070 for the VRAM alone? Or are there other considerations? I know about DLSS right now, but it seems to me that for people who don’t upgrade their GPU every year, AMD is probably the wiser decision.

As mentioned, it's likely lower than 6B in reality, but still significant, and it makes this transistor count argument more of a pears-to-apples comparison.

https://www.anandtech.com/show/16202/amd-reveals-the-radeon-rx-6000-series-rdna2-starts-at-the-highend-coming-november-18th/2 said:

Doing some quick paper napkin math and assuming AMD is using standard 6T SRAM, Navi 21’s Infinity Cache would be at least 6 billion transistors in size, which is a significant number of transistors even on TSMC’s 7nm process (for reference, the entirety of Navi 10 is 10.3B transistors). In practice I suspect AMD has some optimizations in place to minimize the number of transistors used and space occupied, but regardless, the amount of die space they have to be devoting to the Infinity Cache is significant. So this is a major architectural trade-off for the company.
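The "napkin math" in the quote can be reproduced in a few lines. This sketch assumes the Infinity Cache on Navi 21 is 128 MB (a figure not stated in the thread) and uses the quote's own assumption of one 6-transistor SRAM cell per bit. It counts only the data array: tag arrays, ECC, and peripheral logic are ignored, and any density optimizations AMD may use are not modeled, so treat the result as a rough estimate rather than a real transistor budget.

```python
# Assumption: Navi 21's Infinity Cache capacity (not stated in the thread).
CACHE_MIB = 128
# Assumption from the quoted napkin math: standard 6T SRAM, one cell per bit.
TRANSISTORS_PER_BIT = 6


def sram_transistors(capacity_mib: int, transistors_per_bit: int = TRANSISTORS_PER_BIT) -> int:
    """Transistors needed for the data array alone: bits stored x transistors per bit cell."""
    bits = capacity_mib * 1024 * 1024 * 8  # MiB -> bytes -> bits
    return bits * transistors_per_bit


if __name__ == "__main__":
    total = sram_transistors(CACHE_MIB)
    print(f"{total:,} transistors (~{total / 1e9:.1f} billion)")
```

Under these assumptions the data array alone lands a little above 6 billion transistors, which is consistent with the quote's "at least 6 billion" and puts it well over half of Navi 10's 10.3B total.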

There are fanboys for everything, even cell phones, operating systems, etc. At least they aren't in here celebrating taking content away from another platform. That puts them miles above those sad sacks.

I always find it ironic how PC players act like the adults in the room, looking down their noses at “console wars”, then turn out to be exactly the same in threads like this; only instead of consoles, it’s overpriced graphics cards they fight over.

I've seen that, and that's why I was telling folks this is mighty impressive for AMD's first run with RT, yet NV has DLSS on for better frames. So I will want to see the 6800's RT performance with Super Resolution on. That's when things will get interesting, because by the looks of it, the 6800 is already above the 2080 Ti and 3070 when no upscaling technology is used. I shudder to think of AMD's RT performance with SR, since the rumor is that SR is more performant than DLSS in terms of frame rate.

Pretty lame shitpost, and it has nothing to do with the topic presented. We are all fans of something; it's not cool to shame someone's fandom. I think that in itself is "cringe".

The capeshit posters and figurines are more cringe, tbh.

I mean, you can find examples of "massive" differences like this when it comes to Ryzen vs. Intel too, with gains in favor of Intel. It's not the end of the conversation.

Hm...

I know they are cherry-picked, but that this is even possible at all says a lot.

A lot of people like me were waiting for high-end competition because of this.

or, at the very least, isn't put to use as efficiently by AMD's drivers as Ampere is by Nvidia's drivers.

And it's our job as consumers not to reward them for trying underhanded and questionable tactics because they have not had good competition for a while.

There's no need for personal grudges against a business; their purpose is to maximize profit however they can.

Best one so far. Hilarious...

The truth is, NV has done this so many times: release an expensive card, then, when the competition shows its wares, cut $500-700 off the prime card while offering more VRAM and better performance. So where was that performance all along? Why couldn't they offer their fans/customers that performance and price in the first place? Look, Nvidia just launched Ampere, and they are already readying a refresh weeks later, most probably with more RAM and at a lower price...

Yet I don't bother too much; Nvidia fans defend anything the company does to the death, this thread being an example. So whatever they end up spending, over and over again, on Jensen's hype trains, they deserve. They got robbed spending all that cash on the 2080 Ti, with a 3080 Super offering similar performance later on. Same with the 2080. The biggest hype was for the 35.58 TF 3090, and it can't beat the 23 TF 6900 XT. People will defend NV to the death, no matter how much lower the performance and how much higher the price. I think this is now a fact of life.

The only way that can change is for people to start rewarding the companies giving them the best bang for their buck, and that's clearly AMD. It's the only way these proud NV fans will realize that better performance per watt and per dollar is far more important for the industry and for gamers as a whole. Only when everybody, including their friends, goes to the red team will they feel silly and left out. Right now they are riding the brand train instead of the sensible and logical train. Yet the market is about to change drastically in the GPU space, just as the CPU space has changed. Change is inevitable...
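The TF figures in that post are peak FP32 throughput, which is usually computed as shader units × 2 FLOPs per clock (a fused multiply-add counts as two operations) × boost clock. The sketch below reproduces both numbers, assuming reference shader counts and boost clocks that the thread doesn't state: 10,496 CUDA cores at 1.695 GHz for the RTX 3090, and 5,120 stream processors at 2.25 GHz for the RX 6900 XT. It is a paper figure only; on Ampere, one of the two FP32 datapaths in each SM partition is shared with INT32 work, so peak TF does not map one-to-one onto frame rates.

```python
def peak_fp32_tflops(shader_units: int, boost_clock_ghz: float) -> float:
    """Peak FP32 TFLOPS = shader units x 2 FLOPs per clock (one FMA) x clock in GHz / 1000."""
    flops_per_clock = shader_units * 2
    return flops_per_clock * boost_clock_ghz / 1000.0


# Assumed reference-board figures: (shader units, boost clock in GHz).
# These are not quoted in the thread.
CARDS = {
    "RTX 3090": (10496, 1.695),
    "RX 6900 XT": (5120, 2.25),
}

if __name__ == "__main__":
    for name, (units, clock) in CARDS.items():
        print(f"{name}: {peak_fp32_tflops(units, clock):.2f} TFLOPS")
```

Under these assumptions the script prints about 35.58 TFLOPS for the 3090 and 23.04 TFLOPS for the 6900 XT, matching the figures in the post; any real-game comparison still has to come from benchmarks.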

It's not a paper launch. The problem is that insanely high demand has been compounded by suppressed manufacturing and shipping speeds due to the pandemic.

If this were a paper launch, then one person wouldn't have been able to acquire two cards from two different vendors via two different means: an RTX 3080 FTW3 that was bought at Micro Center and a Revel Epic-X RTX 3090 that was ordered via phone from PNY.

A picture of them both sitting on a desk, no less.