MisterXDTV

Member

So it's the upscaling of color basically?

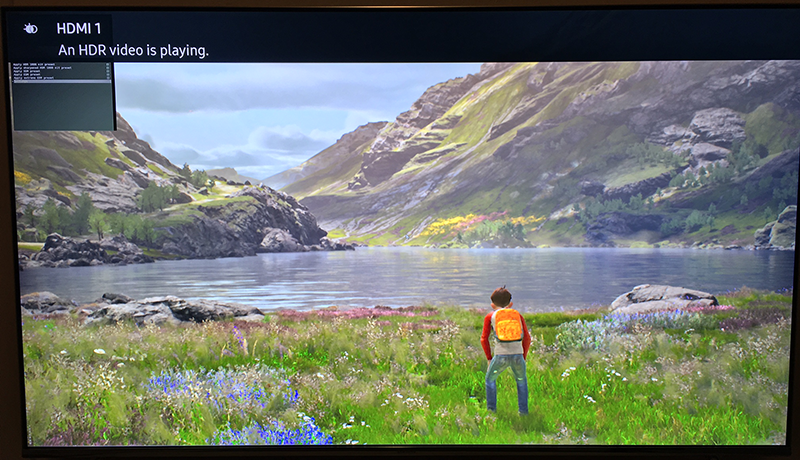

For console games, I think the 8-bit source gets resampled to 10-bit, and that's why the OG PS4 became HDR-compatible with a firmware update.
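To make the resampling idea concrete, here's a rough sketch of one common way to widen an 8-bit value to 10 bits (bit replication). I'm not claiming this is what the PS4 actually does internally; the function name `expand_8_to_10` is made up for illustration.

```python
def expand_8_to_10(value: int) -> int:
    """Widen an 8-bit code value (0-255) to 10 bits (0-1023) by bit replication."""
    if not 0 <= value <= 255:
        raise ValueError("expected an 8-bit value in 0..255")
    # Shift left by 2, then fill the two new low bits with the top two bits
    # of the original value, so 0 maps to 0 and 255 maps to 1023.
    return (value << 2) | (value >> 6)


# Count how many distinct 10-bit codes the 256 possible inputs produce.
levels = {expand_8_to_10(v) for v in range(256)}
print(expand_8_to_10(0), expand_8_to_10(128), expand_8_to_10(255), len(levels))
# prints: 0 514 1023 256
```

Note that the output still only has 256 distinct levels. Resampling fills the 10-bit container but doesn't create shades that weren't in the 8-bit source.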

Internally, the console applies the extended dynamic range (the range of light between the deepest black and the brightest white) to the 8-bit source.

I don't know about PC. I think only Alien: Isolation has true 10-bit support.