Minsc said:

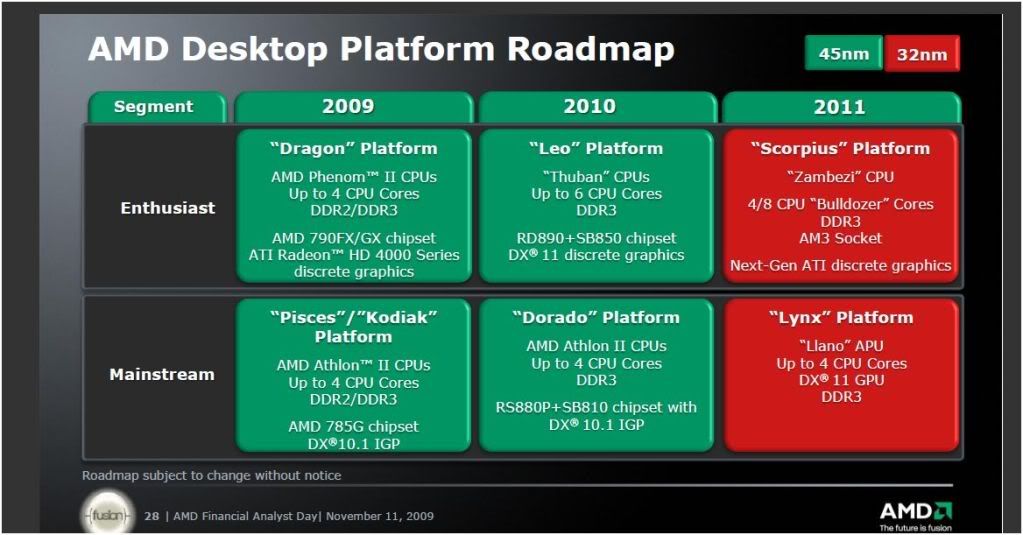

18 months is a pretty long time though. Did you mean Phenom II? Not sure what an Athlon II is. 18 months puts us around Q2 2011 (without delays); hopefully it's still up to the task of being a groundbreaking step forward for gaming. Larrabee has a new challenger though, which is good, because I'm not sure which one will make it out first.

What kind of performance is expected with it? I mean Crysis will run on mid-range laptops if you set the graphics to low. Is it going to top the 5800 series cards?

An Athlon II is a Phenom II without the L3 cache. This variant will ship with a boosted 1MB of L2 cache per core. It's a pretty capable quad-core processor, more than enough for any average home user.

The packaged GPU is a 5xxx series GPU, a 480 stream processor variant to be exact, but customised and packaged right on the CPU die. Of course this isn't going to challenge a 5870. This is AMD's low-end platform, and as such it stands as a huge upgrade in a market where Intel integrated graphics flat out won't run modern games at all, never mind at decent settings.

The point is that AMD's lowest-end desktop and notebook chips will ship with enough grunt to possibly run Crysis at high settings/720p/30fps, or somewhere close to that. That's a pretty big deal in my book, when most current low-end solutions would be lucky to pass single digits at the game's lowest settings.

This isn't something that you or I will ever buy, but it is something that your aunty or cousin might. Whereas in the past their machine would probably have been locked out of any game released in the last 3 years, if they buy a Fusion chip basically any PC game will run at playable settings. If every rig on the market is packing almost half a teraflop of computing power, it stands to reason that exploiting GPGPU becomes a whole lot more viable.
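To put the "almost half a teraflop" figure in context, here is a rough back-of-the-envelope sketch. It assumes each stream processor issues one multiply-add (2 FLOPs) per clock, which is how AMD quotes peak figures for its 5xxx parts. The GPU clock of the Fusion part isn't stated in the thread, so the sketch works backwards to the clock a 480 SP GPU would need to hit ~500 GFLOPS. The function names are just illustrative, and these are theoretical peak numbers, not real-world game performance.

```python
def peak_gflops(stream_processors: int, clock_mhz: float,
                flops_per_sp_per_clock: int = 2) -> float:
    """Theoretical peak single-precision GFLOPS.

    Assumes each stream processor completes one multiply-add
    (counted as 2 FLOPs) every clock cycle.
    """
    return stream_processors * flops_per_sp_per_clock * clock_mhz / 1000.0


def clock_for_target(stream_processors: int, target_gflops: float,
                     flops_per_sp_per_clock: int = 2) -> float:
    """Clock speed in MHz needed to reach a target peak GFLOPS figure."""
    return target_gflops * 1000.0 / (stream_processors * flops_per_sp_per_clock)


if __name__ == "__main__":
    # Reference point: Radeon HD 5870, 1600 SPs at 850 MHz
    print(peak_gflops(1600, 850))
    # Clock a 480 SP part would need for roughly half a teraflop (assumed target)
    print(round(clock_for_target(480, 500)))
```

With those assumptions the 5870 comes out at 2720 GFLOPS, and a 480 SP part would only need to run at roughly 520 MHz to land around half a teraflop, so the figure is plausible for a low-clocked integrated GPU.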

The point is that the low end is set for a huge upgrade when it comes to graphics, and when the low end rises by a significant amount, we all benefit. That a single chip with no dedicated GPU in sight should run any multiplatform title better than the consoles do is pretty good news for PC gaming as far as I'm concerned; it really lowers the barriers to entry.

Acosta said:

Good times indeed. Having such a jump at the lowest end of PC will definitely push PC gaming. I'm starting to think that in 2-3 years the PC gaming scene will have changed dramatically (for the better).

I feel good about my decision to wait a few months on the upgrade I wanted to do before summer. A 5850 and an i5 750 look like a killer combination, and I'm on the hunt for one.

BTW brain-stew, I guess there's nothing like nHancer for ATI cards, right? I'm going to play on a 720p TV and want the best IQ possible (that's why I want the extra push of a 5800 instead of going for the 5770).

There's not, but ATI's drivers themselves now offer rotated-grid supersampling on their new GPUs. From what I gather, they don't automatically adjust the LOD bias, so you'll have to change that yourself, but it's easily done. PCgameshardware.com have a bunch of screenshots up showing it off and it looks stunning; I'm pretty sure they explain how to set the LOD bias as well.
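If you're wondering what value to set, a commonly quoted rule of thumb (an assumption here, not something ATI's driver documents) is a texture LOD bias of -0.5 × log2(N) for N-sample supersampling. That works out to -log2 of the extra samples per axis, so 4x SSAA gives -1. The helper below is purely illustrative, and rotated-grid patterns don't map perfectly onto the rule, so treat the result as a starting point and check the screenshots.

```python
import math


def ssaa_lod_bias(samples: int) -> float:
    """Rule-of-thumb negative texture LOD bias for N-sample supersampling.

    Assumes N samples add sqrt(N) samples per axis, so textures can be
    sampled log2(sqrt(N)) = 0.5 * log2(N) mip levels sharper.
    """
    if samples < 1:
        raise ValueError("samples must be >= 1")
    return -0.5 * math.log2(samples)


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(f"{n}x SSAA -> LOD bias {ssaa_lod_bias(n):+.2f}")
```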