S0ULZB0URNE

Member

It's a PS4 game at its heart, which means it should scale with all types of builds and maybe some legacy (PS4-class) PC parts.

Yeah, but it won't be noticeably large.

The game on PS5 already looks outstanding and sharp on a 4K screen, regardless of which mode you pick.

It is highly optimized for the PS5, and that just won't be the case on PC.

PC is good at brute forcing such games.

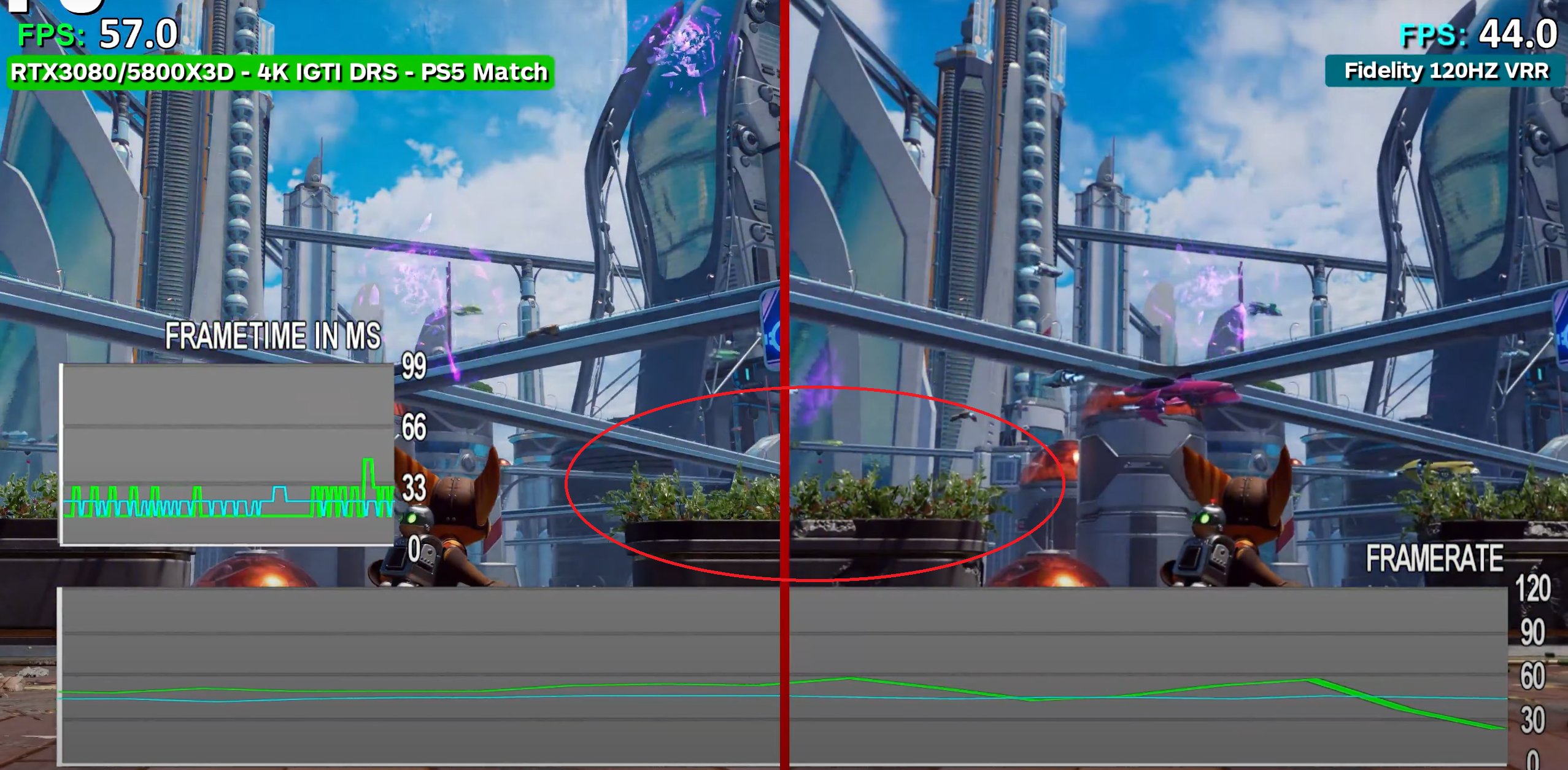

With "fake resolution and FPS".

I don't think your rig will be able to do 4K at 120 fps, but it may get close.

It might.

I recommend putting him on ignore; he's a rampant troll.

Why did you laugh at my post?

Plus, it's got the industry's fastest data-streaming I/O.

I know you said "like", but the PS5 has about 5.4x the fill rate, and in terms of TFLOPS it's literally two PS4 Pros plus a base PS4.

Which they always leave out and don't understand.
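Quick sanity check on the TFLOPS part, using the commonly quoted peak FP32 figures (PS4 1.84, PS4 Pro 4.2, PS5 10.28 TFLOPS). Those numbers are my assumption from the public spec sheets, not something posted in this thread:

```python
# Peak FP32 throughput in TFLOPS, as commonly quoted from Sony's spec sheets
# (assumed figures, not taken from the thread).
PS4 = 1.84
PS4_PRO = 4.2
PS5 = 10.28

# "Two PS4 Pros plus a base PS4"
combined = 2 * PS4_PRO + PS4

print(f"2 x PS4 Pro + PS4 = {combined:.2f} TFLOPS")
print(f"PS5               = {PS5:.2f} TFLOPS")
```

That works out to about 10.24 vs 10.28 TFLOPS, so the "two Pros plus a base PS4" comparison holds up on paper.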
